The easiest way to use Agentic RAG in any enterprise.

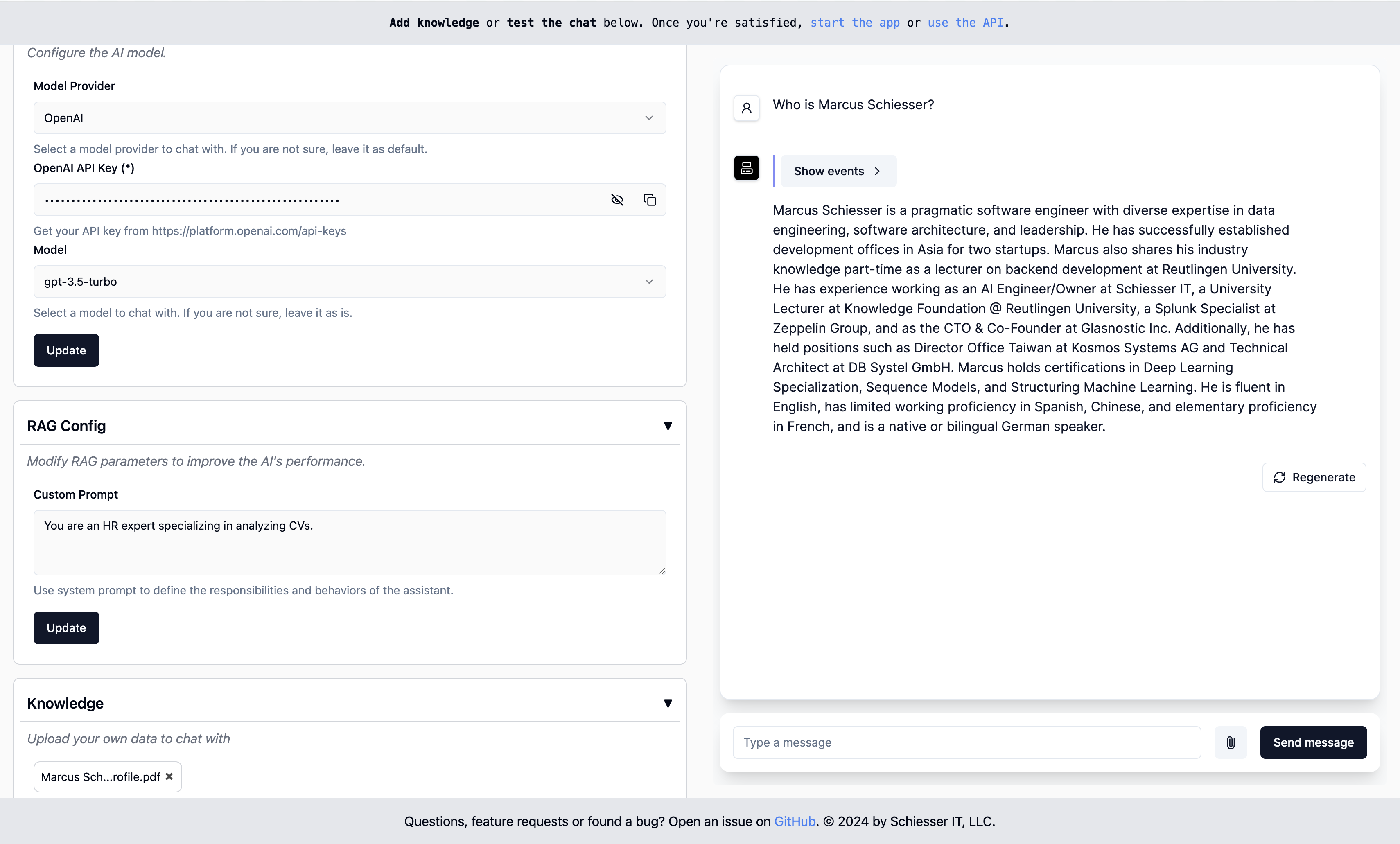

As simple to configure as OpenAI's custom GPTs, but deployable in your own cloud infrastructure using Docker. Built using LlamaIndex.

Get Started · Endpoints · Deployment · Contact

To run, start a Docker container with our image:

    docker run -p 8000:8000 ragapp/ragapp

Then, access the Admin UI at http://localhost:8000/admin to configure your RAGapp.

You can use hosted AI models from OpenAI or Gemini, and local models using Ollama.

The docker container exposes the following endpoints:

- Admin UI: http://localhost:8000/admin
- Chat UI: http://localhost:8000
- API: http://localhost:8000/docs

Note: The Chat UI and API are only functional if the RAGapp is configured.
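To quickly see which endpoints respond after starting the container, a small smoke check along these lines may help. This is a sketch, not part of RAGapp: the function names `ragapp_endpoints` and `ragapp_check` are hypothetical, and it assumes `curl` is installed and that the container is served over plain HTTP on port 8000.

```shell
# Hypothetical helper: print the three endpoint URLs for a RAGapp base URL.
# Defaults to http://localhost:8000 (an assumption matching the docker run example).
ragapp_endpoints() (
  base="${1:-http://localhost:8000}"
  base="${base%/}"   # drop a single trailing slash, if present
  printf '%s\n' "$base/admin" "$base" "$base/docs"
)

# Hypothetical helper: print the HTTP status code for each endpoint.
# curl reports 000 when no HTTP response was received (e.g. container not running).
ragapp_check() {
  ragapp_endpoints "$1" | while read -r url; do
    code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url" || true)"
    printf '%s %s\n' "$code" "$url"
  done
}
```

Calling `ragapp_check` with no argument probes the local container; pass another base URL as the first argument to probe a remote deployment.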

RAGapp doesn't come with any authentication layer by design. Just protect the /admin path in your cloud environment to secure your RAGapp.
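Once the /admin path sits behind whatever access control your cloud environment provides, it can be worth confirming from the outside that an unauthenticated request is actually rejected. The following is a minimal sketch under stated assumptions: both function names are hypothetical, `curl` must be available, and it assumes your protection layer answers unauthenticated requests with 401 or 403 (some setups redirect to a login page instead).

```shell
# Hypothetical helper: classify the HTTP status code returned for /admin.
admin_status_verdict() {
  case "$1" in
    401|403) echo "protected" ;;
    2??)     echo "EXPOSED" ;;
    30?)     echo "redirect (check that the target requires login)" ;;
    000)     echo "unreachable" ;;
    *)       echo "unknown ($1)" ;;
  esac
}

# Hypothetical helper: request <base-url>/admin without credentials
# and print the status code together with the verdict.
check_admin_protected() (
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "${1%/}/admin" || true)"
  echo "$code $(admin_status_verdict "$code")"
)
```

Run `check_admin_protected` with the public base URL of your deployment as its only argument. A verdict of `EXPOSED` means the Admin UI answered without any credentials.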

We provide a docker-compose.yml file to make deploying RAGapp with Ollama and Qdrant easy in your own infrastructure.

Using the MODEL environment variable, you can specify which model to use, e.g. llama3:

    MODEL=llama3 docker-compose up

If you don't specify the MODEL variable, the default model used is phi3, which is less capable than llama3 but faster to download.

Note: The setup container in the docker-compose.yml file will download the selected model into the ollama folder; this will take a few minutes.
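If you start RAGapp from a wrapper script, it can help to make the model choice explicit before the download begins. The sketch below only mirrors the default described above; `resolve_model` is a hypothetical helper, not something shipped with RAGapp, and the actual default is applied by the provided docker-compose.yml.

```shell
# Hypothetical helper: print the model that will be used.
# Falls back to phi3 when MODEL is unset or empty, mirroring the documented default.
resolve_model() {
  printf '%s\n' "${MODEL:-phi3}"
}
```

A wrapper could then print `Starting RAGapp with model: $(resolve_model)` before running `docker-compose up`, so it is clear which download to expect.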

Using the OLLAMA_BASE_URL environment variable, you can specify which Ollama host to use.

If you don't specify the OLLAMA_BASE_URL variable, the default points to the Ollama instance started by Docker Compose (http://ollama:11434).

If you're running a local Ollama instance, you can choose to connect it to RAGapp by setting the OLLAMA_BASE_URL variable to http://host.docker.internal:11434:

    MODEL=llama3 OLLAMA_BASE_URL=http://host.docker.internal:11434 docker-compose up

This is necessary if you're running RAGapp on macOS, as Docker for Mac does not support GPU acceleration.
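If the same start script is shared between macOS and Linux machines, the choice of Ollama host can be derived from the operating system. This is a sketch under assumptions: `pick_ollama_base_url` is a hypothetical helper, it assumes Ollama is served over plain HTTP on port 11434, and it assumes that on macOS a local Ollama instance is already running on the host.

```shell
# Hypothetical helper: choose an Ollama base URL from the OS name.
# Accepts the OS name as an optional argument; defaults to `uname -s`.
pick_ollama_base_url() (
  os="${1:-$(uname -s)}"
  case "$os" in
    # macOS: use the Ollama instance running on the host
    Darwin) echo "http://host.docker.internal:11434" ;;
    # Everything else: use the instance started by Docker Compose
    *)      echo "http://ollama:11434" ;;
  esac
)
```

The result can be passed straight through, for example `OLLAMA_BASE_URL="$(pick_ollama_base_url)" docker-compose up`.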

It's easy to deploy RAGapp in your own cloud infrastructure. Customized K8S deployment descriptors are coming soon.

For local development:

    poetry install --no-root
    make build-frontends
    make dev

Note: To check out the Admin UI during development, please go to http://localhost:3000/admin.

Questions, feature requests or found a bug? Open an issue or reach out to marcusschiesser.