This project aims to develop several image Generative Adversarial Networks (GANs) using different datasets (64px and 256px images of Pokemon), different models (DC-GAN and W-GAN), and different training processes.

Check out and try the notebook I used to train my models: here on Google Colaboratory.

In this project I used two datasets:

- A Pokemon dataset of 64px images (with data augmentation), available here.

- A Pokemon dataset of 256px images (with data augmentation), available here.

The following networks are mostly convolutional and deconvolutional (transposed convolution) networks. However, GANs rely on a delicate equilibrium between the generator and the discriminant, and they can quickly become unstable and suffer from mode collapse. Several techniques are used to mitigate this.

In both the DCGAN and WGAN models, a Mini Batch Discrimination layer is implemented in the discriminant. This layer prevents the generator from producing the same image for multiple inputs. For more information, see this link. To avoid overconfidence of the discriminant in the DCGAN model, a BCE loss with label smoothing is used; see the custom function in basic_functions.py.
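As an illustration of the Mini Batch Discrimination idea, here is a plain-Python sketch of the formulation from Salimans et al. (2016), "Improved Techniques for Training GANs": each sample's feature vector f(x_i) is projected through a tensor T, and for each of the B kernels the L1 distances to every sample of the batch are turned into similarity scores that are appended to the features. The function name `minibatch_features` and the nested-list layout `T[a][b][c]` are assumptions for illustration; in the actual discriminant, T would be a learned parameter and the computation would run on batched tensors.

```python
import math
from typing import List

Matrix = List[List[float]]


def minibatch_features(features: List[List[float]], T: List[Matrix]) -> List[List[float]]:
    """Append minibatch-discrimination features to each sample's feature vector.

    features: N feature vectors f(x_i), each of length A.
    T: A x B x C tensor stored as T[a][b][c] (learned in a real model).
    """
    A, B, C = len(T), len(T[0]), len(T[0][0])
    # M[i][b][c] = sum_a f(x_i)[a] * T[a][b][c]: one B x C matrix per sample
    M = [
        [[sum(f[a] * T[a][b][c] for a in range(A)) for c in range(C)] for b in range(B)]
        for f in features
    ]
    out = []
    for i, f in enumerate(features):
        o = []
        for b in range(B):
            # o(x_i)_b = sum_j exp(-||M[i][b] - M[j][b]||_1), j over the whole batch
            # (the paper's sum includes j = i, which contributes exp(0) = 1)
            o.append(sum(
                math.exp(-sum(abs(M[i][b][c] - M[j][b][c]) for c in range(C)))
                for j in range(len(features))
            ))
        out.append(list(f) + o)
    return out


same = minibatch_features([[1.0, 0.0], [1.0, 0.0]], [[[1.0, 1.0]], [[1.0, 1.0]]])
print(same)  # [[1.0, 0.0, 2.0], [1.0, 0.0, 2.0]]
```

If the generator collapses and outputs near-identical images, the appended similarity scores approach the batch size (2.0 for the two identical samples above), which gives the discriminant an easy signal to flag the batch as fake.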

Also to avoid overconfidence in the discriminant, one of the DCGAN trainings occasionally swaps the labels of real and fake images (see training_boosting.py).

A Deep Convolutional GAN (DC-GAN) is implemented for both 64px and 256px images in gan_64.py and gan_256.py, with the mode "dcgan". The architecture of the GAN is given by the following figures:

The generator's input is an nz-sized noise vector, which is upsampled into an image by transposed convolutions (often called deconvolutions).
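To see how an nz-sized vector grows into a 64px or 256px image, the output size of each transposed convolution can be computed with the standard formula out = (in - 1) * stride - 2 * padding + kernel (dilation 1, no output padding). The sketch below assumes the usual DCGAN layer settings (a first layer with kernel 4, stride 1, padding 0, then layers with kernel 4, stride 2, padding 1 that each double the size); the exact layers of gan_64.py and gan_256.py may differ, and the helper names are hypothetical.

```python
from typing import List


def deconv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_sizes(n_upsampling: int) -> List[int]:
    """Image sizes through an ASSUMED DCGAN-style generator."""
    sizes = [1]  # the nz-sized noise vector is seen as an nz x 1 x 1 tensor
    sizes.append(deconv_out(1, kernel=4, stride=1, padding=0))  # 1 -> 4
    for _ in range(n_upsampling):
        # kernel 4, stride 2, padding 1 doubles the size at each layer
        sizes.append(deconv_out(sizes[-1], kernel=4, stride=2, padding=1))
    return sizes


print(generator_sizes(4))  # [1, 4, 8, 16, 32, 64]
print(generator_sizes(6))  # [1, 4, 8, 16, 32, 64, 128, 256]
```

Under these assumptions, a 64px output needs four upsampling layers after the first projection and a 256px output needs six; in a typical DCGAN, the discriminant mirrors this with strided convolutions that halve the size.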

The discriminant's input is an image and its output is a single number.

The first training procedure is a classic DCGAN training, defined in training_classic.py. At each iteration, the discriminant D is fed with real images (x) and fake images (G(z)) produced by the generator G, and the losses are computed with a Binary Cross Entropy (BCE) loss function.
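A minimal sketch of these BCE losses, written in plain Python on the discriminant's output probabilities: the discriminant loss is BCE(D(x), real label) + BCE(D(G(z)), 0), and the generator uses the common non-saturating loss BCE(D(G(z)), 1). The optional `real_label` argument illustrates the label smoothing mentioned for basic_functions.py; smoothing only the real target, and any particular value such as 0.9, are assumptions, since the exact formula is not given here. The function names are hypothetical.

```python
import math
from typing import List, Tuple


def bce(preds: List[float], target: float, eps: float = 1e-7) -> float:
    """Mean binary cross entropy between probabilities `preds` and a constant target."""
    total = 0.0
    for p in preds:
        p = min(max(p, eps), 1.0 - eps)  # clamp so that log() never sees 0
        total += -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))
    return total / len(preds)


def dcgan_losses(d_real: List[float], d_fake: List[float],
                 real_label: float = 1.0) -> Tuple[float, float]:
    """Return (discriminant loss, generator loss) for a BCE GAN.

    d_real holds D(x) and d_fake holds D(G(z)), both as probabilities in [0, 1].
    real_label < 1.0 applies (assumed one-sided) label smoothing to the real target.
    """
    loss_d = bce(d_real, real_label) + bce(d_fake, 0.0)
    # non-saturating generator loss: G wants D(G(z)) to be classified as real
    loss_g = bce(d_fake, 1.0)
    return loss_d, loss_g


loss_d, loss_g = dcgan_losses([0.5, 0.5], [0.5, 0.5])  # a "confused" discriminant
print(loss_d, loss_g)  # about 1.386 (2 ln 2) and 0.693 (ln 2)
```

With a smoothed target, the loss on a real image is no longer minimized at D(x) = 1 but at D(x) = real_label, which is what discourages an overconfident discriminant.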

The discriminant and the generator are then optimized to minimize their respective losses. An Adam optimizer (lr=0.00015, beta1=0.5, beta2=0.999) has been used for both networks.
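To make the effect of these hyperparameters concrete, here is a plain-Python sketch of a single Adam update on one scalar parameter, following Kingma and Ba's algorithm with bias correction; eps = 1e-8 is an assumed default since it is not stated above, and the function name is hypothetical. With beta1 = 0.5 (instead of the usual 0.9), the momentum term forgets past gradients faster, a setting the original DCGAN paper reported as helping to stabilize training.

```python
import math
from typing import Tuple

State = Tuple[float, float, int]  # (first moment m, second moment v, step count t)


def adam_step(param: float, grad: float, state: State,
              lr: float = 0.00015, beta1: float = 0.5, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[float, State]:
    """Apply one Adam update to a scalar parameter and return the new state."""
    m, v, t = state
    t += 1
    m = beta1 * m + (1 - beta1) * grad          # moving average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # moving average of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, (m, v, t)


p, state = adam_step(0.0, grad=2.0, state=(0.0, 0.0, 0))
print(p)  # close to -0.00015: the first step has size about lr whatever the gradient scale
```

If the project uses PyTorch (which is not stated here), the same settings correspond to `torch.optim.Adam(params, lr=0.00015, betas=(0.5, 0.999))`.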

The second training procedure is a classic DCGAN training in which the loss values are monitored and influence the training, defined in training_monitoring.py. The discriminant and generator losses are defined as in the classic training. At each iteration, the discriminant is optimized only if a condition on the loss values, based on a fixed threshold, is met (see training_monitoring.py for the exact condition).

This is done to prevent the discriminant from becoming too powerful compared to the generator. An Adam optimizer (lr=0.00015, beta1=0.5, beta2=0.999) has been used for both networks.
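The gating logic can be sketched as below. The exact condition used in training_monitoring.py is not spelled out here, so the rule in this sketch (optimize the discriminant only while its loss is above the threshold, i.e. while it is not already clearly winning) is an assumption, as are the function names and the threshold value in the example; the generator is assumed to be optimized at every iteration.

```python
from typing import List


def should_update_discriminant(loss_d: float, threshold: float) -> bool:
    """ASSUMED rule: skip the discriminant step when its loss is already low,
    i.e. when it is already too strong compared to the generator."""
    return loss_d > threshold


def monitored_schedule(d_losses: List[float], threshold: float) -> List[bool]:
    """For each iteration, whether the discriminant would be optimized.
    (The generator is assumed to be optimized at every iteration.)"""
    return [should_update_discriminant(loss, threshold) for loss in d_losses]


print(monitored_schedule([1.2, 0.4, 0.25, 0.9], threshold=0.3))  # [True, True, False, True]
```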

The third training procedure is a DCGAN training defined in training_boosting.py. At each iteration, a random number k is drawn from a uniform distribution on [0, 1], and its value determines what is trained during this iteration and how:

- if $0.0001 \lt k \lt 0.001$: for the next 100 iterations (including this one), ONLY the discriminant is trained, with real images labeled as real and fake images labeled as fake. This boosts the training of the discriminant.

- if $0.001 \lt k \lt 0.93$: during this iteration, the discriminant is trained with real images labeled as real and fake images labeled as fake.

- if $0.93 \le k \le 1$: during this iteration, the discriminant is trained with real images labeled as fake and fake images labeled as real (noisy labels), in order to add noise to the training and obtain a more robust discriminant.

- if $0 \le k \lt 0.0001$: for the next 100 iterations (including this one), ONLY the generator is trained. This boosts the training of the generator.

- if $0.001 \lt k \le 1$: during this iteration, the generator is trained.

An Adam optimizer (lr=0.00015, beta1=0.5, beta2=0.999) has been used for both networks.
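The k rules above can be sketched as a small state machine that returns, for each iteration, whether to train the discriminant, whether to swap its labels, and whether to train the generator. This is a hypothetical plain-Python illustration, not the code of training_boosting.py: the class name, the injectable `draw` function and the tuple return value are assumptions. The values k = 0.0001 and k = 0.001 are not covered by the stated rules (the inequalities are strict), so this sketch trains nothing for them; with a continuous uniform draw they practically never occur.

```python
import random
from typing import Callable, Optional, Tuple

BOOST_LENGTH = 100  # a boost lasts 100 iterations, including the one that triggers it


class BoostingSchedule:
    """Hypothetical re-implementation of the k rules.

    step() returns (train_d, swap_labels, train_g) for one iteration.
    """

    def __init__(self, draw: Optional[Callable[[], float]] = None):
        # draw() must return k uniformly in [0, 1); injectable for testing
        self.draw = draw or random.random
        self.d_only_left = 0  # remaining iterations of a discriminant boost
        self.g_only_left = 0  # remaining iterations of a generator boost

    def step(self) -> Tuple[bool, bool, bool]:
        if self.d_only_left > 0:      # inside a discriminant boost: no new k
            self.d_only_left -= 1
            return True, False, False
        if self.g_only_left > 0:      # inside a generator boost: no new k
            self.g_only_left -= 1
            return False, False, True
        k = self.draw()
        if 0.0 <= k < 0.0001:         # generator boost starts now
            self.g_only_left = BOOST_LENGTH - 1
            return False, False, True
        if 0.0001 < k < 0.001:        # discriminant boost starts now
            self.d_only_left = BOOST_LENGTH - 1
            return True, False, False
        if 0.001 < k < 0.93:          # normal labels for D, then train G
            return True, False, True
        if 0.93 <= k <= 1.0:          # swapped (noisy) labels for D, then train G
            return True, True, True
        return False, False, False    # k == 0.0001 or 0.001: not covered by the rules
```

Passing a fixed sequence as `draw` makes the schedule deterministic, which is convenient for checking each branch.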

A Wasserstein GAN (W-GAN) is implemented for both 64px and 256px images in gan_64.py and gan_256.py, with the mode "wgan". The architecture of the GAN is given by the following figures:

The generator's input is an nz-sized noise vector, which is upsampled into an image by transposed convolutions (often called deconvolutions).

The discriminant's input is an image and its output is a single number.

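The training is a Wasserstein GAN training defined in training_wgan.py. At each iteration, the discriminant D is fed with real images (x) and fake images (G(z)) produced by the generator G. Unlike in the DCGAN trainings, the losses are not Binary Cross Entropy losses: they are based on an estimate of the Wasserstein distance between the distributions of real and generated images.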

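The goal of the discriminant is to maximize the distance $\mathbb{E}[D(x)] - \mathbb{E}[D(G(z))]$ between its average score on real images and its average score on fake images, while the generator tries to minimize it.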

An RMSProp optimizer (lr=5e-5) has been used for both networks for stability reasons.
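A minimal sketch of the WGAN objective, computed from the discriminant's raw scores (no sigmoid and no logarithm): the discriminant minimizes the negative gap -(mean D(x) - mean D(G(z))), which is the same as maximizing the distance, and the generator minimizes -mean D(G(z)). How the discriminant is kept Lipschitz is not stated here; the weight clipping below (c = 0.01) is the method of the original WGAN paper (Arjovsky et al., 2017), which also used RMSProp with lr = 5e-5, and is included only as an assumption. The function names are hypothetical.

```python
from typing import List, Tuple


def wgan_losses(d_real: List[float], d_fake: List[float]) -> Tuple[float, float]:
    """Return (discriminant loss, generator loss) from raw, unbounded scores."""
    mean_real = sum(d_real) / len(d_real)
    mean_fake = sum(d_fake) / len(d_fake)
    # D maximizes mean_real - mean_fake, i.e. minimizes its negative
    loss_d = -(mean_real - mean_fake)
    # G tries to raise the score of its images, i.e. minimizes -mean_fake
    loss_g = -mean_fake
    return loss_d, loss_g


def clip_weights(weights: List[float], c: float = 0.01) -> List[float]:
    """ASSUMED Lipschitz constraint: clamp every discriminant weight to [-c, c]
    after each discriminant update (weight clipping from the original WGAN paper)."""
    return [max(-c, min(c, w)) for w in weights]


print(wgan_losses([2.0, 4.0], [1.0, 1.0]))  # (-2.0, -1.0)
print(clip_weights([0.5, -0.2, 0.005]))     # [0.01, -0.01, 0.005]
```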

For more information on WGAN training, check this website.

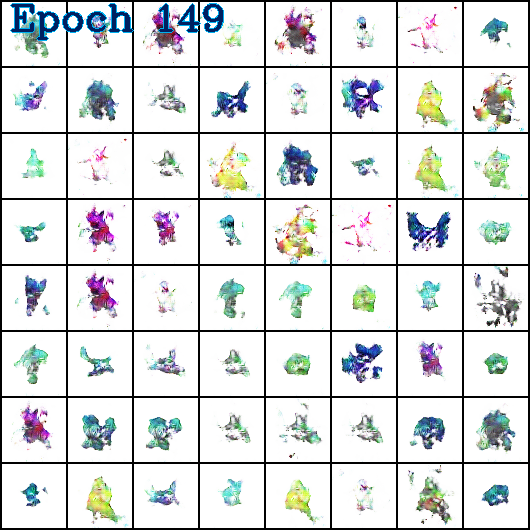

Here are some results from the networks:

Once trained, the 64px networks produce images with sharp edges that look like small, terrifying monsters.

Moreover, the Mini Batch Discrimination layer seems to work well: even images that look similar show some variation (same type of Pokemon, but still slightly different). Even with a small latent space, the networks always produce different outputs.

An improvement of these 64px networks is planned for the future.

For now, I have trained the 256px networks and obtained good results (images with sharp edges). However, I believe the hyperparameters still need tuning to get better results. These networks take a lot of time to train, so I will update this project as soon as I have time to compute better results!