Pingping Zhang✉️¹, Tianyu Yan¹, Yang Liu¹, Huchuan Lu¹

¹Dalian University of Technology, IIAU-Lab

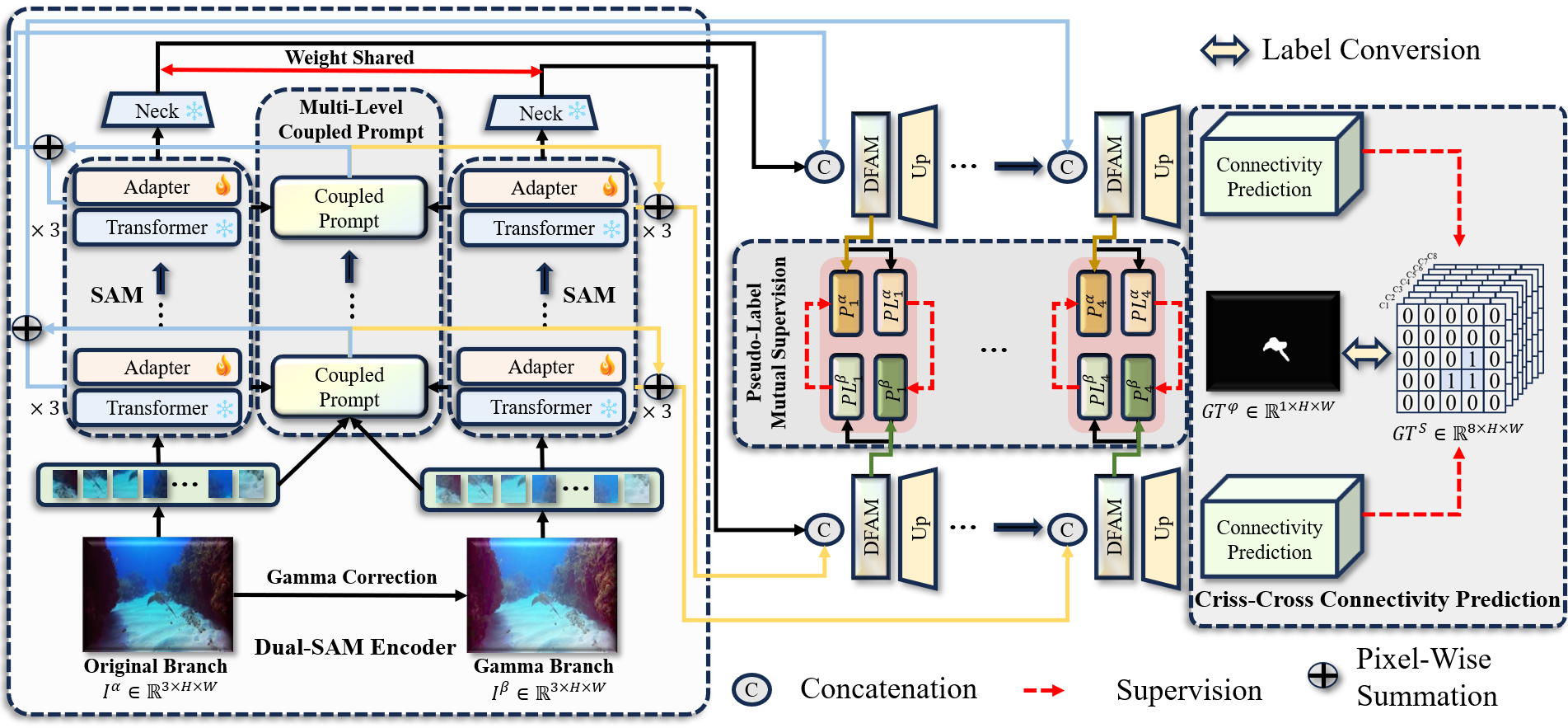

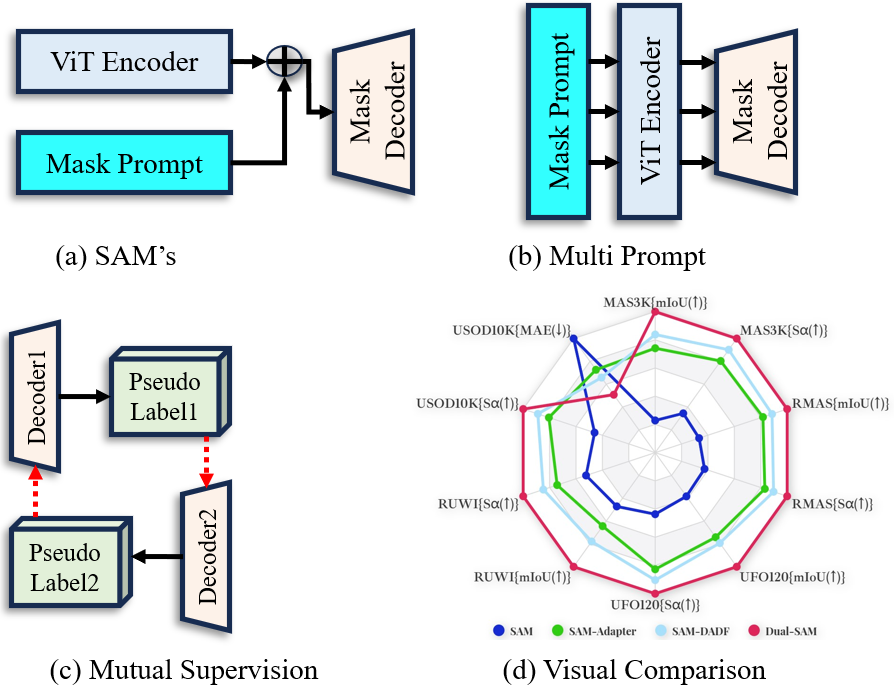

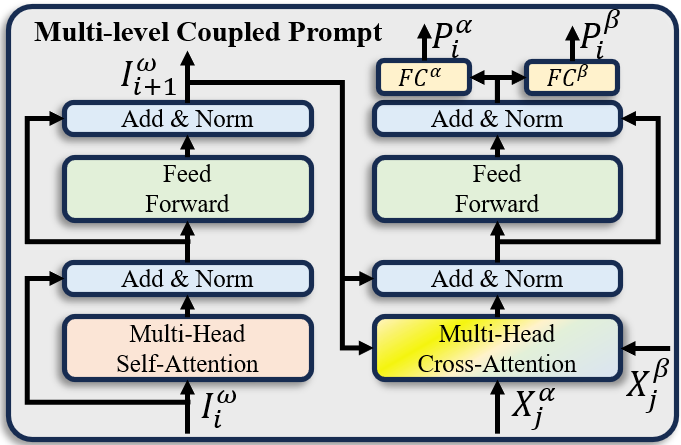

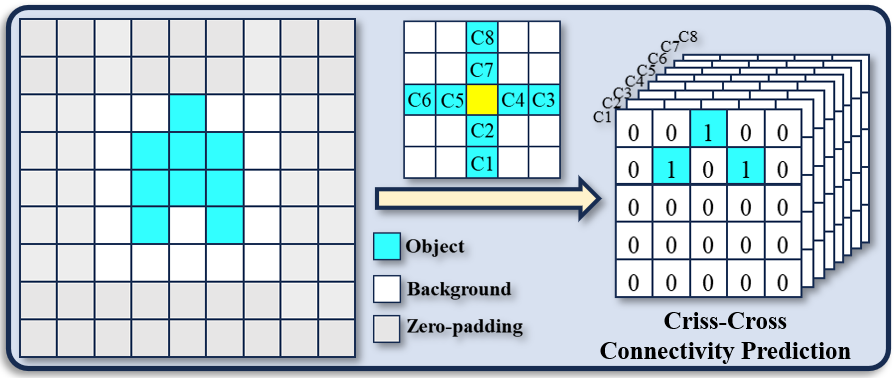

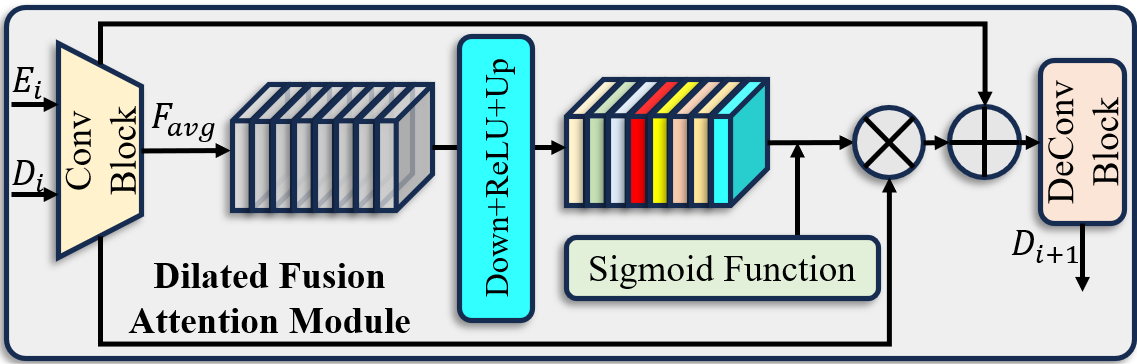

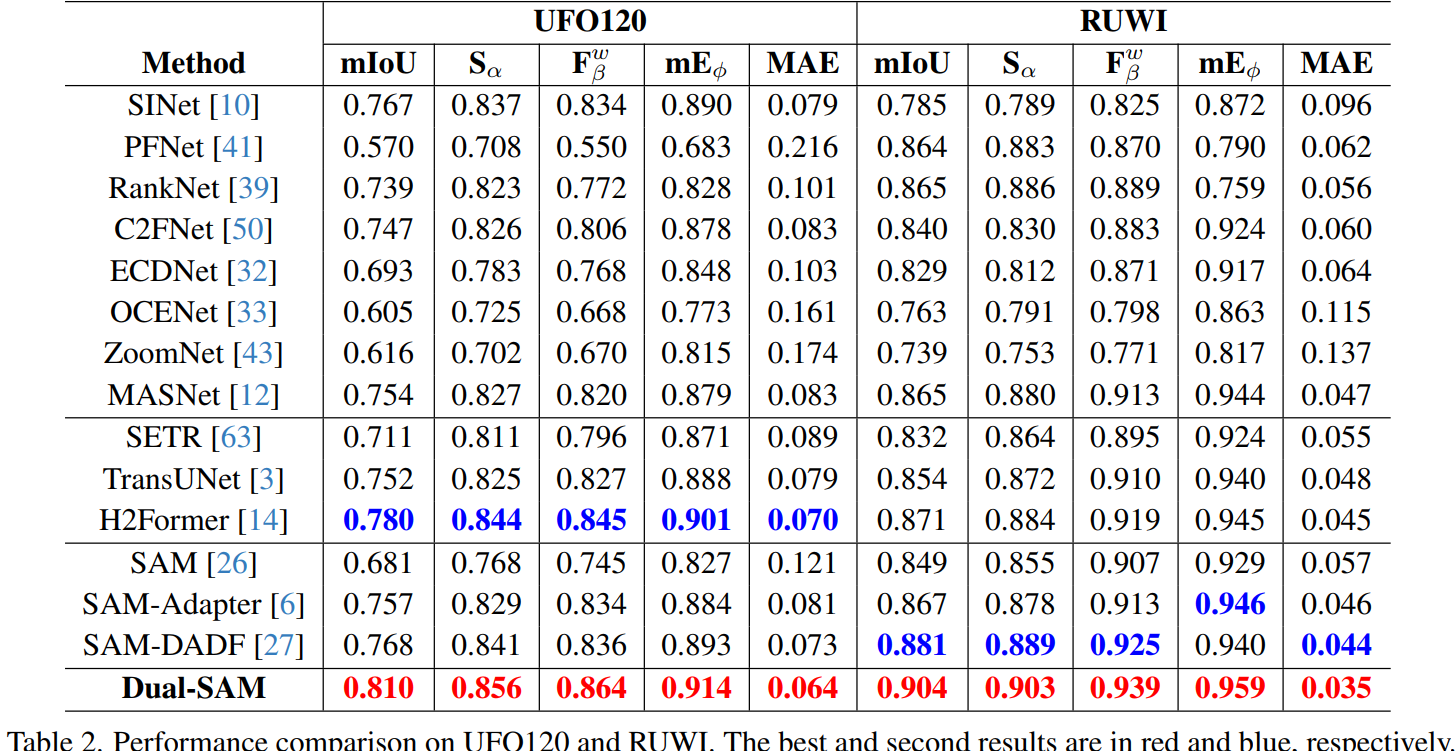

As an important pillar of underwater intelligence, Marine Animal Segmentation (MAS) involves segmenting animals within marine environments. Previous methods fall short in extracting long-range contextual features and overlook the connectivity between pixels. Recently, the Segment Anything Model (SAM) has offered a universal framework for general segmentation tasks. Unfortunately, because it is trained on natural images, SAM lacks prior knowledge of marine images. In addition, SAM's single-position prompt is insufficient for prior guidance. To address these issues, we propose a novel learning framework, named Dual-SAM, for high-performance MAS. To this end, we first introduce a dual structure with SAM's paradigm to enhance feature learning of marine images. Then, we propose a Multi-level Coupled Prompt (MCP) strategy to inject comprehensive underwater prior information, and enhance the multi-level features of SAM's encoder with adapters. Subsequently, we design a Dilated Fusion Attention Module (DFAM) to progressively integrate multi-level features from SAM's encoder. With dual decoders, the framework generates pseudo-labels and achieves mutual supervision for harmonious feature representations. Finally, instead of directly predicting the masks of marine animals, we propose a Criss-Cross Connectivity Prediction (C3P) paradigm to capture the inter-connectivity between pixels. It shows significant improvements over previous techniques. Extensive experiments show that our proposed method achieves state-of-the-art performance on five widely-used MAS datasets.
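The mutual supervision between the dual decoders can be illustrated with a small loss sketch. Everything here is an assumption for illustration, not the repository's code: the helper names (`bce`, `pseudo_label`, `dual_decoder_loss`), the 0.5 threshold, and the `weight` on the mutual term are hypothetical, and plain Python lists stand in for the tensors a real training loop would use.

```python
import math
from typing import List, Sequence


def bce(probs: Sequence[float], targets: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(probs)


def pseudo_label(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Binarize one decoder's output so it can supervise the other decoder.

    The 0.5 threshold is a hypothetical choice.
    """
    return [1 if p >= threshold else 0 for p in probs]


def dual_decoder_loss(p1: Sequence[float], p2: Sequence[float],
                      gt: Sequence[int], weight: float = 0.5) -> float:
    """Hypothetical combined loss for two decoders.

    Each decoder is supervised by the ground truth and, scaled by `weight`,
    by the thresholded prediction of the other decoder. In a real framework
    the pseudo-labels would be detached from the gradient graph.
    """
    supervised = bce(p1, gt) + bce(p2, gt)
    mutual = bce(p1, pseudo_label(p2)) + bce(p2, pseudo_label(p1))
    return supervised + weight * mutual
```

When both decoders already agree with the ground truth after thresholding, the mutual term equals the supervised term, so the loss reduces to `(1 + weight)` times the supervised part; the mutual term only changes the optimization target where the two decoders disagree.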

- **Dual-SAM** is a novel learning framework for high-performance Marine Animal Segmentation (MAS). The framework inherits the ability of SAM and adaptively incorporates prior knowledge of underwater scenarios.

- Motivation of our proposed method

- Multi-level Coupled Prompt

- Criss-Cross Connectivity Prediction

- Dilated Fusion Attention Module
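The connectivity idea behind C3P can be illustrated with a small sketch of how a binary mask might be turned into connectivity targets and back. It assumes, hypothetically, that "criss-cross" means the four horizontal and vertical neighbours of each pixel at a distance `d`, and that a connection is positive when both pixels are foreground. The function names are illustrative only; the actual C3P definition and decoding in the repository may differ.

```python
from typing import List, Tuple

Mask = List[List[int]]


def criss_cross_offsets(d: int = 1) -> List[Tuple[int, int]]:
    """Hypothetical criss-cross neighbourhood: up, down, left, right at distance d."""
    return [(-d, 0), (d, 0), (0, -d), (0, d)]


def mask_to_connectivity(mask: Mask, d: int = 1) -> List[Mask]:
    """Return one H x W map per direction.

    A cell is 1 when the pixel and its neighbour in that direction are both
    foreground; neighbours outside the image count as background.
    """
    h, w = len(mask), len(mask[0])
    maps = []
    for dy, dx in criss_cross_offsets(d):
        conn = [[0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and mask[y][x] and mask[ny][nx]:
                    conn[y][x] = 1
        maps.append(conn)
    return maps


def connectivity_to_mask(maps: List[Mask]) -> Mask:
    """Mark a pixel as foreground if any of its directional connections is on."""
    h, w = len(maps[0]), len(maps[0][0])
    return [[int(any(m[y][x] for m in maps)) for x in range(w)] for y in range(h)]
```

Because a connection needs two foreground pixels, this simple decoding drops isolated single-pixel foreground, which is one reason connectivity-based methods usually combine several directions or distances rather than relying on one.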

We rely on five public datasets and five evaluation metrics to thoroughly validate our model’s performance.
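The five metrics are not listed here. As an illustration only, the sketch below computes two measures that are commonly reported for segmentation tasks like this one, mean absolute error (MAE) and intersection over union (IoU); whether and how `test_score.py` computes them is an assumption, and the helper names are hypothetical.

```python
from typing import List

Mask = List[List[float]]


def mae(pred: Mask, gt: Mask) -> float:
    """Mean absolute error between a prediction in [0, 1] and a binary ground truth."""
    h, w = len(gt), len(gt[0])
    return sum(abs(pred[y][x] - gt[y][x]) for y in range(h) for x in range(w)) / (h * w)


def iou(pred_bin: Mask, gt: Mask) -> float:
    """Intersection over union of two binary masks (1.0 when both are empty)."""
    inter = union = 0
    for row_p, row_g in zip(pred_bin, gt):
        for p, g in zip(row_p, row_g):
            inter += int(bool(p) and bool(g))
            union += int(bool(p) or bool(g))
    return 1.0 if union == 0 else inter / union
```

A dataset-level score such as mean IoU would average `iou` over all test images; MAE is usually computed on the soft prediction, while IoU needs a thresholded mask.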

Step 1: Clone the Dual_SAM repository

To get started, first clone the Dual_SAM repository and navigate to the project directory:

```bash
git clone https://github.com/Drchip61/Dual_SAM.git
cd Dual_SAM
```

Step 2: Environment setup

Dual_SAM recommends setting up a conda environment and installing dependencies via pip. Use the following commands to set up your environment:

```bash
conda create -n Dual_SAM
conda activate Dual_SAM
pip install -r requirements.txt
```

Please put the pretrained SAM model in the Dual_SAM directory.

Training

```bash
# Change the hyperparameters in train_s.py, then run:
python train_s.py
```

Testing

```bash
# Change the hyperparameters in test_y.py, then run:
python test_y.py

# First, threshold the predicted masks
python bimap.py
```
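The thresholding step turns soft predictions into binary masks before scoring. The exact behaviour of `bimap.py` is not documented here, so the sketch below is only an assumption: it treats a prediction as an 8-bit grayscale image stored as nested lists and uses a hypothetical cut-off of 128. A real script would read and write the images with an image library.

```python
from typing import List


def binarize(gray: List[List[int]], threshold: int = 128) -> List[List[int]]:
    """Map an 8-bit grayscale prediction (0-255) to a 0/255 binary mask.

    The default threshold of 128 is a hypothetical choice; check bimap.py
    for the value it actually uses.
    """
    return [[255 if v >= threshold else 0 for v in row] for row in gray]
```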

```bash
# Then evaluate the predicted masks
python test_score.py
```

Citation

```bibtex
@inproceedings{anonymous2024fantastic,
  title={Fantastic Animals and Where to Find Them: Segment Any Marine Animal with Dual {SAM}},
  author={Pingping Zhang and Tianyu Yan and Yang Liu and Huchuan Lu},
  booktitle={Conference on Computer Vision and Pattern Recognition 2024},
  year={2024}
}
```