Lightweight log/event processor.

Goals:

- fast

- easy to deploy

- simple configuration

Concept:

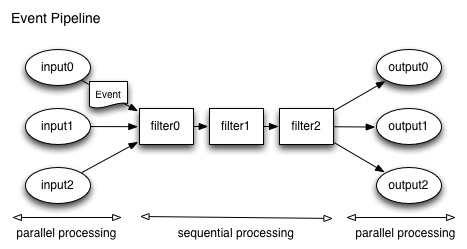

The underlying idea is simple and based on pipes and filters: an event is registered by an input, passed through a chain of filters, and finally published by an output.
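The input, filter, output flow can be illustrated with a few goroutines and channels. This is only a minimal sketch of the pipes-and-filters idea, not translog's actual implementation: the `Event` and `Filter` types, `runPipeline`, `process` and the two toy filters are all hypothetical names chosen for this example.

```go
package main

import (
	"fmt"
	"strings"
)

// Event is a hypothetical stand-in for translog's internal event type.
type Event struct {
	Raw    string
	Fields map[string]string
}

// Filter inspects or modifies an event; returning false drops it.
type Filter func(ev *Event) bool

// runPipeline plays the role of the filter chain: it reads raw messages
// from in, applies the filters in order and forwards surviving events.
func runPipeline(in <-chan string, filters []Filter) <-chan *Event {
	out := make(chan *Event)
	go func() {
		defer close(out)
		for raw := range in {
			ev := &Event{Raw: raw, Fields: map[string]string{}}
			keep := true
			for _, f := range filters {
				if keep = f(ev); !keep {
					break
				}
			}
			if keep {
				out <- ev
			}
		}
	}()
	return out
}

// process feeds lines into the pipeline (the "input") and collects the
// results (the "output") so the flow can be observed end to end.
func process(lines []string, filters []Filter) []*Event {
	in := make(chan string)
	go func() {
		defer close(in)
		for _, l := range lines {
			in <- l
		}
	}()
	var events []*Event
	for ev := range runPipeline(in, filters) {
		events = append(events, ev)
	}
	return events
}

// dropComments is a toy filter that drops messages starting with '#'.
func dropComments(ev *Event) bool { return !strings.HasPrefix(ev.Raw, "#") }

// tagLength is a toy filter that records the message length as a field.
func tagLength(ev *Event) bool {
	ev.Fields["length"] = fmt.Sprint(len(ev.Raw))
	return true
}

func main() {
	filters := []Filter{dropComments, tagLength}
	for _, ev := range process([]string{"foo=bar", "# ignored", "hello"}, filters) {
		fmt.Printf("%q %v\n", ev.Raw, ev.Fields)
	}
}
```

Each stage only knows about the channel it reads from and the one it writes to, which is what keeps the stages independent of each other.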

[shell1] $ wget http://dirtyhack.net/d/translog/latest/translog.linux-x86_64 -O translog

[shell1] $ chmod +x translog

[shell1] $ echo "

[input.File]

source=file0

[filter.KeyValueExtractor]

[output.Stdout]

" > translog.conf

[shell1] $ chmod a+rx translog

[shell1] $ ./translog -config translog.conf

[shell2] $ echo "foo=bar baz baz baz" >> file0

[shell1]

2013/09/27 18:01:54 [inputProcessor] register *translog.FileReaderPlugin

2013/09/27 18:01:54 [filterChain] register *translog.KeyValueExtractor

2013/09/27 18:01:54 [outputProcessor] register *translog.StdoutWriterPlugin

2013/09/27 18:01:54 [EventPipeline] 0 msg, 0 msg/sec, 0 total, 5 goroutines

2013/09/27 18:02:04 [EventPipeline] 0 msg, 0 msg/sec, 0 total, 6 goroutines

<#Event> Host: bender.local

Source: file://file0

InitTime: 2013-09-27 18:02:10.40223812 +0200 CEST

Time: 2013-09-27 18:02:10.402238172 +0200 CEST

Raw:

foo=bar baz baz baz

Fields:

[foo] bar

2013/09/27 18:02:14 [EventPipeline] 1 msg, 0 msg/sec, 1 total, 6 goroutines

CTRL-C

$ git clone https://github.com/invadersmustdie/translog.git

$ cd translog

$ rake prep

$ rake

$ ./bin/translog -h

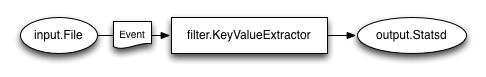

[input.File]

source=/var/log/nginx/access.log

[filter.KeyValueExtractor]

[output.Statsd]

host=metrics.local

port=8125

proto=udp

field.0.raw=test.nginx.runtime:%{runtime}|ms

field.1.raw=test.nginx.response.%{RC}:1|c

Q: Why not use logstash?

A: First of all, logstash is awesome. It's the tool of choice if you want to do advanced log/event processing. Translog does not intend to replace logstash at all. It simply offers a lightweight alternative for really simple log processing.

Q: How about more plugins for translog?

A: As translog tries to keep the number of dependencies down (to zero) I'm really careful about adding new plugins. If you need to work with redis, elasticsearch, ... use logstash.

Q: What throughput can it handle?

A: There is no real answer to this question because it depends on the filters and configuration given. In general you will see a drop in throughput when using complex regex patterns.

On my local box (MBP2012 2.7GHz 8GB SSD) (-cpus=1) it achieved the following numbers:

- 1x FileReaderPlugin

[input.File]

source=tmp/source.file0

-> 52540 msg/sec

- 1x FileReaderPlugin + 1x KeyValueExtractor

[input.File]

source=tmp/source.file0

[filter.KeyValueExtractor]

-> 5168 msg/sec

- 1x FileReaderPlugin + 1x KeyValueExtractor + 1x FieldExtractor

[input.File]

source=tmp/source.file0

[filter.KeyValueExtractor]

[filter.FieldExtractor]

field.visitorId=visitorId=([a-zA-Z0-9]+);

field.varnish_director=backend="([A-Z]+)_

field.varnish_cluster=backend="[A-Z]+_([A-Z]+)_

-> 4926 msg/sec

Reads raw messages from a TCP socket.

Example

[input.Tcp]

port=8888

Reads messages from a file.

Example

[input.File]

source=tmp/source.file0

Reads messages from a named pipe.

Example

[input.NamedPipe]

source=tmp/source.pipe0

Extracts key/value pairs from raw message.

Example

[filter.KeyValueExtractor]

# no configuration
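To illustrate what key/value extraction means for a message like the one in the quickstart, here is a minimal sketch. It assumes that tokens are separated by whitespace and that only tokens of the form `key=value` become fields; `extractKeyValues` is a hypothetical helper, and translog's real parsing rules (quoting, separators) may differ.

```go
package main

import (
	"fmt"
	"strings"
)

// extractKeyValues is a hypothetical helper, not translog's actual code.
// It splits the raw message on whitespace and treats every token of the
// form key=value as a field; tokens without '=' are ignored.
func extractKeyValues(raw string) map[string]string {
	fields := make(map[string]string)
	for _, token := range strings.Fields(raw) {
		i := strings.IndexByte(token, '=')
		if i <= 0 {
			continue // no '=' in the token, or the key is empty
		}
		fields[token[:i]] = token[i+1:]
	}
	return fields
}

func main() {
	fmt.Println(extractKeyValues("foo=bar baz baz baz"))
}
```

For the quickstart message this yields the single field `foo` with value `bar`, which is consistent with the `Fields` section of the event shown earlier.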

Extracts a value from the raw message and adds it to the event as a field. The text captured by the first group (parentheses) of the regex pattern is used as the field value.

Example

[filter.FieldExtractor]

debug=true

field.visitorId=visitorId=([a-zA-Z0-9]+);
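The first-group rule can be tried out with Go's standard `regexp` package. The sketch below uses the `visitorId` pattern from the example above; `extractField` and the sample message are hypothetical and only illustrate the capture-group behaviour, not translog's internals.

```go
package main

import (
	"fmt"
	"regexp"
)

// extractField is a hypothetical helper: it returns the text captured by
// the first group of pattern, and false if the pattern is invalid, does
// not match, or contains no group.
func extractField(pattern, raw string) (string, bool) {
	re, err := regexp.Compile(pattern)
	if err != nil {
		return "", false
	}
	m := re.FindStringSubmatch(raw)
	if len(m) < 2 {
		return "", false // no match, or the pattern has no group
	}
	return m[1], true
}

func main() {
	raw := "GET /index.html visitorId=abc123; path=/" // made-up sample message
	if v, ok := extractField(`visitorId=([a-zA-Z0-9]+);`, raw); ok {
		fmt.Println("visitorId =", v)
	}
}
```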

Drops event by matching field or raw message.

Example

[filter.DropEventFilter]

debug=true

field.direction=c

msg.match=^foo

msg.match also supports inverted behaviour: putting a '!' in front of the pattern turns it into an allow pattern, so events that match are kept and all others are dropped.

Example

[filter.DropEventFilter]

msg.match=!^foo
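The drop decision for `msg.match`, including the inverted form, can be sketched as follows. This assumes the semantics described above (a plain pattern drops matching messages, a `!` pattern drops non-matching ones); `shouldDrop` is a hypothetical name and not part of translog.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// shouldDrop is a hypothetical helper modelling msg.match.
// A plain rule drops messages that match the pattern; a rule with a
// leading '!' drops messages that do NOT match it.
func shouldDrop(rule, raw string) (bool, error) {
	inverted := strings.HasPrefix(rule, "!")
	re, err := regexp.Compile(strings.TrimPrefix(rule, "!"))
	if err != nil {
		return false, err
	}
	matched := re.MatchString(raw)
	// Drop when exactly one of "matched" and "inverted" is true.
	return matched != inverted, nil
}

func main() {
	for _, rule := range []string{"^foo", "!^foo"} {
		for _, raw := range []string{"foobar", "barfoo"} {
			drop, _ := shouldDrop(rule, raw)
			fmt.Printf("rule=%q raw=%q drop=%v\n", rule, raw, drop)
		}
	}
}
```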

Modifies fields for event.

Currently supports:

- removing fields by name or pattern

- substituting patterns in raw message

Example: modifying fields

[filter.ModifyEventFilter]

debug=true

field.remove.list=cookie,set-cookie

field.remove.match=^cache

msg.replace.pattern=foo

msg.replace.substitute=bar
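The effect of `field.remove.list` and `field.remove.match` can be pictured with the sketch below. It assumes that the list is comma-separated and that the pattern is matched against field names; both are assumptions made for this example, and `removeFields` is a hypothetical helper rather than translog code.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// removeFields is a hypothetical helper. It deletes every field named in
// the comma-separated list and every field whose NAME matches pattern
// (an empty pattern disables pattern-based removal).
func removeFields(fields map[string]string, list, pattern string) error {
	for _, name := range strings.Split(list, ",") {
		delete(fields, strings.TrimSpace(name))
	}
	if pattern == "" {
		return nil
	}
	re, err := regexp.Compile(pattern)
	if err != nil {
		return err
	}
	for name := range fields {
		if re.MatchString(name) {
			delete(fields, name) // deleting during range is safe in Go
		}
	}
	return nil
}

func main() {
	// Made-up event fields for illustration.
	fields := map[string]string{
		"cookie":        "a=1",
		"set-cookie":    "b=2",
		"cache-control": "no-cache",
		"host":          "example.org",
	}
	if err := removeFields(fields, "cookie,set-cookie", "^cache"); err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(fields)
}
```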

Example: modifying raw message

[filter.ModifyEventFilter]

debug=true

msg.replace.pattern=foo

msg.replace.substitute=bar

Example: modifying raw message with backref

Inside substitute, $ signs are interpreted as in Expand from Go's regexp package, so for instance $1 represents the text of the first submatch.

[filter.ModifyEventFilter]

debug=true

msg.replace.pattern=foo=([a-z]+)

msg.replace.substitute=bar=${1}

before: "[2013/10/18 12:01:02] Sed non pharetra leo. Vestibulum aliquet porttitor foo=baz xx"

after: "[2013/10/18 12:01:02] Sed non pharetra leo. Vestibulum aliquet porttitor bar=baz xx"
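The before/after pair can be reproduced with Go's standard `regexp` package, whose `ReplaceAllString` uses the same `$` expansion rules. This is a small sketch, assuming the filter behaves like a plain `ReplaceAllString` call; `replaceInMessage` is a hypothetical wrapper, not translog's code.

```go
package main

import (
	"fmt"
	"regexp"
)

// replaceInMessage is a hypothetical wrapper mirroring the two settings
// msg.replace.pattern and msg.replace.substitute.
func replaceInMessage(pattern, substitute, raw string) string {
	return regexp.MustCompile(pattern).ReplaceAllString(raw, substitute)
}

func main() {
	before := "[2013/10/18 12:01:02] Sed non pharetra leo. Vestibulum aliquet porttitor foo=baz xx"
	// ${1} refers to the first submatch, here the value after "foo=".
	fmt.Println(replaceInMessage(`foo=([a-z]+)`, "bar=${1}", before))
}
```

Writing `${1}` instead of `$1` avoids ambiguity when the reference is followed by letters, digits or underscores: Go would read `$1x` as a reference to a group named `1x`.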

Writes internal structure of message to stdout.

Example

[output.Stdout]

# no configuration

Sends message in graylog2 format to given endpoint.

Gelf format: https://github.com/Graylog2/graylog2-docs/wiki/GELF

Example

[output.Gelf]

debug=true

host=localhost

port=12312

proto=tcp

Writes messages into named pipe (fifo).

Example

[output.NamedPipe]

debug=true

filename=/var/run/out.fifo

Sends metrics to statsd.

Example

[output.Statsd]

debug=true

host=foo.local

port=8125

proto=udp

field.0.raw=test.nginx.runtime:%{runtime}|ms

field.1.raw=test.nginx.response.%{RC}:1|c

field.2.raw=test.nginx.%{foo}.%{bar}:1|c
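The `%{name}` placeholders in the `field.N.raw` templates refer to event fields. How such a template might be expanded into a statsd line is sketched below; `expandTemplate` is a hypothetical helper, and reporting a template as incomplete when a field is missing is an assumption of this example, not documented translog behaviour.

```go
package main

import (
	"fmt"
	"regexp"
)

// placeholder matches %{name} references inside a template.
var placeholder = regexp.MustCompile(`%\{([^}]+)\}`)

// expandTemplate is a hypothetical helper. It replaces every %{name} with
// the value of the event field of that name. The boolean is false if at
// least one referenced field is missing (assumed behaviour).
func expandTemplate(tmpl string, fields map[string]string) (string, bool) {
	complete := true
	out := placeholder.ReplaceAllStringFunc(tmpl, func(m string) string {
		name := m[2 : len(m)-1] // strip the leading "%{" and trailing "}"
		v, ok := fields[name]
		if !ok {
			complete = false
			return m // leave unknown placeholders untouched
		}
		return v
	})
	return out, complete
}

func main() {
	// Made-up event fields for illustration.
	fields := map[string]string{"runtime": "12", "RC": "200"}
	for _, tmpl := range []string{
		"test.nginx.runtime:%{runtime}|ms",
		"test.nginx.response.%{RC}:1|c",
	} {
		if line, ok := expandTemplate(tmpl, fields); ok {
			fmt.Println(line)
		}
	}
}
```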

Writes raw message to network socket.

Example

[output.NetworkSocket]

debug=true

host=localhost

port=9001

proto=tcp