This is a manual on how to use the Microsoft Phi-3 family.

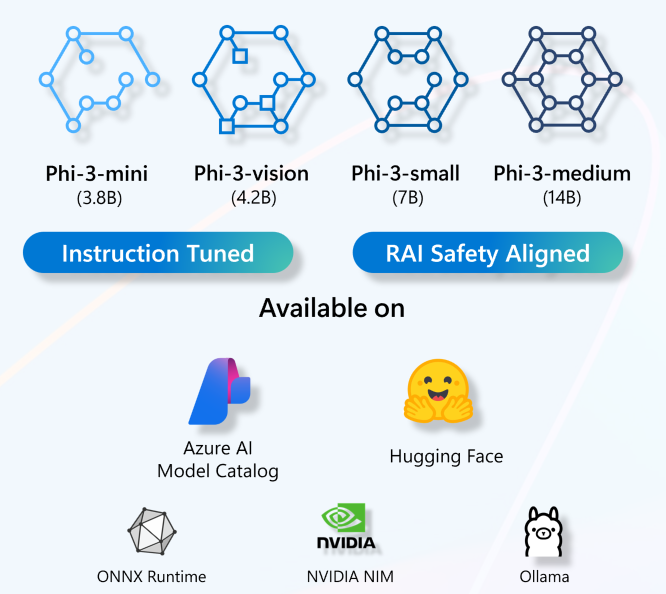

Phi-3 is a family of open AI models developed by Microsoft. Phi-3 models are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks.

Phi-3-mini, a 3.8B-parameter language model, is available on Microsoft Azure AI Studio, Hugging Face, and Ollama. Phi-3 models significantly outperform language models of the same and larger sizes on key benchmarks (see the benchmark numbers below; higher is better). Phi-3-mini does better than models twice its size, and Phi-3-small and Phi-3-medium outperform much larger models, including GPT-3.5T.

All reported numbers are produced with the same pipeline to ensure that the numbers are comparable. As a result, these numbers may differ from other published numbers due to slight differences in the evaluation methodology. More details on benchmarks are provided in our technical paper.

Phi-3-small, with only 7B parameters, beats GPT-3.5T across a variety of language, reasoning, coding, and math benchmarks.

Phi-3-medium, with 14B parameters, continues the trend and outperforms Gemini 1.0 Pro.

Phi-3-vision, with just 4.2B parameters, continues that trend and outperforms larger models such as Claude-3 Haiku and Gemini 1.0 Pro V across general visual reasoning, OCR, and table and chart understanding tasks.

Note: Phi-3 models do not perform as well on factual knowledge benchmarks (such as TriviaQA) because their smaller size leaves less capacity to retain facts.

We are introducing Phi Silica, which is built from the Phi series of models and is designed specifically for the NPUs in Copilot+ PCs. Windows is the first platform to have a state-of-the-art small language model (SLM) custom built for the NPU and shipping in-box. The Phi Silica API, along with the OCR, Studio Effects, Live Captions, and Recall User Activity APIs, will be available in the Windows Copilot Library in June. More APIs, such as Vector Embedding, RAG API, and Text Summarization, will come later.

You can learn how to use Microsoft Phi-3 and how to build end-to-end (E2E) solutions on different hardware devices. To experience Phi-3 for yourself, start by playing with the model and customizing it for your scenarios using Azure AI Studio and the Azure AI Model Catalog.

Playground: each model has a dedicated playground for testing it, the Azure AI Playground.

You can also find the model on Hugging Face.

Playground: Hugging Chat playground.
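For readers who prefer code to a playground, the following is a minimal sketch of querying Phi-3-mini from Hugging Face with the `transformers` library. It is an illustration, not part of the cookbook's own samples: the model ID `microsoft/Phi-3-mini-4k-instruct`, the helper names `build_messages` and `ask_phi3`, and the generation settings are assumptions, and it presumes a recent `transformers` release with PyTorch installed and enough memory to hold a 3.8B model.

```python
from typing import Dict, List

# Assumption: the 4k-context instruct variant on the Hugging Face Hub.
# Swap in another Phi-3 model ID if you need a different variant.
MODEL_ID = "microsoft/Phi-3-mini-4k-instruct"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a question in the role/content format that chat templates expect."""
    return [{"role": "user", "content": question}]


def ask_phi3(question: str, max_new_tokens: int = 128) -> str:
    """Load the model (downloaded on first use) and generate one reply."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # The tokenizer's chat template inserts the model's special chat tokens.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated ones.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


if __name__ == "__main__":
    print(ask_phi3("Explain what a small language model is in one sentence."))
```

Running the script downloads several gigabytes of weights on first use. The hosted playgrounds remain the quicker way to try the model without any local setup.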

This cookbook includes:

- Downloading & Creating Sample Data Set(✅)

- Fine-tuning Scenarios(✅)

- Fine-tuning vs RAG(✅)

- Fine-tuning: Let Phi-3 become an industry expert(✅)

- Fine-tuning Phi-3 with AI Toolkit for VS Code(✅)

- Fine-tuning Phi-3 with Azure Machine Learning Service(✅)

- Fine-tuning Phi-3 with LoRA(✅)

- Fine-tuning Phi-3 with QLoRA(✅)

- Fine-tuning Phi-3 with Azure AI Studio(✅)

- Fine-tuning Phi-3 with Azure ML CLI/SDK(✅)

- Fine-tuning with Microsoft Olive(✅)

- Fine-tuning Phi-3-vision with Weights & Biases(✅)

- Fine-tuning Phi-3 with Apple MLX Framework(✅)

- Introduction to End to End Samples(✅)

- Prepare your industry data(✅)

- Use Microsoft Olive to architect your projects(✅)

- Inference with your fine-tuned ONNX Runtime model(✅)

- Multi Model - Interactive Phi-3-mini and OpenAI Whisper(✅)

- MLflow - Building a wrapper and using Phi-3 with MLflow(✅)

- WinUI3 App with Phi-3 mini-4k-instruct-onnx(✅)

- WinUI3 Multi Model AI Powered Notes App Sample(✅)

Labs and workshop samples for Phi-3:

- C# .NET Labs(✅)

- Build your own Visual Studio Code GitHub Copilot Chat with Microsoft Phi-3 Family(✅)

- Phi-3 ONNX Tutorial(✅)

- Phi-3-vision ONNX Tutorial(✅)

- Run the Phi-3 models with the ONNX Runtime generate() API(✅)

- Phi-3 ONNX Multi Model LLM Chat UI (a chat demo)(✅)

- C# Hello Phi-3 ONNX example(✅)

- C# API Phi-3 ONNX example to support Phi-3-vision(✅)

- Run C# Phi-3 samples in a Codespace(✅)

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.