Self-Referencing Embedded Strings (SELFIES): A 100% robust molecular string representation

Mario Krenn, Florian Haese, AkshatKumar Nigam, Pascal Friederich, Alan Aspuru-Guzik

Machine Learning: Science and Technology 1, 045024 (2020); extensive blog post, January 2021.

Talk on YouTube about SELFIES.

Blog post explaining SELFIES in Japanese

Major contributors of v1.0.n: Alston Lo and Seyone Chithrananda

Main developer of v2.0.0: Alston Lo

Chemistry Advisor: Robert Pollice

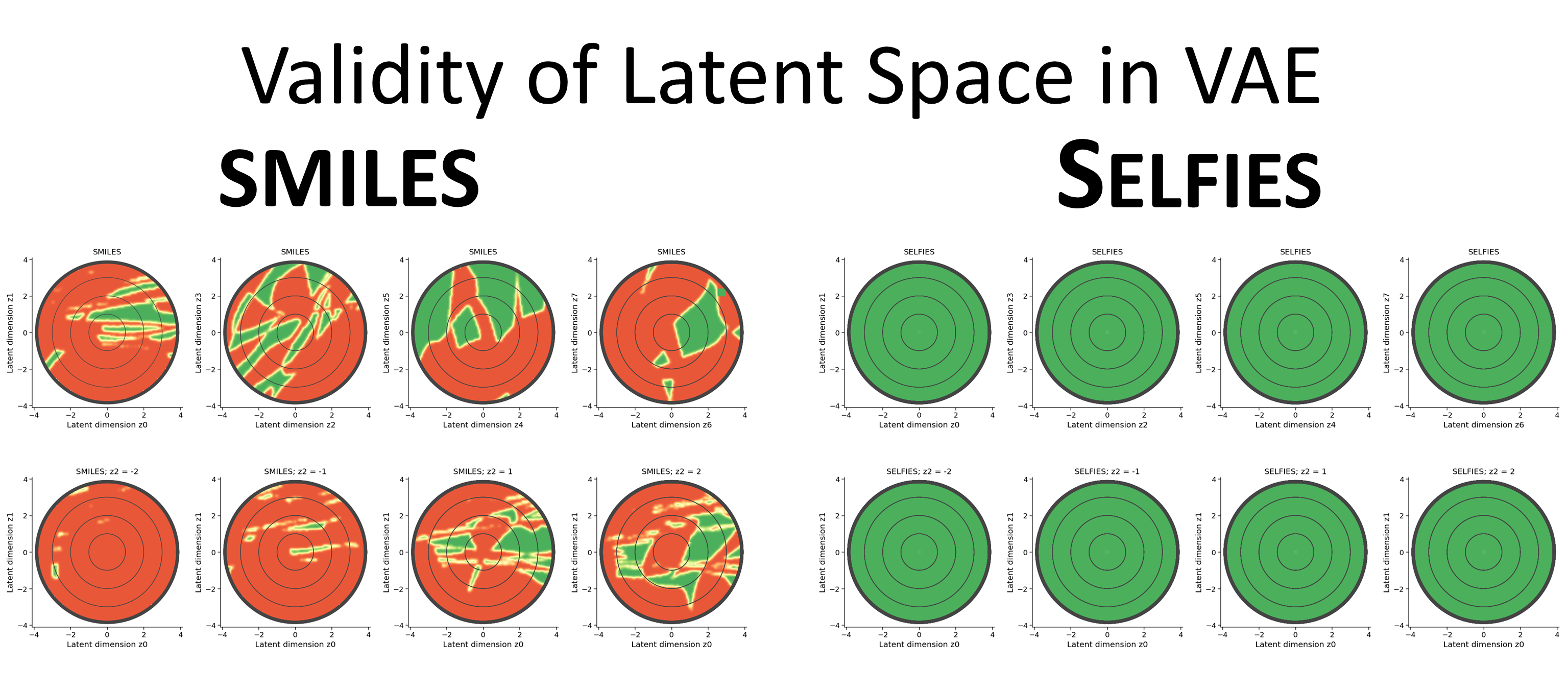

A main objective is to use SELFIES as direct input into machine learning models, in particular in generative models, for the generation of molecular graphs which are syntactically and semantically valid.

Use pip to install selfies.

```bash
pip install selfies
```

To check if the correct version of selfies is installed, use the following pip command.

```bash
pip show selfies
```

To upgrade to the latest release of selfies if you are using an older version, use the following pip command. Please see the CHANGELOG to review the changes between versions of selfies before upgrading:

```bash
pip install selfies --upgrade
```

Please refer to the documentation, which contains a thorough tutorial for getting started with selfies and detailed descriptions of the functions that selfies provides. We summarize some key functions below.

| Function | Description |
|---|---|
| `selfies.encoder` | Translates a SMILES string into its corresponding SELFIES string. |
| `selfies.decoder` | Translates a SELFIES string into its corresponding SMILES string. |
| `selfies.set_semantic_constraints` | Configures the semantic constraints that selfies operates on. |
| `selfies.len_selfies` | Returns the number of symbols in a SELFIES string. |
| `selfies.split_selfies` | Tokenizes a SELFIES string into its individual symbols. |
| `selfies.get_alphabet_from_selfies` | Constructs an alphabet from an iterable of SELFIES strings. |
| `selfies.selfies_to_encoding` | Converts a SELFIES string into its label and/or one-hot encoding. |
| `selfies.encoding_to_selfies` | Converts a label or one-hot encoding into a SELFIES string. |
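As a rough illustration of what the tokenization and encoding functions in this table compute, here is a minimal pure-Python sketch. It is not how selfies implements them: it assumes every symbol is a bracketed token such as `[C]` or `[=Branch1]` (so it rejects anything outside brackets, such as a `.` separating disconnected fragments), and `split_symbols`, `to_labels`, `to_one_hot` and `from_labels` are hypothetical helper names. The expected values match the outputs of the selfies examples in this README.

```python
import re
from typing import Dict, List

# Assumption: every SELFIES symbol is a bracketed token such as [C] or [=Branch1].
_SYMBOL = re.compile(r"\[[^\]]*\]")


def split_symbols(selfies: str) -> List[str]:
    """Hypothetical stand-in for sf.split_selfies: return the bracketed symbols."""
    symbols = _SYMBOL.findall(selfies)
    # Refuse input with characters outside brackets (e.g. '.' between fragments).
    if "".join(symbols) != selfies:
        raise ValueError(f"unsupported SELFIES string: {selfies!r}")
    return symbols


def to_labels(selfies: str, stoi: Dict[str, int], pad_to_len: int) -> List[int]:
    """Map symbols to integer labels, right-padding with '[nop]' up to pad_to_len."""
    symbols = split_symbols(selfies)
    symbols += ["[nop]"] * (pad_to_len - len(symbols))
    return [stoi[s] for s in symbols]


def to_one_hot(labels: List[int], vocab_size: int) -> List[List[int]]:
    """Expand each label into a one-hot row of length vocab_size."""
    return [[1 if i == label else 0 for i in range(vocab_size)] for label in labels]


def from_labels(labels: List[int], itos: Dict[int, str]) -> str:
    """Invert to_labels; the '[nop]' padding is kept in the returned string."""
    return "".join(itos[label] for label in labels)


# Usage on strings taken from the examples in this README.
benzene_sf = "[C][=C][C][=C][C][=C][Ring1][=Branch1]"
print(len(split_symbols(benzene_sf)))  # 8

alphabet = ["[=O]", "[C]", "[F]", "[O]", "[nop]"]
stoi = {s: i for i, s in enumerate(alphabet)}
itos = {i: s for s, i in stoi.items()}
labels = to_labels("[C][O][C]", stoi, pad_to_len=5)
print(labels)  # [1, 3, 1, 4, 4]
print(from_labels(labels, itos))  # [C][O][C][nop][nop]
```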

```python
import selfies as sf

benzene = "c1ccccc1"

# SMILES -> SELFIES -> SMILES translation
try:
    benzene_sf = sf.encoder(benzene)  # [C][=C][C][=C][C][=C][Ring1][=Branch1]
    benzene_smi = sf.decoder(benzene_sf)  # C1=CC=CC=C1
except sf.EncoderError:
    pass  # sf.encoder error!
except sf.DecoderError:
    pass  # sf.decoder error!

len_benzene = sf.len_selfies(benzene_sf)  # 8

symbols_benzene = list(sf.split_selfies(benzene_sf))
# ['[C]', '[=C]', '[C]', '[=C]', '[C]', '[=C]', '[Ring1]', '[=Branch1]']
```

You can get an "attribution" list that traces the connection between input and output tokens. For example, let's see which tokens in the SELFIES string `[C][N][C][Branch1][C][P][C][C][Ring1][=Branch1]` are responsible for the output SMILES tokens.

```python
selfies = "[C][N][C][Branch1][C][P][C][C][Ring1][=Branch1]"
smiles, attr = sf.decoder(
    selfies, attribute=True)
print('SELFIES', selfies)
print('SMILES', smiles)
print('Attribution:')
for smiles_token, a in attr:
    print(smiles_token)
    if a:
        for j, selfies_token in a:
            print(f'\t{j}:{selfies_token}')
```

Output:

```
SELFIES [C][N][C][Branch1][C][P][C][C][Ring1][=Branch1]
SMILES C1NC(P)CC1
Attribution:
C
	0:[C]
N
	1:[N]
C
	2:[C]
P
	3:[Branch1]
	5:[P]
C
	6:[C]
C
	7:[C]
```

`attr` is a list of tuples containing the output token and the SELFIES tokens and indices that led to it. For example, the `P` in the output SMILES is the result of both the `[Branch1]` token at index 3 and the `[P]` token at index 5. Attribution works for both encoding and decoding. For finer control over tracking the translation (such as tracking rings), you can access attributions in the underlying molecular graph with `get_attribution`.
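The attribution can also be read in the opposite direction, from each SELFIES symbol to the SMILES tokens it produced. The sketch below is only an illustration: it assumes the `(smiles_token, [(index, selfies_token), ...])` tuple shape that the attribution loop unpacks, hard-codes the attribution from the example output so it runs without selfies installed, and `invert_attribution` is a hypothetical helper rather than part of the selfies API.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Assumed shape: (SMILES token, [(SELFIES index, SELFIES token), ...]).
Attribution = List[Tuple[str, List[Tuple[int, str]]]]

# Attribution copied from the example output for
# [C][N][C][Branch1][C][P][C][C][Ring1][=Branch1] -> C1NC(P)CC1.
attr: Attribution = [
    ("C", [(0, "[C]")]),
    ("N", [(1, "[N]")]),
    ("C", [(2, "[C]")]),
    ("P", [(3, "[Branch1]"), (5, "[P]")]),
    ("C", [(6, "[C]")]),
    ("C", [(7, "[C]")]),
]


def invert_attribution(attr: Attribution) -> Dict[int, List[Tuple[int, str]]]:
    """Map each SELFIES index to the (position, token) pairs it contributed to.

    Positions count entries in `attr`, not characters in the SMILES string.
    """
    inverse: Dict[int, List[Tuple[int, str]]] = defaultdict(list)
    for position, (smiles_token, sources) in enumerate(attr):
        # Entries without attribution (empty or None) are skipped.
        for index, _selfies_token in sources or []:
            inverse[index].append((position, smiles_token))
    return dict(inverse)


for index, targets in sorted(invert_attribution(attr).items()):
    print(index, targets)
```

In this example, SELFIES indices 4, 8 and 9 do not appear in the result because the output above attributes no SMILES atom to them.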

In this example, we relax the semantic constraints of selfies to allow for hypervalences (caution: hypervalence rules are much less well understood than octet rules; some molecules containing hypervalences are important, but in general it is not known which of them are stable and reasonable).

```python
import selfies as sf

hypervalent_sf = sf.encoder('O=I(O)(O)(O)(O)O', strict=False)  # orthoperiodic acid
standard_derived_smi = sf.decoder(hypervalent_sf)
# OI (the default constraints for I allow for only 1 bond)

sf.set_semantic_constraints("hypervalent")
relaxed_derived_smi = sf.decoder(hypervalent_sf)
# O=I(O)(O)(O)(O)O (the hypervalent constraints for I allow for 7 bonds)
```

In this example, we first build an alphabet from a dataset of SELFIES strings, and then convert a SELFIES string into its padded encoding. Note that we use the `[nop]` (no operation) symbol to pad our SELFIES; it is a special SELFIES symbol that is always ignored and skipped over by `selfies.decoder`, which makes it a useful padding character.

```python
import selfies as sf

dataset = ["[C][O][C]", "[F][C][F]", "[O][=O]", "[C][C][O][C][C]"]
alphabet = sf.get_alphabet_from_selfies(dataset)
alphabet.add("[nop]")  # [nop] is a special padding symbol
alphabet = sorted(alphabet)  # ['[=O]', '[C]', '[F]', '[O]', '[nop]']
pad_to_len = max(sf.len_selfies(s) for s in dataset)  # 5
symbol_to_idx = {s: i for i, s in enumerate(alphabet)}

dimethyl_ether = dataset[0]  # [C][O][C]

label, one_hot = sf.selfies_to_encoding(
    selfies=dimethyl_ether,
    vocab_stoi=symbol_to_idx,
    pad_to_len=pad_to_len,
    enc_type="both"
)
# label = [1, 3, 1, 4, 4]
# one_hot = [[0, 1, 0, 0, 0], [0, 0, 0, 1, 0], [0, 1, 0, 0, 0], [0, 0, 0, 0, 1], [0, 0, 0, 0, 1]]
```

- More examples can be found in the `examples/` directory, including a variational autoencoder that runs on the SELFIES language.
- This ICLR2020 paper used SELFIES in a genetic algorithm to achieve state-of-the-art performance for inverse design, with the code here.
- SELFIES allows for highly efficient exploration and interpolation of the chemical space with deterministic algorithms; see code.
- We use SELFIES for Deep Molecular dreaming, a new generative model inspired by interpretable neural networks in computer vision. See the code of PASITHEA here.
- Kohulan Rajan, Achim Zielesny and Christoph Steinbeck show in two papers that SELFIES outperforms other representations in img2string and string2string translation tasks; see the code of DECIMER and STOUT.
- As an improvement to the earlier genetic algorithm, the authors have also released JANUS, which allows for more efficient optimization in the chemical space. JANUS makes use of STONED-SELFIES and a neural network for efficient sampling.

selfies uses pytest with tox as its testing framework. All tests can be found in the `tests/` directory. To run the test suite for SELFIES, install tox and run:

```bash
tox -- --trials=10000 --dataset_samples=10000
```

By default, selfies is tested against a random subset (of size `dataset_samples=10000`) on various datasets:

- 130K molecules from QM9

- 250K molecules from ZINC

- 50K molecules from a dataset of non-fullerene acceptors for organic solar cells

- 160K+ molecules from various MoleculeNet datasets

- 36M+ molecules from the eMolecules Database.

Due to its large size, this dataset is not included in the repository. To run tests on it, please download the dataset into the `tests/test_sets` directory and run the `tests/run_on_large_dataset.py` script.

See CHANGELOG.

We thank Jacques Boitreaud, Andrew Brereton, Nessa Carson (supersciencegrl), Matthew Carbone (x94carbone), Vladimir Chupakhin (chupvl), Nathan Frey (ncfrey), Theophile Gaudin, HelloJocelynLu, Hyunmin Kim (hmkim), Minjie Li, Vincent Mallet, Alexander Minidis (DocMinus), Kohulan Rajan (Kohulan), Kevin Ryan (LeanAndMean), Benjamin Sanchez-Lengeling, Andrew White, Zhenpeng Yao and Adamo Young for their suggestions and bug reports, and Robert Pollice for chemistry advice.