👉 Organisation notes:

- 💡 All material presented during the short course will be uploaded here and made available to participants 5 days prior to the event on this repository.

⚠️ Live participation to this short course requires registration to EGU's main meeting.- Due to the time-limited course schedule (60 min), an interactive overview will cover the course's objectives replacing an extensive hands-on.

- Further interests in solving PDEs with Julia on GPUs❓

- Sign-up (free) for a hands-on workshop at JuliaCon2021

- Check out this online geo-HPC tutorial

This short course covers trendy areas in modern geocomputing with broad geoscientific applications. The physical processes governing natural systems' evolution are often mathematically described as systems of differential equations. A performant numerical implementation of the solving algorithm leveraging modern hardware is key and permits to tackle problems that were technically not possible a decade ago.

- Objectives

- About this repository

- Getting started (not part of the live course)

- 👉 Short course material

- Extras (not part of the live course)

- Further reading

The goal of this short course is to offer an interactive overview on how to solve systems of (partial) differential equations in parallel on GPUs using the Julia language. Julia combines high-level language simplicity and low-level language performance. The resulting codes and applications are fast, short and readable [1, 2, 3].

We will design and implement a numerical algorithm that predicts ice flow dynamics over mountainous topography (Greenland) using GPU computing (e.g. Fig. below). We will discretise the shallow ice approximation (SIA) equations in our ice flow solver to assess Greenland's ice cap evolution as function of a climate scenario.

The online course consists of 2 parts:

- Part 1 - You will learn about the Julia language and iterative PDE solvers.

- Part 2 - You will implement a GPU parallel PDE solver to predict ice flow dynamics on real topography.

By the end of this short course, you will:

- Have an iterative GPU PDE solver that predicts ice-flow;

- Have a Julia code that achieves similar performance than legacy codes (C, CUDA, MPI);

- Know how the Julia language solves the "two-language problem";

- Be able to leverage the computing power of modern GPU accelerated servers and supercomputers.

💡 Disclaimer

- The solvers presented in this short course, based on the pseudo-transient method, enable to solve PDEs iteratively and are well-suited for parallel execution (on GPUs). It is not the purpose of this course to provide an extensive overview of various solution techniques.

- The performance assessment is done using the time / iteration metric which reflects the ability of the algorithm to efficiently exploit the memory bandwidth of the (parallel) hardware. Further performance considerations regarding the metric can be found here.

The course repository lists following folders and items:

- the data folder contains various low resolution Greenland input data (bedrock topography, surface elevation, ice thickness, masks, ...) downscaled from BedMachine Greenland v3 - note the filenames include grid resolution information

(nx, ny); - the docs folder contains documentation linked in the README;

- the various output folder will contain the codes output, mainly figures in png format;

- the scripts folder contains the scripts this course is about 🎉

- the extras folder contains supporting course material (not discussed live during the course);

- the

Project.tomlfile is a Julia project file, tracking the used packages and enabling a reproducible environment.

👉 This repository is an interactive and dynamic source of information related to the short course.

- Check out the Discussion tab if you have general comments, ideas to share or for Q&A.

- File an Issue if you encounter any technical problems with the distributed codes.

- Interact in a open-minded, respectful and inclusive manner.

⚠️ Due to the time limitation, the short course will not cover the Getting started steps. These are meant to provide directions to the participant willing to actively try out the examples during the short course. It is warmly recommended to perform the Getting started steps before the beginning of the workshop.

The provided directions will get you started with:

- Installing Julia v1.6 - 2 configurations are suggested:

- running Julia from the terminal with an external text editor

- running Julia from VS Code

- Running the scripts from the course repository.

👉 Note: This course relies on Julia v1.6. The install configuration are tested on a MacBook Pro running macOS 10.15.7, a Linux GPU server running Ubuntu 20.04 LTS and a Linux GPU server running CentOS 8.

Check you have an active internet connexion and download Julia v1.6 for your platform following the install directions provided under [help] if needed.

Alternatively, open a terminal and download the binaries (select the one for your platform):

wget https://julialang-s3.julialang.org/bin/winnt/x64/1.6/julia-1.6.0-win64.exe # Windows

wget https://julialang-s3.julialang.org/bin/mac/x64/1.6/julia-1.6.0-mac64.dmg # macOS

wget https://julialang-s3.julialang.org/bin/linux/x64/1.6/julia-1.6.0-linux-x86_64.tar.gz # Linux x86Then add Julia to PATH (usually done in your .bashrc, .profile, or config file).

Ensure you have a text editor with syntax highlighting support for Julia. From within the terminal, type

juliaand you should be all set.

If you'd enjoy a more IDE type of environment, check out VS Code. Follow the installation directions for the Julia VS Code extension.

To get started with the course,

- clone (or download the ZIP archive) the course repository (help here)

git clone https://github.com/luraess/julia-parallel-course-EGU21.git- Navigate to

julia-parallel-course-EGU21

cd julia-parallel-course-EGU21- From the terminal, launch Julia with the

--projectflag to read-in project environment related informations such as used packages

julia --project- From VS Code, follow the instructions from the documentation to get started.

Now that you launched Julia, you should be in the Julia REPL. You need to ensure all the packages you need to be installed before using them. To do so, enter the Pkg mode by typing ]. Then, instantiate the project which should trigger the download of the packages (st lists the package status). Exit the Pkg mode with CRTL+C:

julia> ]

(julia-parallel-course-EGU21) pkg> st

Status `~/julia-parallel-course-EGU21/Project.toml`

# [...]

(julia-parallel-course-EGU21) pkg> instantiate

Updating registry at `~/.julia/registries/General`

Updating git-repo `https://github.com/JuliaRegistries/General.git`

# [...]

julia>To test your install, go to the scripts folder and run the iceflow.jl code. You can execute shell commands from within the Julia REPL first typing ;:

julia> ;

shell> cd scripts/

julia> include("iceflow.jl")Running this the first time will (pre-)complie the various installed packages and will take some time. Subsequent runs, by executing include("iceflow.jl"), should take around 10s.

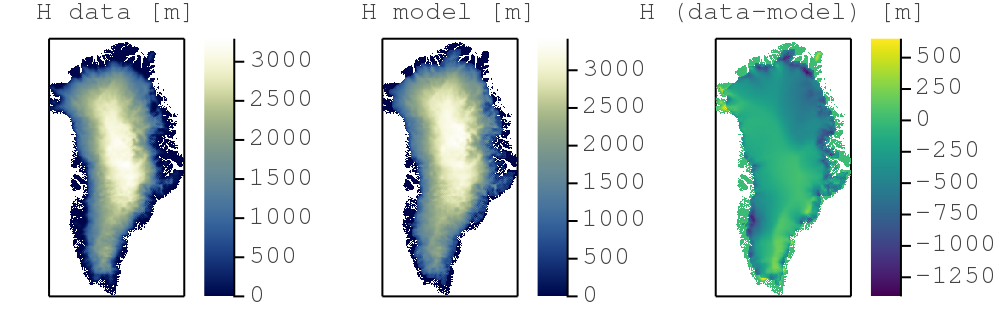

You should then see two figures saved in a newly created output folder, the second being the comparison between modelled and observed ice thickness distribution over Greenland:

On the CPU, multi-threading is made accessible via Base.Threads and the environment variable JULIA_NUM_THREADS can be used to define the number of cores to use on the CPU, e.g. export JULIA_NUM_THREADS=2 to enable 2 threads (2 CPU cores).

The CUDA.jl module permits to launch compute kernels on Nvidia GPUs natively from within Julia. JuliaGPU provides further reading and introductory material about GPU ecosystems within Julia. If you have an Nvidia CUDA capable GPU device, also export following environment vaiable prior to installing the CUDA.jl package:

export JULIA_CUDA_USE_BINARYBUILDER=falseWe will use the GPU acceleration in the second part of the course.

This section lists the material discussed within this 60 min. short course:

💡 In this course we will implement a 2D nonlinear diffusion equation on GPUs in Julia using the finite-difference method and an iterative solving approach. The goal is to resolve the shallow ice approximation (SIA) and predict ice flow over Greenland.

by M. Werder

🚧 WIP

- the cool Julia ecosystem

- git integration

- modern code dev and CI

- ...

Let's start with a 1D linear diffusion example to implement both an explicit and iterative implicit PDE solver. The diffusion of a quantity H over time t can be described as (1a) a diffusive flux, (1b) a flux balance and (1c) an update rule:

qH = -D*dH/dx (1a)

dHdt = -dqH/dx (1b)

dH/dt = dHdt (1c)The diffusion_1D_expl.jl code implements an iterative and explicit solution of eq. (1) for an initial Gaussian profile:

H = exp(-(x-lx/2.0)^2)How to go with an implicit solution and keeping it "matrix-free" ?

The diffusion_1D_impl.jl code implements an iterative implicit solution of eq. (1). How ? We add the physical time derivative dh/dt=(H-Hold)/dt to the rate of change (or residual) dHdt

dHdt = -(H-Hold)/dt -dqH/dxand iterate until the values of dHdt (the residual of the eq. (1)) drop below a defined tolerance level tol.

It works, but the "naive" Picard iteration count seems to be pretty high (niter>1000). A efficient way to circumvent this is to add "damping" (damp) to the rate-of-change dHdt, analogous to add friction enabling faster convergence [4]

dHdt = -(H-Hold)/dt -dqH/dx + damp*dHdtThe diffusion_1D_damp.jl code implements a damped iterative implicit solution of eq. (1). The iteration count drops to niter<200. This pseudo-transient approach enables fast as the iteration count sclaes close to O(N) and not O(N^2).

Performance evaluation is a complex topic as different metrics would lead to different conclusions. Ultimately, efficient algorithms should minimise the time to solution. For iterative algorithms this means:

- Ensure fast iterations (minimise the time per iteration).

- Keep the iteration count as low as possible avoiding it to increase to much when increasing the numerical resolution.

We will here report (1) for various implementations on various computer architectures.

Let's move from the simple 1D linear diffusion example to the shallow ice approximation (SIA) equation, a 2D nonlinear diffusion equation:

qHx = -D*dS/dx (2a)

qHy = -D*dS/dy (2b)

dHdt = -(dqHx/dx + dqHy/dy) + M (2c)

dH/dt = dHdt (2d)where S=B+H is the surface elevation, B is the bedrock elevation, H the ice thickness, M the mass balance (accumulation, ablation). The diffusion coefficient D is nonlinear and function of S and the power-law exponent n:

D = a*H^(npow+2)*sqrt((dS/dx)^2 + (dS/dy)^2)^(npow-1)We implement climate forcing using a simple mass balance (accumulation and ablation) M formulation:

M = min(grad_b*(S - z_ELA), b_max)as function of the surface elevation S and capped by the maximal accumulation rate b_max. The mass balance gradient grad_b is defined as

grad_b = (1.3517 - 0.014158*LAT)/100.0*0.91where LAT is the latitude (taken from [5]). The equilibrium line altitude z_ELA (where accumulation = ablation) is latitude dependent, ranging from 1300m (South) to 1000m (North) as suggested by [5].

The iceflow.jl code implements the 2D SIA equations using the iterative implicit damped formulation as in diffusion_1D_damp.jl using the pseudo-transient approach. The calculation of the SIA PDEs resumes in these 13 lines of Julia code:

# mass balance

M .= min.(grad_b.*(S .- z_ELA), b_max)

# compute diffusivity

dSdx .= diff(S, dims=1)/dx

dSdy .= diff(S, dims=2)/dy

gradS .= sqrt.(av_ya(dSdx).^2 .+ av_xa(dSdy).^2)

D .= a*av(H).^(npow+2) .* gradS.^(npow-1)

# compute flux

qHx .= .-av_ya(D).*diff(S[:,2:end-1], dims=1)/dx

qHy .= .-av_xa(D).*diff(S[2:end-1,:], dims=2)/dy

# update ice thickness

dtau .= dtausc*min.(10.0, cfl./(epsi .+ av(D)))

ResH .= .-(diff(qHx, dims=1)/dx .+ diff(qHy, dims=2)/dy) .+ inn(M)

dHdt .= dHdt.*damp .+ ResH

H[2:end-1,2:end-1] .= max.(0.0, inn(H) .+ dtau.*dHdt)

# apply mask

H[Mask.==0] .= 0.0

# update surface

S .= B .+ H💡 Note that the here discussed SIA codes do not implement any flux limiter scheme to circumvent known accuracy and stability issues. Check out [6, 7] for further references (the

iceflow_bench.jlscript implements the benchmark [6] that reflects this issue).

The model output is the ice surface elevation, the ice thickness, the ice velocity magnitude and the mass balance:

This implementation of the SIA equations solves the steady-state (i.e. the physical time derivative being removed as dt->∞). The last part of this course (Greenland's ice cap evolution) will show how to achieve an (implicit) ice flow predictions over a specific time span dt by including the physical time derivative in the ResH term.

The figure below depicts the time 1000 iterations (or pseudo-time steps) take running the iceflow.jl code on a Intel Xeon Gold 6244 (1 thread - plain Julia):

So now we have a cool iterative and implicit SIA solver in less than 100 lines of code 🎉. Good enough for low resolution calculations. What if we need higher resolution and faster time to solution ? Parallel and GPU computing makes it possible. Let's start from the iceflow.jl code and port it to GPU (with some intermediate steps).

The main idea of GPU parallelisation is to calculate each grid point concurently by a different GPU thread (instaed of the more serial CPU execution) as depicted hereafter:

- Extract the flux

qHx, qHyfrom the physics calculations iniceflow.jl:

# [...] skipped lines

# compute flux

qHx .= .-av_ya(D).*diff(S[:,2:end-1], dims=1)/dx

qHy .= .-av_xa(D).*diff(S[2:end-1,:], dims=2)/dy

# [...] skipped lines- Let's modify these lines into a

compute_fluxfunction we will then be able to turn into a GPU kernel.

# [...] skipped lines

function compute_flux!(qHx, qHy, S, D, dx, dy, nx, ny)

Threads.@threads for iy=1:ny

for ix=1:nx

if (ix<=nx-1 && 2<=iy<=ny-1) qHx[ix,iy] = -0.5*(D[ix,iy]+D[ix+1,iy])*(S[ix+1,iy]-S[ix,iy])/dx end

if (2<=ix<=nx-1 && iy<=ny-1) qHy[ix,iy] = -0.5*(D[ix,iy]+D[ix,iy+1])*(S[ix,iy+1]-S[ix,iy])/dy end

end

end

return

end

# [...] skipped lines

compute_flux!(qHx, qHy, S, D, dx, dy, nx, ny)

# [...] skipped lines💡 Julia enables multi-threading capabilities by simply adding

Threads.@threadsto the outermost loop (here overiy).

- The last step is to replace the (multi-threaded) loops by a vectorised GPU index

ix = (blockIdx().x-1) * blockDim().x + threadIdx().x

iy = (blockIdx().y-1) * blockDim().y + threadIdx().yspecific to GPU execution. Each ix and iy are executed concurrently on a different GPU thread:

using CUDA

# [...] skipped lines

compute_flux!(qHx, qHy, S, D, dx, dy, nx, ny)

ix = (blockIdx().x-1) * blockDim().x + threadIdx().x

iy = (blockIdx().y-1) * blockDim().y + threadIdx().y

if (ix<=nx-1 && 2<=iy<=ny-1) qHx[ix,iy] = -0.5*(D[ix,iy]+D[ix+1,iy])*(S[ix+1,iy]-S[ix,iy])/dx end

if (2<=ix<=nx-1 && iy<=ny-1) qHy[ix,iy] = -0.5*(D[ix,iy]+D[ix,iy+1])*(S[ix,iy+1]-S[ix,iy])/dy end

return

end

# [...] skipped lines

@cuda blocks=cublocks threads=cuthreads compute_flux!(qHx, qHy, S, D, dx, dy, nx, ny)

synchronize()

# [...] skipped lines💡 We use

@cuda blocks=cublocks threads=cuthreadsto launch the GPU function on the appropriate number of threads, i.e. "parallel workers". The numerical grid resolutionnxandnymust now be chosen accordingly to the number of parallel workers.

The here described porting procedure steps are exemplified for the 1D diffusion equation (Porting the diffusion equation to GPUs) available in the extra material:

- (1)

diffusion_1D_damp.jl - (2)

diffusion_1D_damp_fun.jl - (3)

diffusion_1D_damp_gpu.jl - (4)

diffusion_1D_damp_xpu.jl

Wouldn't it be great to be able to combine the multi-thread CPU and GPU implementations into a single "XPU" code to be able to run on various hardware with only changing a USE_GPU switch ? Using ParallelStencil.jl enables this, as many more cool features. The iceflow_xpu.jl script uses ParallelStencil.jl for an XPU implementation on various backends:

const USE_GPU = false

using ParallelStencil

using ParallelStencil.FiniteDifferences2D

@static if USE_GPU

@init_parallel_stencil(CUDA, Float64, 2)

else

@init_parallel_stencil(Threads, Float64, 2)

end

# [...] skipped lines

@parallel function compute_flux!(qHx, qHy, D, S, dx, dy)

@all(qHx) = -@av_ya(D)*@d_xi(S)/dx

@all(qHy) = -@av_xa(D)*@d_yi(S)/dy

return

end

# [...] skipped lines

@parallel compute_flux!(qHx, qHy, D, S, dx, dy)

# [...] skipped lines💡 Various macros

@(...)permit to deal with the low-level technicalities and theUSE_GPUflag enables to switch between CPU and GPU backend.

The resulting code is short and readable and solves the "two-language problem"; development and production code implementations are regrouped into a single code.

The figure below depicts the time 1000 iterations (or pseudo-time steps) take running the iceflow_xpu.jl code on:

- an Intel Xeon Gold 6244 (4 threads - cores)

- an Nvidia RTX 2070 GPU

We can finally use our XPU ice flow solver to simulate the evolution of Greenland's ice cap for a specific climate scenario. The steps are following:

- Compute the steady-state ice thickness for current climate forcing (mass balance function as described in SIA equation section)

- Apply a time-dependent linear increase of the ELA (the equilibrium line where accumulation = ablation) of 1m/yr over the next 2500yrs. We here assume that an increase in annual mean temperature of 0.6°C is equivalent to a 100m shift of the ELA, thus 1m/yr represents 0.3°C per 50yrs. The

iceflow_xpu_evo.jlcode implements this climate evolution model and produces the following predictions for the Greenland ice cap evolution:

Note that our climate scenario may reflect some extreme warming over the next 2500yrs.

⚠️ Due to the time limitation, the short course will not cover the extras material. This information complements the course's main content and may provide intermediate and/or additional development steps.

Extra material comprises:

This extra example complements the GPU SIA implementation and the XPU SIA implementation.

Let's start from the diffusion_1D_damp.jl code, a cool iterative and implicit solver in about 30 lines of code 🎉, and port it to GPU (with some intermediate steps).

- Extract the physics calculations from

diffusion_1D_damp.jl, i.e. the time loop:

# [...] skipped lines

qH .= -D*diff(H)/dx

dHdt .= -(H[2:end-1].-Hold[2:end-1])/dt .-diff(qH)/dx .+ damp*dHdt

H[2:end-1] .= H[2:end-1] .+ dtau*dHdt

# [...] skipped lines- Split the calculations into separate functions (or kernels) and call those functions within the time loop. The

diffusion_1D_damp_fun.jlimplements those modifications:

function compute_flux!(qH, H, D, dx, nx)

Threads.@threads for ix=1:nx

if (ix<=nx-1) qH[ix] = -D*(H[ix+1]-H[ix])/dx end

end

return

end

function compute_rate!(dHdt, H, Hold, qH, dt, damp, dx, nx)

Threads.@threads for ix=1:nx

if (2<=ix<=nx-1) dHdt[ix-1] = -(H[ix] - Hold[ix])/dt -(qH[ix]-qH[ix-1])/dx + damp*dHdt[ix-1] end

end

return

end

function compute_update!(H, dHdt, dtau, nx)

Threads.@threads for ix=1:nx

if (2<=ix<=nx-1) H[ix] = H[ix] + dtau*dHdt[ix-1] end

end

return

end

# [...] skipped lines

compute_flux!(qH, H, D, dx, nx)

compute_rate!(dHdt, H, Hold, qH, dt, damp, dx, nx)

compute_update!(H, dHdt, dtau, nx)

# [...] skipped lines💡 Julia enables multi-threading capabilities by simply adding

Threads.@threadsto the outermost loop (here overix).

- The last step is to replace the (multi-threaded) loop by a vectorised index

ix = (blockIdx().x-1) * blockDim().x + threadIdx().xspecific to GPU execution. Eachixis executed concurrently on a different GPU thread. Thediffusion_1D_damp_gpu.jlimplements those modifications to run on GPUs:

using CUDA

# [...] skipped lines

function compute_flux!(qH, H, D, dx, nx)

ix = (blockIdx().x-1) * blockDim().x + threadIdx().x

if (ix<=nx-1) qH[ix] = -D*(H[ix+1]-H[ix])/dx end

return

end

function compute_rate!(dHdt, H, Hold, qH, dt, damp, dx, nx)

ix = (blockIdx().x-1) * blockDim().x + threadIdx().x

if (2<=ix<=nx-1) dHdt[ix-1] = -(H[ix] - Hold[ix])/dt -(qH[ix]-qH[ix-1])/dx + damp*dHdt[ix-1] end

return

end

function compute_update!(H, dHdt, dtau, nx)

ix = (blockIdx().x-1) * blockDim().x + threadIdx().x

if (2<=ix<=nx-1) H[ix] = H[ix] + dtau*dHdt[ix-1] end

return

end

# [...] skipped lines

@cuda blocks=cublocks threads=cuthreads compute_flux!(qH, H, D, dx, nx)

synchronize()

@cuda blocks=cublocks threads=cuthreads compute_rate!(dHdt, H, Hold, qH, dt, damp, dx, nx)

synchronize()

@cuda blocks=cublocks threads=cuthreads compute_update!(H, dHdt, dtau, nx)

synchronize()

# [...] skipped lines💡 We use

@cuda blocks=cublocks threads=cuthreadsto launch the GPU function on the appropriate number of threads, i.e. "parallel workers". The numerical grid resolutionnxmust now be chosen accordingly to the number of workersnx=cublocks*cuthreads.

Wouldn't it be great to be able to combine the multi-thread CPU and GPU implementations into a single "XPU" code to be able to run on various hardware with only changing a USE_GPU switch ? Using ParallelStencil.jl enables this, as well more other cool features. The diffusion_1D_damp_xpu.jl uses ParallelStencil.jl for an XPU implementation on various backends:

const USE_GPU = false

using ParallelStencil

using ParallelStencil.FiniteDifferences1D

@static if USE_GPU

@init_parallel_stencil(CUDA, Float64, 1)

else

@init_parallel_stencil(Threads, Float64, 1)

end

# [...] skipped lines

@parallel function compute_flux!(qH, H, D, dx)

@all(qH) = -D*@d(H)/dx

return

end

@parallel function compute_rate!(dHdt, H, Hold, qH, dt, damp, dx)

@all(dHdt) = -(@all(H) - @all(Hold))/dt -@d(qH)/dx + damp*@all(dHdt)

return

end

@parallel function compute_update!(H, dHdt, dtau)

@inn(H) = @inn(H) + dtau*@all(dHdt)

return

end

# [...] skipped lines

@parallel compute_flux!(qH, H, D, dx)

@parallel compute_rate!(dHdt, H, Hold, qH, dt, damp, dx)

@parallel compute_update!(H, dHdt, dtau)

# [...] skipped linesVarious macros @(...) permit to deal with the low-level technicalities and the USE_GPU flag enables to switch between CPU and GPU backend.

Using the inversion approach proposed by [7], our ice flow solver iceflow.jl can be embedded into an inversion framework to retrieve spatially variable maximum accumulation rate b_max in order to constrain ice thickness distribution over Greenland. The following animation depicts the evolution of the inversion procedure as function of the 30 inversion steps and was produced using the iceflow_inverse.jl code. Gam represents the misfit between the observed Hice and the calculated H ice thickness, B_max represents the spatially variable maximal accumulation.

The ice thickness obtained from the inversion procedure can be further compared to the BedMachine Greenland v3 ice thickness data:

💡 Note that the inversion procedure serves here as proof of concept, as higher resolution and finer tuning may be needed to further improve the misfit minimisation.

Majority of stencil based codes as in this course are memory bounded, meaning the limiting factor in performance is the rate at which memory is transferred from and back between the memory and the arithmetic units. The maximal rate at which the memory transfers occur is the memory copy rate, in the order of 50 GB/s for CPUs and about 1 TB/s for modern GPUs. The effective memory throughput metric (T_eff) measures how good an iterative stencil-based algorithm performs in terms of memory throughput, to be compared to the memory copy rate.

Check out the performance metric section from the ParallelStencil.jl module and this JuliaCon2020 presentation [1].

Check out this material to figure out how combining ImplicitGlobalGrid.jl to ParallelStencil.jl enables efficient distributed memory parallelisation on multiple XPUs.