· Implementation of CNN for Natural Images Classifier ·

The dataset used to build the model is perfectly balanced: every class contains the same number of images. This matters because an unbalanced dataset is detrimental to training, since the network tends to favor the over-represented classes.
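A quick way to verify the balance described above is to count the labels per class. The following is a minimal sketch, assuming the labels are available as a plain list of class names; the names `class_counts`, `is_balanced`, and the sample labels are illustrative placeholders, not part of the original project.

```python
from collections import Counter


def class_counts(labels):
    """Return how many samples each class has."""
    return Counter(labels)


def is_balanced(labels):
    """True when every class has exactly the same number of samples."""
    counts = class_counts(labels)
    return len(set(counts.values())) <= 1


# Hypothetical labels; the class names are placeholders, not the real dataset.
labels = ["cat", "dog", "car", "cat", "dog", "car"]
print(class_counts(labels))
print(is_balanced(labels))  # True
```

In practice the label list would be built from the dataset's folder names or annotation file before training starts.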

The object in each image should be well centered and occupy as much of the frame as possible, because the convolutional filters slide across the image to extract its characteristic patterns. If the object sits at the far left edge, for example, the filters moving from left to right detect its features there, but the rest of the image contains nothing useful and contributes no features.

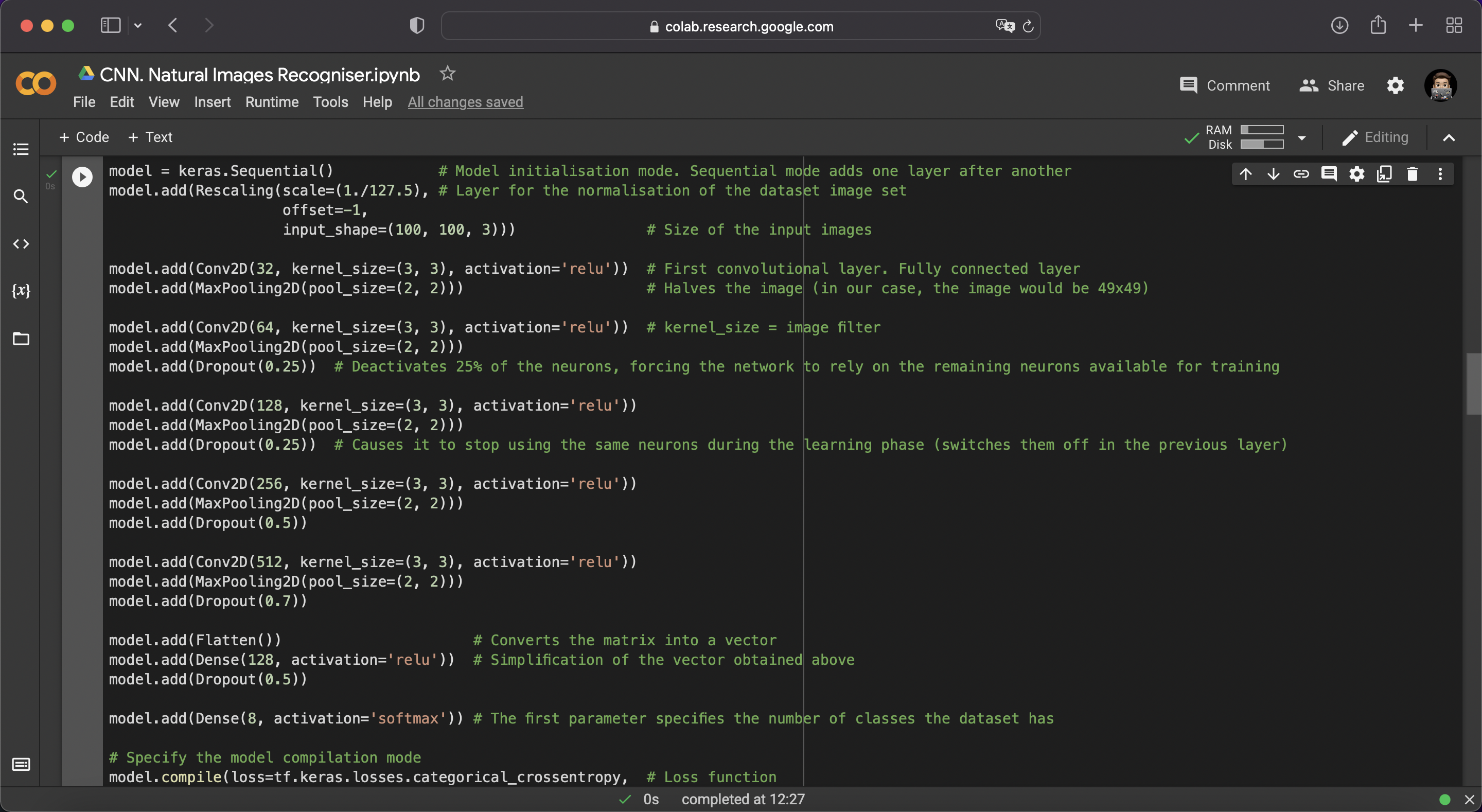

- Dropout Layer

Dropout is a regularization technique for neural network models proposed by Nitish Srivastava et al. in their 2014 paper “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”.

Dropout is a technique where randomly selected neurons are switched off during the training phase. They are “dropped out” randomly. This means that their contribution to the activation of downstream neurons is temporarily removed. As a neural network learns, neuron weights settle into their context within the network. The weights of each neuron are tuned for specific features, which gives the neuron some specialization according to the features of the dataset.

Neighboring neurons come to rely on this specialization, which, if taken too far, can result in a fragile model that is too specialized to the training data. This reliance on the context of a neuron during training is referred to as complex co-adaptation and is a form of overfitting to the training dataset.

You can imagine that if neurons are randomly dropped out of the network during training, the remaining neurons have to step in and handle the representation required to make predictions for the missing neurons. This is believed to result in multiple independent internal representations being learned by the network.

Therefore the effect is that the network becomes less sensitive to the specific weights of neurons. This in turn results in a network that is capable of better generalization and is less likely to overfit the training data.
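The mechanism can be sketched in a few lines of plain Python. This is a minimal illustration, not the layer used in the project: it assumes the common "inverted dropout" variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The function name `dropout` and its parameters are illustrative.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout on a list of activations.

    During training, each activation is zeroed with probability `rate`
    and the survivors are scaled by 1 / (1 - rate). At inference time
    the activations pass through unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Roughly half of the activations are dropped; the rest are doubled.
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=random.Random(0)))
# At inference time nothing is dropped.
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, training=False))
```

Because a different random subset is dropped on every training step, no neuron can depend on a specific neighbor always being present.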

- Flatten Layer

Flattening converts the data into a 1-dimensional array so that it can be fed to the next layer. We flatten the output of the convolutional layers to create a single long feature vector, which is then connected to the final classification model, called a fully-connected layer.
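As an illustration of the idea, the sketch below flattens a small nested list in row-major order. It assumes the feature maps are stored as nested Python lists (height x width x channels); the function name `flatten` and the sample values are placeholders chosen for this example.

```python
def flatten(feature_maps):
    """Flatten arbitrarily nested lists into one flat list, in row-major order."""
    flat = []
    for item in feature_maps:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat


# A hypothetical 2 x 2 feature map with 3 channels per position.
maps = [[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]]
vector = flatten(maps)
print(len(vector))  # 12
```

The length of the resulting vector is the product of the input dimensions, here 2 * 2 * 3 = 12.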

- Dense Layer

The dense layer is a neural network layer that is densely connected to its preceding layer, which means that each of its neurons is connected to every neuron of the preceding layer. It is the most commonly used layer in neural networks.

Each neuron in a dense layer receives the output of every neuron in the preceding layer, and the layer as a whole performs a matrix-vector multiplication. The values in the matrix are parameters that are trained and updated through backpropagation.

The output generated by the dense layer is an m-dimensional vector, where m is the number of neurons in the layer. Thus, the dense layer is basically used for changing the dimensions of the vector. Dense layers also apply operations like rotation, scaling, and translation to the vector.
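The matrix-vector multiplication described above can be written out directly. This is a minimal sketch for a single input vector, assuming the weights are stored with one row per output neuron and that a bias vector provides the translation; the names `dense` and `relu` and the sample numbers are illustrative.

```python
def dense(inputs, weights, biases, activation=None):
    """Compute activation(W x + b) for one input vector.

    `weights` holds one row per output neuron; each row must be as long
    as `inputs`. The output has one value per row (m values in total).
    """
    outputs = []
    for row, bias in zip(weights, biases):
        if len(row) != len(inputs):
            raise ValueError("each weight row must match the input length")
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def relu(z):
    """Rectified linear unit: negative values become zero."""
    return max(0.0, z)


x = [1.0, 2.0, 3.0]                       # 3-dimensional input
W = [[0.5, -1.0, 0.0], [1.0, 1.0, 1.0]]   # 2 neurons, so m = 2
b = [0.0, 0.5]
print(dense(x, W, b))                     # [-1.5, 6.5]
print(dense(x, W, b, activation=relu))    # [0.0, 6.5]
```

Here a 3-dimensional input is mapped to a 2-dimensional output, which is the change of dimension the text refers to.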

- Crossentropy Function

The objective is to develop a neural network that classifies images into several classes. To meet this objective satisfactorily, the accuracy of the network should be as high as possible, which indicates that overfitting has been reduced and that the network is reliable. To limit the overfitting the network may suffer, the number of filters applied is adjusted, and the error of the network is estimated with the following function:

Loss Function: -log(p), where p is the probability the network assigns to the correct class

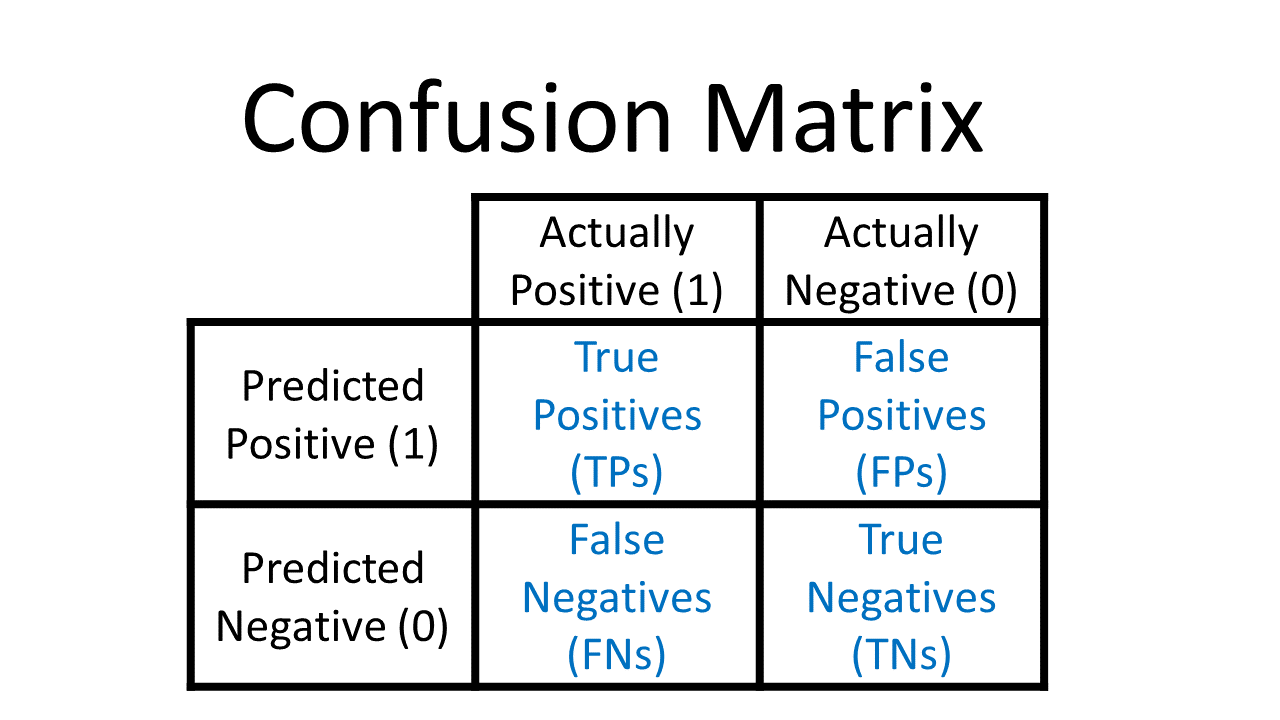
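To make the behavior of the loss concrete, the sketch below evaluates it for a single sample. It assumes the standard categorical cross-entropy, that is, the negative logarithm of the probability predicted for the true class; the function name `categorical_cross_entropy`, the `eps` clamp, and the sample probabilities are assumptions made for this illustration.

```python
import math


def categorical_cross_entropy(probabilities, true_index, eps=1e-12):
    """Return -log of the probability predicted for the true class.

    `eps` clamps the probability away from zero to avoid log(0).
    """
    p = min(max(probabilities[true_index], eps), 1.0)
    return -math.log(p)


# Hypothetical softmax outputs for a 3-class problem; the true class is index 1.
confident = categorical_cross_entropy([0.05, 0.90, 0.05], true_index=1)
wrong = categorical_cross_entropy([0.70, 0.20, 0.10], true_index=1)
print(confident < wrong)  # True
```

The loss approaches zero as the probability of the correct class approaches 1, and grows quickly as that probability falls toward 0.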

- Confusion Matrix

A confusion matrix is a tool that allows visualization of the performance of a neural network. Each column of the matrix represents the number of predictions in each class, while each row represents the instances in the actual class. One of the benefits of confusion matrices is that they make it easy to see if the system is confusing two classes.

Precision = TP / (TP + FP) → TP should be as high as possible and FP as low as possible. It reflects the impact of the False Positives.

Recall = TP / (TP + FN) → TP should be as high as possible and FN as low as possible. It reflects the impact of the False Negatives.

F1-Score = 2 * precision * recall / (precision + recall) → Combines the Precision and Recall metrics into a single metric, for which the highest possible value is sought.
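The matrix and the three metrics can be computed by hand for a small example. The following is a minimal sketch, assuming rows are actual classes and columns are predicted classes, as described above; the function names and the sample labels are illustrative and do not come from the project's results.

```python
def confusion_matrix(actual, predicted, classes):
    """Build a matrix whose rows are actual classes and columns are predictions."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


def per_class_metrics(matrix, k):
    """Return (precision, recall, f1) for the class at index k."""
    tp = matrix[k][k]
    fp = sum(row[k] for row in matrix) - tp   # predicted k, actually another class
    fn = sum(matrix[k]) - tp                  # actually k, predicted another class
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical labels for a two-class problem.
classes = ["cat", "dog"]
actual = ["cat", "cat", "cat", "dog", "dog", "dog"]
predicted = ["cat", "cat", "cat", "cat", "dog", "dog"]

matrix = confusion_matrix(actual, predicted, classes)
print(matrix)  # [[3, 0], [1, 2]]
for k, name in enumerate(classes):
    print(name, per_class_metrics(matrix, k))
```

In this example one dog is predicted as a cat, which lowers the precision of the cat class and the recall of the dog class.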

- Data Augmentation

Data augmentation is a technique that artificially enlarges a training set by creating modified copies of the existing data. It is good practice to use data augmentation when you want to avoid overfitting the neural network, when the initial dataset is too small to train on, or when you want to squeeze better performance out of the model.
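As a rough illustration of the technique, and not the project's own augmentation code, the sketch below produces flipped and rotated copies of small images stored as nested lists of pixel values. The choice of transforms and all function names are assumptions made for this example.

```python
import random


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def vertical_flip(image):
    """Mirror the image top to bottom."""
    return [list(row) for row in image[::-1]]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(images, rng=None):
    """Return the originals plus one randomly transformed copy of each."""
    rng = rng or random.Random()
    transforms = [horizontal_flip, vertical_flip, rotate_90]
    augmented = list(images)
    for image in images:
        augmented.append(rng.choice(transforms)(image))
    return augmented


# A hypothetical 2 x 2 grayscale image.
image = [[1, 2], [3, 4]]
print(horizontal_flip(image))  # [[2, 1], [4, 3]]
print(rotate_90(image))        # [[3, 1], [4, 2]]
print(len(augment([image, image], rng=random.Random(0))))  # 4
```

Each transformed copy keeps the label of its original image, so the training set doubles in size without any new labeling work.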

The code implemented to apply the data augmentation to a dataset is available for downloading here.

The dataset used for the training and validation of the developed convolutional neural network is available here.

The result of the neural network run with the adjustment of the corresponding hyperparameters is available here.
