🚀 [20240504] SelfReformer with Swin backbone (params. ~220M) has been released!

DUTS-TR checkpoint Link, Pre-calculated map Link, Swin backbone Link. Please place the Swin backbone under the model/pretrain folder. A new branch for SelfReformer-Swin will be released soon!

🚀 [20240223] Our arXiv paper SelfReformer was accepted by IEEE Transactions on Multimedia (TMM)!

Ubuntu 18.04

Python==3.8.3

Torch==1.8.2+cu111

Torchvision==0.9.2+cu111

kornia

We also use git-lfs for large-file management. Please install it as well; otherwise the .pth file might not be downloaded correctly. If the LFS download fails, you can download the file via Google Drive HERE

All datasets should be organized as follows:

    |__dataset_name
        |__Images: xxx.jpg ...
        |__Masks : xxx.png ...

For training, put your dataset folder under:

dataset/

For evaluation, download the datasets below and place them under:

dataset/benchmark/

If DUTS-TR is used for training, the overall folder structure should be:

    |__dataset
        |__DUTS-TR
            |__Images: xxx.jpg ...
            |__Masks : xxx.png ...
        |__benchmark
            |__ECSSD
                |__Images: xxx.jpg ...
                |__Masks : xxx.png ...
            |__HKU-IS
                |__Images: xxx.jpg ...
                |__Masks : xxx.png ...
            ...

ECSSD || HKU-IS || DUTS-TE || DUT-OMRON || PASCAL-S
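Before training or evaluating, it can help to confirm that a dataset folder follows the layout described above. The snippet below is a minimal sketch, not part of this repository: `check_dataset` is a hypothetical helper, and it assumes that each image and its mask share the same base name (xxx.jpg and xxx.png), as the layout suggests.

```python
from pathlib import Path


def check_dataset(root):
    """Return a list of layout problems found under one dataset folder."""
    root = Path(root)
    images_dir, masks_dir = root / "Images", root / "Masks"

    # Both sub-folders must exist before files can be compared.
    problems = [
        f"missing folder: {d.name}"
        for d in (images_dir, masks_dir)
        if not d.is_dir()
    ]
    if problems:
        return problems

    # Assumption: xxx.jpg in Images pairs with xxx.png in Masks,
    # so the comparison uses file stems rather than full names.
    image_stems = {p.stem for p in images_dir.iterdir() if p.is_file()}
    mask_stems = {p.stem for p in masks_dir.iterdir() if p.is_file()}

    for stem in sorted(image_stems - mask_stems):
        problems.append(f"image without mask: {stem}")
    for stem in sorted(mask_stems - image_stems):
        problems.append(f"mask without image: {stem}")
    return problems


if __name__ == "__main__":
    # Example path only; point this at your own dataset folder.
    for issue in check_dataset("dataset/DUTS-TR"):
        print(issue)
```

An empty result means every image has a matching mask and vice versa. The same call can be repeated for each folder under dataset/benchmark/.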

First, make sure you have enough GPU memory.

With the default setting (batchsize=16), 24 GB of GPU memory is required, but you can always reduce the batch size to fit your hardware.
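As a rough starting point for choosing a smaller batch size, the reference figures above (batch size 16 at 24 GB) can be scaled down linearly. This is only a sketch under a loud assumption: memory use does not scale exactly linearly with batch size, because the model weights take a fixed share, so the suggested value may still need adjusting. `suggest_batch_size` is a hypothetical helper, not part of this repository.

```python
def suggest_batch_size(gpu_mem_gb, ref_batch=16, ref_mem_gb=24.0):
    """Scale the reference batch size linearly with available GPU memory.

    Assumption: memory grows roughly in proportion to batch size.
    The result is capped at the reference batch size and never drops below 1.
    """
    if gpu_mem_gb <= 0:
        raise ValueError("gpu_mem_gb must be positive")
    scaled = int(ref_batch * gpu_mem_gb / ref_mem_gb)
    return max(1, min(ref_batch, scaled))


if __name__ == "__main__":
    for mem in (8, 12, 24):
        print(f"{mem} GB -> batch size {suggest_batch_size(mem)}")
```

If training still runs out of memory with the suggested value, lower it further; the batch size option itself is defined in option.py.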

The default values in option.py already match the configuration used in our paper. After setting the --dataset_root flag in option.py, train the model (default dataset: DUTS-TR) with:

python main.py --GPU_ID 0

To test a model located in the ckpt folder (default dataset: DUTS-TE), run:

python main.py --test_only --pretrain "bal_bla.pt" --GPU_ID 0

If you want to train/test with different settings, please refer to option.py for more control options.

Training is currently supported on a single GPU only.

Pre-calculated saliency map: [Google]

Pre-trained model trained on DUTS-TR for testing on DUTS-TE: [Google]

First, obtain predictions with:

python main.py --test_only --pretrain "xxx/bal_bla.pt" --GPU_ID 0 --save_result --save_msg "abc"

The output will be saved in ./output/abc if you specify the --save_msg flag.
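The training, testing, and prediction commands in this README differ only in a few flags, so they can be assembled from one helper when scripting several runs. The sketch below is illustrative only: `build_command` is a hypothetical function that uses just the flags shown in this README (--GPU_ID, --test_only, --pretrain, --save_result, --save_msg) and does not inspect option.py.

```python
import shlex


def build_command(gpu_id=0, pretrain=None, test_only=False, save_msg=None):
    """Assemble a main.py command line from the flags shown in this README."""
    cmd = ["python", "main.py"]
    if test_only:
        cmd.append("--test_only")
    if pretrain is not None:
        cmd += ["--pretrain", pretrain]
    cmd += ["--GPU_ID", str(gpu_id)]
    if save_msg is not None:
        # Predictions are written to ./output/<save_msg>.
        cmd += ["--save_result", "--save_msg", save_msg]
    return cmd


if __name__ == "__main__":
    # Training on GPU 0 with the defaults from option.py.
    print(shlex.join(build_command(gpu_id=0)))
    # Testing a checkpoint and saving the predicted maps.
    print(shlex.join(build_command(
        gpu_id=0, pretrain="xxx/bal_bla.pt", test_only=True, save_msg="abc")))
```

The returned list can be passed directly to `subprocess.run` from the repository root.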

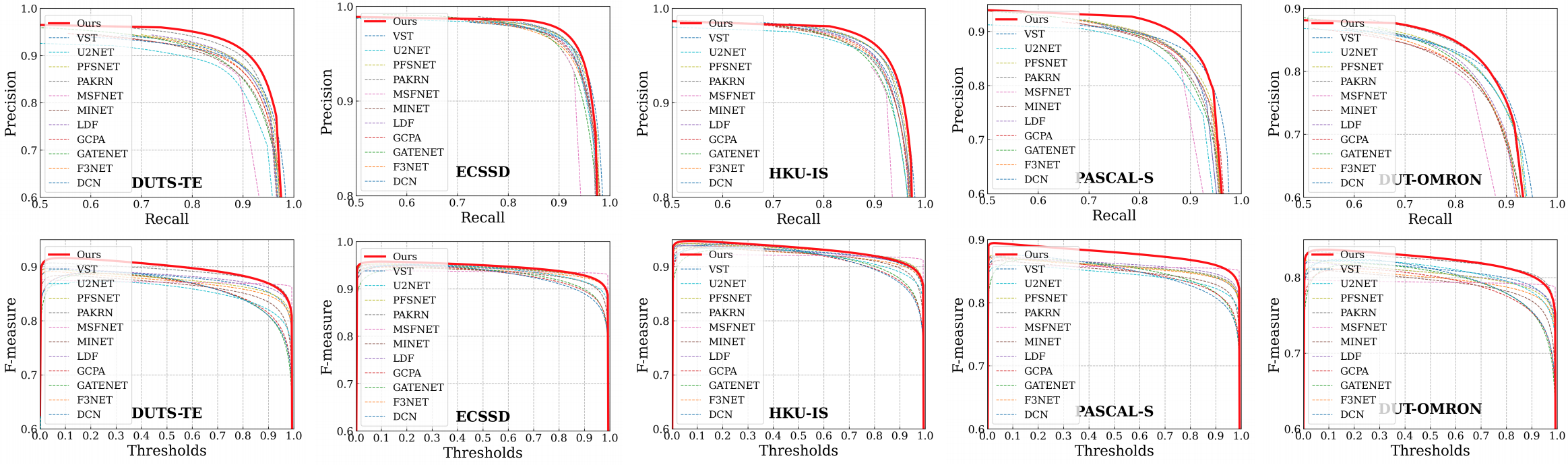

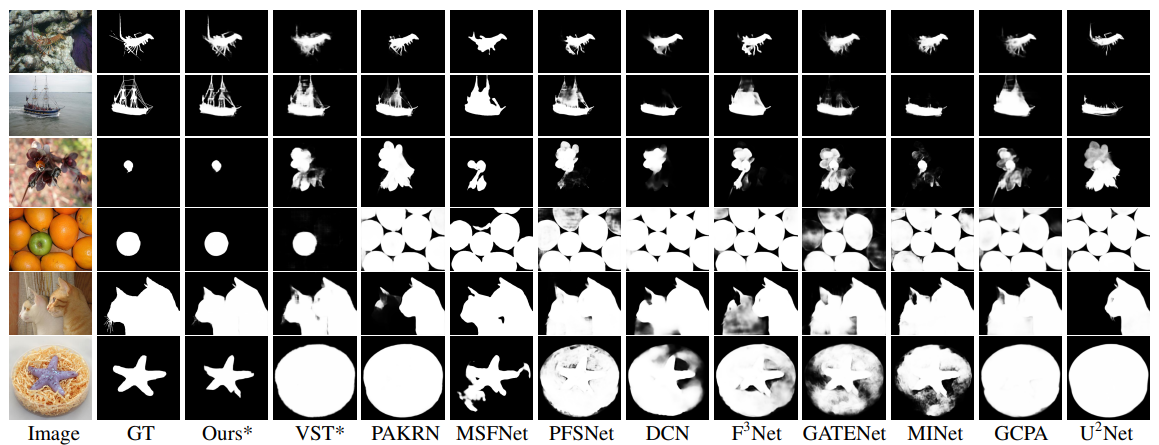

For PR curve and F curve, we use the code provided by this repo: [BASNet, CVPR-2019]

For MAE, F measure, E score and S score, we use the code provided by this repo: [F3Net, AAAI-2020]
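For readers unfamiliar with the metrics, MAE is the simplest one: the mean absolute difference between the predicted saliency map and the ground-truth mask, with both scaled to [0, 1]. The snippet below is a minimal pure-Python sketch of that standard definition. It is not the F3Net evaluation code, and it assumes both maps are 8-bit grayscale (values 0 to 255) given as equally sized nested lists.

```python
def mean_absolute_error(pred, mask):
    """MAE between two equally sized 2-D maps with values in [0, 255]."""
    same_shape = len(pred) == len(mask) and all(
        len(p_row) == len(m_row) for p_row, m_row in zip(pred, mask)
    )
    if not same_shape:
        raise ValueError("prediction and mask must have the same shape")

    total, count = 0.0, 0
    for pred_row, mask_row in zip(pred, mask):
        for p, m in zip(pred_row, mask_row):
            # Scale both values to [0, 1] before taking the difference.
            total += abs(p / 255.0 - m / 255.0)
            count += 1
    if count == 0:
        raise ValueError("maps must not be empty")
    return total / count


if __name__ == "__main__":
    pred = [[255, 255], [0, 0]]
    mask = [[255, 0], [0, 0]]
    print(mean_absolute_error(pred, mask))  # one of four pixels is fully wrong
```

With NumPy arrays already scaled to [0, 1], the same quantity is `np.abs(pred - mask).mean()`. Lower values are better.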

If you want to contribute or improve the code, simply submit a pull request to the develop branch.

@ARTICLE{10287608,

author={Yun, Yi Ke and Lin, Weisi},

journal={IEEE Transactions on Multimedia},

title={Towards a Complete and Detail-Preserved Salient Object Detection},

year={2023},

volume={},

number={},

pages={1-15},

doi={10.1109/TMM.2023.3325731}}