Official PyTorch implementation of "From Noisy Prediction to True Label: Noisy Prediction Calibration via Generative Model" (ICML 2022) by HeeSun Bae*, Seungjae Shin*, Byeonghu Na, JoonHo Jang, Kyungwoo Song, and Il-Chul Moon.

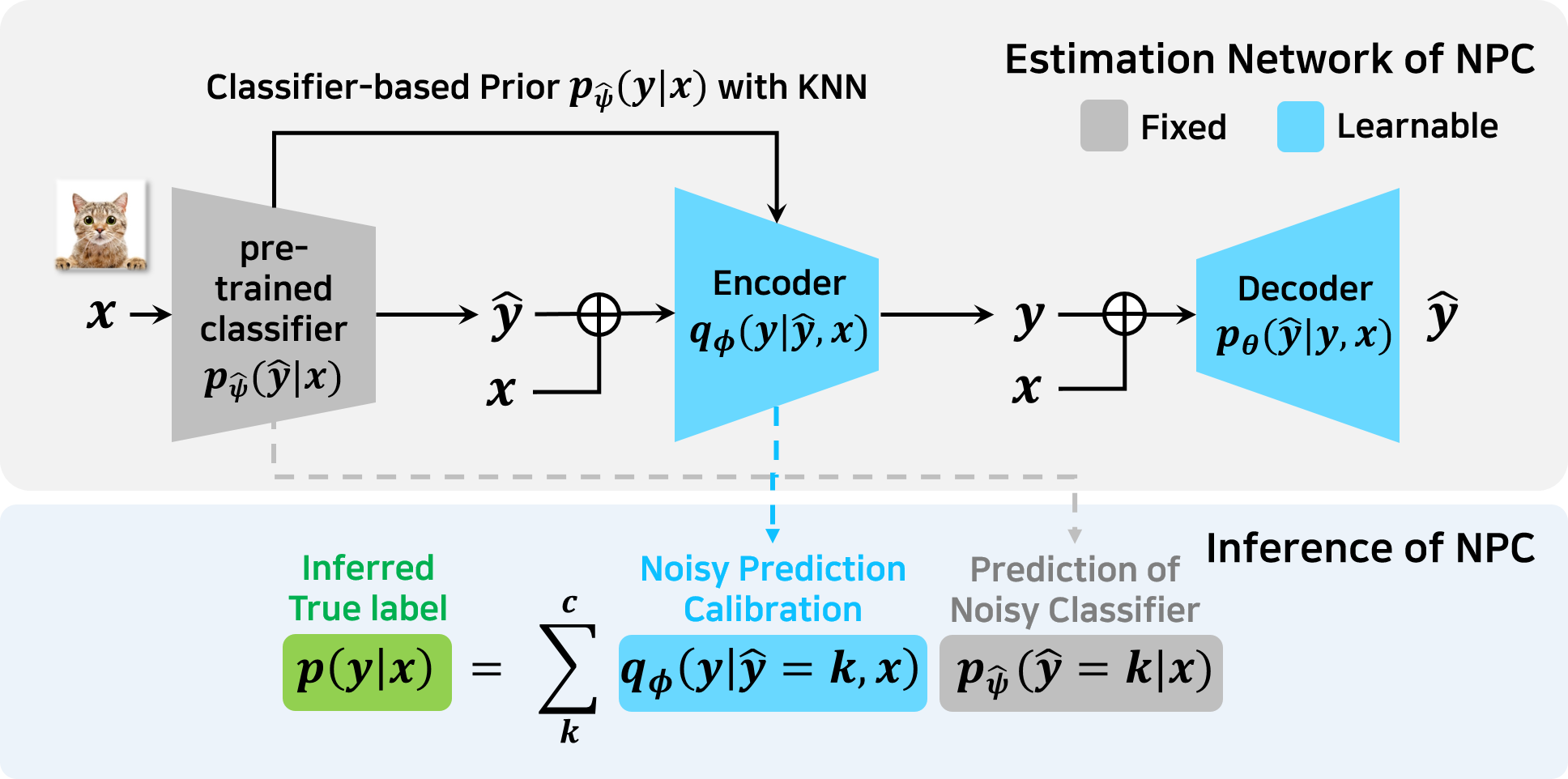

Our method, NPC, calibrates the prediction of an imperfectly pre-trained classifier into the true label by utilizing a deep generative model.

NPC operates as a post-processing module on top of a black-box classifier and requires no further access to the classifier's parameters.
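To illustrate the intuition only (this is not NPC itself), the sketch below infers a true label from a black-box classifier's noisy prediction with Bayes' rule, p(y | ŷ) ∝ p(ŷ | y) p(y), using nothing but the predicted label. NPC learns this relationship per instance with a deep generative model; here the prior `prior` and the class-level transition matrix `transition` are hand-picked, hypothetical values, and `calibrate_prediction` is a hypothetical helper that is not part of this repository.

```python
import numpy as np


def calibrate_prediction(noisy_pred: int, prior: np.ndarray, transition: np.ndarray) -> np.ndarray:
    """Return p(y | y_hat = noisy_pred) for every true class y.

    prior[y]         : hypothetical prior probability of true class y
    transition[y, j] : hypothetical probability that the classifier predicts j when the true label is y
    """
    # Bayes' rule: p(y | y_hat) is proportional to p(y_hat | y) * p(y).
    unnormalized = transition[:, noisy_pred] * prior
    return unnormalized / unnormalized.sum()


# Toy 3-class example: the black-box classifier often confuses class 0 with class 1.
prior = np.array([0.6, 0.3, 0.1])
transition = np.array([
    [0.5, 0.5, 0.0],  # true class 0 -> predicted as 0 or 1 equally often
    [0.1, 0.9, 0.0],  # true class 1 -> mostly predicted correctly
    [0.0, 0.0, 1.0],  # true class 2 -> always predicted correctly
])
posterior = calibrate_prediction(1, prior, transition)
print(posterior.round(3), posterior.argmax())
```

With these numbers, a prediction of class 1 is calibrated to class 0, because class 0 scores 0.6 × 0.5 = 0.30 against 0.3 × 0.9 = 0.27 for class 1.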

Install the required libraries.

We recommend running this code with Python >= 3.6.

pip install -r requirements.txt

Pretrained models are available on Dropbox.

Generate the synthetic noisy dataset.

python generate_noisy_data.py

We also provide the pre-processed datasets on Dropbox.

Train the classifier model.

python train_classifier.py --dataset MNIST --noise_type sym --noisy_ratio 0.2 --class_method no_stop --seed 0 --data_dir {your_data_directory}

This trains the base classifier with cross-entropy (CE) loss on MNIST with symmetric (`sym`) label noise at a 20% noise ratio.

We also provide other noise types:

- `clean`: no noise
- `sym`: symmetric
- `asym`: asymmetric
- `idnx`: instance-dependent
- `idn`: similarity-related instance-dependent
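For reference, the sketch below shows one common way to inject symmetric (`sym`) label noise: a fixed `noisy_ratio` fraction of labels is flipped to a different class chosen uniformly at random. This convention is an assumption for illustration; `add_symmetric_noise` is a hypothetical helper, and `generate_noisy_data.py` may follow a different convention (for example, flipping each label independently, or allowing a label to be resampled as itself).

```python
import numpy as np


def add_symmetric_noise(labels, noisy_ratio: float, num_classes: int, seed: int = 0) -> np.ndarray:
    """Hypothetical helper: flip a `noisy_ratio` fraction of labels to a different, uniformly chosen class."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    noisy = labels.copy()
    n = len(labels)
    num_flip = int(round(noisy_ratio * n))
    # Pick exactly num_flip distinct samples to corrupt.
    flip_idx = rng.choice(n, size=num_flip, replace=False)
    # Offsets lie in [1, num_classes - 1], so every flipped label differs from its original
    # and each of the other classes is equally likely.
    offsets = rng.integers(1, num_classes, size=num_flip)
    noisy[flip_idx] = (labels[flip_idx] + offsets) % num_classes
    return noisy


clean = np.repeat(np.arange(10), 100)  # 1000 toy labels over 10 classes
noisy = add_symmetric_noise(clean, noisy_ratio=0.2, num_classes=10)
print((noisy != clean).mean())
```

Because exactly `round(noisy_ratio * n)` labels are corrupted and none can be flipped back to itself, the realized noise rate matches `noisy_ratio` (20% of the 1000 toy labels here).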

Please refer to the code for the notation of each pre-training method (e.g., `vanilla` for early stopping).

Some of the provided baseline implementations were taken from the original authors' code, while others were reimplemented by us. As a result, their performance may differ slightly from the results reported for each method.

The generated dataset should be located in `{your_data_directory}`.

To save time, we also provide checkpoints of the pre-trained classifiers on Dropbox.

Compute the prior information from the pre-trained classifier.

python main_prior.py --dataset MNIST --noise_type sym --noisy_ratio 0.2 --class_method no_stop --seed 0 --data_dir {your_data_directory}

This computes the prior information from the base classifier trained with cross-entropy (CE) loss on MNIST with symmetric (`sym`) label noise at a 20% noise ratio.
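As a rough illustration of what a KNN-based prior can look like, the sketch below assigns each sample the normalized histogram of its k nearest neighbours' labels in a feature space, with each neighbour casting a one-hot vote. It is hypothetical: we assume, without confirmation from this README, that the prior is built from neighbours' labels (e.g., the classifier's predictions) and that `--knn_mode onehot` refers to one-hot voting; `--prior_norm` is not modeled, and `knn_label_prior` is not a function of this repository. Consult `main_prior.py` for the actual computation.

```python
import numpy as np


def knn_label_prior(features, labels, num_classes, k=10):
    """Hypothetical KNN prior: each row is the mean one-hot label of a sample's k nearest neighbours."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    # Pairwise squared Euclidean distances via ||a||^2 + ||b||^2 - 2 a.b (O(n^2) memory).
    sq_norms = (features ** 2).sum(axis=1)
    dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]  # (n, k) neighbour indices
    votes = np.eye(num_classes)[labels[neighbours]]  # (n, k, num_classes) one-hot votes
    return votes.mean(axis=1)  # (n, num_classes), each row sums to 1


rng = np.random.default_rng(0)
# Two well-separated toy clusters in 2-D, standing in for classifier features.
feats = np.vstack([rng.normal(0.0, 0.1, (20, 2)), rng.normal(5.0, 0.1, (20, 2))])
labels = np.array([0] * 20 + [1] * 20)
labels[0] = 1  # one mislabeled sample inside cluster 0
prior = knn_label_prior(feats, labels, num_classes=2, k=5)
print(prior[0])
```

The mislabeled sample 0 receives a prior concentrated on class 0, since all of its neighbours in the first cluster carry label 0. The brute-force distance matrix is O(n²) in memory, so a full dataset would need a batched or approximate nearest-neighbour search.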

Train NPC to calibrate the predictions of the pre-trained classifier.

python main_npc.py --dataset MNIST --noise_type sym --noisy_ratio 0.2 --class_method no_stop --post_method npc --knn_mode onehot --prior_norm 5 --data_dir {your_data_directory}

This trains NPC using the KNN prior and the corresponding base classifier trained with cross-entropy (CE) loss on MNIST with symmetric (`sym`) label noise at a 20% noise ratio.
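The three training steps share most of their flags, so they can be chained from one script. The sketch below is a hypothetical convenience wrapper (`build_commands` and `run_pipeline` are not part of this repository); every flag is copied verbatim from the commands in this README, including the absence of `--seed` in the `main_npc.py` call. It assumes the noisy dataset already exists in `data_dir`, either generated with `generate_noisy_data.py` or downloaded.

```python
import shlex
import subprocess


def build_commands(data_dir, dataset="MNIST", noise_type="sym", noisy_ratio=0.2,
                   class_method="no_stop", seed=0):
    """Return the train_classifier / main_prior / main_npc command lines as argument lists."""
    # Flags shared by all three steps, copied from the README commands.
    common = ["--dataset", dataset, "--noise_type", noise_type,
              "--noisy_ratio", str(noisy_ratio), "--class_method", class_method]
    return [
        ["python", "train_classifier.py", *common,
         "--seed", str(seed), "--data_dir", data_dir],
        ["python", "main_prior.py", *common,
         "--seed", str(seed), "--data_dir", data_dir],
        # The README's main_npc.py command passes no --seed, so none is added here.
        ["python", "main_npc.py", *common, "--post_method", "npc",
         "--knn_mode", "onehot", "--prior_norm", "5", "--data_dir", data_dir],
    ]


def run_pipeline(data_dir, dry_run=True, **kwargs):
    """Print each step; when dry_run is False, also execute it and stop at the first failure."""
    for cmd in build_commands(data_dir, **kwargs):
        # shlex.quote keeps the printed command copy-pasteable in a POSIX shell.
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit


if __name__ == "__main__":
    run_pipeline("/path/to/your_data_directory")  # dry run: only prints the commands
```

Calling `run_pipeline(..., dry_run=False)` executes the steps in order, so the prior is only computed once the classifier has finished training; keyword arguments such as `noise_type="asym"` override the defaults for all steps at once.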