A library of tools for lexicon-based Named Entity Recognition (NER) that uses word vector representations to expand the lexicon terms. Vecner is particularly helpful when: a) the lexicon contains only a few terms; b) you are interested in domain-specific NER; and c) a large unlabelled corpus is available.

For example, if the only word in the lexicon under the label sports is football, we can expect similar terms to include soccer, basketball and others. We can leverage this when the lexicon has only a few terms (see the examples below) by expanding the terms under sports with a word2vec (w2vec) model. This also works well for domain-specific problems where we have a corpus on which to train a w2vec model: the similar terms then become much more relevant to our application.
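The expansion idea can be illustrated with a minimal, self-contained sketch. It is not vecner's implementation: the tiny hand-written vectors are hypothetical stand-ins for a trained w2vec model, and `expand_lexicon`, `top_k` and `min_similarity` are illustrative names. Each lexicon term is expanded with its nearest neighbours by cosine similarity, the same kind of ranking Gensim's `most_similar` returns.

```python
import math
from typing import Dict, List

# Hypothetical toy embeddings standing in for a trained w2vec model.
TOY_VECTORS: Dict[str, List[float]] = {
    "football":   [0.90, 0.10, 0.00],
    "soccer":     [0.85, 0.15, 0.05],
    "basketball": [0.80, 0.20, 0.10],
    "burger":     [0.05, 0.90, 0.20],
    "pizza":      [0.10, 0.85, 0.25],
}


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def expand_lexicon(
    lexicon: Dict[str, List[str]],
    vectors: Dict[str, List[float]],
    top_k: int = 2,
    min_similarity: float = 0.9,
) -> Dict[str, List[str]]:
    """Add up to top_k sufficiently similar neighbours of every known term."""
    expanded = {}
    for label, terms in lexicon.items():
        new_terms = list(terms)
        for term in terms:
            if term not in vectors:
                continue  # out-of-vocabulary terms cannot be expanded
            # rank every other vocabulary word by similarity to the term
            neighbours = sorted(
                ((cosine(vectors[term], vec), word)
                 for word, vec in vectors.items() if word != term),
                reverse=True,
            )
            for sim, word in neighbours[:top_k]:
                if sim >= min_similarity and word not in new_terms:
                    new_terms.append(word)
        expanded[label] = new_terms
    return expanded


print(expand_lexicon({"sports": ["football"]}, TOY_VECTORS))
# → {'sports': ['football', 'soccer', 'basketball']}
```

With a real model, the toy dictionary would be replaced by the model's vocabulary, and the similarity cut-off decides how aggressively the lexicon grows.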

Vecner supports:

- Exact entity matching based on spaCy's PhraseMatcher.
- Finding entities based on terms similar to those in the lexicon.
- Chunking by:
  - spaCy's noun chunking;
  - entity edges from a dependency graph;
  - a user-defined script (see the custom chunking example below).
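To make the exact-matching idea concrete, here is a minimal pure-Python sketch. It is not how vecner or spaCy's PhraseMatcher work internally (they operate on spaCy tokens); it uses naive regex tokenisation and only shows the idea: each lexicon phrase is looked up as a contiguous sequence of lower-cased tokens, and every hit is reported with the entity dictionary layout (`start`, `end`, `text`, `label`, `idx`) described in the `custom_file.py` template. The function name `exact_match` is hypothetical.

```python
import re
from typing import Any, Dict, List


def exact_match(text: str, lexicon: Dict[str, List[str]]) -> List[Dict[str, Any]]:
    """Hypothetical helper: find lexicon phrases as exact, case-insensitive token runs."""
    # (token, start_char, end_char) triples; punctuation is dropped
    tokens = [(m.group().lower(), m.start(), m.end())
              for m in re.finditer(r"[\w-]+", text)]
    words = [tok for tok, _, _ in tokens]
    ents = []
    for label, phrases in lexicon.items():
        for phrase in phrases:
            target = phrase.lower().split()
            n = len(target)
            for i in range(len(words) - n + 1):
                if words[i:i + n] == target:
                    start, end = tokens[i][1], tokens[i + n - 1][2]
                    ents.append({
                        "start": start,           # first character of the entity
                        "end": end,               # character just after the entity
                        "text": text[start:end],  # original casing is kept
                        "label": label,
                        "idx": i,                 # token index of the first word
                    })
    return sorted(ents, key=lambda e: e["start"])


text = "The burger had absolutely no flavor, the place was dirty."
lexicon = {"food": ["flavor"], "general": ["dirty", "the place"]}
for ent in exact_match(text, lexicon):
    print(ent)
```

Exact matching never misses a lexicon term that appears verbatim, but it cannot find synonyms; that gap is what the similarity-based matchers address.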

You can install vecner by running:

```bash
python setup.py install
```

This example shows how to use word vectors pre-trained on a general corpus (here GloVe vectors loaded with Gensim's downloader) to perform NER with vecner (run this in Jupyter).

from vecner import ExactMatcher, ExtendedMatcher

import gensim.downloader as api

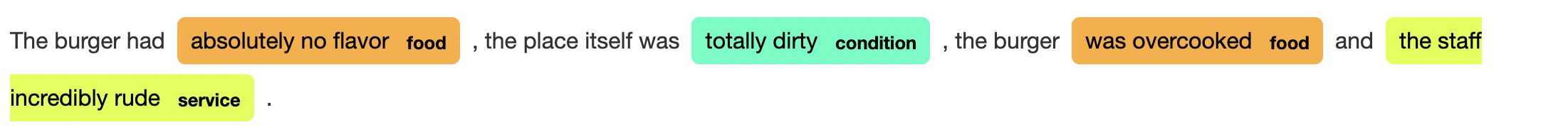

text = """

The burger had absolutely no flavor,

the place itself was totally dirty, the burger

was overcooked and the staff incredibly rude.

"""

# loads the pretrained on general corpora model

model = api.load("glove-wiki-gigaword-100")

# sense check and test

model.most_similar('food')

# custom defined lexicon

food_lexicon = {

'service' : [

'rude',

'service',

'friendly'

],

'general' : [

'clean',

'dirty',

'decoration',

'atmosphere'

],

'food' : [

'warm',

'cold',

'flavor',

'tasty',

'stale',

'disgusting',

'delicious'

]

}

# init the exact matcher to not miss

# any entities from the lexicon if in text

matcher = ExactMatcher(

food_lexicon,

spacy_model = 'en_core_web_sm'

)

# init the Extended Matcher, which expands the lexicon

# using the w2vec model based on similar terms

# and then matches them in the sequence

extendedmatcher = ExtendedMatcher(

food_lexicon,

w2vec_model = model,

in_pipeline = True,

spacy_model = 'en_core_web_sm',

chunking_method = 'edge_chunking',

sensitivity = 20

)

# exact mapping

output = matcher.map(

text = text

)

# extended matching mapping

output = extendedmatcher.map(

document = output['doc'],

ents = output['ents'],

ids = output['ids']

)This example shows how to use a custom trained Gensim w2vec model on your own corpora to perform NER with vecner (run this in jupyter).

from vecner import ExactMatcher, ThresholdMatcher

from gensim.models import KeyedVectors

text = """

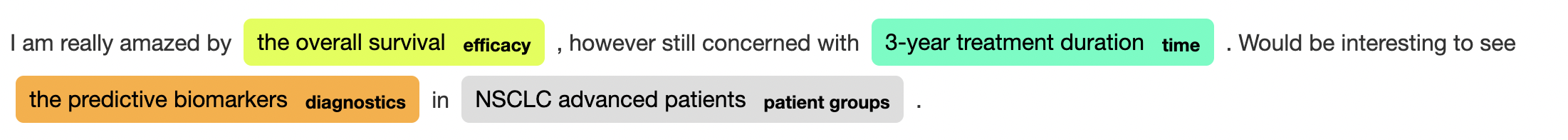

I am really amazed by the overall survival,

however still concerned with 3-year treatment duration.

Would be interesting to see the predictive biomarkers in NSCLC advanced patients.

"""

# loads the pre-trained Gensim model

model = KeyedVectors.load('path_to_model')

# check that model was loaded properly

model.most_similar('pfs')

# custom defined lexicon

bio_lexicon = {

'efficacy' : [

'overall survival',

'pfs'

],

'diagnostics' : [

'marker'

],

'time' : [

'year',

'month'

],

'patient groups' : [

'squamous',

'resectable'

]

}

# init the Exact Matcher for finding entities

# as exactly mentioned in the lexicon

matcher = ExactMatcher(

bio_lexicon,

spacy_model='en_core_web_sm'

)

# init the ThresholdMatcher which finds entities

# based on a cosine similarity threshold

thresholdmatcher = ThresholdMatcher(

bio_lexicon,

w2vec_model=model,

in_pipeline=True,

spacy_model='en_core_web_sm',

chunking_method='noun_chunking',

threshold = 0.55

)

# map exact entities

output = matcher.map(

text = text

)

# use in pipeline to map inexact entities

output = thresholdmatcher.map(

document = output['doc'],

ents = output['ents'],

ids = output['ids']

)from vecner import ExactMatcher, ThresholdMatcher

from gensim.models import KeyedVectors

text = """

I am really amazed by the overall survival,

however still concerned with 3-year treatment duration.

Would be interesting to see the predictive biomarkers in NSCLC advanced patients.

"""

# loads the pre-trained Gensim model

model = KeyedVectors.load('path_to_model')

# check that model was loaded properly

model.most_similar('pfs')

# custom defined lexicon

bio_lexicon = {

'efficacy' : [

'overall survival',

'pfs'

],

'diagnostics' : [

'marker'

],

'time' : [

'year',

'month'

],

'patient groups' : [

'squamous',

'resectable'

]

}

# init the ThresholdMatcher which finds entities

# based on a cosine similarity threshold

thresholdmatcher = ThresholdMatcher(

bio_lexicon,

w2vec_model=model,

in_pipeline=False,

spacy_model='en_core_web_sm',

chunking_method='custom_chunking',

threshold = 0.55

)

# use in pipeline to map inexact entities

output = thresholdmatcher.map(

document = text

)where the custom script from which this reads from, must:

- be named `custom_file.py`;
- define the function `rule_chunker`, with the input arguments:
  - `doc`
  - `ents`
  - `ids`
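As an illustration only, here is one hypothetical `rule_chunker` that satisfies these requirements: it merges neighbouring entities that share a label and are separated only by spaces or hyphens, so two adjacent `efficacy` hits `overall` and `survival` become a single `overall survival` entity. The merging rule is made up for this example and is not part of vecner. It assumes `doc.text` holds the raw text (as on a spaCy `Doc`) and that `ents` follows the dictionary layout in the template below; `ids` is accepted but unused.

```python
from types import SimpleNamespace
from typing import Any, Dict, List, Optional


def rule_chunker(
    doc: object,
    ents: Optional[List[Dict[str, Any]]],
    ids: Optional[Dict[int, str]] = None,
) -> List[Dict[str, Any]]:
    """Hypothetical rule: merge neighbouring entities that share a label."""
    if not ents:
        return []
    text = doc.text  # a spaCy Doc exposes its raw text as .text
    ordered = sorted(ents, key=lambda e: e["start"])
    new_ents = [dict(ordered[0])]  # copy so the caller's dicts are not mutated
    for ent in ordered[1:]:
        last = new_ents[-1]
        gap = text[last["end"]:ent["start"]]
        # merge when labels match and only spaces/hyphens separate the two
        if ent["label"] == last["label"] and gap.strip(" -") == "":
            last["end"] = max(last["end"], ent["end"])
            last["text"] = text[last["start"]:last["end"]]
        else:
            new_ents.append(dict(ent))
    return new_ents


# Usage with a stand-in object; in vecner, doc would be a spaCy Doc.
doc = SimpleNamespace(text="I am amazed by the overall survival rate.")
ents = [
    {"start": 19, "end": 26, "text": "overall", "label": "efficacy", "idx": 5},
    {"start": 27, "end": 35, "text": "survival", "label": "efficacy", "idx": 6},
]
print(rule_chunker(doc, ents))
# → [{'start': 19, 'end': 35, 'text': 'overall survival', 'label': 'efficacy', 'idx': 5}]
```

The merged entity keeps the `idx` of its first word, matching the template's definition of `idx`.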

You can experiment with the prepared example script: to run it, rename it to `custom_file.py` and place it in the directory from which you run your main script. To write your own, follow the template below:

## Template - custom_file.py

```python
"""
Template for the custom rules file
"""

from typing import List, Dict, Any, Optional


def rule_chunker(
    doc: object,
    ents: Optional[List[Dict[str, Any]]],
    ids: Optional[Dict[int, str]] = None
) -> List[Dict[str, Any]]:
    """
    A custom chunker and/or entity expander.

    Args:
        doc (object): a spaCy Doc object
        ents (List[Dict[str, Any]]): the entities -> [
            {
                'start' : int,
                'end' : int,
                'text' : str,
                'label' : str,
                'idx' : int
            },
            ...
        ]
            where 'start' is the start character in the sequence,
            'end' the end character in the sequence,
            'text' the entity text string,
            'label' the entity name,
            'idx' the position of the first word of the entity in the sequence
        ids (Dict[int, str]): the entity idx's and their labels for easy
            recognition -> e.g. {
                1 : 'time',
                5 : 'cost',
                ...
            }

    Returns:
        List[Dict[str, Any]]
    """
    new_ents = []
    # replace this with your own rules that build new_ents
    raise NotImplementedError
    return new_ents
```

Third-party library licenses for the dependencies:

- spaCy: MIT License
- Gensim: LGPL-2.1 license
- NLTK: Apache License 2.0