qdurllm (Qdrant URLs and Large Language Models) is a local search engine that lets you select URL content and upload it to a vector database. After that, you can search, retrieve and chat with this content.

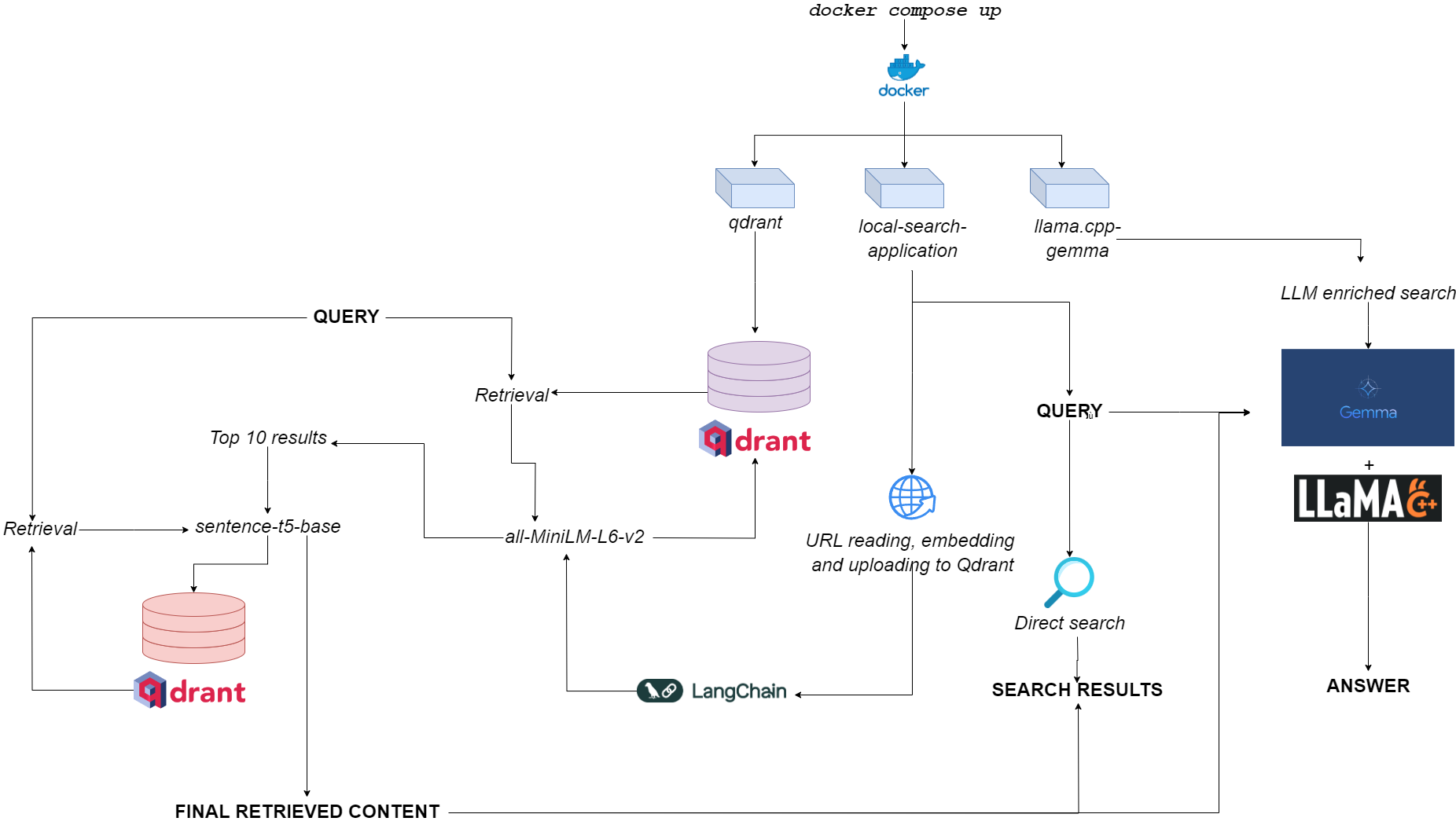

This is provisioned through a multi-container Docker application, leveraging Qdrant, Langchain, llama.cpp, quantized Gemma and Gradio.

Head over to the demo space on HuggingFace🦀

The only requirements are Docker and Docker Compose.

If you don't have them, make sure to install them here.

You can install the application by cloning the GitHub repository:

    git clone https://github.com/AstraBert/qdurllm.git
    cd qdurllm

Or you can simply paste the following text into a compose.yaml file:

    networks:
      mynet:
        driver: bridge
    services:
      local-search-application:
        image: astrabert/local-search-application
        networks:
          - mynet
        ports:
          - "7860:7860"
      qdrant:
        image: qdrant/qdrant
        ports:
          - "6333:6333"
        volumes:
          - "./qdrant_storage:/qdrant/storage"
        networks:
          - mynet
      llama_server:
        image: astrabert/llama.cpp-gemma
        ports:
          - "8000:8000"
        networks:
          - mynet

Place the file in whatever directory you want in your file system.

Prior to running the application, you can optionally pull all the needed images from Docker Hub:

    docker pull qdrant/qdrant
    docker pull astrabert/llama.cpp-gemma
    docker pull astrabert/local-search-application

When launched (see Usage), the application runs three containers:

- qdrant (port 6333): serves as the vector database provider for semantic search-based retrieval
- llama.cpp-gemma (port 8000): an implementation of a quantized Gemma model provided by LMStudio and Google, served with the llama.cpp server. It handles text generation, enriching the user's search experience.
- local-search-application (port 7860): a Gradio tabbed interface with:
  - The possibility to upload one or multiple contents by specifying the URL (thanks to Langchain)
  - The possibility to chat with the uploaded URLs thanks to llama.cpp-gemma
  - The possibility to perform a direct search that leverages double-layered retrieval with all-MiniLM-L6-v2 (which identifies the 10 best matches) and sentence-t5-base (which re-encodes the 10 best matches and extracts the best hit from them). This is the same RAG implementation used in combination with llama.cpp-gemma.

Wanna see how double-layered RAG performs compared to single-layered RAG? Head over here!
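The double-layered retrieval described above can be sketched in a few lines. This is only an illustration of the two-stage idea, not the application's code: `word_encoder` and `trigram_encoder` are hypothetical toy stand-ins for all-MiniLM-L6-v2 and sentence-t5-base, and `double_layered_search` is a name made up for this example. The first encoder shortlists the `top_k` best matches, then the second encoder re-encodes only that shortlist and picks the best hit.

```python
import math
from collections import Counter
from typing import Callable, List, Mapping

Vector = Mapping[str, float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def word_encoder(text: str) -> Vector:
    """Toy stand-in for the first-layer model: bag of lowercase words."""
    return dict(Counter(text.lower().split()))


def trigram_encoder(text: str) -> Vector:
    """Toy stand-in for the second-layer model: bag of character trigrams."""
    s = text.lower()
    return dict(Counter(s[i:i + 3] for i in range(len(s) - 2)))


def double_layered_search(
    query: str,
    docs: List[str],
    first: Callable[[str], Vector] = word_encoder,
    second: Callable[[str], Vector] = trigram_encoder,
    top_k: int = 10,
) -> str:
    """Return the best document after a shortlist pass and a re-encoding pass."""
    if not docs:
        raise ValueError("docs must not be empty")
    # Layer 1: score every document with the first encoder, keep the top_k.
    q1 = first(query)
    shortlist = sorted(docs, key=lambda d: cosine(q1, first(d)), reverse=True)[:top_k]
    # Layer 2: re-encode only the shortlist with the second encoder, keep the best hit.
    q2 = second(query)
    return max(shortlist, key=lambda d: cosine(q2, second(d)))


docs = [
    "Qdrant is a vector database",
    "Gradio builds web interfaces",
    "Gemma is a language model",
]
print(double_layered_search("vector database", docs, top_k=2))
```

The second layer only ever sees `top_k` documents, so a slower, more precise encoder can be used there without re-scoring the whole collection.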

The overall computational burden is light enough to make the application run not only GPUless, but also with low RAM availability (>=8GB, although it can take up to 10 mins for Gemma to respond on 8GB RAM).

You can start the application with the following (really simple) command, which has to be run within the same directory where you stored your compose.yaml file:

    docker compose up -d

If you've already pulled all the images, you'll find the application running at http://localhost:7860 or http://0.0.0.0:7860 in less than a minute.

If you have not pulled the images, you'll have to wait until they finish downloading before you can use the application.
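If you'd rather not refresh the browser while waiting, a small readiness check can poll the three published ports. This is a minimal sketch, assuming the port mappings from the compose.yaml above and that the containers are reachable on localhost; `port_is_open` and `wait_for_services` are helper names invented for this example. Note that an open port only shows that a container is listening, not that Gemma has finished loading.

```python
import socket
import time
from typing import Dict, Mapping

# Host ports published in compose.yaml (service name -> port).
SERVICES = {"local-search-application": 7860, "qdrant": 6333, "llama_server": 8000}


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_services(
    services: Mapping[str, int],
    host: str = "localhost",
    max_wait: float = 300.0,
    interval: float = 2.0,
) -> Dict[str, bool]:
    """Poll until every port accepts connections or max_wait seconds elapse."""
    deadline = time.monotonic() + max_wait
    status = {name: False for name in services}
    while True:
        for name, port in services.items():
            if not status[name]:
                status[name] = port_is_open(host, port)
        if all(status.values()) or time.monotonic() >= deadline:
            return status
        time.sleep(interval)
```

Calling `wait_for_services(SERVICES)` returns a dictionary that maps each service name to `True` once its port accepts connections, or `False` if it was still unreachable when the time limit expired.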

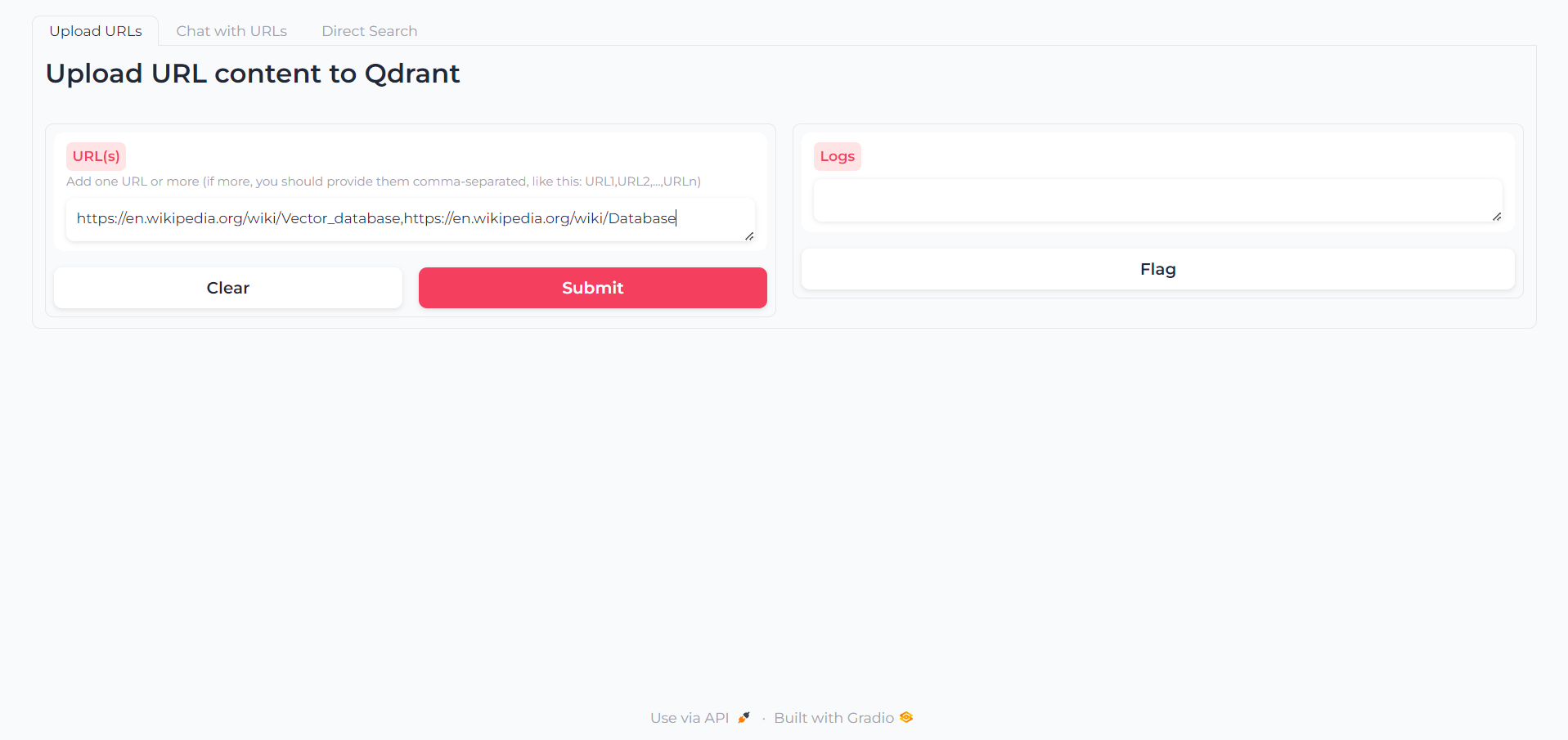

Once the app is loaded, you'll find a first tab in which you can write the URLs whose content you want to interact with:

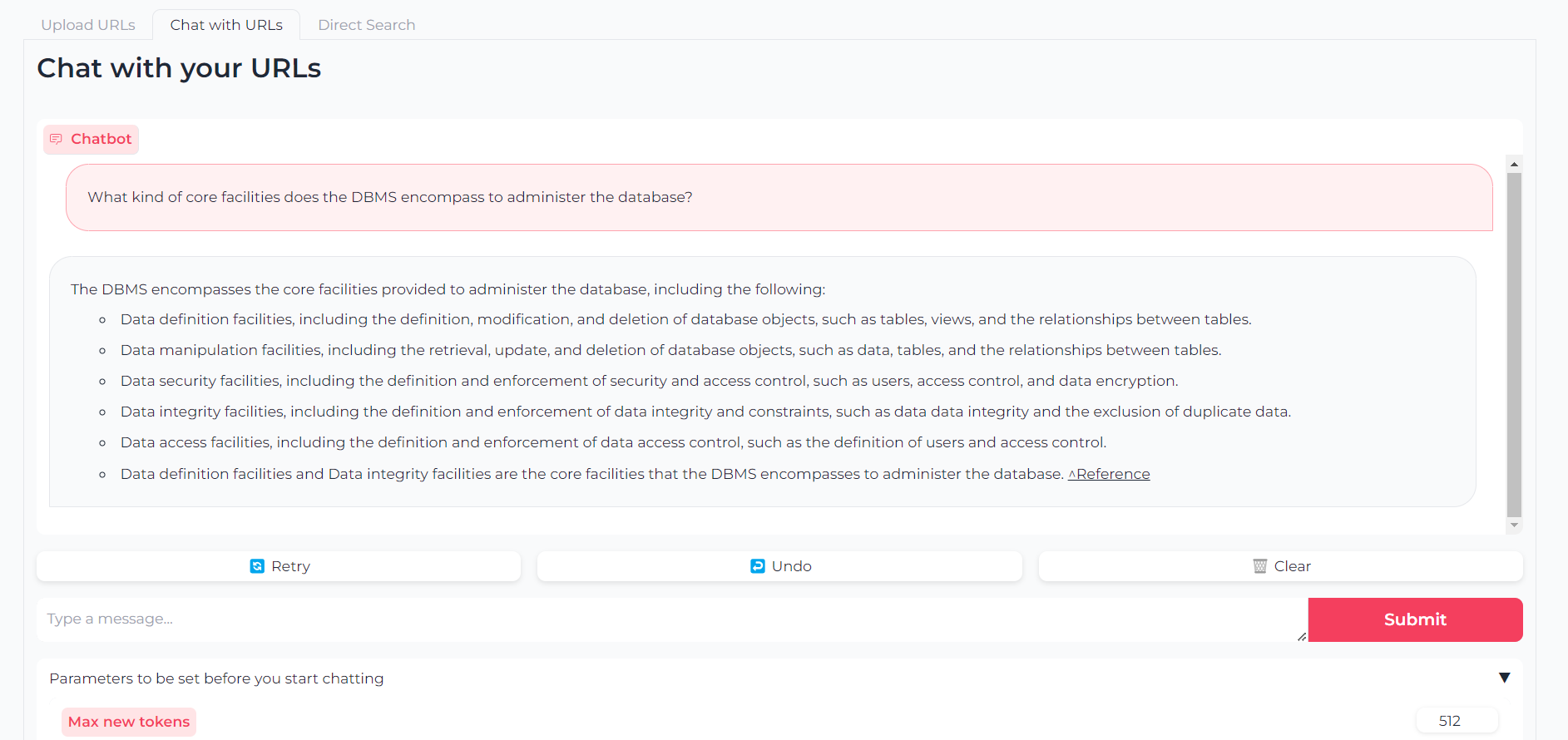

Now that your URLs are uploaded, you can either chat with their content through llama.cpp-gemma:

Note that you can also set parameters like maximum output tokens, temperature, repetition penalty and generation seed.

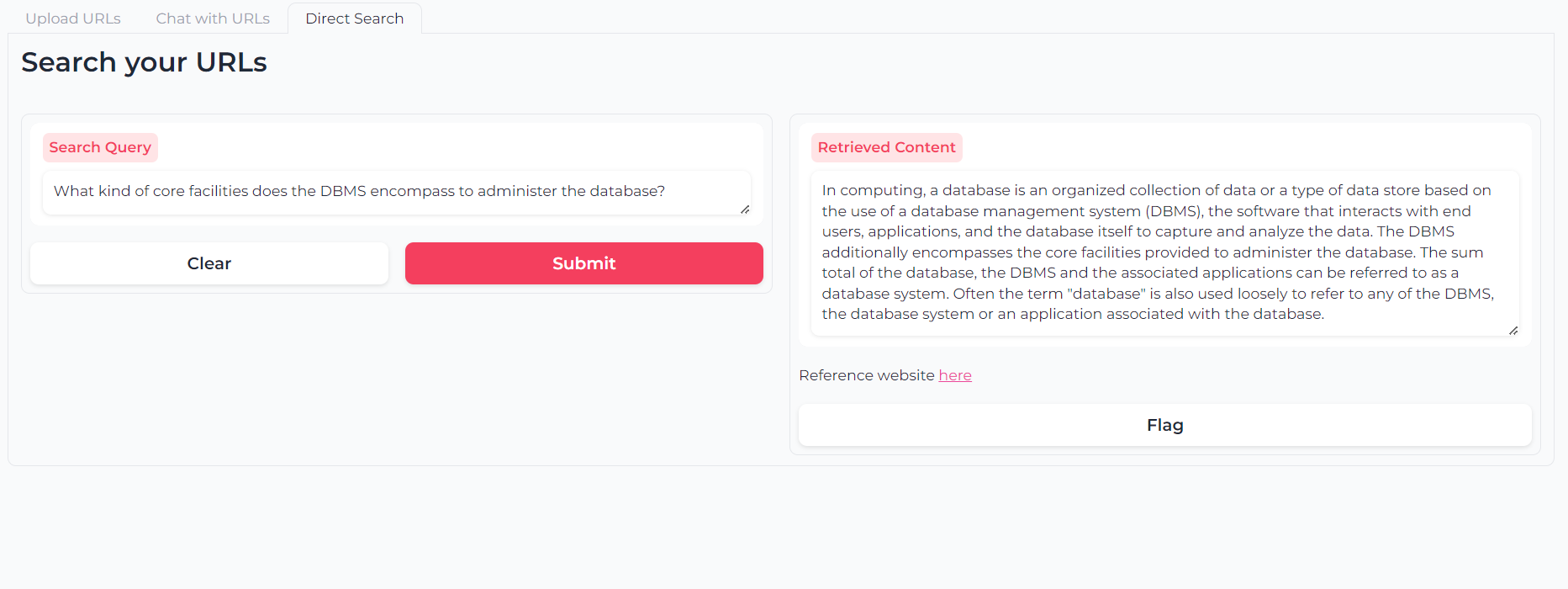

Or you can use double-layered-retrieval semantic search to query your URL content(s) directly.

The software is (and will always be) open-source, provided under the MIT license.

Anyone can use, modify and redistribute any portion of it, as long as the author, Astra Clelia Bertelli, is cited.

Contributions are always more than welcome! Feel free to flag issues, open PRs or contact the author to suggest any changes, request features or improve the code.

If you found the application useful, please consider funding it in order to allow improvements!