This repository contains an implementation of a Retrieval-Augmented Generation (RAG) system built with LangChain and LangSmith. It uses LangChain to build the retrieval and generation pipelines and LangSmith to trace and monitor them, with the goal of generating accurate, context-aware responses.

Retrieval-Augmented Generation (RAG) combines the strengths of retrieval-based and generation-based models to improve the accuracy and relevance of generated responses. This project integrates LangChain and LangSmith to create a powerful RAG system capable of handling complex queries and generating informative answers.

- Retrieval-Augmented Generation: Combines retrieval and generation techniques for better response quality.

- LangChain Integration: Utilizes LangChain for advanced language processing and generation.

- LangSmith Integration: Employs LangSmith to trace and monitor LLM calls.

- Modular Design: Easy to extend and customize for various use cases.

RAG systems have a wide range of applications across various domains. Here are some key use cases:

- Question Answering Systems: Providing accurate and detailed answers by retrieving relevant documents or snippets and generating coherent responses.

- Customer Support Chatbots: Enhancing chatbot responses by integrating real-time data retrieval from FAQs, knowledge bases, and support documents.

- Document Summarization: Creating concise summaries by retrieving key information from long documents and generating coherent summaries.

- Content Creation: Assisting in writing articles, reports, or creative content by retrieving relevant information and generating coherent narratives.

- Personalized Recommendations: Generating personalized suggestions by retrieving user-specific data and generating tailored recommendations.

- Educational Tools: Providing detailed explanations or tutoring by retrieving educational resources and generating informative responses.

- Medical and Legal Assistance: Generating responses based on retrieved medical or legal documents to assist professionals with up-to-date information.

- Code Generation and Documentation: Assisting developers by retrieving relevant code snippets or documentation and generating comprehensive explanations or code completions.

- Interactive Storytelling: Creating dynamic narratives by retrieving contextually relevant story elements and generating continuous storylines.

- Translation and Localization: Enhancing translation accuracy by retrieving contextually similar sentences or phrases and generating precise translations.

- Market Research and Analysis: Summarizing market reports and retrieving relevant data points to generate comprehensive market analysis.

- Scientific Research: Assisting researchers by retrieving relevant scientific papers and generating summaries or explanations of findings.

- E-commerce and Product Search: Enhancing product search capabilities by retrieving relevant product information and generating detailed descriptions or comparisons.

- Travel Planning and Recommendations: Providing personalized travel recommendations by retrieving relevant travel guides and generating tailored itineraries.

- Financial Analysis: Generating financial reports and insights by retrieving relevant financial data and documents.

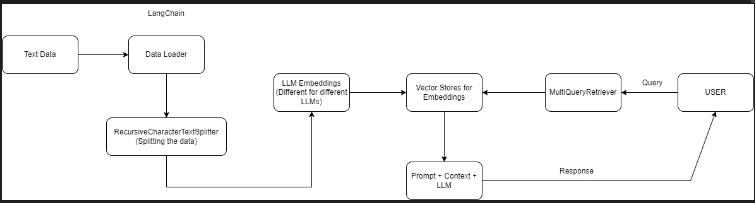

The repository contains two pipelines:

a. RAG_pdf_Ollama (RAG document search using Ollama and OpenAI)

- Extract text from a PDF (this project uses a single PDF, but any number of documents can be used).

- Split the text into chunks of size k with an overlap of w (this project uses 800 and 200).

- Extract (source, relation, target) triples from the chunks and create a knowledge store.

- Extract embeddings for the nodes and relationships (the embedding model differs between OpenAI and Ollama).

- Store the text and vectors in a vector database (this project uses the Chroma vector store).

- Load a pre-configured question-answering chain from LangChain to enable question answering.

- Query the knowledge store (you can also supply prompt templates available in LangChain or custom prompt templates).
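The chunking step above can be illustrated with a minimal sketch in plain Python. It is not the repository's code and not LangChain's splitter (which also tries to break on separators such as paragraphs); the function name `chunk_text` is hypothetical, and it assumes sizes are measured in characters, using the 800/200 values mentioned above.

```python
def chunk_text(text: str, chunk_size: int = 800, overlap: int = 200) -> list[str]:
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# Hypothetical sample input: 2000 characters of dummy text.
sample = "x" * 2000
chunks = chunk_text(sample)
print([len(c) for c in chunks])
```

The overlap means the last 200 characters of one chunk reappear at the start of the next, so a sentence cut at a chunk boundary is still seen whole in at least one chunk.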

b. RAG_Q&A_OpenAI

- Extract text data from web pages (this project uses Beautiful Soup).

- Load the extracted text.

- Split the text (chunk_size=1000, chunk_overlap=200).

- Embedding generation: extract embeddings for the nodes and relationships (the embedding model differs between OpenAI and Ollama).

- Store the text and its vectors in a vector database (this project uses the Chroma vector store).

- Use the vector store as the retriever.

- Load a pre-configured RAG prompt from LangChain Hub.

- Use LangChain Expression Language (LCEL) to define the processing pipeline for the RAG system.

- Query the knowledge store: use LangChain's `invoke` method to run the chain on a given input (here, the query).
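To make the retrieve-then-prompt idea concrete, here is a small sketch in plain Python. It is an illustration only, not the Chroma or LangChain API: the names `cosine_similarity`, `retrieve`, and `build_prompt` are hypothetical, the three-dimensional vectors are toy values standing in for real embeddings, and the prompt wording is an assumption rather than the prompt pulled from LangChain Hub.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, store, k=2):
    """Return the texts of the k stored entries most similar to query_vec."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question, context_chunks):
    """Place the retrieved chunks in front of the question."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy "vector store": (text, embedding) pairs with made-up vectors.
store = [
    ("Chroma stores vectors.", [1.0, 0.0, 0.0]),
    ("Ollama runs models locally.", [0.0, 1.0, 0.0]),
    ("LCEL composes chains.", [0.0, 0.0, 1.0]),
]
query_vec = [0.9, 0.1, 0.0]  # pretend embedding of the question
top = retrieve(query_vec, store, k=2)
prompt = build_prompt("Where are vectors stored?", top)
print(prompt)
```

In the actual pipeline the embedding model produces the vectors, the vector store performs the similarity search, and the resulting prompt is passed to the LLM.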

To set up the project locally, follow these steps:

- Clone the repository:

      git clone https://github.com/AjayKrishna76/RAG.git
      cd RAG

- Create a virtual environment:

      python -m venv venv
      source venv/bin/activate  # On Windows, use `venv\Scripts\activate`

- Install dependencies:

      pip install -r requirements.txt

- Set up environment variables: create a `.env` file in the root directory and add your API keys and other configuration settings:

      LANGCHAIN_API_KEY=<your_langchain_api_key>
      LANGSMITH_API_KEY=<your_langsmith_api_key>
      LANGCHAIN_ENDPOINT='https://api.smith.langchain.com'
      OPENAI_API_KEY=<your_openai_api_key>
      LANGCHAIN_TRACING_V2='true'

- Install Ollama: go to https://ollama.com/download and download Ollama, which lets you run Llama and other models locally. Then pull the models you need, for example:

      ollama pull llama3
      ollama pull mxbai-embed-large
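Before running either pipeline, it can help to confirm that the variables from the `.env` step are actually visible to Python. The sketch below is a hypothetical helper, not part of the repository; it assumes the values have already been loaded into the process environment (for example by a tool such as python-dotenv, or by exporting them in the shell).

```python
import os

# Variable names taken from the .env example in the setup steps.
REQUIRED_VARS = [
    "LANGCHAIN_API_KEY",
    "LANGSMITH_API_KEY",
    "LANGCHAIN_ENDPOINT",
    "OPENAI_API_KEY",
    "LANGCHAIN_TRACING_V2",
]


def missing_env_vars(required, env=None):
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


missing = missing_env_vars(REQUIRED_VARS)
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("All required environment variables are set.")
```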

Vector Store

- Chromadb

LLMs

- OpenAI

- Llama

Embedding Models

Embed the data as vectors in a vector store; this store is then used to retrieve the data.

- OpenAI: `embedding=OpenAIEmbeddings()`

- Ollama: `OllamaEmbeddings(model="llama2")`

- LangChain Expression Language (LCEL) is a declarative way to compose chains. According to the LangChain documentation, LCEL was designed to move prototypes into production with no code changes, from the simplest "prompt + LLM" chain to complex chains with hundreds of steps.
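The composition idea behind LCEL can be sketched in plain Python. This is not LangChain's implementation: the `Step` class and the three stand-in steps are hypothetical, and the "LLM" here simply upper-cases its input. It only shows how the `|` operator can chain steps and how `invoke` runs them in order.

```python
class Step:
    """Wraps a function so that steps can be chained with the | operator."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # (a | b).invoke(x) is equivalent to b.invoke(a.invoke(x))
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value):
        return self.func(value)


# Hypothetical stand-ins for a prompt template, an LLM, and an output parser.
format_prompt = Step(lambda question: f"Question: {question}")
fake_llm = Step(lambda prompt: prompt.upper())
parse_output = Step(lambda text: text.strip())

chain = format_prompt | fake_llm | parse_output
result = chain.invoke("what is rag?")
print(result)  # QUESTION: WHAT IS RAG?
```

Because every step exposes the same `invoke` interface, any step can be swapped for another without touching the rest of the chain.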

Track the model using LangSmith UI

- All LLMs used through LangChain come with built-in LangSmith tracing, enabled here by `LANGCHAIN_TRACING_V2='true'` in the `.env` file.

- Any LLM invocation (whether it’s nested in a chain or not) will automatically be traced.

- A trace will include inputs, outputs, latency, token usage, invocation params, environment params, and more.
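To give a feel for what a trace record holds, the sketch below captures the inputs, output, and latency of a function call with a decorator. It is a toy illustration and not how LangSmith works internally: the `traced` decorator, the in-memory `TRACES` list, and `fake_llm` are all hypothetical, and token usage and environment parameters are left out.

```python
import functools
import time

TRACES = []  # in-memory stand-in for a tracing backend


def traced(func):
    """Record inputs, output, and latency of each call to `func`."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        output = func(*args, **kwargs)
        TRACES.append({
            "name": func.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": output,
            "latency_s": time.perf_counter() - start,
        })
        return output

    return wrapper


@traced
def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    return f"echo: {prompt}"


answer = fake_llm("hello")
print(TRACES[0]["name"], TRACES[0]["output"])
```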

If you'd like to contribute to this repository, follow these steps:

- Fork the repository.

- Create a new branch (`git checkout -b feature/new-feature`).

- Make your changes and commit them (`git commit -am 'Add new feature'`).

- Push to the branch (`git push origin feature/new-feature`).

- Create a new pull request.

For any questions or feedback, feel free to reach out: Email: [email protected]