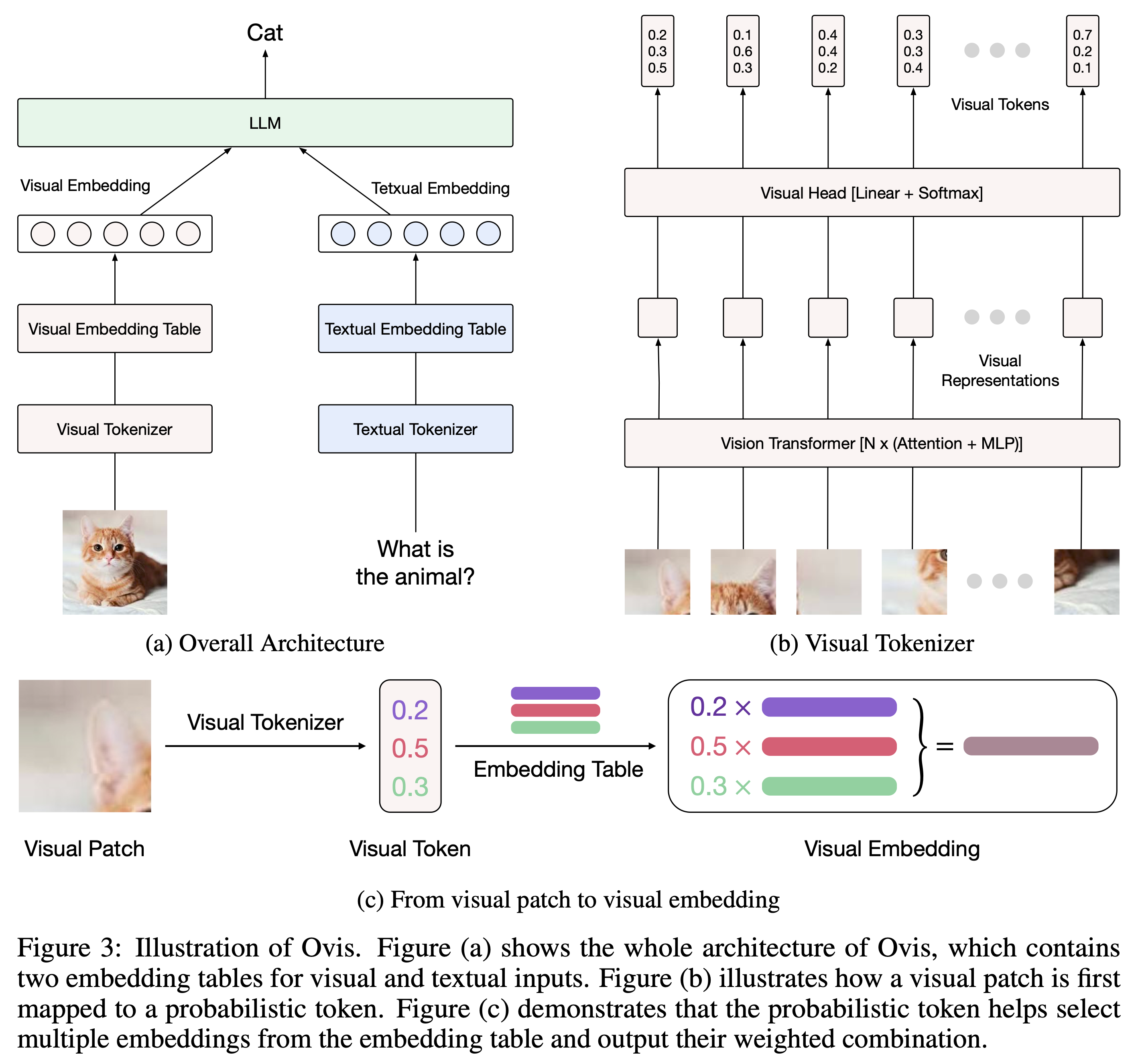

Ovis (Open VISion) is a novel Multimodal Large Language Model (MLLM) architecture, designed to structurally align visual and textual embeddings. For a comprehensive introduction, please refer to the Ovis paper.

- [08/07] 🔥 Demos launched on Huggingface: Ovis1.5-Llama3-8B and Ovis1.5-Gemma2-9B

- [07/24] 🔥 Announcing Ovis1.5, the latest version of Ovis! As always, Ovis1.5 remains fully open-source: we release the training datasets, training and inference code, and model weights to support reproducibility and community collaboration.

- [06/14] 🔥 We release the paper, code, models, and datasets.

Ovis has been tested with Python 3.10, Torch 2.1.2, Transformers 4.43.2, and DeepSpeed 0.14.0. For a comprehensive list of package dependencies, please consult the requirements.txt file. Before training or inference, please install Ovis as follows.

    git clone git@github.com:AIDC-AI/Ovis.git
    conda create -n ovis python=3.10 -y
    conda activate ovis
    cd Ovis
    pip install -r requirements.txt
    pip install -e .

Ovis can be instantiated with popular LLMs (e.g., Llama3, Gemma2). We provide the following pretrained Ovis MLLMs:

| Ovis MLLMs | ViT | LLM | Model Weights |
|---|---|---|---|
| Ovis1.5-Llama3-8B | Siglip-400M | Llama3-8B-Instruct | Huggingface |
| Ovis1.5-Gemma2-9B | Siglip-400M | Gemma2-9B-It | Huggingface |

We evaluate Ovis1.5 across various multimodal benchmarks using VLMEvalKit and compare its performance to leading MLLMs with similar parameter scales.

| | MiniCPM-Llama3-V2.5 | GLM-4V-9B | Ovis1.5-Llama3-8B | Ovis1.5-Gemma2-9B |
|---|---|---|---|---|
| Open Weights | ✅ | ✅ | ✅ | ✅ |
| Open Datasets | ❌ | ❌ | ✅ | ✅ |
| MMTBench-VAL | 57.6 | 48.8 | 60.7 | 62.7 |
| MMBench-EN-V1.1 | 74 | 68.7 | 78.2 | 78.0 |
| MMBench-CN-V1.1 | 70.1 | 67.1 | 75.2 | 75.1 |
| MMStar | 51.8 | 54.8 | 57.2 | 58.7 |
| MMMU-Val | 45.8 | 46.9 | 48.6 | 49.8 |
| MathVista-Mini | 54.3 | 51.1 | 62.4 | 65.7 |
| HallusionBenchAvg | 42.4 | 45 | 44.5 | 48.0 |
| AI2D | 78.4 | 71.2 | 82.5 | 84.7 |
| OCRBench | 725 | 776 | 743 | 756 |
| MMVet | 52.8 | 58 | 52.2 | 56.5 |
| RealWorldQA | 63.5 | 66 | 64.6 | 66.9 |
| CharXiv Reasoning | 24.9 | - | 28.2 | 28.4 |
| CharXiv Descriptive | 59.3 | - | 60.2 | 62.6 |
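As one way to read the table, the sketch below tallies which model has the highest score on each benchmark. The scores are copied from the table; the dictionary layout and the `tally_leaders` helper are illustrative assumptions and are not part of VLMEvalKit or the Ovis codebase. Entries shown as `-` are stored as `None` and skipped.

```python
from collections import Counter

MODELS = [
    "MiniCPM-Llama3-V2.5",
    "GLM-4V-9B",
    "Ovis1.5-Llama3-8B",
    "Ovis1.5-Gemma2-9B",
]

# Scores copied from the table, in the same column order as MODELS.
# None marks a result that the table reports as "-".
SCORES = {
    "MMTBench-VAL": [57.6, 48.8, 60.7, 62.7],
    "MMBench-EN-V1.1": [74, 68.7, 78.2, 78.0],
    "MMBench-CN-V1.1": [70.1, 67.1, 75.2, 75.1],
    "MMStar": [51.8, 54.8, 57.2, 58.7],
    "MMMU-Val": [45.8, 46.9, 48.6, 49.8],
    "MathVista-Mini": [54.3, 51.1, 62.4, 65.7],
    "HallusionBenchAvg": [42.4, 45, 44.5, 48.0],
    "AI2D": [78.4, 71.2, 82.5, 84.7],
    "OCRBench": [725, 776, 743, 756],
    "MMVet": [52.8, 58, 52.2, 56.5],
    "RealWorldQA": [63.5, 66, 64.6, 66.9],
    "CharXiv Reasoning": [24.9, None, 28.2, 28.4],
    "CharXiv Descriptive": [59.3, None, 60.2, 62.6],
}


def tally_leaders(scores, models):
    """Count, per model, the benchmarks on which it has the highest score."""
    leaders = Counter()
    for row in scores.values():
        # Pair each available score with its model and keep the maximum.
        available = [(value, model) for value, model in zip(row, models) if value is not None]
        _, best_model = max(available)
        leaders[best_model] += 1
    return leaders


leaders = tally_leaders(SCORES, MODELS)
for model in MODELS:
    print(f"{model}: leads on {leaders[model]} of {len(SCORES)} benchmarks")
```

A simple win count ignores the size of each gap, so it complements the table rather than replacing it.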

All training datasets are summarized in the JSON file located at ovis/train/dataset/dataset_info_v1_5.json. Each dataset entry includes the following attributes:

- meta_file: This file contains a collection of samples, where each sample consists of text and (optionally) an image. The text data is embedded directly within the meta_file, while the image is represented by its filename. This filename refers to the image file located in the image_dir.
- image_dir: The directory where the images are stored.
- data_format: Specifies the format of the data, which is used to determine the dataset class for processing the dataset.
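The three attributes above can be checked with a small script before training. The sketch below assumes the JSON file is a mapping from dataset name to entry; that layout, the `example-dataset` name, its paths, and the `example_format` value are all hypothetical and only illustrate the idea. The helper functions are not part of the Ovis codebase.

```python
import json
from pathlib import Path

REQUIRED_KEYS = ("meta_file", "image_dir", "data_format")

# Hypothetical entry: the dataset name, paths, and data_format value are
# placeholders, not values taken from dataset_info_v1_5.json.
EXAMPLE_INFO = json.loads("""
{
  "example-dataset": {
    "meta_file": "/path/to/mllm_datasets/meta_files/v1_5/example-dataset.json",
    "image_dir": "/path/to/mllm_datasets/images/example-dataset",
    "data_format": "example_format"
  }
}
""")


def missing_attributes(dataset_info):
    """Map each dataset name to the required attributes it lacks."""
    problems = {}
    for name, entry in dataset_info.items():
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            problems[name] = missing
    return problems


def resolve_image(entry, image_filename):
    """Join image_dir with an image filename taken from a meta_file sample."""
    return Path(entry["image_dir"]) / image_filename


print(missing_attributes(EXAMPLE_INFO))
print(resolve_image(EXAMPLE_INFO["example-dataset"], "0001.jpg"))
```

To check a real file, load it with `json.load` and pass the result to `missing_attributes`, adjusting the code if the actual layout differs from the assumed mapping.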

We provide the meta_file for each training dataset at Huggingface. The images can be downloaded from their respective sources listed below.

Below is an example of the folder structure consistent with dataset_info_v1_5.json. You can alter the folder structure as needed and modify dataset_info_v1_5.json accordingly.

    |-- mllm_datasets
        |-- meta_files
            |-- v1
            |-- v1_5
                |-- pixelprose-14m.parquet
                |-- cc12m-description-387k.json
                |-- A-OKVQA-18k.json
                |-- CLEVR-MATH-85k.json
                ...
        |-- images
            |-- pixelprose-14m
            |-- ovis_cc12m
            |-- COCO
            |-- CLEVR-MATH
            ...
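A minimal sketch for preparing this layout on disk is shown below. It assumes that `v1` and `v1_5` sit under `meta_files` and that the image folders sit under `images`, which is one reading of the tree above. Only the directories named in the example are created; the entries elided with `...` are left out. The `create_skeleton` helper is hypothetical and not part of the Ovis codebase.

```python
from pathlib import Path

# Directory names taken from the example tree; elided entries are omitted.
META_SUBDIRS = ["v1", "v1_5"]
IMAGE_SUBDIRS = ["pixelprose-14m", "ovis_cc12m", "COCO", "CLEVR-MATH"]


def create_skeleton(root):
    """Create the empty directory skeleton under root and return the paths."""
    base = Path(root) / "mllm_datasets"
    targets = [base / "meta_files" / sub for sub in META_SUBDIRS]
    targets += [base / "images" / sub for sub in IMAGE_SUBDIRS]
    for directory in targets:
        # exist_ok=True makes the call safe to repeat on an existing tree.
        directory.mkdir(parents=True, exist_ok=True)
    return targets
```

Calling `create_skeleton("/path/to/data")` gives empty folders into which the downloaded meta files and images can then be placed.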

Ovis is trained in three stages, with each stage's training scripts located in the scripts directory. Before starting the training, ensure you properly set the ROOT variable in the scripts. Below are the commands to train Ovis1.5-Llama3-8B:

    bash scripts/v1_5/Ovis1.5-Llama3-8B-S1.sh
    bash scripts/v1_5/Ovis1.5-Llama3-8B-S2.sh
    bash scripts/v1_5/Ovis1.5-Llama3-8B-S3.sh

We provide an inference wrapper in ovis/serve/runner.py, which can be used as:

    from PIL import Image
    from ovis.serve.runner import RunnerArguments, OvisRunner

    # Replace IMAGE_PATH, PROMPT, and MODEL_PATH with your own values.
    image = Image.open('IMAGE_PATH')
    text = 'PROMPT'
    runner_args = RunnerArguments(model_path='MODEL_PATH')
    runner = OvisRunner(runner_args)
    generation = runner.run([image, text])

Based on Gradio, Ovis can also be accessed via a web user interface:

    python ovis/serve/server.py --model_path MODEL_PATH --port PORT

If you find Ovis useful, please cite the paper:

    @article{lu2024ovis,
      title={Ovis: Structural Embedding Alignment for Multimodal Large Language Model},
      author={Shiyin Lu and Yang Li and Qing-Guo Chen and Zhao Xu and Weihua Luo and Kaifu Zhang and Han-Jia Ye},
      year={2024},
      journal={arXiv:2405.20797}
    }

This work is a collaborative effort by the MarcoVL team. We would also like to provide links to the following MLLM papers from our team:

- Parrot: Multilingual Visual Instruction Tuning

- Wings: Learning Multimodal LLMs without Text-only Forgetting

The project is licensed under the Apache 2.0 License and is restricted to uses that comply with the license agreements of Qwen, Llama3, Gemma2, Clip, and Siglip.