This library implements a distributed version of the Proximal Policy Optimization (PPO) algorithm and uses the carla_rllib environment. It also contains an older implementation of the Asynchronous Actor-Critic, which was not developed further.

To get started with the CARLA simulator, click here.

To get started with the CARLA reinforcement learning library, click here.

2. Follow the instructions here to install ROS Melodic.

3. Follow the instructions here to create a catkin workspace.

4. Clone this repository into the src folder of your catkin workspace:

cd catkin_ws/src

git clone https://github.com/50sven/ros_rl.git

5. Build your package with catkin_make:

cd catkin_ws

source devel/setup.bash

catkin_make

Note: The package must be rebuilt every time you change the code.

1. Before starting training, adjust the settings in the following files:

- node_ppo.launch: configures the parameters of the algorithm and the environment
- setting.config: configures the parameters regarding ROS and the workstations used

2. Start training with the bash script train.sh:

cd catkin_ws/src/ros_carla_rllib/scripts/

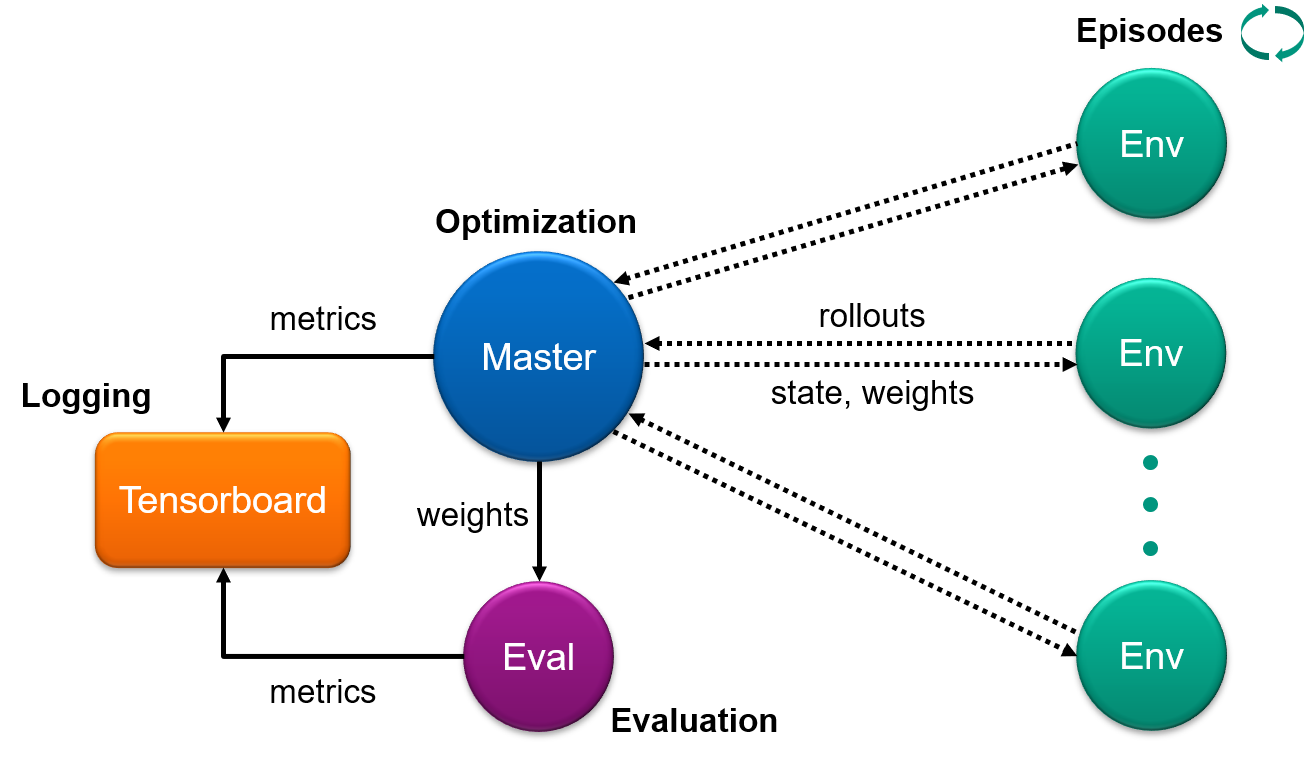

./train.sh

train.sh starts three types of nodes, MasterNode, EvalNode and EnvNode, as well as the CARLA servers. Each node and each server runs in its own tmux session.

- There is only one MasterNode and one EvalNode per training.

- In contrast, there can be more than one EnvNode.

- EnvNodes and the EvalNode each run their own carla_rllib environment, which is uniquely assigned to one CARLA server.

- Multiple trainings/nodes can run on the same workstations, provided each one uses unique ports.
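The node layout described above can be illustrated with a dry-run launcher. This is a hypothetical sketch, not the repository's train.sh: the session names (`<prefix>_master`, `<prefix>_eval`, ...), the placeholder commands `run_master_node`, `run_eval_node`, `run_env_node` and `run_carla_server`, the default base port and the stride of three ports per CARLA server are all assumptions. It only prints the tmux commands it would run, showing one MasterNode, one EvalNode, several EnvNodes, one CARLA server per EnvNode/EvalNode, and how a distinct prefix and port range keep two trainings apart on one workstation.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run sketch of a train.sh-style launcher; NOT the repository's script.
# It only prints the tmux commands it would run, so it is safe to execute anywhere.
# All session names, commands and port numbers below are illustrative assumptions.
set -euo pipefail

PORT_STRIDE=3  # assumption: number of ports reserved per CARLA server

# Usage: print_launch_plan PREFIX BASE_PORT NUM_ENV_NODES
print_launch_plan() {
  local prefix="$1" base_port="$2" num_env_nodes="$3"
  local i port

  # Exactly one MasterNode per training; it does not need a CARLA server.
  echo "tmux new-session -d -s ${prefix}_master \"run_master_node\""

  # Index 0 is the EvalNode, indices 1..N are EnvNodes. Each one is paired with
  # its own CARLA server on a port that no other node of this training uses.
  for i in $(seq 0 "$num_env_nodes"); do
    port=$((base_port + i * PORT_STRIDE))
    echo "tmux new-session -d -s ${prefix}_carla_${i} \"run_carla_server --port ${port}\""
    if ((i == 0)); then
      echo "tmux new-session -d -s ${prefix}_eval \"run_eval_node --carla-port ${port}\""
    else
      echo "tmux new-session -d -s ${prefix}_env_${i} \"run_env_node --carla-port ${port}\""
    fi
  done
}

# A second training on the same workstation needs its own prefix and a base port
# outside the range used by the first training.
print_launch_plan "${PREFIX:-train0}" "${BASE_PORT:-2000}" "${NUM_ENV_NODES:-2}"
```

For example, saving the sketch as a (hypothetical) plan.sh and running `PREFIX=train1 BASE_PORT=2100 NUM_ENV_NODES=4 bash plan.sh` prints the plan for a second training with four EnvNodes on ports 2100 to 2112, which do not collide with the default training on ports 2000 to 2006.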

Note: The current implementation uses the trajectory environment from carla_rllib, which was built for a specific use case. To adapt this implementation to other needs, the PPO implementation and the online evaluation must be modified accordingly.

MasterNode:

- stores rollout data received from the EnvNodes
- executes PPO optimization steps
- logs training diagnostics

EnvNode:

- runs the policy in the environment to collect training data and sends it to the MasterNode

EvalNode:

- runs online evaluations during training
- logs evaluation metrics
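Since each of these nodes (and each CARLA server) runs in its own tmux session, a whole training can be inspected or stopped from the shell. The helpers below are a hypothetical sketch, not part of the repository; they assume that all sessions of one training share a name prefix such as `train0_`, which may differ from the names train.sh actually uses.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the tmux sessions of one training; NOT part of the repository.
# Assumption: all sessions of a training share a name prefix such as "train0_".
set -euo pipefail

# Filter session names (one per line on stdin) down to those of one training.
# Uses a fixed-string prefix match instead of a regular expression.
sessions_of_training() {
  awk -v p="$1" 'index($0, p) == 1'
}

# Kill every tmux session of one training (requires a running tmux server).
stop_training() {
  local name
  tmux list-sessions -F '#{session_name}' \
    | sessions_of_training "$1" \
    | while read -r name; do
        tmux kill-session -t "=${name}"  # "=" makes tmux accept only an exact name match
      done
}
```

For example, `tmux attach -t train0_master` shows the output of the MasterNode (detach again with Ctrl-b d), and `stop_training train0_` ends every session of that training without touching other trainings on the same workstation.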