VITS2: Improving Quality and Efficiency of Single-Stage Text-to-Speech with Adversarial Learning and Architecture Design

Single-stage text-to-speech models have been actively studied recently, and their results have outperformed two-stage pipeline systems. Although the previous single-stage model has made great progress, there is room for improvement in terms of its intermittent unnaturalness, computational efficiency, and strong dependence on phoneme conversion. In this work, we introduce VITS2, a single-stage text-to-speech model that efficiently synthesizes more natural speech by improving several aspects of the previous work. We propose improved structures and training mechanisms and show that the proposed methods are effective in improving naturalness, similarity of speech characteristics in a multi-speaker model, and efficiency of training and inference. Furthermore, we demonstrate that the strong dependence on phoneme conversion in previous works can be significantly reduced with our method, which allows a fully end-to-end single-stage approach.

Demo: https://vits-2.github.io/demo/

Paper: https://arxiv.org/abs/2307.16430

Unofficial implementation of VITS2. This is a work in progress. Please refer to TODO for more details.

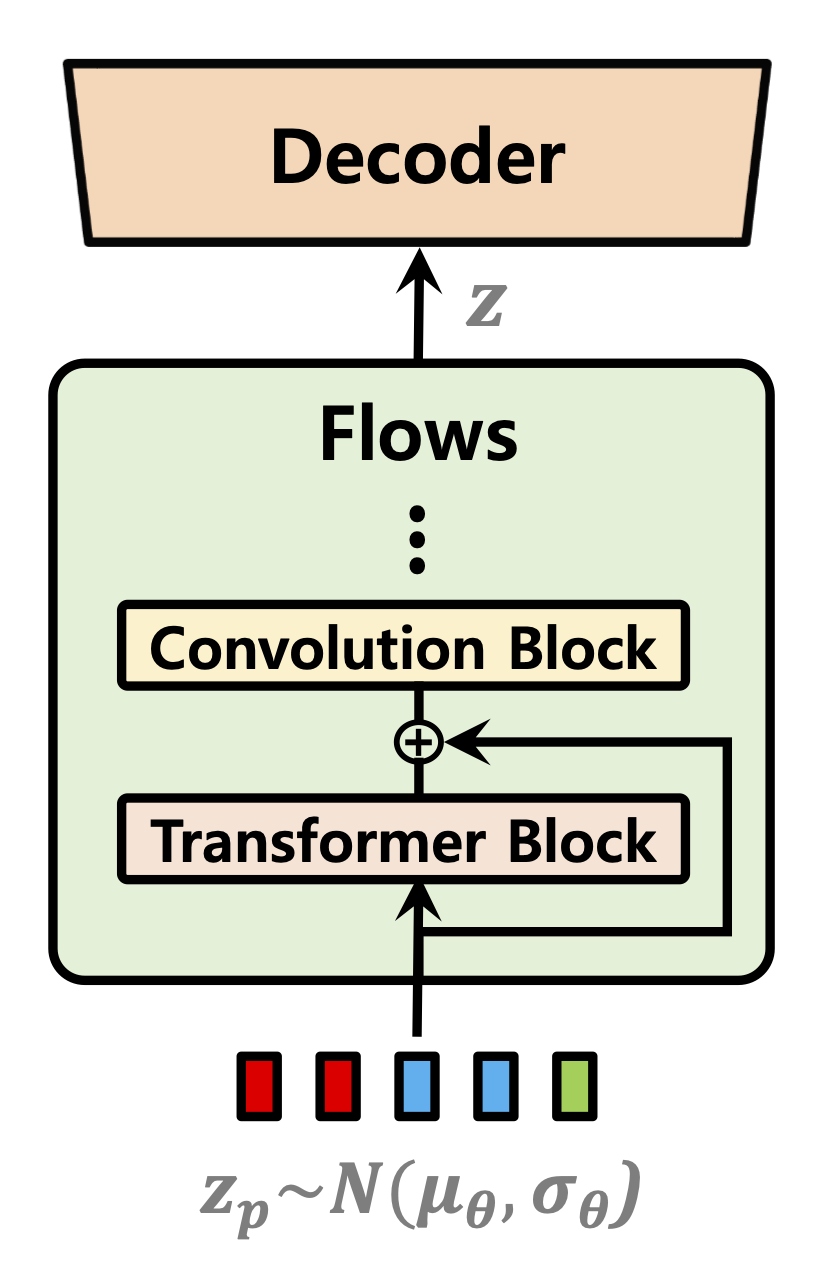

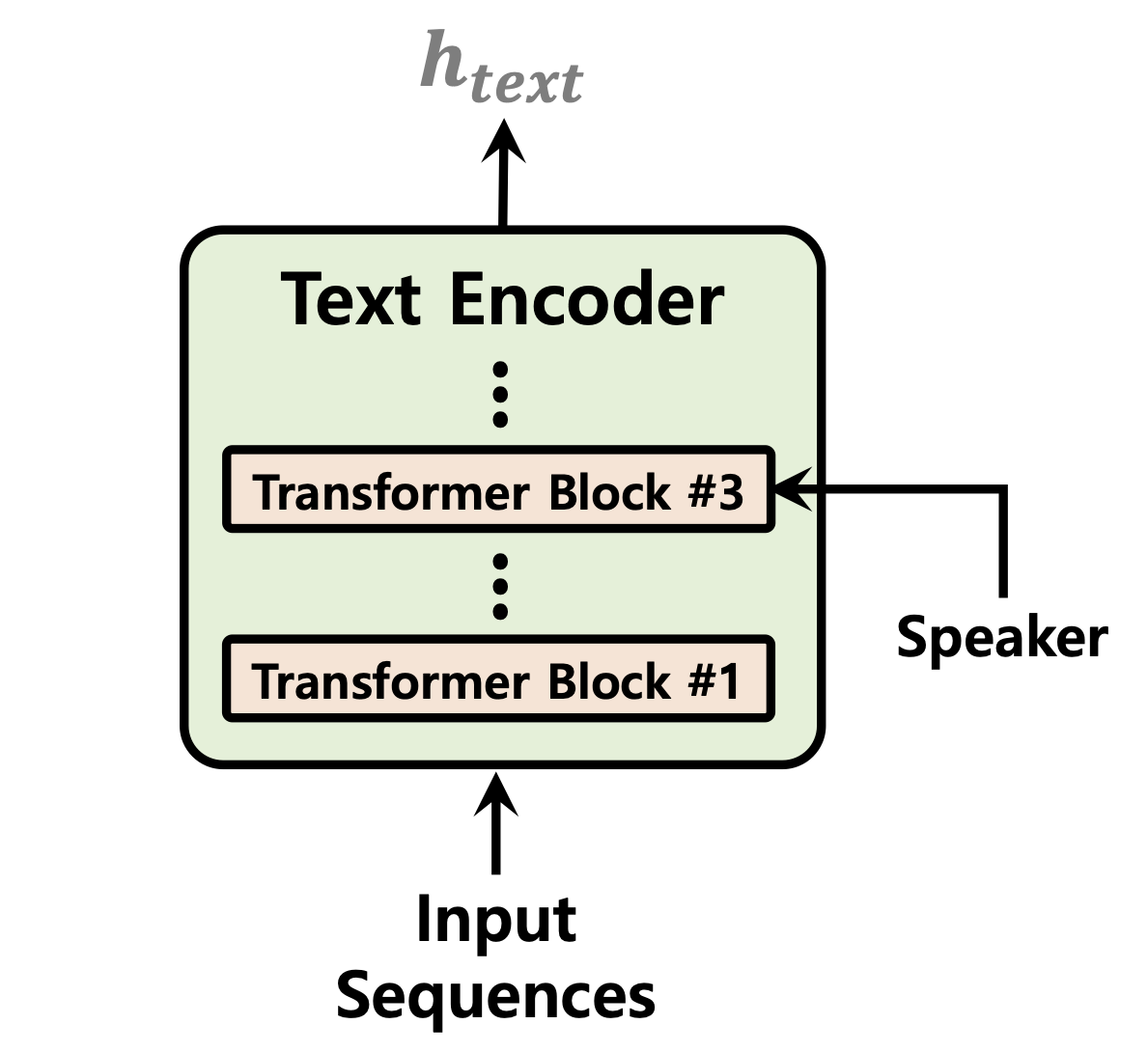

| Duration Predictor | Normalizing Flows | Text Encoder |
|---|---|---|
| | | |

[In progress]

Audio sample after 52,000 steps of training on 1 GPU with the LJSpeech dataset: https://github.com/daniilrobnikov/vits2/assets/91742765/d769c77a-bd92-4732-96e7-ab53bf50d783

Clone the repo

git clone git@github.com:daniilrobnikov/vits2.git

cd vits2

The following steps assume you have navigated to the vits2 root after cloning it.

NOTE: This is tested under python3.11 with conda env. For other python versions, you might encounter version conflicts.

PyTorch 2.0. Please refer to requirements.txt for the full list of dependencies.
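Once the packages are installed, a quick sanity check can confirm that the interpreter and PyTorch match the versions noted above. The following is a minimal sketch, not part of this repository: `check_environment` is a hypothetical helper, and it only inspects installed package metadata rather than importing PyTorch.

```python
import sys
from importlib import metadata


def check_environment(expected_python=(3, 11), expected_torch_major="2"):
    """Return a list of warnings; an empty list means every check passed.

    Hypothetical helper for illustration only (not shipped with vits2).
    """
    warnings = []

    # Compare only major.minor, since the README says "python3.11".
    found = sys.version_info[:2]
    if tuple(found) != tuple(expected_python):
        warnings.append(
            f"Python {found[0]}.{found[1]} found; the setup was tested with "
            f"{expected_python[0]}.{expected_python[1]}"
        )

    # Read the installed version from package metadata without importing torch.
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        torch_version = None

    if torch_version is None:
        warnings.append("PyTorch is not installed in this environment")
    elif torch_version.split(".")[0] != expected_torch_major:
        warnings.append(
            f"PyTorch {torch_version} found; requirements target {expected_torch_major}.x"
        )

    return warnings


if __name__ == "__main__":
    for message in check_environment():
        print("WARNING:", message)
```

Running the script inside the activated `vits2` environment prints nothing when both checks pass.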

# install required packages (for pytorch 2.0)

conda create -n vits2 python=3.11

conda activate vits2

pip install -r requirements.txt

conda env config vars set PYTHONPATH="/path/to/vits2"

There are three options you can choose from: LJ Speech, VCTK, or a custom dataset.

- LJ Speech: LJ Speech dataset. Used for single-speaker TTS.

- VCTK: VCTK dataset. Used for multi-speaker TTS.

- Custom dataset: You can use your own dataset. Please refer here.
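For the LJ Speech option, the dataset ships a `metadata.csv` file with one pipe-delimited record per line: ID, transcription, and normalized transcription. The sketch below shows one way such a record might be turned into a training filelist entry. The `path|text` output format, the `wavs` directory default, and both function names are assumptions for illustration; check this repository's own preprocessing scripts for the format it actually expects.

```python
def parse_ljspeech_line(line):
    """Split one metadata.csv record into (utterance_id, raw_text, normalized_text)."""
    # maxsplit=2 keeps any stray "|" inside the last field intact.
    utterance_id, raw_text, normalized_text = line.rstrip("\n").split("|", 2)
    return utterance_id, raw_text, normalized_text


def to_filelist_entry(line, wav_dir="wavs"):
    """Build a 'path|text' entry (assumed format, not confirmed by this repo)."""
    utterance_id, _, normalized_text = parse_ljspeech_line(line)
    return f"{wav_dir}/{utterance_id}.wav|{normalized_text}"


if __name__ == "__main__":
    # Illustrative record, not copied from the real dataset.
    sample = "LJ000-0000|Dr. Smith paid $5.|Doctor Smith paid five dollars.\n"
    print(to_filelist_entry(sample))
```

A multi-speaker dataset such as VCTK would additionally need a speaker identifier per entry, which this sketch does not cover.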