Turing (microarchitecture)

| | |
|---|---|
| Launched | September 20, 2018 |
| Designed by | Nvidia |
| Manufactured by | TSMC |
| Fabrication process | TSMC 12FFC |
| Codename(s) | TU10x, TU11x |
| Product series | |
| Desktop | GeForce 16 series, GeForce 20 series |
| Professional/workstation | Quadro |
| Server/datacenter | Tesla |
| Specifications | |
| L1 cache | 96 KB (per SM) |
| L2 cache | 1 MB to 6 MB |
| Memory support | GDDR6, HBM2 |
| PCIe support | PCIe 3.0 |
| Supported graphics APIs | |
| DirectX | DirectX 12 Ultimate (Feature Level 12_2) |
| Direct3D | Direct3D 12.0 |
| Shader Model | Shader Model 6.7 |
| OpenCL | OpenCL 3.0 |
| OpenGL | OpenGL 4.6 |
| CUDA | Compute Capability 7.5 |
| Vulkan | Vulkan 1.3 |
| Media engine | |
| Encoder(s) supported | NVENC |
| History | |
| Predecessor | Pascal |
| Variant | Volta (datacenter/HPC) |
| Successor | Ampere |

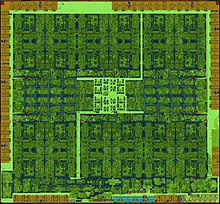

Turing is the codename for a graphics processing unit (GPU) microarchitecture developed by Nvidia. It is named after the prominent mathematician and computer scientist Alan Turing. The architecture was first introduced in August 2018 at SIGGRAPH 2018 in the workstation-oriented Quadro RTX cards,[2] and one week later at Gamescom in the consumer GeForce 20 series graphics cards.[3] Building on the preliminary work of Volta, its HPC-exclusive predecessor, the Turing architecture introduced the first consumer products capable of real-time ray tracing, a longstanding goal of the computer graphics industry. Key elements include dedicated artificial intelligence processors ("Tensor cores") and dedicated ray-tracing processors ("RT cores"). Turing exposes ray tracing through DXR, OptiX, and Vulkan. In February 2019, Nvidia released the GeForce 16 series GPUs, which use the Turing design but lack the RT and Tensor cores.

Turing is manufactured using TSMC's 12 nm FinFET semiconductor fabrication process. The high-end TU102 GPU contains 18.6 billion transistors.[1] Turing cards use GDDR6 memory from Samsung Electronics and, earlier, from Micron Technology.

Details


The Turing microarchitecture combines multiple types of specialized processor cores and enables a limited form of real-time ray tracing.[4] Ray tracing is accelerated by new RT (ray-tracing) cores, which are designed to traverse bounding volume hierarchies (BVHs) and to speed up ray intersection tests against individual triangles.
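Nvidia does not publish the exact algorithm its RT cores use for ray–triangle tests. As a software illustration only, the sketch below uses the well-known Möller–Trumbore intersection test (an assumption, not a description of the hardware); the helper names `sub`, `dot`, `cross` and `ray_triangle_hit` are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_hit(origin: Vec3, direction: Vec3,
                     v0: Vec3, v1: Vec3, v2: Vec3,
                     eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore test (software sketch): return the distance t along
    the ray to the hit point, or None if the ray misses the triangle."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:               # ray parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None    # ignore hits behind the ray origin
```

For a ray starting at (0, 0, -1) and pointing along +z, a triangle lying in the z = 0 plane around the origin is hit at t = 1; a ray aimed well outside the triangle, or parallel to its plane, returns `None`.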

Features in Turing:

- CUDA cores (SM, Streaming Multiprocessor)
  - Compute Capability 7.5
  - traditional rasterized shaders and compute
  - concurrent execution of integer and floating-point operations (inherited from Volta)
- Ray-tracing (RT) cores
  - bounding volume hierarchy acceleration[5]
  - shadows, ambient occlusion, lighting, reflections
- Tensor (AI) cores
  - artificial intelligence
  - large matrix operations
  - Deep Learning Super Sampling (DLSS)
- Memory controller with GDDR6/HBM2 support
- DisplayPort 1.4a with Display Stream Compression (DSC) 1.2
- PureVideo Feature Set J hardware video decoding
- GPU Boost 4
- NVLink bridge with VRAM stacking (pooling memory from multiple cards)
- VirtualLink VR
- NVENC hardware encoding
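Bounding volume hierarchy acceleration works by wrapping geometry in a tree of axis-aligned bounding boxes, so a ray is tested only against the triangles inside boxes it actually enters. A minimal software sketch of that traversal, using the standard slab test for ray–box intersection; the `Node` layout and the function names are hypothetical and say nothing about the BVH format Turing's hardware uses.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    """Hypothetical BVH node: an axis-aligned box plus children (inner node)
    or primitive IDs (leaf)."""
    box_min: Vec3
    box_max: Vec3
    children: List["Node"] = field(default_factory=list)
    prims: List[int] = field(default_factory=list)


def ray_hits_box(origin: Vec3, direction: Vec3, box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: clip the ray's [0, inf) interval against each pair of planes."""
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        lo, hi = box_min[axis], box_max[axis]
        if d == 0.0:
            # Ray parallel to this slab: it can only hit if the origin lies inside it.
            if o < lo or o > hi:
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def traverse(root: Node, origin: Vec3, direction: Vec3) -> List[int]:
    """Collect primitive IDs from every leaf whose box the ray enters."""
    stack, candidates = [root], []
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.box_min, node.box_max):
            continue  # skip this node and its entire subtree
        if node.children:
            stack.extend(node.children)
        else:
            candidates.extend(node.prims)
    return candidates
```

Primitives returned by `traverse` would then go to a ray–triangle test. For triangle geometry on Turing, both the box tests and the triangle tests are handled by the RT cores rather than by shader code.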

The GDDR6 memory is produced by Samsung Electronics for the Quadro RTX series.[6] The RTX 20 series initially launched with Micron memory chips, before switching to Samsung chips by November 2018.[7]

Rasterization

Nvidia reported rasterization (CUDA) performance gains for existing titles of approximately 30–50% over the previous generation.[8][9]

Ray-tracing

Ray tracing performed by the RT cores can be used to produce reflections, refractions and shadows, replacing traditional raster techniques such as cube maps and depth maps. Rather than replacing rasterization entirely, however, the information gathered through ray tracing can be used to augment rasterized shading with more photorealistic detail, especially for objects outside the camera's view (for example, off-screen objects that appear in reflections). Nvidia said ray-tracing performance increased roughly eight times over the previous consumer architecture, Pascal.
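The shadow case can be illustrated concretely. Instead of looking up a depth (shadow) map, a ray-traced renderer casts a ray from the shaded point toward the light and treats the point as shadowed if anything blocks that segment. The sketch below assumes spherical occluders to keep the geometry short; `Sphere`, `segment_blocked` and `in_shadow` are hypothetical names, and on Turing such rays would instead be issued through DXR, OptiX or Vulkan against triangle geometry.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sphere:
    """Hypothetical occluder type used only for this sketch."""
    center: Vec3
    radius: float


def segment_blocked(p: Vec3, light: Vec3, s: Sphere, eps: float = 1e-6) -> bool:
    """True if sphere s intersects the segment strictly between p and light."""
    d = tuple(lv - pv for lv, pv in zip(light, p))       # segment direction, unnormalized
    oc = tuple(pv - cv for pv, cv in zip(p, s.center))   # from sphere center to p
    a = sum(x * x for x in d)
    if a == 0.0:
        return False  # point and light coincide; nothing lies between them
    b = 2.0 * sum(x * y for x, y in zip(oc, d))
    c = sum(x * x for x in oc) - s.radius ** 2
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the infinite line misses the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        # 0 < t < 1 means the hit lies between the shaded point and the light;
        # eps guards against self-shadowing from rounding error at the endpoints.
        if eps < t < 1.0 - eps:
            return True
    return False


def in_shadow(p: Vec3, light: Vec3, occluders: Sequence[Sphere]) -> bool:
    """A point is shadowed if any occluder blocks its shadow ray to the light."""
    return any(segment_blocked(p, light, s) for s in occluders)
```

An occluder beyond the light, or off to the side of the segment, leaves the point lit, which is the same visibility question a shadow map answers approximately.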

Tensor cores

Generation of the final image is further accelerated by the Tensor cores, which are used to fill in the gaps in a partially rendered image, a technique known as denoising. The Tensor cores execute the results of deep learning, which encode how to, for example, increase the resolution of images generated by a specific application or game. In the Tensor cores' primary usage, a neural network is first trained on a supercomputer, which is shown examples of the desired results and works out a method for achieving them; that method is then run on the consumer's Tensor cores. These trained methods are delivered to consumers via driver updates.[8] The supercomputer itself also uses a large number of Tensor cores.
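Underneath these deep-learning workloads, each Tensor core performs small matrix multiply–accumulate operations, D = A × B + C, on half-precision (FP16) inputs with FP16 or FP32 accumulation. The sketch below emulates the numerics of one FP16-input, FP32-accumulate step on a 4 × 4 tile in plain Python, using the `struct` module's half- (`e`) and single-precision (`f`) formats. The tile size is an assumption based on Nvidia's description of 64 fused multiply–adds per Tensor core per clock; the function names are hypothetical, and rounding after each add only approximates the hardware's internal behavior.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision value
    (raises OverflowError if x is outside the FP16 range)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma_fp16_fp32(a: Matrix, b: Matrix, c: Matrix, n: int = 4) -> Matrix:
    """Emulate D = A x B + C on n x n tiles: A and B rounded to FP16,
    the running sum rounded to FP32 after every add."""
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])
            for k in range(n):
                # An FP16 x FP16 product fits exactly in FP32 (11-bit significands),
                # so only the running sum needs rounding.
                acc = to_fp32(acc + a16[i][k] * b16[k][j])
            d[i][j] = acc
    return d
```

Multiplying by the identity returns B unchanged, while an input such as 0.1 is first rounded to the nearest FP16 value (0.0999755859375), which is where the precision trade-off of FP16 inputs shows up.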

Turing dies

| Die | TU102[10] | TU104[11] | TU106[12] | TU116[13] | TU117[14] |
|---|---|---|---|---|---|
| Die size | 754 mm² | 545 mm² | 445 mm² | 284 mm² | 200 mm² |
| Transistors | 18.6B | 13.6B | 10.8B | 6.6B | 4.7B |
| Transistor density | 24.7 MTr/mm² | 25.0 MTr/mm² | 24.3 MTr/mm² | 23.2 MTr/mm² | 23.5 MTr/mm² |
| Graphics processing clusters | 6 | 6 | 3 | 3 | 2 |
| Streaming multiprocessors | 72 | 48 | 36 | 24 | 16 |
| CUDA cores | 4608 | 3072 | 2304 | 1536 | 1024 |
| Texture mapping units | 288 | 192 | 144 | 96 | 64 |
| Render output units | 96 | 64 | 64 | 48 | 32 |
| Tensor cores | 576 | 384 | 288 | — | — |
| RT cores | 72 | 48 | 36 | — | — |
| L1 cache (96 KB per SM) | 6.75 MB | 4.5 MB | 3.375 MB | 2.25 MB | 1.5 MB |
| L2 cache | 6 MB | 4 MB | 4 MB | 1.5 MB | 1 MB |
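Several rows of the table follow from simple per-SM ratios that can be read off the table itself: 64 CUDA cores, 4 texture mapping units, 8 Tensor cores (TU10x only), 1 RT core (TU10x only) and 96 KB of L1 cache per SM, with transistor density equal to transistor count divided by die area. The short script below recomputes those rows from die size, transistor count and SM count as a consistency check; the `DIES` mapping and `derived_rows` function are hypothetical helpers.

```python
# Die figures copied from the table; the per-SM ratios are inferred from it.
DIES = {
    # name: (die area in mm², transistors in billions, SM count, has RT/Tensor cores)
    "TU102": (754, 18.6, 72, True),
    "TU104": (545, 13.6, 48, True),
    "TU106": (445, 10.8, 36, True),
    "TU116": (284, 6.6, 24, False),
    "TU117": (200, 4.7, 16, False),
}


def derived_rows(area_mm2: float, transistors_bn: float, sms: int, has_rtx: bool) -> dict:
    """Recompute the table rows that follow from per-SM ratios and die area."""
    return {
        "density_mtr_per_mm2": round(transistors_bn * 1000 / area_mm2, 1),  # MTr/mm²
        "cuda_cores": 64 * sms,
        "tmus": 4 * sms,
        "tensor_cores": 8 * sms if has_rtx else 0,  # TU116/TU117 have none
        "rt_cores": sms if has_rtx else 0,
        "l1_cache_mb": sms * 96 / 1024,             # 96 KB per SM, 1 MB = 1024 KB
    }


for name, spec in DIES.items():
    print(name, derived_rows(*spec))
```

Every recomputed value matches the corresponding table cell, which suggests the SM count is the main scaling knob across the Turing dies.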

Development

Turing's development platform is called RTX. RTX ray-tracing features can be accessed through Microsoft's DXR, through OptiX, and through Vulkan extensions (the last of which are also available in the Linux drivers).[15] The platform also provides access to AI-accelerated features through NGX. The mesh shader and shading rate image features are accessible through DirectX 12, Vulkan and OpenGL extensions on Windows and Linux.[16]
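Because these features are exposed as API extensions, an application normally checks which ones the driver reports before enabling them. The sketch below assumes the caller has already collected the device's extension names (for example from Vulkan's `vkEnumerateDeviceExtensionProperties`, or from the `vulkaninfo` tool's output). The `FEATURE_EXTENSIONS` mapping and `turing_features` helper are hypothetical, and the mapping is simplified: real applications must also enable each extension's own dependencies.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical mapping: feature -> alternative sets of required device extensions.
# Each inner tuple is one sufficient combination.
FEATURE_EXTENSIONS: Dict[str, List[Tuple[str, ...]]] = {
    "ray_tracing": [
        ("VK_NV_ray_tracing",),  # Nvidia extension available at Turing's launch
        ("VK_KHR_ray_tracing_pipeline", "VK_KHR_acceleration_structure"),  # later cross-vendor
    ],
    "mesh_shader": [("VK_NV_mesh_shader",), ("VK_EXT_mesh_shader",)],
    "shading_rate_image": [("VK_NV_shading_rate_image",)],
}


def turing_features(extensions: Iterable[str]) -> Dict[str, bool]:
    """Report which features the given extension names would allow."""
    available = set(extensions)
    return {
        feature: any(all(name in available for name in combo) for combo in combos)
        for feature, combos in FEATURE_EXTENSIONS.items()
    }
```

Treating each feature as a list of alternatives lets the same check accept either the Nvidia-specific extension or a later cross-vendor replacement.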

The Windows 10 October 2018 Update included the public release of DirectX Raytracing.[17][18]

Products using Turing

- GeForce MX series
  - GeForce MX450 Laptop
  - GeForce MX550 Laptop
- GeForce 16 series
  - GeForce GTX 1630
  - GeForce GTX 1650 Laptop
  - GeForce GTX 1650
  - GeForce GTX 1650 Super
  - GeForce GTX 1650 Ti Laptop
  - GeForce GTX 1660
  - GeForce GTX 1660 Super
  - GeForce GTX 1660 Ti Laptop
  - GeForce GTX 1660 Ti
- GeForce 20 series
  - GeForce RTX 2060 Laptop
  - GeForce RTX 2060
  - GeForce RTX 2060 Super
  - GeForce RTX 2070 Laptop
  - GeForce RTX 2070
  - GeForce RTX 2070 Super Laptop
  - GeForce RTX 2070 Super
  - GeForce RTX 2080 Laptop
  - GeForce RTX 2080
  - GeForce RTX 2080 Super Laptop
  - GeForce RTX 2080 Super
  - GeForce RTX 2080 Ti
  - Titan RTX
- Nvidia Quadro
  - Quadro RTX 3000 Laptop
  - Quadro RTX 4000 Laptop
  - Quadro RTX 4000
  - Quadro RTX 5000 Laptop
  - Quadro RTX 5000
  - Quadro RTX 6000 Laptop
  - Quadro RTX 6000
  - Quadro RTX 8000
  - Quadro T1000 Laptop
  - Quadro T2000 Laptop
  - T400
  - T400 4GB
  - T500 Laptop
  - T600 Laptop
  - T600
  - T1000
  - T1000 8GB
  - T1200 Laptop
- Nvidia Tesla
  - Tesla T4
  - Tesla T10
  - Tesla T40

See also

- List of eponyms of Nvidia GPU microarchitectures

- List of Nvidia graphics processing units

- Volta (microarchitecture)

References

- "Nvidia Turing GPU Architecture: Graphics Reinvented" (PDF). Nvidia. 2018. Retrieved June 28, 2019.
- Smith, Ryan (August 13, 2018). "NVIDIA Reveals Next-Gen Turing GPU Architecture: NVIDIA Doubles-Down on Ray Tracing, GDDR6, & More". AnandTech. Retrieved April 9, 2023.
- Smith, Ryan (August 20, 2018). "NVIDIA Announces the GeForce RTX 20 Series: RTX 2080 Ti & 2080 on Sept. 20th, RTX 2070 in October". AnandTech. Retrieved April 9, 2023.
- Warren, Tom (August 20, 2018). "Nvidia announces RTX 2000 GPU series with '6 times more performance' and ray-tracing". The Verge. Retrieved August 20, 2018.
- Oh, Nate (September 14, 2018). "The NVIDIA Turing GPU Architecture Deep Dive: Prelude to GeForce RTX". AnandTech. Retrieved April 9, 2023.
- Mujtaba, Hassan (August 14, 2018). "Samsung GDDR6 Memory Powers NVIDIA's Turing GPU Based Quadro RTX Cards". Wccftech. Retrieved April 9, 2023.
- Maislinger, Florian (November 21, 2018). "Faulty RTX 2080 Ti: Nvidia switches from Micron to Samsung for GDDR6 memory". PC Builder's Club. Retrieved July 15, 2019.
- "#BeForTheGame". Twitch.
- Fisher, Jeff (August 20, 2018). "GeForce RTX Propels PC Gaming's Golden Age with Real-Time Ray Tracing". Nvidia. Retrieved April 9, 2023.
- "NVIDIA TU102 GPU Specs". TechPowerUp.
- "NVIDIA TU104 GPU Specs". TechPowerUp.
- "NVIDIA TU106 GPU Specs". TechPowerUp.
- "NVIDIA TU116 GPU Specs". TechPowerUp.
- "NVIDIA TU117 GPU Specs". TechPowerUp.
- "NVIDIA RTX platform". Nvidia. July 20, 2018. Retrieved April 9, 2023.
- "Turing Extensions for Vulkan and OpenGL". Nvidia. September 11, 2018. Retrieved April 9, 2023.
- Pelletier, Sean (October 2, 2018). "Windows 10 October 2018 Update a Catalyst for Ray-Traced Games". Nvidia. Retrieved April 9, 2023.
- van Rhyn, Jacques (October 2, 2018). "DirectX Raytracing and the Windows 10 October 2018 Update". Microsoft. Retrieved April 9, 2023.