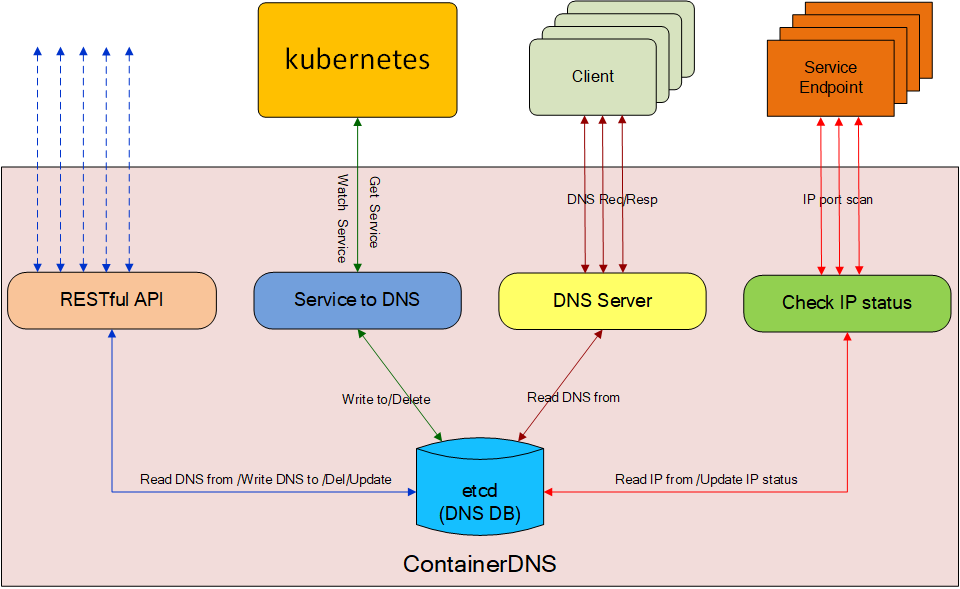

ContainerDNS works as an internal DNS server for a Kubernetes cluster.

- containerdns: the main service, which answers DNS queries.

- containerdns-kubeapi: monitors changes to Kubernetes services and records them in etcd. It provides the source data for containerdns and also offers a RESTful API for users to maintain domain records.

- containerdns-apicmd: a shell command for users to query and update domain records; it is built on containerdns-kubeapi.

- etcd: stores the DNS information; the etcd v3 API is used.

ContainerDNS is based on the DNS library https://github.com/miekg/dns.

- fully-cached DNS records

- backend IPs are automatically removed when they become unavailable

- support for multiple domain suffixes

- better performance and less jitter

- load balancing - when a domain has multiple IPs, ContainerDNS chooses an active one randomly.

- session persistence - when a domain name is accessed multiple times from the same source, the same service IP is returned.
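The load balancing and session persistence features above can be illustrated with a minimal Go sketch. It is not taken from the ContainerDNS source: the function names `pickRandom` and `pickSticky` are hypothetical, and hashing the client address is only one possible way to return the same IP to the same source.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"math/rand"
)

// pickRandom returns a random entry from active, or "" if the list is empty.
func pickRandom(active []string) string {
	if len(active) == 0 {
		return ""
	}
	return active[rand.Intn(len(active))]
}

// pickSticky hashes the client address onto the list of active IPs, so that
// repeated queries from the same source get the same answer for as long as
// the active list is unchanged. (Assumed approach, for illustration only.)
func pickSticky(clientIP string, active []string) string {
	if len(active) == 0 {
		return ""
	}
	h := fnv.New32a()
	h.Write([]byte(clientIP))
	return active[int(h.Sum32()%uint32(len(active)))]
}

func main() {
	active := []string{"192.168.10.1", "192.168.10.2", "192.168.10.3"}
	fmt.Println("random:      ", pickRandom(active))
	fmt.Println("sticky:      ", pickSticky("10.0.0.7", active))
	fmt.Println("sticky again:", pickSticky("10.0.0.7", active))
}
```

With a plain modulo hash, the chosen IP for a given source can change when the active list changes; a real implementation might keep an explicit source-to-IP table instead.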

Then get and compile ContainerDNS:

mkdir -p $GOPATH/src/github.com/tiglabs

cd $GOPATH/src/github.com/tiglabs

git clone https://github.com/tiglabs/containerdns

cd $GOPATH/src/github.com/tiglabs/containerdns

make

config-file: read configs from the file, default "/etc/containerdns/containerdns.conf".

The config file looks like this:

[Dns]

dns-domain = containerdns.local.

dns-addr = 0.0.0.0:53

nameservers = ""

subDomainServers = ""

cacheSize = 100000

ip-monitor-path = /containerdns/monitor/status/

[Log]

log-dir = /export/log/containerdns

log-level = 2

log-to-stdio = true

[Etcd]

etcd-servers = https://127.0.0.1:2379

etcd-certfile = ""

etcd-keyfile = ""

etcd-cafile = ""

[Fun]

random-one = false

hone-one = false

[Stats]

statsServer = 127.0.0.1:9600

statsServerAuthToken = @containerdns.com

config-file: read configs from the file, default "/etc/containerdns/containerdns.conf".

The config file looks like this:

[General]

domain=containerdns.local

host = 192.168.169.41

etcd-server = https://127.0.0.1:2379

ip-monitor-path = /containerdns/monitor/status

log-dir = /export/log/containerdns

log-level = 2

log-to-stdio = false

[Kube2DNS]

kube-enable = NO

[DNSApi]

api-enable = YES

api-address = 127.0.0.1:9003

containerdns-auth = 123456789
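The config files shown above use an INI-style layout of `[Section]` headers and `key = value` lines. As a rough sketch of how such a file can be read, the following Go program parses that layout into nested maps. The `parseINI` helper is hypothetical and is not the loader ContainerDNS itself uses.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// parseINI reads "[Section]" headers and "key = value" lines into a nested
// map keyed by section name, then by key. Surrounding double quotes on a
// value are stripped, so `nameservers = ""` becomes an empty string.
func parseINI(text string) map[string]map[string]string {
	out := map[string]map[string]string{}
	section := ""
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" {
			continue
		}
		if strings.HasPrefix(line, "[") && strings.HasSuffix(line, "]") {
			section = strings.TrimSpace(line[1 : len(line)-1])
			if out[section] == nil {
				out[section] = map[string]string{}
			}
			continue
		}
		parts := strings.SplitN(line, "=", 2)
		if len(parts) != 2 {
			continue // not a key/value line
		}
		if out[section] == nil {
			out[section] = map[string]string{}
		}
		key := strings.TrimSpace(parts[0])
		out[section][key] = strings.Trim(strings.TrimSpace(parts[1]), `"`)
	}
	return out
}

func main() {
	sample := "[DNSApi]\napi-enable = YES\napi-address = 127.0.0.1:9003\n"
	cfg := parseINI(sample)
	fmt.Println(cfg["DNSApi"]["api-address"])
}
```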

config-file: read configs from the file, default "/etc/containerdns/containerdns-scanner.conf".

The config file looks like this:

[General]

core = 0

enable-check = true

hostname = hostname1

log-dir = /export/log/containerdns

log-level = 100

heartbeat-interval = 30

[Check]

check-timeout = 2

check-interval = 10

scann-ports = 22, 80, 8080

enable-icmp = true

ping-timeout = 1000

ping-count = 2

[Etcd]

etcd-machine = https://127.0.0.1:2379

tls-key =

tls-pem =

ca-cert =

status-path = /containerdns/monitor/status

report-path = /containerdns/monitor/report

heart-path = /containerdns/monitor/heart

config-file: read configs from the file, default "/etc/containerdns/containerdns-schedule.conf".

The config file looks like this:

[General]

schedule-interval = 60

agent-downtime = 60

log-dir = /export/log/containerdns

log-level = 100

hostname = hostname1

force-lock-time = 1800

[Etcd]

etcd-machine = https://127.0.0.1:2379

status-path = /containerdns/monitor/status

report-path = /containerdns/monitor/report

heart-path = /containerdns/monitor/heart

lock-path = /containerdns/monitor/lock

We use curl to test the user API.

% curl -H "Content-Type:application/json;charset=UTF-8" -X POST -d '{"type":"A","ips":["192.168.10.1","192.168.10.2","192.168.10.3"]}' https://127.0.0.1:9001/containerdns/api/cctv2?token="123456789"

OK

% curl -H "Content-Type:application/json;charset=UTF-8" -X POST -d '{"type":"cname","alias":"tv1"}' https://127.0.0.1:9001/containerdns/api/cctv2.containerdns.local?token="123456789"

OK

% nslookup qiyf-nginx-5.default.svc.containerdns.local 127.0.0.1

Server: 127.0.0.1

Address: 127.0.0.1#53

Name: qiyf-nginx-5.default.svc.containerdns.local

Address: 192.168.19.113

If a domain has more than one IP, containerdns returns a random one.

% nslookup cctv2.containerdns.local 127.0.0.1

Server: 127.0.0.1

Address: 127.0.0.1#53

Name: cctv2.containerdns.local

Address: 192.168.10.3

% nslookup tv1.containerdns.local 127.0.0.1

Server: 127.0.0.1

Address: 127.0.0.1#53

tv1.containerdns.local canonical name = cctv2.containerdns.local.

Name: cctv2.containerdns.local

Address: 192.168.10.3
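The nslookup queries above can be reproduced from a Go program by pointing the standard library resolver at the ContainerDNS address. This is a minimal sketch: `resolverFor` is a hypothetical helper, and the server address and domain are the ones from the examples.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"time"
)

// resolverFor returns a resolver that sends DNS queries to the given
// server (host:port) instead of the system's configured name servers.
func resolverFor(server string) *net.Resolver {
	return &net.Resolver{
		PreferGo: true, // use Go's built-in resolver so Dial is honored
		Dial: func(ctx context.Context, network, _ string) (net.Conn, error) {
			d := net.Dialer{Timeout: 2 * time.Second}
			return d.DialContext(ctx, network, server)
		},
	}
}

func main() {
	r := resolverFor("127.0.0.1:53")
	ctx, cancel := context.WithTimeout(context.Background(), 3*time.Second)
	defer cancel()

	addrs, err := r.LookupHost(ctx, "cctv2.containerdns.local")
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	fmt.Println(addrs)
}
```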

If a domain has multiple IPs, dns-scanner is used to monitor the IPs behind the domain.

When a service becomes unreachable, dns-scanner changes the status of its IP. containerdns watches the IP status,

and when an IP goes down, it chooses a healthy one instead.

cctv2.containerdns.local ips[192.168.10.1,192.168.10.2,192.168.10.3]

% nslookup cctv2.containerdns.local 127.0.0.1

Server: 127.0.0.1

Address: 127.0.0.1#53

Name: cctv2.containerdns.local

Address: 192.168.10.3

% etcdctl get /containerdns/monitor/status/192.168.10.3

{"status":"DOWN"}

% nslookup cctv2.containerdns.local 127.0.0.1

Server: 127.0.0.1

Address: 127.0.0.1#53

Name: cctv2.containerdns.local

Address: 192.168.10.1

When we query the domain cctv2.containerdns.local from containerdns, we get the IP 192.168.10.3. After we shut down that service and query the domain again,

we get the IP 192.168.10.1.

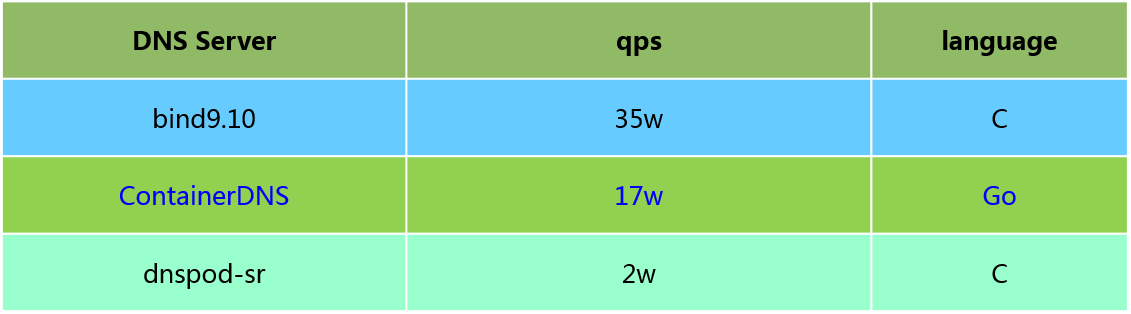

NIC: gigabit ethernet card

CPUs: 32

RAM: 32G

OS: CentOS-7.2

queryperf

ContainerDNS throughput can be improved to nearly 10 million QPS by leveraging DPDK technology; see https://github.com/tiglabs/containerdns/kdns. That code is also production-ready.

Reference to cite when you use ContainerDNS in a paper or technical report: "Haifeng Liu, Shugang Chen, Yongcheng Bao, Wanli Yang, Yuan Chen, Wei Ding, and Huasong Shan. A High Performance, Scalable DNS Service for Very Large Scale Container Cloud Platforms. In 19th International Middleware Conference Industry, December 10–14, 2018, Rennes, France."