Confidently evaluate, test and monitor LLM applications.


Opik is an open-source platform for evaluating, testing and monitoring LLM applications. Built by Comet.

You can use Opik for:

-

Development:

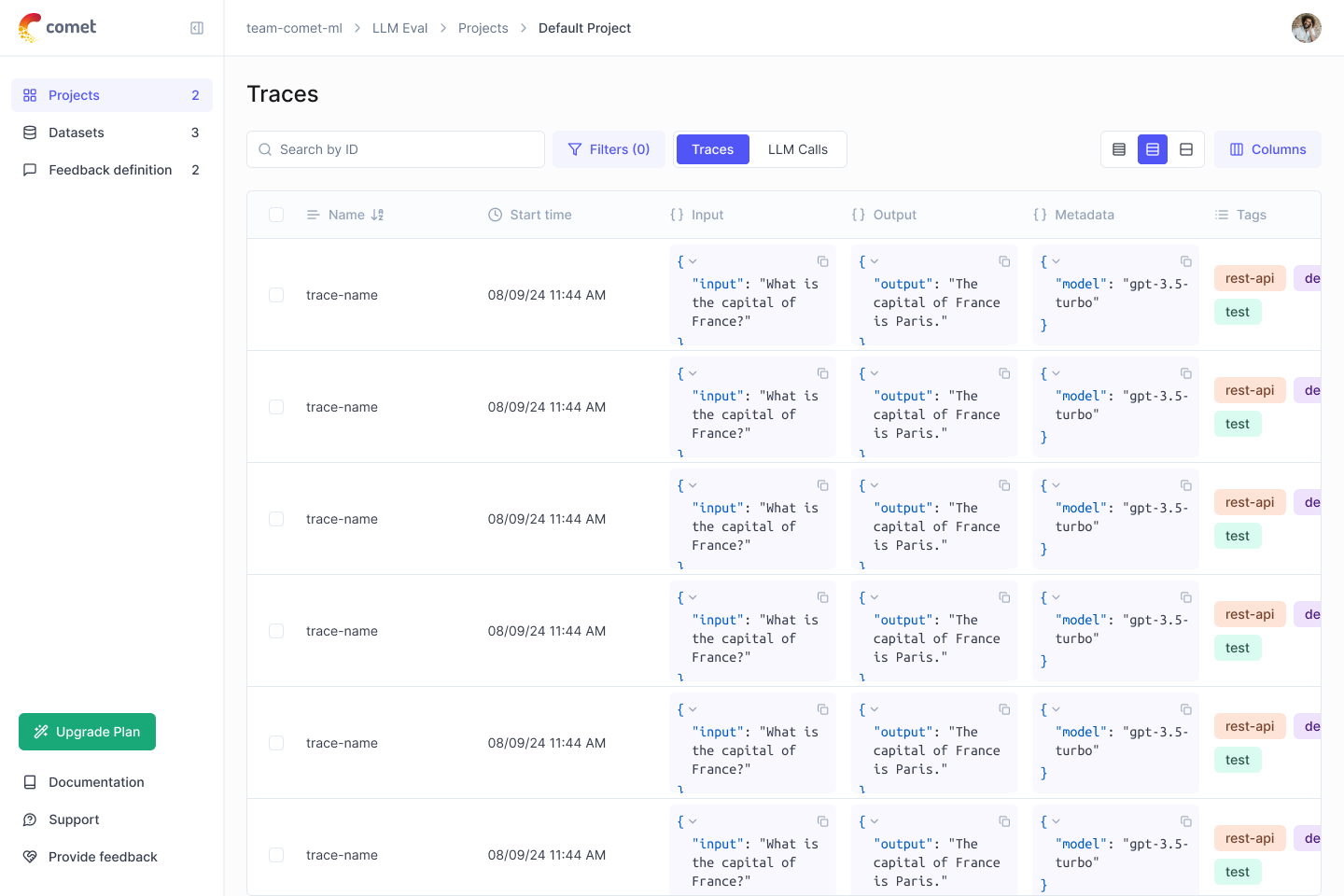

- Tracing: Track all LLM calls and traces during development and production (Quickstart, Integrations

- Annotations: Annotate your LLM calls by logging feedback scores using the Python SDK or the UI.

-

Evaluation: Automate the evaluation process of your LLM application:

-

Datasets and Experiments: Store test cases and run experiments (Datasets, Evaluate your LLM Application)

-

LLM as a judge metrics: Use Opik's LLM as a judge metric for complex issues like hallucination detection, moderation and RAG evaluation (Answer Relevance, Context Precision

-

CI/CD integration: Run evaluations as part of your CI/CD pipeline using our PyTest integration

-

-

Production Monitoring: Monitor your LLM application in production and easily close the feedback loop by adding error traces to your evaluation datasets.

Tip

If you are looking for features that Opik doesn't have today, please raise a new GitHub discussion topic 🚀

Opik is available as a fully open-source local installation or as a hosted solution on Comet.com. The easiest way to get started with Opik is by creating a free Comet account at comet.com.

If you'd like to self-host Opik, you can do so by cloning the repository and starting the platform using Docker Compose:

```bash
# Clone the Opik repository
git clone https://github.com/comet-ml/opik.git

# Navigate to the opik/deployment/docker-compose directory
cd opik/deployment/docker-compose

# Start the Opik platform
docker compose up --detach

# You can now visit http://localhost:5173 in your browser!
```

For more information about the different deployment options, please see our deployment guides:

| Installation methods | Docs link |
|---|---|
| Local instance | |
| Kubernetes | |

To get started, you will need to first install the Python SDK:

```bash
pip install opik
```

Once the SDK is installed, you can configure it by running the `opik configure` command:

```bash
opik configure
```

This lets you configure Opik locally by setting the correct local server address or, if you're using the Cloud platform, by setting the API key.

Tip

You can also call the opik.configure(use_local=True) method from your Python code to configure the SDK to run on the local installation.

You are now ready to start logging traces using the Python SDK.

The easiest way to get started is to use one of our integrations. Opik supports:

| Integration | Description | Documentation | Try in Colab |
|---|---|---|---|
| OpenAI | Log traces for all OpenAI LLM calls | Documentation | |
| LiteLLM | Call any LLM model using the OpenAI format | Documentation | |
| LangChain | Log traces for all LangChain LLM calls | Documentation | |
| LangGraph | Log traces for all LangGraph executions | Documentation | |
| Gemini | Log traces for all Gemini LLM calls | Documentation | |
| Groq | Log traces for all Groq LLM calls | Documentation | |
| LlamaIndex | Log traces for all LlamaIndex LLM calls | Documentation | |
| Ollama | Log traces for all Ollama LLM calls | Documentation | |
| Predibase | Fine-tune and serve open-source Large Language Models | Documentation | |
| Ragas | Evaluation framework for your Retrieval Augmented Generation (RAG) pipelines | Documentation | |
| watsonx | Log traces for all watsonx LLM calls | Documentation | |

Tip

If the framework you are using is not listed above, feel free to open an issue or submit a PR with the integration.

If you are not using any of the frameworks above, you can also use the `track` function decorator to log traces:

```python
import opik

opik.configure(use_local=True)  # Run locally


@opik.track
def my_llm_function(user_question: str) -> str:
    # Your LLM code here
    return "Hello"
```

Tip

The track decorator can be used in conjunction with any of our integrations and can also be used to track nested function calls.
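To make the idea of nested tracking concrete, here is a minimal plain-Python sketch of what recording nested calls can look like. It is an illustration only and is not Opik's implementation: `sketch_track`, `SPANS`, and the two example functions are hypothetical names, and the data stays in memory instead of being sent to a server.

```python
import functools
from typing import Any, Callable

# Hypothetical in-memory log; a real tracing backend would store this on a server.
SPANS: list[dict[str, Any]] = []
_stack: list[str] = []  # names of the tracked calls currently executing


def sketch_track(func: Callable) -> Callable:
    """Illustrative stand-in for a tracing decorator (not Opik's implementation)."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        # The innermost call still running, if any, is the parent of this call.
        parent = _stack[-1] if _stack else None
        _stack.append(func.__name__)
        try:
            result = func(*args, **kwargs)
        finally:
            _stack.pop()
        SPANS.append({"name": func.__name__, "parent": parent, "output": result})
        return result

    return wrapper


@sketch_track
def retrieve_context(question: str) -> list[str]:
    return ["France is a country in Europe."]


@sketch_track
def answer_question(question: str) -> str:
    context = retrieve_context(question)  # nested tracked call
    return f"Answered using {len(context)} context document(s)"


answer = answer_question("What is the capital of France?")
for span in SPANS:
    print(span["name"], "<- parent:", span["parent"])
```

With the real SDK you would decorate both functions with `@opik.track` instead; the sketch only shows why a nested call can be attached to its caller.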

The Python Opik SDK includes a number of LLM as a judge metrics to help you evaluate your LLM application. Learn more about them in the metrics documentation.

To use them, simply import the relevant metric and use the score function:

```python
from opik.evaluation.metrics import Hallucination

metric = Hallucination()
score = metric.score(
    input="What is the capital of France?",
    output="Paris",
    context=["France is a country in Europe."]
)
print(score)
```

Opik also includes a number of pre-built heuristic metrics as well as the ability to create your own. Learn more about them in the metrics documentation.
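As a rough illustration of the kind of logic a heuristic (non-LLM) metric holds, the sketch below scores an output by checking whether it contains a reference string. It is plain Python and deliberately does not use Opik's metric classes: `SketchScore` and `contains_reference` are hypothetical names, so refer to the metrics documentation for the SDK's actual interface for custom metrics.

```python
from dataclasses import dataclass


@dataclass
class SketchScore:
    """Hypothetical result container, used only for this illustration."""

    name: str
    value: float
    reason: str = ""


def contains_reference(output: str, reference: str) -> SketchScore:
    """Return 1.0 if `reference` appears in `output` (case-insensitive), else 0.0."""
    found = reference.lower() in output.lower()
    return SketchScore(
        name="contains_reference",
        value=1.0 if found else 0.0,
        reason="reference found" if found else "reference missing",
    )


print(contains_reference("The capital of France is Paris.", "paris"))
print(contains_reference("I am not sure.", "Paris"))
```

A heuristic like this is deterministic and cheap, which makes it a useful complement to LLM as a judge metrics when the expected answer is known.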

Opik allows you to evaluate your LLM application during development through Datasets and Experiments.

You can also run evaluations as part of your CI/CD pipeline using our PyTest integration.
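The general shape of such a check can be sketched in plain Python: run the application over a small set of test cases, compute an aggregate score, and fail the test when the score drops below a threshold. Everything here is an assumption made for illustration (`TEST_CASES`, `run_experiment`, the stubbed `my_llm_function`, and the 0.9 threshold); the sketch does not use Opik's Datasets, Experiments, or PyTest integration, which are described in the linked documentation.

```python
# Hypothetical test cases; in practice these would come from a stored dataset.
TEST_CASES = [
    {"input": "What is the capital of France?", "expected": "Paris"},
    {"input": "What is the capital of Germany?", "expected": "Berlin"},
]


def my_llm_function(user_question: str) -> str:
    # Stub standing in for a real LLM call so the sketch runs offline.
    canned = {
        "What is the capital of France?": "The capital of France is Paris.",
        "What is the capital of Germany?": "The capital of Germany is Berlin.",
    }
    return canned.get(user_question, "I don't know.")


def run_experiment(cases: list[dict[str, str]]) -> float:
    """Return the fraction of cases whose output contains the expected answer."""
    hits = sum(
        case["expected"].lower() in my_llm_function(case["input"]).lower()
        for case in cases
    )
    return hits / len(cases)


def test_capital_questions() -> None:
    # pytest collects functions named test_*; the threshold is an arbitrary choice.
    assert run_experiment(TEST_CASES) >= 0.9


print(run_experiment(TEST_CASES))
```

Saved in a file such as `test_llm_app.py` (a hypothetical name), this can be run with `pytest` as one step of a CI pipeline.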

There are many ways to contribute to Opik:

- Submit bug reports and feature requests

- Review the documentation and submit Pull Requests to improve it

- Speak or write about Opik and let us know

- Upvote popular feature requests to show your support

To learn more about how to contribute to Opik, please see our contributing guidelines.