| openreview | arXiv | datasets | checkpoints |

This repo contains the official PyTorch implementation of the paper "Label-Noise Robust Diffusion Models" (ICLR 2024).

Byeonghu Na, Yeongmin Kim, HeeSun Bae, Jung Hyun Lee, Se Jung Kwon, Wanmo Kang, and Il-Chul Moon

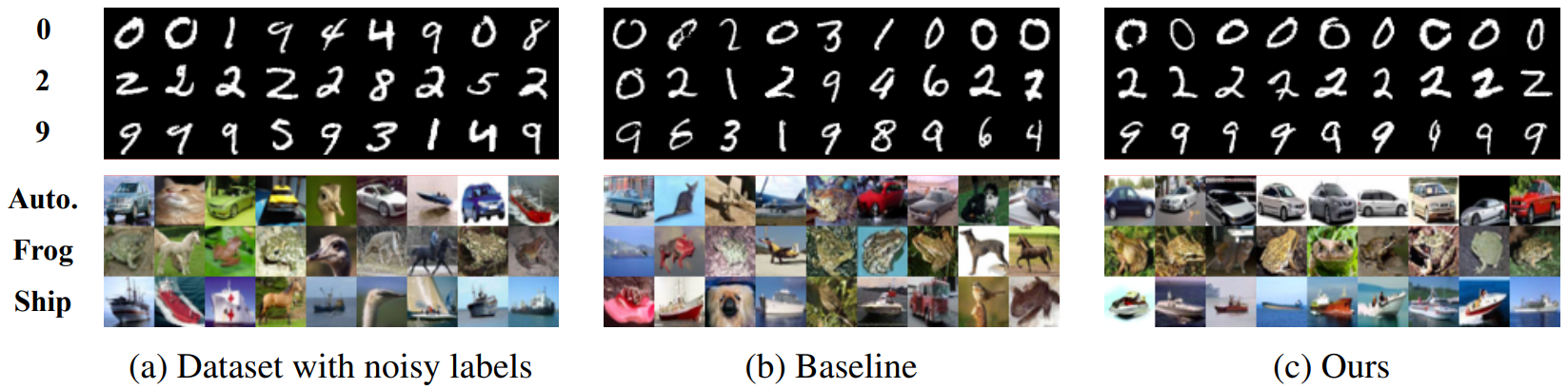

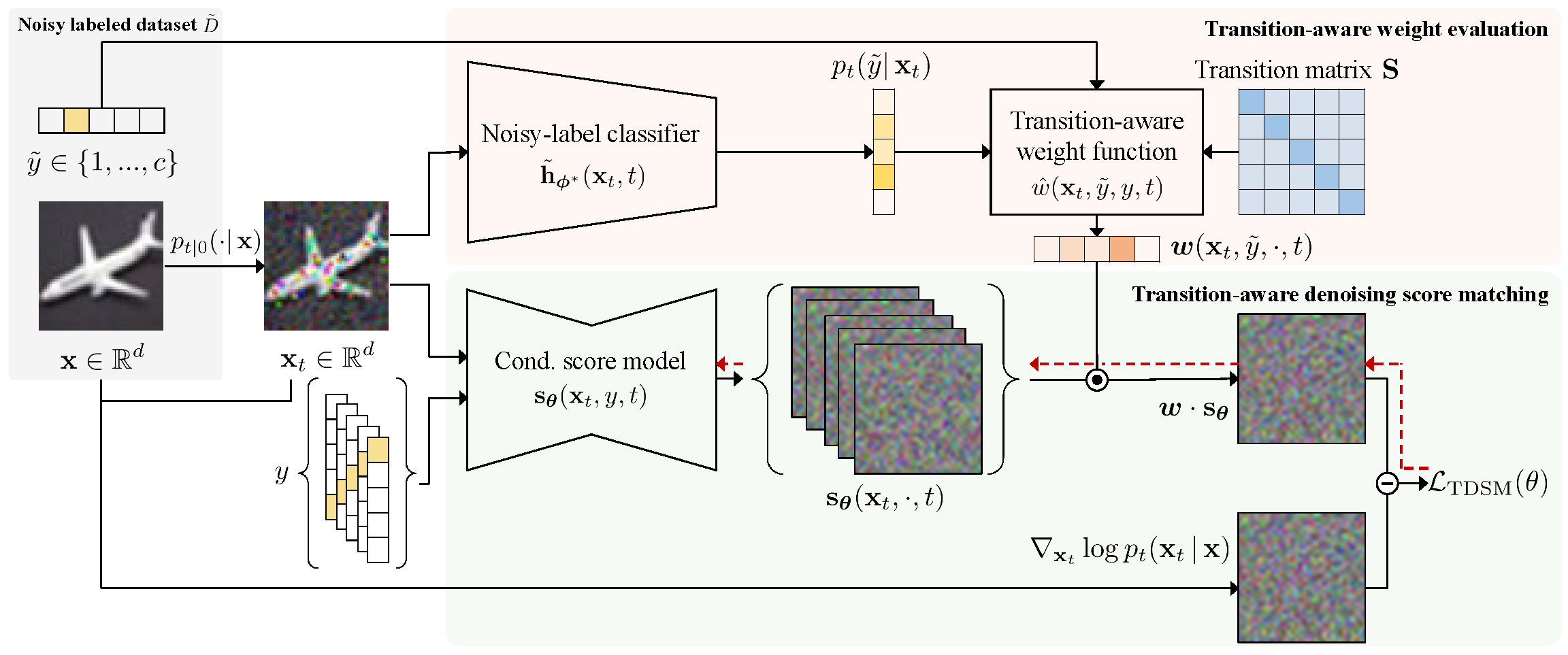

This paper proposes the Transition-aware weighted Denoising Score Matching (TDSM) objective for training conditional diffusion models with noisy labels.
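For intuition about the word "transition", the sketch below builds a label transition matrix for symmetric noise, where entry `S[i][j]` is the probability that clean class `i` is observed as noisy class `j`. The function name and the convention used (a corrupted label moves uniformly to one of the other classes) are assumptions for illustration. This is not the TDSM objective itself, and the code in this repo may define the matrix differently.

```python
def symmetric_transition_matrix(num_classes, noise_rate):
    """S[i][j] = assumed probability that clean class i is observed as noisy class j."""
    # Assumption: a corrupted label is spread uniformly over the other classes.
    off_diagonal = noise_rate / (num_classes - 1)
    return [
        [1.0 - noise_rate if i == j else off_diagonal for j in range(num_classes)]
        for i in range(num_classes)
    ]


# Example: CIFAR-10 (10 classes) under 40% symmetric noise.
S = symmetric_transition_matrix(num_classes=10, noise_rate=0.4)
row_sums = [sum(row) for row in S]  # each row is a probability distribution
print(S[0][0], S[0][1])
```

Each row sums to 1, so a row can be read as the distribution of the noisy label given the clean label.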

The requirements for this code are the same as those outlined for EDM.

In our experiments, we trained on 8 NVIDIA Tesla P40 GPUs with CUDA 11.4 and PyTorch 1.12.

Datasets follow the same format used in StyleGAN and EDM: they are stored as uncompressed ZIP archives containing uncompressed PNG files, accompanied by a metadata file dataset.json for label information.
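To inspect the labels stored in such an archive, a minimal sketch is shown below. It assumes dataset.json has the layout `{"labels": [[filename, class_index], ...]}`, which is the convention we understand StyleGAN and EDM to use. The helper names and the toy file names are hypothetical and are not part of this repo.

```python
import io
import json
import zipfile


def read_labels(zip_path_or_file):
    """Return {image filename: class index} from dataset.json, or {} if unlabeled."""
    with zipfile.ZipFile(zip_path_or_file) as archive:
        with archive.open("dataset.json") as f:
            meta = json.load(f)
    labels = meta.get("labels")  # assumed key, following the EDM convention
    if labels is None:
        return {}
    return {fname: int(label) for fname, label in labels}


def make_toy_archive(labels):
    """Build an in-memory archive with the assumed layout (image bytes are placeholders)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_STORED) as archive:
        for fname, _ in labels:
            archive.writestr(fname, b"")  # placeholder, not a real PNG
        archive.writestr("dataset.json", json.dumps({"labels": labels}))
    buffer.seek(0)
    return buffer


# Toy example; with a real dataset, pass a path such as
# "datasets/cifar10_sym_40-32x32.zip" to read_labels instead.
toy = make_toy_archive([["00000/img00000000.png", 6], ["00000/img00000001.png", 9]])
toy_labels = read_labels(toy)
print(toy_labels)
```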

Noisy Labeled Dataset

For the benchmark datasets, we add arguments to adjust the noise type and noise rate.

You can change --noise_type ('sym', 'asym') and --noise_rate (0 to 1).
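As a rough illustration of what a symmetric noise rate means, the sketch below corrupts a list of labels. It assumes that each label is flipped with probability `noise_rate` to a different class chosen uniformly at random. This is one common convention and not necessarily the exact procedure in dataset_tool.py; the function name is hypothetical. Asymmetric noise depends on a class-to-class mapping that is not described here, so it is not sketched.

```python
import random


def add_symmetric_noise(labels, num_classes, noise_rate, seed=0):
    """Flip each label with probability noise_rate to a uniformly chosen other class."""
    rng = random.Random(seed)  # local generator so results are reproducible
    noisy = []
    for y in labels:
        if rng.random() < noise_rate:
            others = [c for c in range(num_classes) if c != y]
            noisy.append(rng.choice(others))
        else:
            noisy.append(y)
    return noisy


# 10,000 balanced toy labels over 10 classes, corrupted at a 40% rate.
clean = [i % 10 for i in range(10000)]
noisy = add_symmetric_noise(clean, num_classes=10, noise_rate=0.4)
flipped = sum(c != n for c, n in zip(clean, noisy)) / len(clean)
print(f"fraction of flipped labels: {flipped:.3f}")
```

The printed fraction should be close to the requested noise rate, since under this convention a flipped label never lands back on the original class.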

For example, the script to construct the CIFAR-10 dataset under 40% symmetric noise is:

    python dataset_tool.py --source=downloads/cifar10/cifar-10-python.tar.gz \
        --dest=datasets/cifar10_sym_40-32x32.zip --noise_type=sym --noise_rate=0.4

Additionally, we provide the noisy labeled datasets that we used at this link.

First download each dataset ZIP archive, then replace the dataset.json file in the ZIP archive with the corresponding JSON file.
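One way to do the replacement is to copy the archive entry by entry and write the new metadata in place of the old one, since Python's `zipfile` module cannot overwrite an entry in place. The sketch below is a minimal example under that approach; the function name is hypothetical, and the demo uses small in-memory archives with placeholder contents instead of a real dataset.

```python
import io
import json
import zipfile


def replace_dataset_json(src, dst, new_json_text):
    """Copy every entry of `src` into `dst`, swapping in a new dataset.json."""
    with zipfile.ZipFile(src) as zin, \
         zipfile.ZipFile(dst, "w", compression=zipfile.ZIP_STORED) as zout:
        for item in zin.infolist():
            if item.filename == "dataset.json":
                continue  # skip the old metadata
            # Writing by name uses the archive default (ZIP_STORED, uncompressed).
            zout.writestr(item.filename, zin.read(item.filename))
        zout.writestr("dataset.json", new_json_text)


# Demo on an in-memory archive; with real files, pass paths for src and dst.
src = io.BytesIO()
with zipfile.ZipFile(src, "w", compression=zipfile.ZIP_STORED) as z:
    z.writestr("00000/img00000000.png", b"placeholder")
    z.writestr("dataset.json", json.dumps({"labels": [["00000/img00000000.png", 3]]}))
src.seek(0)

dst = io.BytesIO()
replace_dataset_json(src, dst, json.dumps({"labels": [["00000/img00000000.png", 5]]}))
dst.seek(0)

with zipfile.ZipFile(dst) as z:
    updated = json.loads(z.read("dataset.json"))
    names = z.namelist()
print(updated)
```

Writing to a new archive and keeping the original untouched also makes it easy to switch back to the original labels.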

You can train new classifiers using train_classifier.py. For example:

    torchrun --standalone --nproc_per_node=1 train_classifier.py --outdir=classifier-runs \
        --data=datasets/cifar10_sym_40-32x32.zip --cond=1 --arch=ddpmpp --batch 1024

You can train the diffusion models with the TDSM objective using train_noise.py. For example:

    torchrun --standalone --nproc_per_node=8 train_noise.py --outdir=noise-runs \
        --data=datasets/cifar10_sym_40-32x32.zip --cond=1 --arch=ddpmpp \
        --cls=/path/to/classifier/network-snapshot-200000.pkl \
        --noise_type=sym --noise_rate=0.4

We provide pre-trained classifiers, baselines, and our models trained on noisy labeled datasets at this link.

You can generate samples using generate.py. For example:

    python generate.py --seeds=0-63 --steps=18 --class=0 --outdir=/path/to/output \
        --network=/path/to/score

This work is heavily built upon the code from:

    @inproceedings{
    na2024labelnoise,
    title={Label-Noise Robust Diffusion Models},
    author={Byeonghu Na and Yeongmin Kim and HeeSun Bae and Jung Hyun Lee and Se Jung Kwon and Wanmo Kang and Il-Chul Moon},
    booktitle={The Twelfth International Conference on Learning Representations},
    year={2024},
    url={https://openreview.net/forum?id=HXWTXXtHNl}
    }