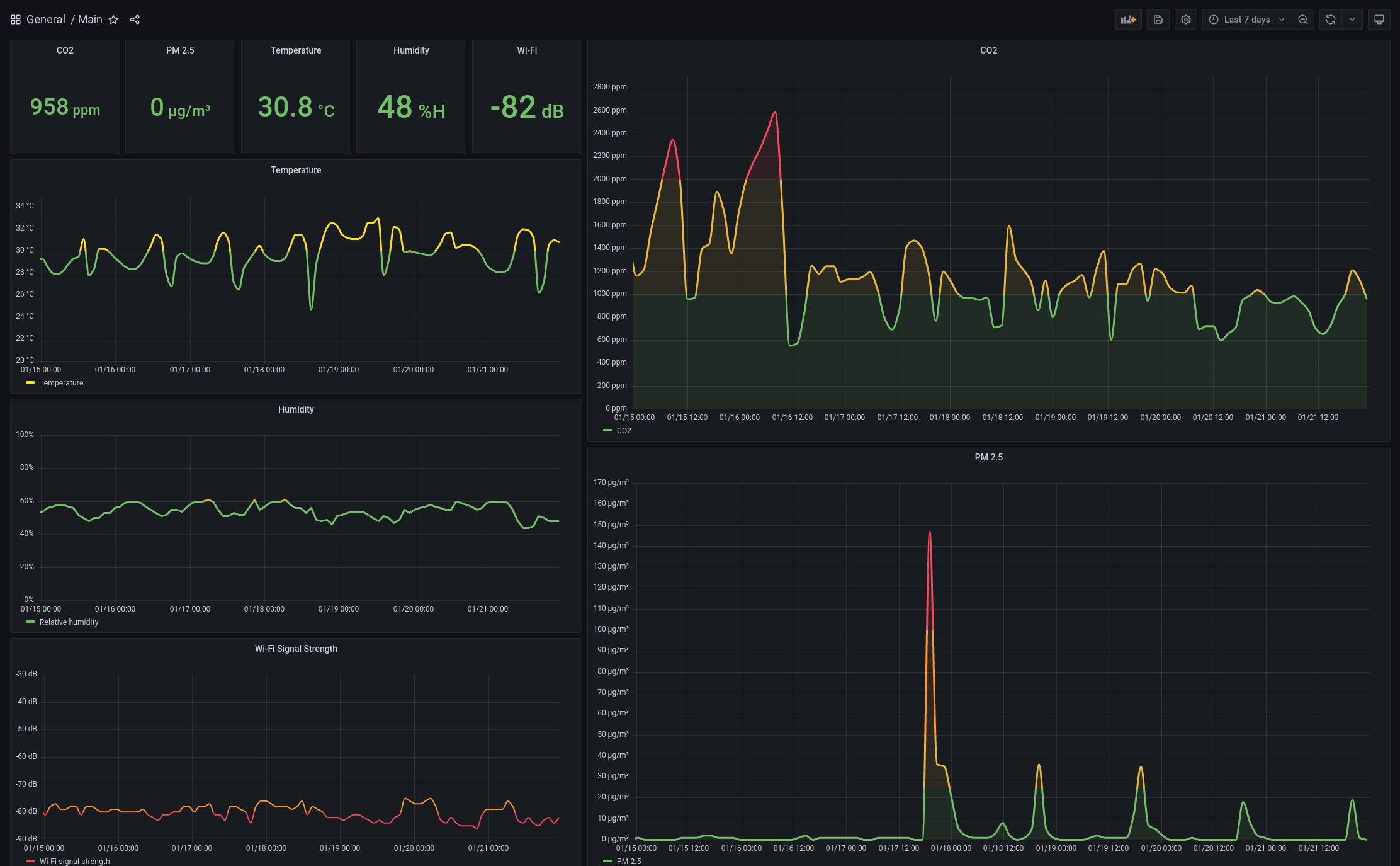

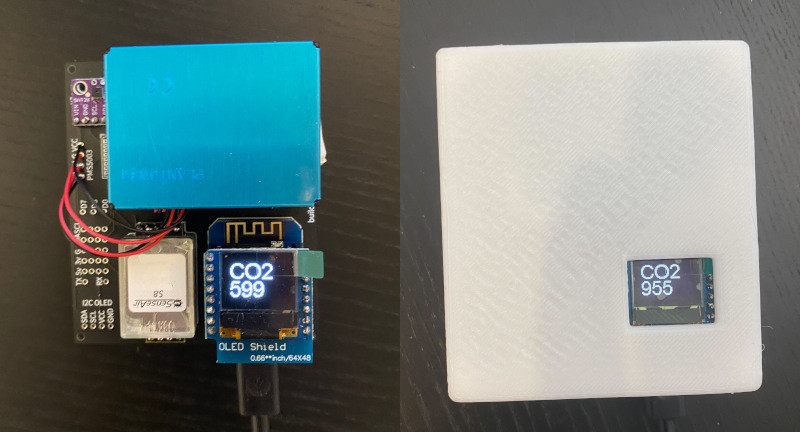

DIY Air Quality Monitor by AirGradient integrated with AWS IoT Core & Amazon Timestream, for display in Grafana.

Records & visualises carbon dioxide, particulate matter, temperature, and humidity.

Important

July 2024 Update: AWS will now charge for a 100GB minimum of magnetic storage

Effective July 10, 2024, Amazon Timestream has a minimum charge for magnetic store usage. All accounts using the service are billed for at least 100 GB of magnetic storage, equivalent to US$3/month in us-east-1. Refer to the pricing page for the prevailing magnetic store rates in your AWS region. If the magnetic store usage in your account already exceeds 100 GB, your billing does not change.

You can export your data from Timestream to S3 using the `UNLOAD` statement.
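As a rough sketch of what such an export could look like, the snippet below builds an `UNLOAD` query string. The database, table, and bucket names and the `daq/` prefix are placeholders, not values from this project, and the `aws` call is left commented out because it needs credentials with access to both Timestream and the target bucket.

```shell
# Placeholders (assumptions): replace with your own database, table, and bucket.
DATABASE_NAME="my_database"
TABLE_NAME="my_table"
BUCKET="my-export-bucket"

# Build an UNLOAD statement that exports a whole table as gzipped CSV.
unload_query() {
  printf "UNLOAD (SELECT * FROM \"%s\".\"%s\") TO 's3://%s/daq/' WITH (format = 'CSV', compression = 'GZIP')\n" "$1" "$2" "$3"
}

QUERY=$(unload_query "$DATABASE_NAME" "$TABLE_NAME" "$BUCKET")
echo "$QUERY"

# To run the export:
# aws timestream-query query --query-string "$QUERY"
```

Exporting before deleting a database is one way to keep historical data without paying the storage minimum.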

As of January 2022

The DIY kit can be purchased from AirGradient's shop for between US$46 and US$60 + shipping

AWS IoT Core + Amazon Timestream costs are estimated to be approximately the following:

| Service | Link | First month | With 10 years of data |
|---|---|---|---|
| AWS IoT Core | Pricing | US$0.08 | US$0.08 |
| Amazon Timestream | Pricing | US$0.22 | US$0.45 |

Assumptions:

- Running in `us-east-2`

- Limited querying scope and frequency

- Sending data using Basic Ingest

- 24h in-memory retention

Notes:

- Costs will vary depending on usage and prices are subject to change

- You may benefit from using scheduled queries depending on your usage patterns

- There is a 10MB (US$0.0001) minimum charge per query

- In the default configuration, a little more than 2MB is added to Timestream each day
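The figures above can be combined into a quick back-of-the-envelope check. This sketch uses only numbers quoted in this README (about 2 MB ingested per day, the 100 GB magnetic store minimum, and the 10 MB minimum metered per query); it assumes decimal units (1 GB = 1000 MB) and rounds the daily ingest down to exactly 2 MB, so treat the results as approximate.

```shell
#!/bin/sh
# Figures taken from this README; check the pricing page for current rates.
DAILY_MB=2          # "a little more than 2MB" per day, rounded down (assumption)
MIN_BILLED_GB=100   # magnetic store minimum since July 10, 2024

# GB stored after N days of ingest (decimal units: 1 GB = 1000 MB).
stored_gb() {
  awk -v mb="$DAILY_MB" -v days="$1" 'BEGIN { printf "%.2f\n", mb * days / 1000 }'
}

# GB billed per month: actual usage or the minimum, whichever is larger.
billed_gb() {
  awk -v gb="$1" -v min="$MIN_BILLED_GB" 'BEGIN { printf "%.2f\n", (gb > min) ? gb : min }'
}

# Bytes metered for one query: the bytes scanned, but never less than 10 MB.
metered_bytes() {
  awk -v b="$1" 'BEGIN { printf "%d\n", (b > 10000000) ? b : 10000000 }'
}

ten_years=$(stored_gb 3650)
echo "Stored after 10 years: ${ten_years} GB"
echo "Billed magnetic storage: $(billed_gb "$ten_years") GB"
echo "Metered for a 1870735-byte scan: $(metered_bytes 1870735) bytes"
```

Under these assumptions, ten years of data stays far below 100 GB, so the storage minimum rather than actual usage would determine the magnetic store charge.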

- WEMOS D1 mini (ESP8266)

- WEMOS D1 mini display (OLED 0.66 Shield)

- Plantower PMS5003

- Senseair S8

- SHT31

Follow the instructions on https://www.airgradient.com/diy/.

See also Jeff Geerling's video for tips.

AirGradient's client can be uploaded using the Arduino IDE.

This custom client uses PlatformIO to manage the toolchain for building and deployment.

In the client directory:

    pio run
    pio run -t upload

The OLED screen should begin displaying information.

    pio device monitor

Please note that Amazon Timestream is only supported in a few AWS regions. See the pricing page for details.

All the infrastructure can be deployed using AWS CloudFormation and the AWS CLI or AWS console.

Configure environment variables:

    export AWS_REGION=us-east-2 # ap-southeast-2 when :(
    export STACK_NAME=daq

Create the client certificates to connect to AWS IoT Core:

    aws iot create-keys-and-certificate \
      --certificate-pem-outfile "daq.cert.pem" \
      --public-key-outfile "daq.public.key" \
      --private-key-outfile "daq.private.key" > cert_outputs.json

Deploy the CloudFormation stack:

    aws cloudformation deploy \
      --template-file cloudformation.yaml \
      --capabilities CAPABILITY_IAM \
      --stack-name $STACK_NAME \
      --parameter-overrides MemoryRetentionHours=24 MagneticRetentionDays=3650

    aws cloudformation describe-stacks \
      --stack-name $STACK_NAME \
      --query 'Stacks[0].Outputs' > stack_outputs.json

Once the stack has been successfully deployed, configure the certificate.

    cat cert_outputs.json stack_outputs.json

Mark as active:

    aws iot update-certificate --certificate-id {CERTIFICATE_ID} --new-status ACTIVE

Attach Policy:

    aws iot attach-policy --policy-name {POLICY_NAME} --target {CERTIFICATE_ARN}

Attach Thing:

    aws iot attach-thing-principal --thing-name {THING_NAME} --principal {CERTIFICATE_ARN}
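Rather than copying the certificate values by hand, they can be read out of `cert_outputs.json`. The sketch below is one possible approach: `json_field` is a hypothetical helper, not part of this project, and it assumes the AWS CLI's default pretty-printed JSON with one key per line. A real JSON parser such as `jq` would be more robust. The policy and Thing names still have to be taken from `stack_outputs.json`, since their output keys depend on the template.

```shell
# Hypothetical helper: print the first top-level string value for a key
# in pretty-printed JSON (one "key": "value" pair per line).
json_field() {
  sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p" "$2" | head -n 1
}

if [ -f cert_outputs.json ]; then
  CERTIFICATE_ID=$(json_field certificateId cert_outputs.json)
  CERTIFICATE_ARN=$(json_field certificateArn cert_outputs.json)
  echo "CERTIFICATE_ID=$CERTIFICATE_ID"
  echo "CERTIFICATE_ARN=$CERTIFICATE_ARN"
fi
```

The resulting variables can then be substituted for `{CERTIFICATE_ID}` and `{CERTIFICATE_ARN}` in the commands above.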

In client/include/config.h, update the following:

- Set `THING_NAME` to the name of the created Thing (e.g. `daq-Thing-L5KRHBFJORP4`)
- Set `ROOM` to the human-readable identifier of the room the client is housed in
- Set `ENABLE_WIFI` to `true`
- Set `TIME_ZONE` to your timezone
- Set `AWS_TOPIC` to the created topic rule name with the included prefix (e.g. `$aws/rules/TopicRule_0yKDHTposnt5`)
- Set `AWS_IOT_ENDPOINT` to your AWS IoT Core endpoint (`aws iot describe-endpoint --endpoint-type iot:Data-ATS`)
- Set `AWS_CERT_CA` to the value of `AWSRootCA1.pem`
- Set `AWS_CERT_DEVICE` to the contents of the created `daq.cert.pem` file
- Set `AWS_CERT_PRIVATE` to the contents of the created `daq.private.key` file

Then upload the changes:

    cd client
    pio run -t upload

Connect to the Wi-Fi network beginning with DAQ-, configure the network connection, and reset.

See WiFiManager for more details.

Once the configuration is complete, confirm that the device is sending data to AWS:

    $ pio device monitor
    *wm:[1] AutoConnect
    *wm:[2] Connecting as wifi client...
    *wm:[2] setSTAConfig static ip not set, skipping
    *wm:[1] connectTimeout not set, ESP waitForConnectResult...
    *wm:[2] Connection result: WL_CONNECTED
    *wm:[1] AutoConnect: SUCCESS
    Connecting to server
    Connected!
    {"device_id":"abb44a","room":"office1","wifi":-71,"pm2":0,"co2":733,"tmp":25.60000038,"hmd":53}
    {"device_id":"abb44a","room":"office1","wifi":-72,"pm2":0,"co2":735,"tmp":25.60000038,"hmd":53}
    {"device_id":"abb44a","room":"office1","wifi":-72,"pm2":0,"co2":739,"tmp":25.60000038,"hmd":53}

    $ aws timestream-query query --query-string 'SELECT * FROM "{DATABASE_NAME}"."{TABLE_NAME}" order by time desc LIMIT 10'
    {
        "Rows": [...],
        "ColumnInfo": [
            {
                "Name": "device_id",
                "Type": {
                    "ScalarType": "VARCHAR"
                }
            },
            {
                "Name": "room",
                "Type": {
                    "ScalarType": "VARCHAR"
                }
            },
            {
                "Name": "measure_name",
                "Type": {
                    "ScalarType": "VARCHAR"
                }
            },
            {
                "Name": "time",
                "Type": {
                    "ScalarType": "TIMESTAMP"
                }
            },
            {
                "Name": "measure_value::bigint",
                "Type": {
                    "ScalarType": "BIGINT"
                }
            },
            {
                "Name": "measure_value::double",
                "Type": {
                    "ScalarType": "DOUBLE"
                }
            }
        ],
        "QueryStatus": {
            "ProgressPercentage": 100.0,
            "CumulativeBytesScanned": 1870735,
            "CumulativeBytesMetered": 10000000
        }
    }
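For a narrower check than `SELECT *`, a query can filter on one measure and a recent time window. The sketch below builds such a query string using the columns shown in the output above. It assumes the measure names match the payload keys (for example `co2`), which depends on how the topic rule maps the payload; `recent_query` is a hypothetical helper, and the `aws` call is commented out because it needs credentials and real database and table names.

```shell
# Hypothetical helper: build a query for one measure over a recent time window.
# Arguments: database, table, measure name, window (e.g. 1h, 15m).
recent_query() {
  printf "SELECT time, room, measure_name, \"measure_value::bigint\", \"measure_value::double\" FROM \"%s\".\"%s\" WHERE measure_name = '%s' AND time > ago(%s) ORDER BY time DESC\n" "$1" "$2" "$3" "$4"
}

# Placeholder names (assumptions); substitute your own database and table.
QUERY=$(recent_query "my_database" "my_table" "co2" "1h")
echo "$QUERY"

# To run it:
# aws timestream-query query --query-string "$QUERY"
```

Both value columns are selected so the query works whether the measure was stored as a bigint or a double.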

See the installation instructions for Grafana.

Add an Amazon Timestream Data Source:

- In `Configuration` -> `Plugins`, install the `Amazon Timestream` plugin
- In `Configuration` -> `Data Sources`, click `Add data source` -> `Amazon Timestream`
- Pick your preferred method of creating AWS credentials
  - Create an IAM User and attach the `AmazonTimestreamReadOnlyAccess` policy

        aws iam create-user --user-name GrafanaTimestreamQuery
        aws iam attach-user-policy --user-name GrafanaTimestreamQuery --policy-arn "arn:aws:iam::aws:policy/AmazonTimestreamReadOnlyAccess"
        aws iam create-access-key --user-name GrafanaTimestreamQuery

  - Use your root credentials and in the `Assume Role ARN` field, enter the ARN of the `QueryRole` role in `stack_outputs.json`
  - Use your root credentials
- In the `Authentication Provider` field, choose either:
  - `Access & secret key` and enter the keys directly.
  - `AWS SDK Default` or `Credentials file` and configure your AWS credentials through the filesystem or env variables
- In the `Default Region` field, enter the AWS Region
- Click `Save & test`

Import the default dashboard:

- In `Create` -> `Import`, click `Upload JSON file` and select `dashboard.json`
- Change the value of `Amazon Timestream` to your Amazon Timestream Data Source
- Change the value of `Database` to the name of your database
- Change the value of `Table` to the name of your table
- Click `Import`

Thanks to AirGradient for creating this awesome DIY kit, providing the example code, and the Gerber + STL files.

Thanks to Jeff Geerling for the video and commentary.