~ Dragon! Sound effects for board games

» By Joren on Friday 01 September 2023

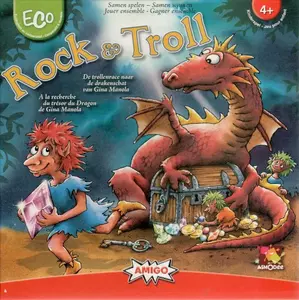

Fig: Rock & Troll collaborative board game.

I often play board games with my kids. One of them is an absolute board game fan while the other is a sore loser and only wants to play collaborative games. These games are played ‘against the board’ and you win, or lose, together. I myself also still have problems losing games so I do understand this predicament. Genetics…

Rock & Troll is one of those games. It is a chance-based game where you collaboratively try to build a path to a treasure before the dragon reaches it. Every player has to flip a tile which is either a part of the path (good) or a dragon (very bad). To increase engagement during play I often add sound effects. I was thinking: this can be improved and automated. For example, by doing this when a dragon tile is flipped:

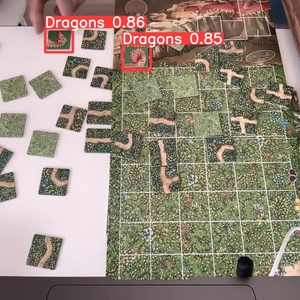

The idea is to unobtrusively detect the game state and add sound effects at critical moments. The sound effect should play without too much lag, ideally within about 200 ms, so it feels immediate and connected to the game event. To implement this, a camera-based system with robust, fast object detection seemed like the way to go.

Dragon detection

To detect dragons in a video stream I want to retrain an existing object-detection system. Two things need to happen: first a realistic, labeled dataset needs to be created, then a system needs to be trained to detect the dragons. I do not want to label a massive dataset, so I use transfer learning to retrain an existing network. This existing network should already have learned basic features like edges, colors, geometries and other basic patterns. The hope is that this results in robust detection, even with a limited dataset.

To create the dataset I wrote a small script which took a webcam picture every few seconds while I was manipulating the board and tiles. This resulted in about 130 pictures, some with no dragons and some with six, for 300 labels in total. For annotating the dataset I used the free Roboflow web app, which also hosts the final dragon dataset. With augmentation, the size of the dataset can be tripled. The command to grab an image from a webcam looks like this on my system:

ffmpeg -y -r 30 -f avfoundation -i '0' -frames:v 1 snapshot.jpg
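
The capture script itself is not shown here, so the following is only a minimal sketch of what such a loop could look like. It assumes ffmpeg is on the PATH and simply repeats the command above with a numbered output file. The function names, the `dataset` directory, the file naming and the three-second interval are illustrative assumptions, not the original script.

```python
import subprocess
import time
from pathlib import Path


def snapshot_command(out_path, device="0"):
    """Build the ffmpeg command from the post, writing to out_path."""
    return ["ffmpeg", "-y", "-r", "30", "-f", "avfoundation",
            "-i", device, "-frames:v", "1", str(out_path)]


def capture_dataset(out_dir="dataset", count=130, interval_s=3.0,
                    run=subprocess.run):
    """Take `count` snapshots, pausing `interval_s` seconds between them.

    `run` is injectable so the loop can be exercised without a camera.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for i in range(count):
        path = out / f"snapshot_{i:04d}.jpg"  # hypothetical naming scheme
        run(snapshot_command(path), check=True)
        paths.append(path)
        if i < count - 1:
            time.sleep(interval_s)  # time to move tiles between shots
    return paths
```

Calling `capture_dataset()` would then fill the `dataset` directory with numbered snapshots, ready to upload for annotation. The `avfoundation` input is macOS specific, so on other systems the input format would have to change.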

After considering some alternatives I landed on YOLOv8: a robust and fast object-detection system. Additionally, it is well documented, PyTorch-based, easy to use and it supports video streams. The annotated Roboflow dataset can be downloaded in a YOLOv8-compatible format as well. Transfer learning was based on the yolov8s.pt weights, which are downloaded automatically. With the system installed correctly and the dataset downloaded, a local GPU-based training command might look like this:

yolo train data=RocknTroll.v3i.yolov8/data.yaml epochs=30 model=yolov8s.pt device=mps imgsz=640 batch=32

Once the system was trained (download the model weights here), a bit of glue code is needed. The Python script needs to stream images from a camera, here via OpenCV, and detect dragons in each image. Every time a new dragon is found, the sound effect is played. Note that the Roboflow website automatically trains a model as well, which can be tried out with a webcam.
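
A minimal sketch of such glue code follows. It is not the original script. It assumes the `ultralytics` and `opencv-python` packages are installed, that the trained weights are saved as `best.pt`, that a sound file called `dragon.wav` exists, and that the macOS `afplay` command is used for playback. All of these names are assumptions. A "new dragon" is taken here to mean that the number of detections rises above the highest count seen so far.

```python
import subprocess


def new_dragons(previous_max, detected):
    """Return (newly seen dragons, updated maximum count)."""
    added = max(0, detected - previous_max)
    return added, previous_max + added


def play_sound(path="dragon.wav"):
    # afplay ships with macOS; another player is needed on other systems.
    # Popen does not block, so the camera loop keeps running during playback.
    subprocess.Popen(["afplay", path])


def run(weights="best.pt", camera_index=0, min_conf=0.5):
    # Imported here so the helpers above work without the heavy dependencies.
    import cv2
    from ultralytics import YOLO

    model = YOLO(weights)
    cap = cv2.VideoCapture(camera_index)
    seen = 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break  # camera unplugged or stream ended
            result = model.predict(frame, conf=min_conf, verbose=False)[0]
            added, seen = new_dragons(seen, len(result.boxes))
            if added:
                play_sound()
    finally:
        cap.release()
```

Calling `run()` starts the loop. The confidence threshold of 0.5 is a guess and would need tuning against the real board and lighting.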

There are a few ways to improve the robustness of the system. During a game the number of dragons only increases: if the script detects fewer dragons than before, it is probably a false negative or there is occlusion. Additionally, the dragon tiles remain in the same location once they are placed on the board. This means that new dragons are expected only in certain regions of the image. Both heuristics can be used together to improve robustness.
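
One possible way to combine both heuristics is sketched below, in plain Python. The class name, the 40 pixel radius and the optional rectangular region are illustrative assumptions. The tracker remembers the center of every dragon it has seen and never forgets one, so a missed detection or a hand over the board does not lower the count. A detection only counts as new when it lies inside the allowed region and is not close to a dragon that is already known.

```python
from math import hypot


class DragonTracker:
    """Remembers where dragons were seen; known dragons are never dropped."""

    def __init__(self, same_tile_radius=40.0, allowed_region=None):
        self.same_tile_radius = same_tile_radius
        # Optional (x_min, y_min, x_max, y_max) box in pixels.
        self.allowed_region = allowed_region
        self.known = []

    def _in_region(self, center):
        if self.allowed_region is None:
            return True
        x_min, y_min, x_max, y_max = self.allowed_region
        return x_min <= center[0] <= x_max and y_min <= center[1] <= y_max

    def _is_known(self, center):
        return any(
            hypot(center[0] - kx, center[1] - ky) <= self.same_tile_radius
            for kx, ky in self.known
        )

    def update(self, centers):
        """Feed the detection centers of one frame; return the new dragons."""
        fresh = []
        for center in centers:
            if self._in_region(center) and not self._is_known(center):
                self.known.append(center)
                fresh.append(center)
        return fresh
```

With Ultralytics results the centers could be taken from the first two columns of `result.boxes.xywh`, and the sound effect would play whenever `update` returns a non-empty list. This sketch assumes the camera does not move during the game.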

Notes

One of the reasons I bought an M1 Mac with unified memory is exactly this type of AI application. After installing PyTorch 2.0, GPU acceleration resulted in a 10x training speed improvement. Training on a GeForce GTX 1080 from 2016 was still quite a bit faster, probably thanks to years of performance tuning targeting CUDA. It is clear that the Mac GPU acceleration software ecosystem needs more work. Even the system tools in macOS are limited: in Activity Monitor, for example, GPU activity is very much an afterthought.

I am also hesitant to use cloud-based GPU computing due to the lack of control and privacy. I am not willing to send pictures of my kids to e.g. Google Cloud GPUs. The dependency on other people's hardware might also limit the longevity of systems.

The ease of use, performance and accessibility of these deep-learning systems are great. Only a couple of years ago it would have taken months of hard work to maybe approach similar detection performance. Adapting this idea to other board games, more types of tiles or other board game events should be very possible.