CN114841998A - Artificial intelligence-based packaging printing abnormity monitoring method - Google Patents

Artificial intelligence-based packaging printing abnormity monitoring method

- Publication number

- CN114841998A (application number CN202210763023.2A)

- Authority

- CN

- China

- Prior art keywords

- image

- window

- pixel

- rgbd

- images

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted


Classifications

- G06T7/0004: Industrial image inspection

- G06F18/241: Classification techniques relating to the classification model, e.g. parametric or non-parametric approaches

- G06T5/50: Image enhancement or restoration using two or more images, e.g. averaging or subtraction

- G06T7/11: Region-based segmentation

- G06T7/136: Segmentation; Edge detection involving thresholding

- G06T7/194: Segmentation; Edge detection involving foreground-background segmentation

- G06V10/764: Arrangements for image or video recognition or understanding using pattern recognition or machine learning using classification, e.g. of video objects

- G06T2207/10024: Color image

- G06T2207/20081: Training; Learning

- G06T2207/20221: Image fusion; Image merging

- G06T2207/30144: Printing quality

- Y02P90/30: Computing systems specially adapted for manufacturing


Abstract

The invention relates to the technical field of data identification and processing, and in particular to an artificial intelligence-based method for monitoring packaging printing anomalies. Surface RGBD images of the product are acquired under different set illumination angles and subjected to data identification and processing, so that the surface anomaly points of the packaging printed product can be identified accurately. This effectively addresses the inaccurate detection results that arise when the printing quality of packaging printed products is inspected manually.

Description

Technical Field

The invention relates to the technical field of data identification and processing, and in particular to an artificial intelligence-based method for monitoring packaging printing anomalies.

Background

As aesthetic expectations rise, more and more packaging printed products are required not only to have an attractive planar design but also to show embossed texture and luster. To meet these requirements, such products usually undergo finishing processes such as gold stamping, embossing, UV (ultraviolet) finishing and die cutting after printing. Because high-quality packaging printing is an important means of raising the added value of goods and enhancing their competitiveness, the printing quality of packaging printed products needs to be monitored.

At present, quality monitoring of packaging printed products usually relies on manual visual inspection. Manual inspection is highly subjective, prone to fatigue and easily disturbed by illumination, which leads to low detection speed and inaccurate detection results.

Disclosure of Invention

The invention aims to provide an artificial intelligence-based method for monitoring packaging printing anomalies, in order to solve the problem of inaccurate detection results caused by manual inspection of the printing quality of packaging printed products.

To solve this technical problem, the invention provides an artificial intelligence-based method for monitoring packaging printing anomalies, comprising the following steps:

acquiring surface RGBD images of a packaging printed product to be monitored and of a standard packaging printed product, each under n different set illumination angles, and preprocessing them to obtain 2n preprocessed surface RGBD images;

inputting the 2n preprocessed surface RGBD images into a package printing anomaly monitoring network, wherein an embedding layer of the network performs data identification on the 2n preprocessed surface RGBD images, determines a merged image corresponding to the product to be monitored and the standard product, and sends the merged image to an attention block layer of the network;

the attention block layer comprises a plurality of attention blocks connected in sequence; each attention block forwards the merged image received from the embedding layer or from the previous attention block to a windowing pyramid of the network; the windowing pyramid partitions the merged image into windows according to the image format of that attention block and returns the resulting window images to it; each attention block performs data processing on the window images it receives to obtain a processed merged image, which it sends to the next attention block, and the last attention block sends the processed merged image to a classifier of the network;

the classifier receives the processed merged image from the last attention block and determines from it an abnormal pixel binary image of the packaging printed product to be monitored;

and determining surface anomaly points of the packaging printed product to be monitored according to the abnormal pixel binary image.

Further, the step of determining the merged image corresponding to the packaging printed product to be monitored and the standard packaging printed product comprises:

determining the pixel components of each pixel point in the n preprocessed surface RGBD images of the packaging printed product to be monitored;

for each position, concatenating the pixel components of the n pixel points at that position in the n preprocessed surface RGBD images of the product to be monitored, to obtain the color variation vector of the product to be monitored at that position;

determining the pixel components of each pixel point in the n preprocessed surface RGBD images of the standard packaging printed product;

for each position, concatenating the pixel components of the n pixel points at that position in the n preprocessed surface RGBD images of the standard product, to obtain the color variation vector of the standard product at that position;

for each position, concatenating the color variation vector of the product to be monitored, the color variation vector of the standard product, and an initialized classification vector for that position, to obtain the pixel vector for that position across the 2n preprocessed surface RGBD images;

and constructing the merged image corresponding to the product to be monitored and the standard product, wherein the pixel value of each pixel point in the merged image is the pixel vector for the same position in the 2n preprocessed surface RGBD images.
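The concatenation described above can be sketched as follows. This is only an illustration under stated assumptions: images are nested Python lists of shape height x width x 4 (R, G, B, D), the function name `build_merged_image` is hypothetical, and the length and initial value of the classification vector (`cls_len` zeros) are assumptions, since the text does not specify them.

```python
def build_merged_image(monitored, standard, cls_len=2):
    """Build one pixel vector per position from 2n RGBD images.

    monitored, standard: lists of n images, each a nested list
    [height][width][4] holding the R, G, B and D components.
    cls_len: assumed length of the initialized classification vector.
    """
    if len(monitored) != len(standard):
        raise ValueError("both products need the same number of angles n")
    height, width = len(monitored[0]), len(monitored[0][0])
    merged = []
    for y in range(height):
        row = []
        for x in range(width):
            # Color variation vector: components of the n pixels at (y, x).
            var_monitored = [c for img in monitored for c in img[y][x]]
            var_standard = [c for img in standard for c in img[y][x]]
            # Pixel vector: monitored + standard + classification vector.
            row.append(var_monitored + var_standard + [0.0] * cls_len)
        merged.append(row)
    return merged
```

With n illumination angles, each pixel vector in this sketch has 4n + 4n + `cls_len` entries.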

Further, the attention block layer of the package printing anomaly monitoring network comprises four attention blocks connected in sequence, and the image formats corresponding to the four attention blocks are as follows: the number of windows corresponding to the first attention block is 1, the number of windows corresponding to the second attention block is 4, the number of windows corresponding to the third attention block is 16, the number of windows corresponding to the fourth attention block is 64, and the number of cells in each window corresponding to the four attention blocks is the same.

Further, the number of cells in each window for the four attention blocks is calculated as:

$$N = \frac{H \times W}{64}$$

where N is the number of cells in each window for the four attention blocks, and H and W are the two dimensions of the resolution of the merged image.
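The window counts and the constant cell count can be checked with a short sketch. It assumes, as the detailed description states, that the cells of the fourth attention block are single pixels and that windows and cells are both bisected horizontally and vertically from one block to the next; the cell side lengths 8, 4, 2, 1 are inferred from that, and the function name `window_layout` is hypothetical.

```python
def window_layout(height, width):
    """Return the window and cell layout for the four attention blocks."""
    if height % 64 or width % 64:
        raise ValueError("both dimensions must be multiples of 64")
    layouts = []
    for level in range(4):
        grid = 2 ** level            # windows per side: 1, 2, 4, 8
        cell_side = 8 // grid        # cell side in pixels: 8, 4, 2, 1
        win_h, win_w = height // grid, width // grid
        cells = (win_h // cell_side) * (win_w // cell_side)
        layouts.append({
            "block": level + 1,
            "windows": grid * grid,
            "cell_side": cell_side,
            "cells_per_window": cells,
        })
    return layouts
```

For a 128 x 64 image, every block in this sketch ends up with 128 cells per window, i.e. the product of the two dimensions divided by 64.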

Further, each attention block performs data processing on each window image received by the attention block, including:

determining the illumination sensitivity corresponding to each window image according to the pixel value of each pixel point in each window image;

acquiring binary images corresponding to m platemaking files corresponding to a packaging printing product to be monitored, and determining binary sub-images corresponding to each window image in the binary images corresponding to the m platemaking files;

determining the process complexity corresponding to each window image according to the number of foreground pixel points of each window image in the binary sub-image corresponding to the binary image corresponding to the m plate-making files;

acquiring target surface RGBD images in n preprocessed surface RGBD images corresponding to a standard packaging printing product, and determining corresponding target surface RGBD sub-images of the window images in the target surface RGBD images by combining the window images;

determining the texture complexity corresponding to each window image according to the corresponding target surface RGBD sub-image of each window image in the target surface RGBD image;

determining the number of attention heads for each window image according to its illumination sensitivity, process complexity and texture complexity;

and each attention block performing data processing on each window image using the number of attention heads determined for that window image.

Further, the step of determining the illumination sensitivity corresponding to each window image comprises:

calculating, from the pixel values of the pixel points in each window image, the average pixel value of a set number of randomly selected pixel points in that window image, thereby obtaining the average pixel value of each window image;

determining the minimum RGB value and the maximum RGB value contained in the average pixel value of each window image;

and calculating the Euclidean distance between the minimum RGB value and the maximum RGB value of each window image, thereby obtaining the illumination sensitivity of that window image.
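A minimal sketch of these three steps follows. Several points are assumptions rather than part of the text: each pixel vector is taken to start with the 4n RGBD components of the product to be monitored (one RGBD group per illumination angle), the "minimum" and "maximum" RGB values are chosen by the sum of the three channels, and the function name `illumination_sensitivity` is hypothetical.

```python
import math
import random


def illumination_sensitivity(window_pixels, n_angles, sample_size, rng=None):
    """Euclidean distance between the darkest and brightest mean RGB.

    window_pixels: list of pixel vectors of one window image.
    n_angles: number n of illumination angles.
    sample_size: set number of randomly selected pixel points.
    """
    rng = rng or random.Random(0)
    count = min(sample_size, len(window_pixels))
    sample = rng.sample(window_pixels, count)
    mean_rgb = []
    for k in range(n_angles):
        base = 4 * k  # assumed layout: R, G, B, D for angle k
        mean_rgb.append(tuple(
            sum(p[base + c] for p in sample) / count for c in range(3)
        ))
    # Assumption: order RGB triples by total brightness (channel sum).
    darkest = min(mean_rgb, key=sum)
    brightest = max(mean_rgb, key=sum)
    return math.dist(darkest, brightest)
```

A window whose mean color barely changes across angles yields a value near zero in this sketch, while a glossy region that shifts strongly with the light source yields a large value.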

Further, the step of determining the process complexity corresponding to each window image comprises:

for each window image, calculating, in each of the binary sub-images corresponding to that window image in the binary images of the m platemaking files, the ratio of the number of foreground pixel points to the total number of pixel points in that binary sub-image;

and counting, for each window image, the number of these ratios that exceed a set ratio threshold, thereby obtaining the process complexity of that window image.
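The counting rule can be illustrated with a few lines of Python. The sketch assumes each binary sub-image is a nested list in which non-zero entries are foreground; the function name `process_complexity` and the threshold value used in the example are hypothetical.

```python
def process_complexity(plate_subimages, ratio_threshold):
    """Count platemaking sub-images whose foreground ratio exceeds a threshold.

    plate_subimages: list of m binary sub-images (nested lists of 0/1),
    one per platemaking file, all covering the same window.
    """
    count = 0
    for sub in plate_subimages:
        total = sum(len(row) for row in sub)
        foreground = sum(1 for row in sub for value in row if value)
        if total and foreground / total > ratio_threshold:
            count += 1
    return count
```

For example, three sub-images with foreground ratios 0.5, 0.25 and 1.0 and a threshold of 0.3 give a process complexity of 2.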

Further, the step of determining the texture complexity corresponding to each window image includes:

determining, from the target surface RGBD sub-image corresponding to each window image in the target surface RGBD image, the depth map and the color map of that sub-image, and then the grayscale map corresponding to the color map;

determining, from the depth map and the grayscale map of each window image, the depth co-occurrence matrix of the depth map and the gray-level co-occurrence matrix of the grayscale map, and calculating the entropy of each matrix;

and calculating, for each window image, the sum of the entropy of its depth co-occurrence matrix and the entropy of its gray-level co-occurrence matrix, thereby obtaining the texture complexity of that window image.
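The entropy-based texture measure can be sketched as below. The text does not fix the co-occurrence offset, the quantization of gray and depth levels, or the logarithm base, so the sketch assumes a single horizontal neighbor offset, already-quantized integer levels, and base 2; the function names are hypothetical.

```python
import math
from collections import Counter


def cooccurrence_entropy(levels, dx=1, dy=0):
    """Entropy of the co-occurrence matrix of a quantized 2D map."""
    height, width = len(levels), len(levels[0])
    pairs = Counter()
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                pairs[(levels[y][x], levels[ny][nx])] += 1
    total = sum(pairs.values())
    if total == 0:
        return 0.0
    # Only non-zero matrix entries contribute to the entropy.
    return -sum((c / total) * math.log2(c / total) for c in pairs.values())


def texture_complexity(depth_levels, gray_levels):
    """Sum of the depth and gray-level co-occurrence entropies."""
    return cooccurrence_entropy(depth_levels) + cooccurrence_entropy(gray_levels)
```

A perfectly flat map contributes zero entropy here, so a flat depth map paired with a textured grayscale map is scored by the grayscale texture alone.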

Further, the number of attention heads for each window image is determined by a calculation formula whose quantities are as follows: the number of attention heads for each window image; the minimum number of attention heads; the illumination sensitivity, process complexity and texture complexity of each window image; the respective weights of the illumination sensitivity, process complexity and texture complexity; and a rounding function.

Further, the step of determining surface anomaly points of the packaged printed product to be monitored comprises:

taking, in the abnormal pixel binary image corresponding to the merged image, each pixel point whose pixel value is 0 as a surface anomaly point of the packaging printed product to be monitored.
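Reading the anomaly points off the binary image is a simple scan. The sketch below assumes the binary image is a nested list of 0/1 values and returns (row, column) coordinates; the function name `surface_anomaly_points` is hypothetical.

```python
def surface_anomaly_points(binary_image):
    """Return (row, column) coordinates of all pixels whose value is 0."""
    return [
        (y, x)
        for y, row in enumerate(binary_image)
        for x, value in enumerate(row)
        if value == 0
    ]
```

An empty result in this sketch means that no pixel of the product to be monitored was flagged.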

The invention has the following beneficial effects. Surface RGBD images of the packaging printed product to be monitored and of the standard packaging printed product are acquired under different set illumination angles and preprocessed, and the preprocessed images are input into a pre-constructed package printing anomaly monitoring network. The embedding layer of the network constructs the corresponding merged image, the attention block layer and the windowing pyramid of the network process the merged image, an abnormal pixel binary image is obtained, and the surface anomaly points of the product to be monitored are finally determined. When the merged image is constructed, the method takes into account both the color differences of the same point of the product to be monitored under different illumination angles and the differences between the surface RGBD images of the product to be monitored and those of the standard product under the different set illumination angles. The features of each point of the product to be monitored can therefore be extracted accurately, the printing quality is identified more accurately, and the problem of inaccurate detection results caused by manual inspection of the printing quality of packaging printed products is effectively solved.

Drawings

To illustrate the embodiments of the present invention, or the technical solutions and advantages of the prior art, more clearly, the drawings used in the description of the embodiments or of the prior art are briefly introduced below. The drawings described below show only some embodiments of the present invention; those skilled in the art can obtain other drawings from them without creative effort.

FIG. 1 is a flow chart of an artificial intelligence based package printing anomaly monitoring method in an embodiment of the present invention;

FIG. 2 is a schematic structural diagram of a color change detection apparatus according to an embodiment of the present invention;

FIG. 3 is a schematic structural diagram of a package printing anomaly monitoring network according to an embodiment of the present invention;

FIG. 4 is a schematic structural diagram of the last three attention blocks in the embodiment of the present invention;

FIG. 5 is a schematic structural diagram of the first attention block in an embodiment of the present invention.

Detailed Description

To further explain the technical means adopted by the present invention to achieve its intended objects, and their effects, the embodiments, structures, features and effects of the technical solutions of the present invention are described in detail below with reference to the accompanying drawings and preferred embodiments. In the following description, separate references to "one embodiment" or "another embodiment" do not necessarily refer to the same embodiment. Furthermore, particular features, structures or characteristics may be combined in any suitable manner in one or more embodiments.

Unless defined otherwise, all technical and scientific terms used herein have the same meaning as commonly understood by one of ordinary skill in the art to which this invention belongs.

Because the gloss of a material is not characterized by a fixed standard value, the gold stamping areas of packaging printed products from the same production line can show different reflection effects and color distributions under the same light source and the same angle, even when they all meet the production standard. The gold stamping area also changes color as the angle of the light source changes; if it appears white at a given moment and the base color of the paperboard is also white, the area is easily misidentified as background. This is even more likely in raised areas produced by the embossing process, because of diffuse reflection. Therefore, when the printing quality of a packaging printed product is identified, it is necessary to consider the color differences of each pixel point under different illumination angles, the difference between that pixel point and the corresponding pixel point in the standard image, and the information carried by the surrounding pixels, so that the identification is more accurate.

Based on the above analysis, in order to identify the printing quality of packaging printed products accurately, this embodiment provides an artificial intelligence-based method for monitoring packaging printing anomalies. The flowchart of the method is shown in FIG. 1, and the method comprises the following steps:

Step S1: acquire surface RGBD images of the packaging printed product to be monitored and of the standard packaging printed product, each under n different set illumination angles, and preprocess them to obtain 2n preprocessed surface RGBD images.

In this embodiment, the packaging printed product to be monitored is a printed paperboard. To obtain surface RGBD images of the product under different set illumination angles, this embodiment provides a color change detection device, whose structure is shown schematically in FIG. 2. The device comprises an RGBD camera 1, an industrial-grade light source 2 and a semicircular sliding track 3. The RGBD camera 1 and the semicircular sliding track 3 are both arranged above the packaging printed product transportation track (not shown in FIG. 2) and are offset from each other. The industrial-grade light source 2 is mounted on the sliding track 3 and can slide along it; while sliding, the light source 2 always points at the center of the product to be monitored placed on the transportation track, and its sliding speed is set according to actual production requirements.

When the printing quality of a packaging printed product needs to be monitored, the product is carried by the transportation track to a designated position and stops there temporarily. While the product is at the designated position, the RGBD camera 1 of the color change detection device is directly above the center of the product, and the plane of the sliding track 3 is perpendicular to the plane of the product. During this stop, the industrial-grade light source 2 slides from one end of the sliding track 3 to the other, always pointing at the center of the product, while the RGBD camera 1 photographs the product at a set sampling frequency. In this way, surface RGBD images of the product to be monitored under n different set illumination angles are obtained.

In addition, so that the printing quality of the product to be monitored can subsequently be identified, that is, so that abnormal printing points on it can be recognized, surface RGBD images under the same n set illumination angles are also acquired, by the same method, for a standard packaging printed product that comes from the same batch as the product to be monitored and has no printing quality problems. It should be noted that the color change detection device described above is only one specific means of obtaining the surface RGBD images of the product to be monitored and of the standard product under n different set illumination angles. In other embodiments, any other suitable prior-art device may be used, provided that these RGBD images can be obtained.

After the surface RGBD images of the packaging printed product to be monitored and of the standard packaging printed product under n different set illumination angles have been obtained, they are preprocessed by image editing operations such as expansion and cropping so that both dimensions of their resolution become multiples of 64, which facilitates the subsequent windowing of the images. The resolution of the preprocessed surface RGBD images is denoted H × W.
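One way to realize the expansion step is to pad each image up to the next multiple of 64 in both dimensions. The sketch below is an assumption-laden illustration: it pads on the bottom and right only, fills with an all-zero RGBD value, and uses hypothetical function names; the text does not say where or with what value the image is expanded.

```python
def padded_size(height, width, multiple=64):
    """Round both dimensions up to the next multiple of `multiple`."""
    def round_up(value):
        return -(-value // multiple) * multiple
    return round_up(height), round_up(width)


def pad_image(image, multiple=64, fill=(0, 0, 0, 0)):
    """Pad a [height][width] image of RGBD tuples on the bottom and right."""
    height, width = len(image), len(image[0])
    new_h, new_w = padded_size(height, width, multiple)
    # Extend every existing row, then append fully padded rows.
    padded = [list(row) + [fill] * (new_w - width) for row in image]
    padded += [[fill] * new_w for _ in range(new_h - height)]
    return padded
```

For example, a 100 x 64 image would be expanded to 128 x 64, while a 64 x 64 image is left unchanged in size.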

After the 2n preprocessed surface RGBD images of the packaging printed product to be monitored and of the standard packaging printed product have been obtained in step S1, this embodiment constructs a package printing anomaly monitoring network in order to identify the printing quality of the product to be monitored. The network is essentially a Shine switch-Transformer (SST) neural network based on the Swin Transformer; it takes the 2n preprocessed surface RGBD images as input and outputs an abnormal pixel binary image. Its overall structure is shown in the dashed box in FIG. 3 and comprises a windowing pyramid together with an embedding layer, an attention block layer and a normalized exponential function (softmax) classifier connected in series. The structures of the network and their operating principles are described in detail below.

Windowing pyramid:

In order to obtain deep features of each pixel at multiple scales and multiple resolutions, a multi-scale, multi-resolution blocking mechanism is constructed on the basis of the windowing pyramid and four attention blocks. In this mechanism, the windowing pyramid sets the image format corresponding to each of the four attention blocks, and the image is windowed according to the corresponding format. In general, the image is first downsampled, that is, unit merging (Patch Merging) is performed; it is then upsampled step by step, that is, unit splitting (Patch Splitting) is performed; and finally an abnormal pixel binary image is output.

Image format corresponding to attention block 4: the image is upsampled, that is, unit splitting (Patch Splitting) is performed. To this end, each unit of attention block 3 is bisected horizontally and vertically, so that one unit is split into four square third-level units, each consisting of a single pixel. Each window of attention block 3 is likewise bisected horizontally and vertically into four third-level windows. The image is then divided into 64 third-level windows, each of which still contains N third-level units, which ensures that the vector group format used in the subsequent operation of every attention block is the same.

The windowing pyramid determines the image formats corresponding to the four attention blocks on the basis of the hierarchical partitioning in Swin-Transformer and partitions the image accordingly. When the four attention blocks subsequently process the image, the attention mechanism is applied to each unit only within the window in which that unit is located, which greatly improves calculation efficiency. The hierarchical partitioning in Swin-Transformer proceeds from bottom to top, whereas the windowing pyramid in this scheme proceeds from top to bottom, which further reduces the subsequent amount of calculation and enhances real-time performance. In addition, the final unit division is accurate to a single pixel, so the neural network can ultimately achieve pixel-level recognition accuracy.
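The windowing itself can be sketched as a regular grid split. The sketch below assumes that the 1, 4, 16 and 64 windows of the four attention blocks form 1x1, 2x2, 4x4 and 8x8 grids of equal, non-overlapping windows; `split_into_windows` is a hypothetical helper name.

```python
import numpy as np

def split_into_windows(image: np.ndarray, grid: int) -> np.ndarray:
    """Split an (H, W, C) image into grid*grid equal windows, ordered left to
    right and then top to bottom. Returns (grid*grid, H//grid, W//grid, C)."""
    h, w, c = image.shape
    assert h % grid == 0 and w % grid == 0, "H and W must be divisible by grid"
    wh, ww = h // grid, w // grid
    windows = image.reshape(grid, wh, grid, ww, c).transpose(0, 2, 1, 3, 4)
    return windows.reshape(grid * grid, wh, ww, c)

merged = np.zeros((64, 128, 3), dtype=np.float32)
for block, grid in enumerate([1, 2, 4, 8], start=1):
    # attention block 1 sees one window, block 4 sees 64 windows
    print(block, split_into_windows(merged, grid).shape)
```

Because attention is computed only inside a window, the cost per block depends on the number of units per window rather than on the full image size.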

Embedding layer:

For the n preprocessed surface RGBD images corresponding to the packaging printed product to be monitored, the images are arranged in time order. Taking the i-th preprocessed surface RGBD image as an example, each pixel has four pixel components (R, G, B and depth), and the depth value of the pixel at the same position is the same in all n preprocessed surface RGBD images. An Embedding operation is carried out according to the time order, that is, the relation of the images over the time sequence is reduced to features of the pixels on the image, giving color change vectors arranged as follows:

where the vector is the color change vector corresponding to the n pixels at the same position in the n preprocessed surface RGBD images corresponding to the packaging printed product to be monitored.

For the n preprocessed surface RGBD images corresponding to the standard packaging printed product, the images are likewise arranged in time order, and the color change vector corresponding to the n pixels at the same position in these images is obtained in the same manner.

From the color change vector of the packaging printed product to be monitored and the color change vector of the standard packaging printed product at the same position, a pixel vector is constructed by splicing the two color change vectors together with an initialized classification vector [CLS]. The classification vector is obtained by random initialization; the random initialization process belongs to the prior art and is not described here. In this way, the n preprocessed surface RGBD images corresponding to the packaging printed product to be monitored are combined with the n preprocessed surface RGBD images corresponding to the standard packaging printed product, so that a pixel vector is obtained for the pixels at each same position in the 2n preprocessed surface RGBD images.

A combined image corresponding to the packaging printed product to be monitored and the standard packaging printed product is then constructed. The combined image has the same size as the 2n preprocessed surface RGBD images, and each pixel in the combined image uniquely corresponds to the pixel vector of the pixels at the same position in the 2n preprocessed surface RGBD images; this pixel vector serves as the minimum unit of the subsequent attention mechanism calculation.

The reason for combining the n preprocessed surface RGBD images corresponding to the packaging printed product to be monitored with the n preprocessed surface RGBD images corresponding to the standard packaging printed product into a single combined image is as follows: even when the glossiness meets the requirement, the color change vector corresponding to the packaging printed product to be monitored is not fixed, so the direct difference between it and the color change vector corresponding to the standard packaging printed product cannot serve as a basis in the way it would for traditional template matching.

Attention block layer:

The attention block layer comprises four attention blocks connected in sequence. As shown in fig. 4, attention block 2, attention block 3 and attention block 4 have the same neural network structure and are each divided into a front sub-block and a rear sub-block, where a dashed box represents one sub-block. As shown in fig. 5, attention block 1 is obtained from the structure shown in fig. 4 by deleting the window analysis in the front sub-block and deleting the rear sub-block. For the four attention blocks in series, namely attention block 1, attention block 2, attention block 3 and attention block 4, each block accumulates the features extracted by the preceding block, and the window and unit sizes change from block to block. Compared with the attention block in the conventional Swin Transformer, the adaptive multi-head attention mechanism in the four attention blocks is the core of this scheme: the number of heads corresponding to each window image is determined adaptively. Apart from the window analysis, the other structures are exactly the same as in the conventional Swin Transformer. In the process of constructing the tensor matrix, LayerNorm is mainly performed; the skip addition adds the data that has not passed through the attention mechanism to the data that has, thereby realizing residual connection. Both belong to the known technology. The window analysis and the adaptive multi-head attention mechanism are described in detail below.

Window analysis:

For attention block 1, no window analysis is required, because its image format consists of a single window that contains all the features of the packaging printed product. For attention block 2, attention block 3 and attention block 4, the image formats contain multiple windows, that is, the input images of these blocks are divided into multiple windows. Some windows contain only the background area of the packaging printed product, with no complex printing process. Local feature analysis is therefore performed on each window in order to reduce the amount of calculation spent on windows with uncomplicated content. The specific implementation is as follows:

First, the plate-making files (for example hot stamping, UV and embossing files) of the packaging printed products in the same batch as the packaging printed product to be monitored are obtained. There are m plate-making files, that is, m processes are applied to the packaging printed product to be monitored. The m plate-making files are black-and-white binary images in which the foreground is black with a pixel value of 1 and the background is white with a pixel value of 0, and their length and width are the same as those of the preprocessed surface RGBD images.

In any one of the attention block 2, the attention block 3, and the attention block 4, the following three indexes are analyzed for each window image:

(1) Illumination sensitivity

Randomly extract p pixels in the window image (this embodiment sets p = 4), compute the average vector of the pixel vectors corresponding to the p pixels, and search for the minimum RGB value and the maximum RGB value in the average vector. The minimum RGB value is recorded as $(R_{\min}, G_{\min}, B_{\min})$ and the maximum RGB value as $(R_{\max}, G_{\max}, B_{\max})$. The Euclidean distance L between the minimum RGB value and the maximum RGB value is then determined by:

$$L = \sqrt{(R_{\max} - R_{\min})^2 + (G_{\max} - G_{\min})^2 + (B_{\max} - B_{\min})^2}$$

After the Euclidean distance L between the minimum RGB value and the maximum RGB value is calculated, L is taken as the illumination sensitivity of the window image. Since many printing materials show different colors in the image as the illumination angle changes, the maximum difference of the RGB values over time is used to measure how strongly illumination changes the pixels in the window image. The smaller L is, the less a change in illumination angle alters the RGB values of the pixels in the window image, and the less attention the window subsequently needs; the larger L is, the more a change in illumination angle alters the RGB values of the pixels in the window image, and the more attention the window subsequently needs.
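A minimal sketch of the illumination sensitivity follows. It assumes the window is given as an array of RGB values per pixel and per illumination step, and that the "minimum" and "maximum" RGB values are taken channel by channel over the n steps of the averaged vector; both the input layout and that interpretation are assumptions, as is the function name.

```python
import numpy as np

def illumination_sensitivity(window_rgb: np.ndarray, p: int = 4, seed: int = 0) -> float:
    """window_rgb: (h, w, n, 3) RGB values of one window over n illumination
    steps (assumed layout). Returns the Euclidean distance between the
    per-channel minimum and maximum of the averaged RGB sequence."""
    rng = np.random.default_rng(seed)
    h, w, n, _ = window_rgb.shape
    idx = rng.choice(h * w, size=p, replace=False)   # p random pixels
    samples = window_rgb.reshape(h * w, n, 3)[idx]   # (p, n, 3)
    mean_seq = samples.mean(axis=0)                  # average vector, (n, 3)
    rgb_min = mean_seq.min(axis=0)                   # assumed: per-channel minimum
    rgb_max = mean_seq.max(axis=0)                   # assumed: per-channel maximum
    return float(np.linalg.norm(rgb_max - rgb_min))

flat = np.full((8, 8, 3, 3), 0.5)          # colour does not change with the angle
print(illumination_sensitivity(flat))      # 0.0
```

A window whose colour is identical under every illumination angle gets L = 0 and therefore contributes nothing to the head count through this index.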

(2) Complexity of the process

According to the binary images corresponding to the m plate-making files, the part of each binary image that belongs to the window image is segmented, and the proportion of the foreground pixels of each plate-making file within the window image to all pixels of the window image is calculated. Plate-making files whose proportion is smaller than a set ratio threshold (set in this embodiment) are discarded, and the number of remaining plate-making files is the process complexity corresponding to the window image. The process complexity measures the number of process types superimposed in the window image; discarding processes with a small area proportion reduces the subsequent amount of calculation.
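The process complexity count can be sketched as follows. The threshold value used in the embodiment is not available here, so the default `ratio_threshold=0.05` is purely a placeholder, and `process_complexity` is a hypothetical name.

```python
import numpy as np

def process_complexity(plate_windows: np.ndarray, ratio_threshold: float = 0.05) -> int:
    """plate_windows: (m, h, w) binary crops of the m plate-making files for one
    window, with 1 = foreground. Counts the files whose foreground ratio is not
    below the threshold (threshold value is a placeholder assumption)."""
    m = plate_windows.shape[0]
    ratios = plate_windows.reshape(m, -1).mean(axis=1)  # foreground share per file
    return int(np.sum(ratios >= ratio_threshold))

plates = np.zeros((3, 10, 10), dtype=np.uint8)
plates[1, 0, 0] = 1      # 1 % foreground: discarded at a 5 % threshold
plates[2, :5, :] = 1     # 50 % foreground: kept
print(process_complexity(plates))  # 1
```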

(3) Texture complexity

For n preprocessed surface RGBD images corresponding to packaged and printed products to be monitored, selecting the preprocessed surface RGBD image at the middle moment as a target surface RGBD sub-image, separating a depth value and an RGB value in the target surface RGBD sub-image to obtain a depth map and a color map, and converting the color map into a gray map by using a weighted average method based on human eye cognition.

The entropy of the depth co-occurrence matrix of the depth map is obtained using known techniques, and the entropy of the gray-level co-occurrence matrix of the gray map is obtained in the same way. Entropy is a measure of randomness: it is largest when all values in the co-occurrence matrix are almost equal, that is, when the elements of the co-occurrence matrix are dispersed and exhibit maximum randomness. Entropy therefore characterizes the degree of non-uniformity or complexity of the texture in an image; the greater the entropy, the more non-uniform or complex the texture. The sum of the entropy of the depth co-occurrence matrix and the entropy of the gray-level co-occurrence matrix is calculated, and this sum is taken as the texture complexity corresponding to the window image.
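As a rough illustration of the texture complexity, the sketch below builds a co-occurrence matrix for horizontally adjacent pixels and sums the two entropies. The quantization to 16 levels, the single horizontal offset, the base-2 logarithm and the luminance weights 0.299/0.587/0.114 are all assumptions chosen for the example; the embodiment only refers to known techniques.

```python
import numpy as np

def cooccurrence_entropy(img: np.ndarray, levels: int = 16) -> float:
    """Entropy (in bits) of the co-occurrence matrix of a 2D array for the
    horizontal neighbour offset, after quantizing to `levels` bins."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:
        q = np.zeros(img.shape, dtype=np.int64)
    else:
        q = np.minimum(((img - lo) / (hi - lo) * levels).astype(np.int64), levels - 1)
    left, right = q[:, :-1].ravel(), q[:, 1:].ravel()
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (left, right), 1)        # count neighbouring level pairs
    prob = glcm / glcm.sum()
    nonzero = prob[prob > 0]
    return float(-(nonzero * np.log2(nonzero)).sum())

def texture_complexity(rgbd_window: np.ndarray) -> float:
    """rgbd_window: (h, w, 4) crop of the target surface RGBD image."""
    rgb, depth = rgbd_window[..., :3], rgbd_window[..., 3]
    gray = rgb @ np.array([0.299, 0.587, 0.114])  # assumed luminance weights
    return cooccurrence_entropy(depth) + cooccurrence_entropy(gray)

print(texture_complexity(np.ones((16, 16, 4))))  # 0.0 for a uniform window
```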

Constructing a tensor matrix:

For any one of attention block 1, attention block 2, attention block 3 and attention block 4, when a window image is processed, the pixel vectors of all pixels in each unit of the window image are spliced in order from left to right and then from top to bottom to obtain the feature tensor of that unit. The feature tensor of each unit, originally arranged horizontally, is transposed, and the transposed feature tensors of the units are then spliced horizontally in order from left to right and then from top to bottom to obtain the tensor matrix, which is the unit on which attention operates.

It should be noted that the above description only briefly describes the process of constructing the tensor matrix, and since the specific implementation process of constructing the tensor matrix is exactly the same as the specific implementation process of constructing the tensor matrix in the Swin Transformer in the prior art, detailed description is not given here.

Self-adaptive multi-head attention mechanism:

The general purpose of the attention mechanism is as follows. For each unit of each window image, the neighborhood of the unit consists of all units in the window image in which it is located; that is, every unit in that window image (including the unit itself) is a neighbor of the unit. The cosine similarity between the feature tensor of the unit and the feature tensor of each neighbor in its neighborhood is calculated to obtain weights, and a weighted summation is then carried out to update the original feature tensor, that is, to update each pixel vector in the unit. The updated classification vector [CLS] in each pixel vector records the local information of the window in which the unit is located. In short, the output of a pixel vector after the attention mechanism is an updated pixel vector that perceives the information in the window image.
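The similarity-weighted update described above can be sketched for one window as follows. The softmax normalization of the similarities into weights is an assumption made for the example, and `window_attention_update` is a hypothetical name; a trained network would also apply learned projections first.

```python
import numpy as np

def window_attention_update(units: np.ndarray) -> np.ndarray:
    """units: (N, d) feature tensors of the N units in one window.
    Each unit is replaced by a weighted sum over all units of the window,
    weighted by softmax-normalized cosine similarity (assumed normalization)."""
    norms = np.linalg.norm(units, axis=1, keepdims=True)
    directions = units / np.maximum(norms, 1e-12)
    similarity = directions @ directions.T                 # (N, N) cosine similarity
    weights = np.exp(similarity)
    weights /= weights.sum(axis=1, keepdims=True)          # each row sums to 1
    return weights @ units

window_units = np.array([[1.0, 0.0], [0.0, 1.0]])
print(window_attention_update(window_units))
```

Each updated unit is a mixture of every unit in its window, which is how a pixel vector comes to carry window-level information.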

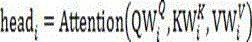

Multi-head means the following: before the attention mechanism is applied, the tensor matrix is copied as Q, K and V; then h groups of dimensionality reduction matrices are multiplied with Q, K and V respectively, and the products are used as the input of the attention function, thereby obtaining h groups of outputs:

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^Q,\ KW_i^K,\ VW_i^V), \quad i = 1, \dots, h$$

where $\mathrm{head}_i$ is the output of the i-th group of the attention function, and $W_i^Q$, $W_i^K$ and $W_i^V$ are the i-th group of dimensionality reduction matrices.

After the h groups of outputs are obtained, they are spliced using the Concat splicing operation, and the spliced result is restored using the dimension-raising matrix $W^O$, thereby obtaining the matrix produced by multi-head splicing:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^O$$
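The multi-head procedure can be sketched in NumPy as below. It assumes the standard Transformer scaled dot-product attention inside each head and uses random matrices in place of trained dimensionality reduction and dimension-raising matrices; the function names and the requirement that the feature dimension be divisible by h are assumptions of this sketch.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x: np.ndarray, h: int, seed: int = 0) -> np.ndarray:
    """x: (N, d) tensor matrix of one window; h: number of heads.
    Random matrices stand in for the trained projection matrices."""
    n, d = x.shape
    assert d % h == 0, "this sketch requires d to be divisible by h"
    d_k = d // h
    rng = np.random.default_rng(seed)
    q = k = v = x                                   # the tensor matrix copied as Q, K, V
    heads = []
    for _ in range(h):
        # one group of dimensionality reduction matrices, d -> d_k
        w_q, w_k, w_v = (rng.standard_normal((d, d_k)) / np.sqrt(d) for _ in range(3))
        qi, ki, vi = q @ w_q, k @ w_k, v @ w_v
        heads.append(softmax(qi @ ki.T / np.sqrt(d_k)) @ vi)   # (N, d_k)
    w_o = rng.standard_normal((h * d_k, d)) / np.sqrt(d)       # dimension-raising matrix
    return np.concatenate(heads, axis=1) @ w_o                 # Concat, then restore to d

tensor_matrix = np.random.default_rng(1).standard_normal((6, 8))
print(multi_head_attention(tensor_matrix, h=4).shape)  # (6, 8)
```

The output keeps the input shape for any h, which is what allows the head count to vary from window to window.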

It should be noted that the implementation of the adaptive multi-head attention mechanism of the attention block is basically the same as that of the multi-head attention mechanism of the attention block in the prior-art Swin Transformer. The difference is that in the Swin Transformer the number of heads, that is, the number of groups h of dimensionality reduction matrices, is a preset fixed value, whereas in the adaptive multi-head attention mechanism described above the number of heads h is determined by the result of the window analysis, and the corresponding calculation formula is as follows:

where h is the number of heads corresponding to each window image; the formula further involves the minimum number of heads, whose value is set in this embodiment; the illumination sensitivity, the process complexity and the texture complexity corresponding to each window image; the weights of the illumination sensitivity, the process complexity and the texture complexity, whose values are set in this embodiment; and a rounding function.

The adaptive multi-head attention mechanism is equivalent to feature extraction in multiple directions. Generally, the more heads there are, the more complete the feature extraction, but too many heads increase the amount of calculation. The method therefore selects the number of heads adaptively according to the characteristics of each window image, which effectively reduces the amount of calculation while ensuring the accuracy of feature extraction.
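The exact head-count formula is not reproduced in this text, so the following is only a sketch of one plausible form: a minimum head count plus a rounded weighted sum of the three window indexes. The functional form, the default weights and `h_min=1` are all assumptions for illustration.

```python
import math

def adaptive_head_count(light: float, process: float, texture: float,
                        weights=(1.0, 1.0, 1.0), h_min: int = 1) -> int:
    """Hypothetical rule: h = h_min + round(w1*light + w2*process + w3*texture).
    The real formula, weights and minimum are set by the embodiment."""
    score = weights[0] * light + weights[1] * process + weights[2] * texture
    return h_min + math.floor(score + 0.5)   # round half up

print(adaptive_head_count(0.0, 0, 0.0))   # background-only window: 1
print(adaptive_head_count(2.4, 1, 0.2))   # more complex window: 5
```

Under any rule of this kind, background windows fall back to the minimum head count, while windows with strong illumination response, several overlapping processes or rich texture receive more heads.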

Sliding window attention mechanism:

The sliding window attention mechanism changes the perception domain of each unit in each window image so that global information is perceived, and the window analysis is applied uniformly to the sliding windows, which further reduces the amount of calculation. Since the specific implementation of the sliding window attention mechanism in the attention block is exactly the same as that of the sliding window attention mechanism in the attention block of the prior-art Swin Transformer, it is not described in detail here.

Multilayer perceptron MLP:

Since the specific working process of the multilayer perceptron MLP in the attention block is exactly the same as that of the multilayer perceptron MLP in the attention block of the prior-art Swin Transformer, it is not described in detail here.

Normalized exponential function classifier:

The input of the normalized exponential function classifier is the merged image output by attention block 4. According to the classification vector [CLS] of the pixel vector corresponding to each pixel in the merged image, the classifier outputs the abnormal pixel binary image corresponding to the merged image. In the abnormal pixel binary image, a pixel with a pixel value of 0 is an abnormal point and a pixel with a pixel value of 1 is a normal point.

Step S2: The 2n preprocessed surface RGBD images are input into the package printing anomaly monitoring network. The embedding layer of the network processes the 2n preprocessed surface RGBD images, determines the combined image corresponding to the packaging printed product to be monitored and the standard packaging printed product, and sends the combined image to the attention block layer of the network.

According to the above description of the structures and the working principle of the structures in the package printing anomaly monitoring network, it can be seen that, after the 2n preprocessed surface RGBD images obtained in step S1 are input into the package printing anomaly monitoring network, the embedded layer of the package printing anomaly monitoring network determines corresponding combined images according to the 2n preprocessed surface RGBD images, and the implementation process is as follows:

Step S21: determine each pixel component of each pixel in the n preprocessed surface RGBD images corresponding to the packaging printed product to be monitored.

Step S22: respectively splicing the pixel components of n pixel points at the same position in n preprocessed surface RGBD images corresponding to the packaged and printed product to be monitored, and correspondingly obtaining the color variation vector corresponding to the n pixel points at the same position.

Step S23: and determining each pixel component of each pixel point in the n preprocessed surface RGBD images corresponding to the standard packaging printed product according to the n preprocessed surface RGBD images corresponding to the standard packaging printed product.

Step S24: respectively splicing the pixel components of n pixel points at the same position in n preprocessed surface RGBD images corresponding to a standard packaging printing product, and correspondingly obtaining the color variation vector corresponding to the n pixel points at the same position.

Step S25: splicing the color variation vectors corresponding to the n pixel points at the same position in the n preprocessed surface RGBD images corresponding to the packaged printed product to be monitored, the color variation vectors corresponding to the n pixel points at the same position in the n preprocessed surface RGBD images corresponding to the standard packaged printed product, and the initialized classification vectors corresponding to the same position to correspondingly obtain the pixel vectors corresponding to the pixel points at the same position in the 2n preprocessed surface RGBD images.

Step S26: and constructing a combined image corresponding to the packaging printed product to be monitored and the standard packaging printed product, wherein the pixel value of each pixel point in the combined image is a pixel vector corresponding to the pixel point at the same position in the 2n preprocessed surface RGBD images.

The specific process of determining the corresponding merged image in steps S21-S26 has been described in detail in the working principle of the embedding layer of the package printing anomaly monitoring network and is not described again here. After the embedding layer obtains the merged image, it sends the merged image to the attention block layer of the package printing anomaly monitoring network.
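Steps S21 to S26 can be sketched as array operations. The sketch assumes that the color change vector is the plain concatenation of the four pixel components of the n time-ordered images, and that the classification vector has a hypothetical length `cls_dim`; the exact vector layout used in the embodiment may differ.

```python
import numpy as np

def color_change_vectors(images: np.ndarray) -> np.ndarray:
    """images: (n, H, W, 4) time-ordered RGBD stack. Returns (H, W, 4n): for
    each position, the spliced pixel components of the n images (S21-S24)."""
    n, h, w, c = images.shape
    return images.transpose(1, 2, 0, 3).reshape(h, w, n * c)

def build_merged_image(monitored: np.ndarray, standard: np.ndarray,
                       cls_dim: int = 2, seed: int = 0) -> np.ndarray:
    """Splice both color change vectors with a randomly initialized
    classification vector per pixel (S25) to form the merged image (S26).
    cls_dim is an assumed size, not taken from the embodiment."""
    rng = np.random.default_rng(seed)
    cv_monitored = color_change_vectors(monitored)
    cv_standard = color_change_vectors(standard)
    h, w = cv_monitored.shape[:2]
    cls = rng.standard_normal((h, w, cls_dim))
    return np.concatenate([cv_monitored, cv_standard, cls], axis=-1)

n = 3
monitored = np.random.default_rng(1).random((n, 64, 64, 4))
standard = np.random.default_rng(2).random((n, 64, 64, 4))
print(build_merged_image(monitored, standard).shape)  # (64, 64, 26)
```

Every pixel of the merged image then carries a vector of length 8n + `cls_dim`, which is the minimum unit handed to the attention blocks.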

Step S3: the attention block layer of the package printing abnormity monitoring network comprises all attention blocks which are connected in sequence, each attention block forwards a combined image sent by an embedded layer or a previous attention block to a windowing pyramid of the package printing abnormity monitoring network, the windowing pyramid carries out windowing on the combined image according to an image format corresponding to the corresponding attention block to obtain each window image, and each window image is sent to the corresponding attention block; each attention block carries out data processing on each received window image to obtain a combined image after data processing, the combined image after data processing is sent to the next attention block, and the combined image after data processing is sent to the classifier of the package printing abnormity monitoring network by the last attention block.

As can be seen from the specific structure of the attention block layer in the package printing abnormality monitoring network, in this embodiment, the attention block layer sequentially connects four attention blocks, namely, an attention block 1, an attention block 2, an attention block 3, and an attention block 4, where the attention block 1 receives the merged image sent by the embedded layer and sends the merged image to the windowing pyramid of the package printing abnormality monitoring network, and the windowing pyramid performs windowing on the merged image according to the image format corresponding to the attention block 1 to obtain each window image, and sends each window image to the attention block 1. The attention block 1 processes the received window images to obtain a processed combined image, and sends the processed combined image to the attention block 2. The attention block 2 receives the combined image sent by the attention block 1, and sends the combined image to a windowing pyramid of a package printing anomaly monitoring network, the windowing pyramid performs windowing processing on the combined image according to an image format corresponding to the attention block 2 to obtain each window image, and each window image is sent to the attention block 2. The attention block 2 processes the received window images to obtain a processed combined image, and sends the processed combined image to the attention block 3. And the attention block 3 and the attention block 4 operate in the same manner in sequence, finally, the attention block 4 obtains a final processed combined image, and the final processed combined image is sent to the classifier of the package printing abnormity monitoring network.

According to the image formats that the windowing pyramid of the package printing anomaly monitoring network sets for the attention blocks in the attention block layer, the attention block layer comprises four attention blocks connected in sequence, and the image formats corresponding to the four attention blocks are as follows: the number of windows corresponding to the first attention block is 1, the number of windows corresponding to the second attention block is 4, the number of windows corresponding to the third attention block is 16, and the number of windows corresponding to the fourth attention block is 64. The number of units in each window is the same for all four attention blocks, namely N, and the corresponding calculation formula is:

$$N = \frac{H \times W}{64}$$

where N is the number of units in each window corresponding to the four attention blocks, and H and W are the height and width of the resolution of the combined image.
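The four image formats can be tabulated with a short sketch. It assumes that N equals the pixel count divided by 64, which follows from attention block 4 having 64 windows of single-pixel units, and that the unit side lengths are therefore 8, 4, 2 and 1 pixels; these unit sizes are derived for illustration and are not stated explicitly in the text.

```python
def block_formats(height: int, width: int):
    """Return N and the assumed window/unit layout of the four attention
    blocks for an image whose height and width are multiples of 64."""
    n_units = height * width // 64
    formats = []
    for block in range(1, 5):
        grid = 2 ** (block - 1)            # windows per side: 1, 2, 4, 8
        unit_side = 8 // grid              # assumed unit side: 8, 4, 2, 1 pixels
        win_h, win_w = height // grid, width // grid
        formats.append({
            "block": block,
            "windows": grid * grid,        # 1, 4, 16, 64
            "unit_side": unit_side,
            "units_per_window": (win_h // unit_side) * (win_w // unit_side),
        })
    return n_units, formats

n_units, formats = block_formats(128, 192)
print(n_units)                                       # 384
print([f["units_per_window"] for f in formats])      # [384, 384, 384, 384]
```

The constant units-per-window count is what keeps the tensor matrix of every window the same size across all four blocks.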

For each attention block in an attention block layer in a package printing anomaly monitoring network, the attention block processes each window image received by the attention block, and the processing comprises the following steps:

Step S31: determine the illumination sensitivity corresponding to each window image according to the pixel value of each pixel in the window image. The implementation steps include:

Step S311: for each window image, calculate the average of the pixel values of a set number of randomly selected pixels, thereby obtaining the average pixel value corresponding to the window image.

Step S312: determine the minimum RGB value and the maximum RGB value in the average pixel value corresponding to each window image.

Step S313: calculate the Euclidean distance between the minimum RGB value and the maximum RGB value corresponding to each window image, thereby obtaining the illumination sensitivity corresponding to the window image.

Step S32: acquiring binary images corresponding to m platemaking files corresponding to the packaging printing product to be monitored, and determining binary sub-images corresponding to the window images in the binary images corresponding to the m platemaking files.

Step S33: determining the process complexity corresponding to each window image according to the number of foreground pixel points of each window image in the binary sub-image corresponding to the binary image corresponding to the m platemaking files, wherein the implementation steps comprise:

Step S331: for each window image, determine, in each of the binary sub-images corresponding to the m plate-making files, the ratio of the number of foreground pixels to the number of all pixels in that binary sub-image.

Step S332: for each window image, count the ratios that are greater than the set ratio threshold; this count is the process complexity corresponding to the window image.

Step S34: and acquiring target surface RGBD images in the n preprocessed surface RGBD images corresponding to the standard packaging printing product, and determining corresponding target surface RGBD sub-images of the window images in the target surface RGBD images by combining the window images.

Step S35: determining the texture complexity corresponding to each window image according to the target surface RGBD sub-image corresponding to each window image in the target surface RGBD image, wherein the implementation steps comprise:

step S351: and determining a depth map and a color map corresponding to the RGBD sub-image of the target surface according to the RGBD sub-image of the target surface corresponding to each window image in the RGBD image of the target surface, and further determining a gray scale map corresponding to the color map.

Step S352: and determining a depth co-occurrence matrix corresponding to the depth map and a gray level co-occurrence matrix corresponding to the gray level map according to the depth map and the gray level map corresponding to each window image, and calculating an entropy value of the depth co-occurrence matrix and an entropy value of the gray level co-occurrence matrix.

Step S353: and respectively calculating the sum of the entropy value of the depth co-occurrence matrix and the entropy value of the gray level co-occurrence matrix corresponding to each window image, thereby correspondingly obtaining the texture complexity corresponding to each window image.

Step S36: determining the number of multiple heads corresponding to each window image according to the illumination sensitivity, the process complexity and the texture complexity corresponding to each window image, wherein the corresponding calculation formula is as follows:

where the formula involves the number of heads corresponding to each window image; the minimum number of heads; the illumination sensitivity, the process complexity and the texture complexity corresponding to each window image; the weights of the illumination sensitivity, the process complexity and the texture complexity; and a rounding function.

Step S37: and carrying out data processing on each window image according to the number of the multiple heads corresponding to each window image by each attention block.

The specific processes by which each attention block determines the number of heads corresponding to each window image in steps S31-S37 and processes the window image according to that number have been described in detail in the working principles of the window analysis, tensor matrix construction, adaptive multi-head attention mechanism and other modules of the package printing anomaly monitoring network, and are not described again here.

Step S4: and the classifier of the package printing abnormity monitoring network receives the merged image after data processing sent by the last attention block, and determines an abnormal pixel binary image of a package printing product to be monitored according to the merged image after data processing.

The specific process by which the classifier of the packaging printing anomaly monitoring network determines the abnormal pixel binary image has been described in detail in the working principle of the normalized exponential function (softmax) classifier of the network and is not repeated here.

Step S5: determining the surface abnormal points of the packaging printed product to be monitored according to the abnormal pixel binary image of the packaging printed product to be monitored.

Specifically, in the abnormal pixel binary image corresponding to the merged image, the pixel points with a pixel value of 0 are taken as the surface abnormal points of the packaging printed product to be monitored, and the pixel points with a pixel value of 1 are taken as the surface normal points, so that the printing quality of the packaging printed product to be monitored is finally monitored.
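The rule of step S5 can be sketched in a few lines of Python. The representation of the binary image as a nested list of 0 and 1 values and the name `surface_abnormal_points` are assumptions for illustration.

```python
def surface_abnormal_points(binary_image):
    """Return (row, column) positions whose pixel value is 0 (abnormal).

    Pixels with value 1 are treated as normal, as stated in step S5.
    """
    return [
        (r, c)
        for r, row in enumerate(binary_image)
        for c, value in enumerate(row)
        if value == 0
    ]
```

For example, calling the function on `[[1, 0], [1, 1]]` reports the single abnormal point at row 0, column 1.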

It should be noted that, in the above artificial intelligence-based packaging printing anomaly monitoring method, the packaging printing anomaly monitoring network is trained in advance, and the corresponding training process includes:

Packaging printed products from the same batch as the packaging printed product to be monitored are selected. In the manner of step S1, a color change detection device is used to acquire a large number of surface RGBD images of packaging printed products with abnormal points under n different set illumination angles, a large number of surface RGBD images of packaging printed products without abnormal points under n different set illumination angles, and surface RGBD images of the standard packaging printed product under n different set illumination angles. The surface RGBD images under the n different set illumination angles corresponding to one packaging printed product, with or without abnormal points, form one image data sample. A data enhancement technique is used to increase the number of image data samples corresponding to packaging printed products with abnormal points, so that the identification accuracy can adapt to various conditions. All of the above image data samples together constitute an image data set.

In the image data set, 80% of the image data samples are randomly selected as a training set and the remaining 20% are used as a test set. Combined with the surface RGBD images of the standard packaging printed product under n different set illumination angles, and after the corresponding image preprocessing, the packaging printing anomaly monitoring network is trained with a cross-entropy loss function and the Adam optimizer, finally yielding a packaging printing anomaly monitoring network capable of accurately monitoring the abnormal pixel points of packaging printed products. Since the specific training process of the packaging printing anomaly monitoring network is common knowledge to those skilled in the art, it is not described in detail here.
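The random 80%/20% division of the image data set can be sketched as follows. The fixed seed, the treatment of a sample as an opaque list element and the name `split_samples` are assumptions for illustration; the training itself (cross-entropy loss, Adam) is not shown.

```python
import random


def split_samples(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples and split them into a training and a test set.

    The seed only makes this sketch reproducible; it is not part of the method.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]
```

Each image data sample lands in exactly one of the two sets, so the test set contains no sample seen during training.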

After the training of the packaging printing anomaly monitoring network is completed, the 2n preprocessed surface RGBD images obtained in step S1, corresponding to the packaging printed product to be monitored and the standard packaging printed product, are input into the network, and the network outputs an abnormal pixel binary image, so that an expert can quickly locate the printing anomaly, which facilitates subsequent processing.

In this method, surface RGBD images of the packaging printed product to be monitored and of the standard packaging printed product are acquired under different set illumination angles and preprocessed; the preprocessed images are input into a pre-constructed packaging printing anomaly monitoring network; the embedding layer of the network constructs the corresponding merged image; the attention block layer and the windowing pyramid of the network process the merged image to obtain an abnormal pixel binary image; and the surface abnormal points of the packaging printed product to be monitored are finally obtained. First, when the merged image is constructed, both the color difference of the same point of the packaging printed product to be monitored under different illumination angles and the difference between the surface RGBD images of the packaging printed product to be monitored and those of the standard packaging printed product under the different set illumination angles are taken into account, so that the features of each point of the packaging printed product to be monitored can be extracted accurately and identification is more accurate, which effectively solves the problem of inaccurate detection results caused by manual printing quality detection of packaging printed products. Secondly, the windowing pyramid of the packaging printing anomaly monitoring network divides the merged image into blocks from top to bottom, which further reduces the subsequent amount of calculation and improves real-time performance. Thirdly, each attention block in the packaging printing anomaly monitoring network adaptively determines the number of attention heads corresponding to each window image by performing feature analysis on that window image, so that the amount of calculation of the network is effectively reduced while the accuracy of feature extraction is ensured.

It should be noted that: the above-mentioned embodiments are only used for illustrating the technical solutions of the present application, and not for limiting the same; although the present application has been described in detail with reference to the foregoing embodiments, it should be understood by those of ordinary skill in the art that: the technical solutions described in the foregoing embodiments may still be modified, or some technical features may be equivalently replaced; such modifications and substitutions do not substantially depart from the spirit and scope of the embodiments of the present application and are intended to be included within the scope of the present application.

Claims (10)

1. An artificial intelligence-based packaging printing anomaly monitoring method, characterized by comprising the following steps:

acquiring surface RGBD images of a packaging printed product to be monitored and of a standard packaging printed product under n different set illumination angles respectively, and further obtaining 2n preprocessed surface RGBD images;

inputting the 2n preprocessed surface RGBD images into a packaging printing anomaly monitoring network, wherein an embedding layer of the packaging printing anomaly monitoring network performs data identification according to the 2n preprocessed surface RGBD images, determines a merged image corresponding to the packaging printed product to be monitored and the standard packaging printed product, and sends the merged image to an attention block layer of the packaging printing anomaly monitoring network;

the attention block layer of the packaging printing anomaly monitoring network comprises attention blocks connected in sequence; each attention block forwards the merged image sent by the embedding layer or by the previous attention block to a windowing pyramid of the packaging printing anomaly monitoring network; the windowing pyramid windows the merged image according to the image format corresponding to that attention block to obtain the window images, and sends each window image to that attention block; each attention block performs data processing on each received window image to obtain a processed merged image and sends the processed merged image to the next attention block, and the last attention block sends the processed merged image to the classifier of the packaging printing anomaly monitoring network;

the classifier of the packaging printing anomaly monitoring network receives the processed merged image sent by the last attention block, and determines an abnormal pixel binary image of the packaging printed product to be monitored according to the processed merged image;

and determining the surface abnormal points of the packaging printed product to be monitored according to the abnormal pixel binary image of the packaging printed product to be monitored.

2. The artificial intelligence based packaging printing anomaly monitoring method according to claim 1, wherein the step of determining a merged image corresponding to the packaging printed product to be monitored and the standard packaging printed product comprises:

determining each pixel component of each pixel point in the n preprocessed surface RGBD images corresponding to the packaged printed product to be monitored according to the n preprocessed surface RGBD images corresponding to the packaged printed product to be monitored;

respectively splicing pixel components of n pixel points at the same position in n preprocessed surface RGBD images corresponding to a packaging printing product to be monitored, and correspondingly obtaining color variation vectors corresponding to the n pixel points at the same position;

determining each pixel component of each pixel point in the n preprocessed surface RGBD images corresponding to the standard packaging printed product according to the n preprocessed surface RGBD images corresponding to the standard packaging printed product;

respectively splicing pixel components of n pixel points at the same position in n preprocessed surface RGBD images corresponding to a standard packaging printing product, and correspondingly obtaining color variation vectors corresponding to the n pixel points at the same position;

splicing color variation vectors corresponding to n pixel points at the same position in n preprocessed surface RGBD images corresponding to the packaged printed product to be monitored, color variation vectors corresponding to n pixel points at the same position in n preprocessed surface RGBD images corresponding to the standard packaged printed product, and initialized classification vectors corresponding to the same position to correspondingly obtain pixel vectors corresponding to pixel points at the same position in 2n preprocessed surface RGBD images;

and constructing a merged image corresponding to the packaging printed product to be monitored and the standard packaging printed product, wherein the pixel value of each pixel point in the merged image is the pixel vector corresponding to the pixel points at the same position in the 2n preprocessed surface RGBD images.
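The construction of the merged image described above can be sketched in Python as follows. Each image is assumed to be a nested list of (R, G, B, D) tuples of identical size; the length and initial value of the classification vector (`class_init`) and the function names are assumptions for illustration, since the text does not specify them.

```python
def color_variation_vector(images, r, c):
    """Splice the pixel components at position (r, c) across the n images."""
    vector = []
    for image in images:
        vector.extend(image[r][c])
    return vector


def build_merged_image(monitored, standard, class_init=(0.0, 0.0)):
    """Build the merged image from n monitored and n standard RGBD images.

    Each pixel vector is the monitored color variation vector, followed by
    the standard color variation vector, followed by an initialized
    classification vector (its size and values are assumed here).
    """
    rows, cols = len(monitored[0]), len(monitored[0][0])
    return [
        [
            color_variation_vector(monitored, r, c)
            + color_variation_vector(standard, r, c)
            + list(class_init)
            for c in range(cols)
        ]
        for r in range(rows)
    ]
```

With n images per product and four components per pixel, each pixel vector in this sketch has 8n entries plus the length of the classification vector.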

3. The artificial intelligence-based packaging printing anomaly monitoring method according to claim 2, wherein the attention block layer of the packaging printing anomaly monitoring network comprises four attention blocks connected in sequence, and the image formats corresponding to the four attention blocks are as follows: the number of windows corresponding to the first attention block is 1, the number of windows corresponding to the second attention block is 4, the number of windows corresponding to the third attention block is 16, the number of windows corresponding to the fourth attention block is 64, and the number of cells in each window is the same for all four attention blocks.
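The window counts 1, 4, 16 and 64 can be illustrated with the following Python sketch, which assumes that each attention block uses a square grid of 1x1, 2x2, 4x4 and 8x8 windows and that the image dimensions are divisible by the grid size. The grid layout, the tuple `WINDOW_GRIDS` and the name `split_into_windows` are assumptions; the claim only states the number of windows.

```python
# Assumed grid sizes giving 1, 4, 16 and 64 windows for the four attention blocks.
WINDOW_GRIDS = (1, 2, 4, 8)


def split_into_windows(image, grid):
    """Split a 2-D list into grid x grid equally sized windows, row by row."""
    rows, cols = len(image), len(image[0])
    if rows % grid or cols % grid:
        raise ValueError("image size must be divisible by the grid size")
    win_h, win_w = rows // grid, cols // grid
    windows = []
    for gr in range(grid):
        for gc in range(grid):
            windows.append(
                [
                    row[gc * win_w:(gc + 1) * win_w]
                    for row in image[gr * win_h:(gr + 1) * win_h]
                ]
            )
    return windows
```

Applying `split_into_windows` with each value in `WINDOW_GRIDS` to the same merged image gives progressively smaller windows, from the whole image down to 64 windows.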

4. The artificial intelligence-based packaging printing anomaly monitoring method according to claim 3, wherein the calculation formula corresponding to the number of cells in each window corresponding to the four attention blocks is as follows:

5. The artificial intelligence-based packaging printing anomaly monitoring method according to claim 3, wherein the data processing performed by each attention block on each window image it receives comprises:

determining the illumination sensitivity corresponding to each window image according to the pixel value of each pixel point in each window image;

acquiring binary images corresponding to m platemaking files corresponding to a packaging printing product to be monitored, and determining binary sub-images corresponding to each window image in the binary images corresponding to the m platemaking files;

determining the process complexity corresponding to each window image according to the number of foreground pixel points in the binary sub-images corresponding to that window image in the binary images corresponding to the m plate-making files;

acquiring target surface RGBD images in n preprocessed surface RGBD images corresponding to a standard packaging printing product, and determining corresponding target surface RGBD sub-images of the window images in the target surface RGBD images by combining the window images;

determining the texture complexity corresponding to each window image according to the corresponding target surface RGBD sub-image of each window image in the target surface RGBD image;