CN113613024B - Video preprocessing method and device - Google Patents


- Publication number

- CN113613024B (application CN202110919840.8A)

- Authority

- CN

- China

- Prior art keywords

- video frame

- preprocessing

- mode

- current video

- determining


- Legal status

- Active


Classifications


- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N19/00—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals

- H04N19/85—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using pre-processing or post-processing specially adapted for video compression

- H04N19/87—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using pre-processing or post-processing specially adapted for video compression involving scene cut or scene change detection in combination with video compression

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Signal Processing (AREA)

- Two-Way Televisions, Distribution Of Moving Picture Or The Like (AREA)

Abstract

Embodiments of the application relate to the field of multimedia technology and provide a video preprocessing method and device. The video preprocessing method comprises: acquiring video frame attribute parameter information of a current video frame; when the video frame attribute parameter information satisfies a preprocessing mode switching condition, determining the corresponding switched target preprocessing mode from at least two preset preprocessing modes; and preprocessing the current video frame according to the target preprocessing mode. In this technical solution, a plurality of preprocessing modes are configured, and the preprocessing mode that matches a video frame is selected from them according to the frame's attribute parameter information and used to preprocess that frame. This keeps the quality difference between the preprocessed video frames relatively small and thereby improves the user's viewing experience.

Description

Technical Field

Embodiments of the present application relate to the field of multimedia technology, and in particular to a video preprocessing method and device.

Background

Video preprocessing is the processing of video data, before video encoding, to prepare it for encoding. A common video preprocessing method processes every video frame of a video according to the same pre-configured processing flow and algorithm.

In practice, changes in the content a video displays, changes in network conditions, and the like can give different video frames of the same video different quality (for example, in image quality and noise). Processing every frame with the same flow and algorithm therefore leaves large quality differences between the processed frames, which degrades the user's viewing experience.

Disclosure of Invention

Embodiments of the present application provide a video preprocessing method and device to solve the problem that existing video preprocessing methods leave large quality differences between the processed video frames.

In a first aspect, an embodiment of the present application provides a video preprocessing method, where the method includes:

acquiring video frame attribute parameter information of a current video frame;

if the attribute parameter information of the video frame meets the preprocessing mode switching condition, determining a corresponding switched target preprocessing mode from at least two preset preprocessing modes;

and preprocessing the current video frame according to the target preprocessing mode.

In a second aspect, an embodiment of the present application provides a video preprocessing apparatus, including:

the acquisition module is used for acquiring video frame attribute parameter information of the current video frame;

the processing mode determining module is used for determining a corresponding switched target preprocessing mode from at least two preset preprocessing modes if the video frame attribute parameter information meets the preprocessing mode switching condition;

and the processing module is used for executing preprocessing on the current video frame according to the target preprocessing mode.

In some possible embodiments, the preset at least two pre-processing modes include: no processing, a color enhancement processing mode, a sharpness enhancement processing mode, and a noise reduction processing mode.

In some possible embodiments, the video frame attribute parameter information includes the scene category corresponding to the current video frame, and the acquisition module is further configured to: extract a first video frame feature of the current video frame and a second video frame feature of the previous video frame; compute the similarity between the first and second video frame features to determine the similarity between the current video frame and the previous video frame; if the similarity is smaller than a preset similarity threshold, determine that the current video frame has switched scenes relative to the previous video frame; and determine the scene category of the current video frame.

In some possible implementations, the processing mode determining module is further configured to determine the color enhancement processing mode as the target processing mode if the scene category of the current video frame is the target scene category.

In some possible implementations, the video frame attribute parameter information further includes at least one of: the sharpness of the current video frame, the resolution of the current video frame, and the frame rate corresponding to the current video frame;

the processing mode determining module is further configured to determine no processing as the target processing mode if the sharpness of the current video frame is greater than a first threshold, the resolution of the current video frame is greater than a second threshold, or the frame rate corresponding to the current video frame is greater than a third threshold;

the processing mode determining module is further configured to determine the sharpness enhancement processing as a target processing mode if the sharpness of the current video frame is smaller than a fourth threshold, where the fourth threshold is smaller than the first threshold;

the processing mode determining module is further configured to determine the noise reduction processing mode as a target processing mode if the noise of the current video frame is greater than a fifth threshold.

In some possible implementations, the scene category of the current video frame being the target scene category includes the case in which the current video frame shows a game scene or an animation scene.

The processing mode determining module is further configured to determine a target preprocessing mode according to a preset priority of each condition when the video frame attribute parameter information meets at least two preprocessing mode switching conditions.

In a third aspect, an electronic device is provided that includes a memory and one or more processors. The memory stores a computer program that, when executed by the one or more processors, causes the electronic device to perform the video preprocessing method of the first aspect.

In a fourth aspect, embodiments of the present application provide a computer-readable storage medium having instructions stored therein, which when executed on a computer, cause the computer to perform some or all of the steps of the video preprocessing method of the first aspect.

In a fifth aspect, embodiments of the present application provide a computer program product, where the computer program product includes computer program code, which when run on a computer causes the computer to implement the video preprocessing method according to the first aspect.

To solve the technical problem of the existing scheme, the embodiments of the present application preconfigure at least two preprocessing modes. After the video frame attribute parameter information of the current video frame is acquired, if that information satisfies a preprocessing mode switching condition, the corresponding switched target preprocessing mode is determined from the at least two preset preprocessing modes, and the current video frame is preprocessed according to it. Because the preprocessing mode is selected adaptively for each video frame from the configured modes rather than fixed for the whole video, the quality difference between the processed video frames is kept relatively small, which improves the user's viewing experience.

Drawings

In order to more clearly illustrate the technical solutions in the embodiments of the present application, the following will briefly describe the drawings that are required to be used in the embodiments of the present application. It will be appreciated by those of ordinary skill in the art that other figures may be derived from these figures without inventive effort.

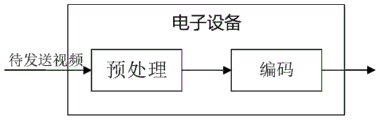

FIG. 1 is a schematic view of an exemplary application scenario provided in an embodiment of the present application;

FIG. 2 is an exemplary method flowchart of a video preprocessing method 100 provided in an embodiment of the present application;

FIG. 3 is an exemplary interface diagram of a video frame provided in an embodiment of the present application;

FIG. 4 is another exemplary interface diagram of a video frame provided in an embodiment of the present application;

FIG. 5A is a schematic diagram of an exemplary composition of a video preprocessing device 50 according to an embodiment of the present application;

FIG. 5B is an exemplary structural schematic diagram of the electronic device 51 provided in an embodiment of the present application.

Detailed Description

The technical solutions of the embodiments of the present application are described below with reference to the drawings in the embodiments of the present application.

The terminology used in the following embodiments is for describing particular embodiments only and is not intended to limit the technical solutions of the application. As used in the specification and the appended claims, the singular forms "a," "an," and "the" are intended to include the plural forms as well, unless the context clearly indicates otherwise. Although the terms first, second, etc. may be used in the following embodiments to describe certain types of objects, the objects are not limited by these terms; the terms only distinguish specific instances of such objects. For example, the following embodiments use first, second, etc. to describe video frame features, but video frame features are not limited by these terms, which only distinguish the features of different video frames. Other classes of objects described with first, second, etc. in the following embodiments are not described again here.

The following describes an application scenario of the embodiments of the present application, an electronic device, and a video preprocessing method executed by using such an electronic device.

Embodiments of the application can be applied to scenarios involving video transmission, such as live streaming and screen casting, and relate to the processing stage before video transmission. Referring to FIG. 1, FIG. 1 illustrates an exemplary application scenario of an embodiment of the present application. Before sending a video, the electronic device preprocesses the video to be sent to obtain video data for encoding, and then encodes that video data to obtain encoded data for transmission.

Optionally, the video to be sent in the embodiments of the present application may include a video signal captured by the electronic device in real time through a video capture device, a video file pre-stored locally on the electronic device, or a video file received over a network. Video capture devices include, for example, cameras, virtual reality (VR) devices, and augmented reality (AR) devices.

A video can be understood as a sequence of frame images (called video frames in the field) played in order at a certain frame rate. Preprocessing a video therefore means, in practice, preprocessing each of its video frames. The purpose of video preprocessing may be to ensure that the image quality of the frame data to be encoded reaches a certain level while reducing the bit rate of that data. To this end, video preprocessing may include truing video frames, color format conversion (e.g., converting video frames from red-green-blue (RGB) format to luminance-chrominance (YUV) format), toning, denoising, and the like.

Video encoding in the embodiments of the present application may be the encoding of the preprocessed video data according to a video coding standard (e.g., the High Efficiency Video Coding (HEVC) H.265 standard) or an industry standard. Such standards include ITU-T H.261, ISO/IEC MPEG-1 Visual, ITU-T H.262 or ISO/IEC MPEG-2 Visual, ITU-T H.263, ISO/IEC MPEG-4 Visual, ITU-T H.264 (also known as ISO/IEC MPEG-4 AVC), and the like. The embodiments of the present application are not limited in this regard.

The electronic device in the embodiments of the present application acts as a video sending device and may be a desktop computer, a mobile computing device, a notebook (e.g., laptop) computer, a tablet computer, a smartphone, a television, a camera, a digital media player, a video game console, a vehicle-mounted computer, or another device that supports video preprocessing. Optionally, the electronic device may implement some or all of the steps of the video preprocessing method of the embodiments of the present application using a central processing unit (CPU) or a graphics processing unit (GPU). Optionally, in an actual implementation, the video preprocessing function may be deployed in a device separate from the video encoding device or in the video encoding device itself. The embodiments of the present application are not limited in this regard.
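The RGB-to-YUV conversion mentioned above is a fixed linear transform applied to every pixel. The document does not say which matrix is used; the sketch below assumes the full-range BT.601 (JFIF-style) coefficients, one common choice, and works on plain Python tuples rather than any particular image library. The helper names are hypothetical.

```python
def _clamp_u8(value: float) -> int:
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return max(0, min(255, int(round(value))))


def rgb_to_yuv_bt601(r: int, g: int, b: int) -> tuple:
    """Convert one 8-bit RGB pixel to full-range YUV (YCbCr) with BT.601 weights (assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b               # luma
    u = -0.168736 * r - 0.331264 * g + 0.5 * b + 128    # blue-difference chroma (Cb)
    v = 0.5 * r - 0.418688 * g - 0.081312 * b + 128    # red-difference chroma (Cr)
    return (_clamp_u8(y), _clamp_u8(u), _clamp_u8(v))


def convert_frame(frame):
    """Apply the conversion to every pixel of a frame given as rows of (r, g, b) tuples."""
    return [[rgb_to_yuv_bt601(*px) for px in row] for row in frame]
```

For example, `convert_frame([[(0, 0, 0), (255, 255, 255)]])` maps black and white to neutral chroma (128). Real pipelines usually also subsample the chroma planes (for example to 4:2:0) before encoding; that step is omitted here.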

The embodiments of the present application provide a video preprocessing method and device in which a plurality of preprocessing modes are preconfigured. The device selects, according to the attribute parameter information of the video frame to be processed, the preprocessing mode that matches that frame and preprocesses the frame with it. This keeps the quality difference between the preprocessed video frames relatively small and improves the user's viewing experience.

Technical solutions of the embodiments of the present application and technical effects produced by the technical solutions of the present application are described below through descriptions of exemplary embodiments.

Referring to FIG. 2, FIG. 2 illustrates an exemplary video preprocessing method 100 (hereinafter method 100). This embodiment is described using a sending device that executes method 100 as an example; the sending device may be the electronic device described above. Method 100 comprises the following steps:

In step S101, the sending device acquires video frame attribute parameter information of the current video frame.

The current video frame is a video frame of the video to be processed. The first and last video frames of the video to be processed usually have little influence on its overall look and feel, so, optionally, the current video frame may be any video frame other than the first and the last. The video to be processed here has the same meaning as the video to be sent illustrated in FIG. 1.

Consistent with the sources of the video to be sent described above, the sending device may optionally capture the current video frame in real time through a video capture device, read it from a locally pre-stored video file, or receive it over a network. How the sending device obtains the current video frame depends on the implementation scenario. For example, in a live-streaming scenario the sending device may capture the current video frame in real time through a video capture device; in a screen-casting scenario it may obtain the current video frame from a locally pre-stored video file.

Optionally, the video frame attribute parameter information may include at least one of a scene category corresponding to the current video frame, a sharpness of the current video frame, a resolution of the current video frame, and a corresponding frame rate of the current video frame.

Optionally, a "scene" in the embodiments of the present application refers to the situation expressed by the picture content of a video frame. For example, the video frame illustrated in FIG. 3 shows a game, so FIG. 3 illustrates a game scene; the video frame illustrated in FIG. 4 shows a product close-up, so FIG. 4 illustrates a product close-up scene. To ensure a good visual experience, video frames of different scenes may need to be displayed with different image quality. Accordingly, the embodiments of the present application may divide scene categories according to their image quality requirements and treat the categories of scenes with particular image quality requirements as target scene categories. For example, in live video, game scenes, animation scenes, and product close-up scenes have particular image quality requirements, so their scene categories may be treated as target scene categories.

In an actual implementation, consecutive video frames of the video to be processed usually show the same scene. The sending device can therefore use the current video frame to determine whether the video to be processed has switched scenes and, if it has, further determine the scene category of the current video frame.

For example, the sending device may extract a first video frame feature from the current video frame and a second video frame feature from the previous video frame, and then compute the similarity between the two features to determine the similarity between the current video frame and the previous video frame. If the similarity is smaller than a preset similarity threshold, the sending device determines that the current video frame has switched scenes relative to the previous video frame and then determines the scene category of the current video frame.

For example, the first video frame feature and the second video frame feature may each be implemented as gray histogram features.

Illustratively, the similarity may be characterized by a distance, such as the Euclidean distance or the cosine distance. For both distances, a smaller distance means the two features are more similar, and a larger distance means they are less similar.
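The scene-switch check described above (gray histogram features, a distance, and a similarity threshold) can be sketched as follows. It assumes 8-bit grayscale frames stored as nested lists, a 16-bin normalized gray histogram, a mapping from distance to similarity, and a hypothetical similarity threshold of 0.5; all of these are illustrative choices, not values given in the document.

```python
import math
from typing import List, Sequence

GrayFrame = Sequence[Sequence[int]]  # rows of 8-bit gray values (0..255)


def gray_histogram(frame: GrayFrame, bins: int = 16) -> List[float]:
    """Normalized gray-level histogram; the bins sum to 1 for a non-empty frame."""
    hist = [0.0] * bins
    total = 0
    for row in frame:
        for px in row:
            hist[px * bins // 256] += 1  # map 0..255 onto bins 0..bins-1
            total += 1
    return [h / total for h in hist] if total else hist


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; 0 means the histograms point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def similarity(a: Sequence[float], b: Sequence[float], metric: str = "cosine") -> float:
    """Turn a distance into a similarity in [0, 1]: smaller distance, higher similarity."""
    if metric == "euclidean":
        return 1.0 / (1.0 + math.dist(a, b))
    return 1.0 - cosine_distance(a, b)


def is_scene_cut(current: GrayFrame, previous: GrayFrame,
                 threshold: float = 0.5, metric: str = "cosine") -> bool:
    """Report a scene switch when histogram similarity falls below the (hypothetical) threshold."""
    h_cur = gray_histogram(current)
    h_prev = gray_histogram(previous)
    return similarity(h_cur, h_prev, metric) < threshold
```

Because both metrics are converted into "higher means more similar", the same threshold comparison works for either; in practice the threshold would need to be tuned separately per metric.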

Sharpness refers to how clearly each detail and its boundaries appear in a video frame, and can be expressed in terms of resolution. Resolution refers to the number of pixels in a video frame, also described as the size or dimensions of the frame, and is usually written as the number of pixels in the width direction times the number of pixels in the height direction; for example, a resolution of 1024×768 means the current video frame is 1024 pixels wide and 768 pixels high. The frame rate is the number of video frames per second (FPS) in the video.

In step S102, if the video frame attribute parameter information satisfies a preprocessing mode switching condition, the corresponding switched target preprocessing mode is determined from at least two preset preprocessing modes.
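The document does not give a formula for the sharpness value it compares against thresholds. One widely used proxy, assumed here purely for illustration and not taken from the document, is the variance of the Laplacian: edges and fine detail produce large Laplacian responses, so a blurry frame scores low. The function name is hypothetical, and its scale does not correspond to the example thresholds (50, 20) used later.

```python
from typing import Sequence


def laplacian_variance(frame: Sequence[Sequence[int]]) -> float:
    """Variance of the 4-neighbor Laplacian over interior pixels; higher means more edge detail."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Discrete Laplacian: sum of the 4 neighbors minus 4 times the center pixel.
            lap = (frame[y - 1][x] + frame[y + 1][x] + frame[y][x - 1]
                   + frame[y][x + 1] - 4 * frame[y][x])
            responses.append(lap)
    if not responses:
        return 0.0  # frame too small to have interior pixels
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)
```

A flat gray frame scores 0.0, while a high-contrast checkerboard scores very high, which matches the intuition that sharper frames carry more edge energy.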

At least two preprocessing modes may be preconfigured in the sending device. Optionally, they may include no processing, a color enhancement processing mode, a sharpness enhancement processing mode, a noise reduction processing mode, and the like. The color enhancement, sharpness enhancement, and noise reduction processing modes each implement their function through a processing algorithm or a combination of processing algorithms, whereas the no-processing mode contains no processing algorithm. Optionally, if two preprocessing modes contain the same processing algorithm, that algorithm may use different parameters in each mode. Optionally, the processing algorithms may include noise reduction algorithms, color enhancement algorithms, sharpening algorithms, contrast enhancement algorithms, and the like.

It is to be understood that the above is merely a schematic description of the processing mode and processing algorithm, and is not limiting of the embodiments of the present application. In other embodiments, the at least two pre-processing modes may also include more or fewer processing modes and processing algorithms.
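The idea that each mode is a combination of processing algorithms, and that the same algorithm may run with different parameters in different modes, can be modeled as a small registry. The sketch below is hypothetical throughout: the mode names, the toy grayscale algorithms (a contrast stretch standing in for color enhancement, a neighbor-averaging filter standing in for noise reduction, and an unsharp-mask-style step for sharpening), and every parameter value are assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

Frame = List[List[int]]                      # 8-bit grayscale rows (toy representation)
Algorithm = Callable[[Frame, float], Frame]


def _clamp(v: float) -> int:
    return max(0, min(255, int(round(v))))


def contrast(frame: Frame, gain: float) -> Frame:
    """Stretch pixel values around mid-gray 128 (stand-in for color enhancement)."""
    return [[_clamp(128 + gain * (p - 128)) for p in row] for row in frame]


def smooth(frame: Frame, strength: float) -> Frame:
    """Blend each pixel with the mean of its left/right neighbors (toy noise reduction)."""
    out = []
    for row in frame:
        new_row = []
        for x, p in enumerate(row):
            left = row[x - 1] if x > 0 else p
            right = row[x + 1] if x < len(row) - 1 else p
            new_row.append(_clamp((1 - strength) * p + strength * (left + right) / 2))
        out.append(new_row)
    return out


def sharpen(frame: Frame, amount: float) -> Frame:
    """Add back the detail removed by smoothing (unsharp-mask style)."""
    blurred = smooth(frame, 1.0)
    return [[_clamp(p + amount * (p - b)) for p, b in zip(row, brow)]
            for row, brow in zip(frame, blurred)]


ALGORITHMS: Dict[str, Algorithm] = {"contrast": contrast, "smooth": smooth, "sharpen": sharpen}

# Each mode is an ordered list of (algorithm, parameter). "contrast" appears in two
# modes with different parameters; "none" contains no algorithm at all.
PREPROCESSING_MODES: Dict[str, List[Tuple[str, float]]] = {
    "none": [],
    "color_enhancement": [("contrast", 1.2)],
    "sharpness_enhancement": [("sharpen", 0.5), ("contrast", 1.05)],
    "noise_reduction": [("smooth", 0.5)],
}


def preprocess(frame: Frame, mode: str) -> Frame:
    """Run the algorithms of the given mode in order."""
    for name, param in PREPROCESSING_MODES[mode]:
        frame = ALGORITHMS[name](frame, param)
    return frame
```

Keeping modes as data rather than code means a new mode, or a different parameter for an existing algorithm, is a one-line change to the registry.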

As described above, the video frame attribute parameter information contains at least one item of information. Consequently, there may be one or several cases in which it satisfies a preprocessing mode switching condition, and correspondingly one or several ways of determining the switched target preprocessing mode from the at least two preset preprocessing modes.

Optionally, if the scene category of the current video frame is a target scene category, the color enhancement processing mode is determined as the target processing mode. If the sharpness of the current video frame is greater than a first threshold, the resolution of the current video frame is greater than a second threshold, or the frame rate corresponding to the current video frame is greater than a third threshold, no processing is determined as the target processing mode. If the sharpness of the current video frame is smaller than a fourth threshold, where the fourth threshold is smaller than the first threshold, the sharpness enhancement processing mode is determined as the target processing mode. If the noise of the current video frame is greater than a fifth threshold, the noise reduction processing mode is determined as the target processing mode.

Illustratively, the scene category of the current video frame being a target scene category includes the case in which the current video frame shows a game scene or an animation scene.

In step S103, the sending device preprocesses the current video frame according to the target preprocessing mode.

After determining the target processing mode, the sending device may preprocess the current video frame using the algorithm or algorithms of that mode.

Optionally, when the video frame attribute parameter information satisfies at least two preprocessing mode switching conditions, the transmitting device may determine the target preprocessing mode according to a preset priority of each condition.

It will be appreciated that the priorities of the above conditions are only illustrative and do not limit the embodiments of the present application. In an actual implementation, the priority of each condition can be set flexibly as required.

For example:

Preprocessing mode one: the current video frame satisfies at least one of the following: its resolution is greater than 540p, its frame rate is greater than 25, or its sharpness is greater than 50. The target processing mode is no processing, so the sending device does not preprocess the current video frame.

Preprocessing mode two: the resolution of the current video frame is less than 540p, its frame rate is equal to 25, and its sharpness is less than 20. The sending device performs sharpness enhancement on the current video frame according to the target processing mode, the sharpness enhancement processing mode.

Preprocessing mode three: applies when neither the condition of preprocessing mode one nor that of preprocessing mode two is satisfied. In preprocessing mode three, the current video frame shows an animation or a game, and the sending device performs color enhancement on the current video frame according to the target processing mode, the color enhancement processing mode.

Preprocessing mode four: applies when none of the conditions of preprocessing modes one, two, and three is satisfied. In preprocessing mode four, the noise intensity of the current video frame is greater than 5, and the sending device performs noise reduction on the current video frame according to the target processing mode, the noise reduction processing mode.

It should be understood that preprocessing modes one to four above are only illustrative and do not limit the embodiments of the present application. In other embodiments, the threshold of each condition may take other values, and the sending device may perform other preprocessing operations on the current video frame when it satisfies other conditions. The embodiments of the present application are not limited in this regard.

The at least two preprocessing modes, the content of the attribute information, and the conditions corresponding to the attribute information are all described schematically and do not limit the embodiments of the present application. In implementing the technical solution, each of them may take other forms, which are not enumerated here.

In summary, in an implementation of the embodiments of the present application, at least two preprocessing modes are preconfigured. After the video frame attribute parameter information of the current video frame is acquired, if it satisfies a preprocessing mode switching condition, the corresponding switched target preprocessing mode is determined from the at least two preset preprocessing modes, and the current video frame is preprocessed according to that mode. Selecting the preprocessing mode adaptively from several configured modes, based on each frame's attribute parameter information, keeps the quality difference between the processed video frames relatively small and improves the user's viewing experience.
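Preprocessing modes one to four form a priority-ordered rule list: each rule is checked only if the earlier ones did not fire. A minimal sketch of that ordering, using the example thresholds from the text, follows. It assumes that "540p" is compared against the frame height in pixels, that sharpness and noise are already available on the scales the thresholds refer to, and that a frame matching no rule falls back to no processing; that fallback is hypothetical, since the example does not cover it. The type and function names are also hypothetical.

```python
from dataclasses import dataclass

TARGET_SCENES = {"game", "animation"}  # target scene categories from the example


@dataclass
class FrameAttributes:
    height: int        # vertical resolution in pixels; "540p" -> 540 (assumption)
    frame_rate: float  # frames per second
    sharpness: float   # on whatever scale the thresholds 50 and 20 refer to
    noise: float       # noise intensity on the scale of the threshold 5
    scene: str         # scene category label, e.g. "game"


def select_mode(a: FrameAttributes) -> str:
    """Return the target preprocessing mode; earlier rules take priority."""
    # Mode one: frame already good enough, so no processing.
    if a.height > 540 or a.frame_rate > 25 or a.sharpness > 50:
        return "none"
    # Mode two: low resolution, 25 fps and blurry, so sharpness enhancement.
    if a.height < 540 and a.frame_rate == 25 and a.sharpness < 20:
        return "sharpness_enhancement"
    # Mode three: game or animation content, so color enhancement.
    if a.scene in TARGET_SCENES:
        return "color_enhancement"
    # Mode four: noisy frame, so noise reduction.
    if a.noise > 5:
        return "noise_reduction"
    # Hypothetical fallback: the example does not say what happens here.
    return "none"
```

Because priority is simply the order of the `if` blocks, changing the priority of the conditions, as the text allows, amounts to reordering them.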

The above embodiments describe the video preprocessing method from the perspective of the actions performed by the device, such as acquiring video frame attribute parameter information, detecting whether a switching condition is satisfied, and determining the target preprocessing mode. In the embodiments of the present application, these processing steps may be implemented in hardware or in a combination of hardware and computer software. Whether a function is implemented as hardware or as computer-software-driven hardware depends on the particular application and the design constraints of the solution. Skilled artisans may implement the described functionality in different ways for each particular application, but such implementation decisions should not be interpreted as departing from the scope of the present application.

For example, if the above steps are implemented by software modules, as shown in FIG. 5A, an embodiment of the present application provides a video preprocessing device 50. The video preprocessing device 50 may include an acquisition module 501, a processing mode determining module 502, and a processing module 503, and may be used to perform some or all of the operations of method 100 described above.

For example, the acquisition module 501 may be configured to acquire the video frame attribute parameter information of the current video frame; the processing mode determining module 502 may be configured to determine a corresponding target preprocessing mode to switch to from the at least two preset preprocessing modes if the video frame attribute parameter information meets the preprocessing mode switching condition; and the processing module 503 may be configured to preprocess the current video frame according to the target preprocessing mode.
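As a rough illustration of how the three modules could cooperate, the Python sketch below wires hypothetical `acquire`, `decide`, and per-mode filter callables into a single `VideoPreprocessor` class. The class and parameter names, the grayscale frame representation, and the rule that the previous mode is kept when no switching condition is met are assumptions made for illustration, not details taken from the application.

```python
from enum import Enum
from typing import Callable, Dict, List, Optional

# Hypothetical frame representation: grayscale rows of 0-255 pixel values.
Frame = List[List[int]]


class Mode(Enum):
    NONE = "no processing"
    COLOR_ENHANCE = "color enhancement"
    SHARPEN = "sharpness enhancement"
    DENOISE = "noise reduction"


class VideoPreprocessor:
    """Sketch of device 50: acquisition (501), mode determination (502), processing (503)."""

    def __init__(
        self,
        acquire: Callable[[Frame], dict],
        decide: Callable[[dict], Optional[Mode]],
        filters: Dict[Mode, Callable[[Frame], Frame]],
    ) -> None:
        self.acquire = acquire  # role of acquisition module 501
        self.decide = decide    # role of processing mode determining module 502
        self.filters = filters  # per-mode operations used by processing module 503
        self.mode = Mode.NONE   # assumption: start with no processing

    def process(self, frame: Frame) -> Frame:
        attrs = self.acquire(frame)
        target = self.decide(attrs)
        if target is not None:  # a switching condition is met: switch modes
            self.mode = target
        # Modes without a registered filter fall back to the identity operation.
        return self.filters.get(self.mode, lambda f: f)(frame)
```

Keeping the current mode in the object lets a switch persist across frames until another condition fires, which is one possible reading of "switched target preprocessing mode".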

It can be seen that the video preprocessing device 50 provided in the embodiments of the present application preconfigures at least two preprocessing modes. After acquiring the video frame attribute parameter information of the current video frame, if that information meets the preprocessing mode switching condition, the device determines a corresponding target preprocessing mode to switch to from the at least two preset preprocessing modes and then preprocesses the current video frame according to the determined target preprocessing mode. Because the preprocessing mode is selected adaptively from multiple modes, the quality difference between the processed video frames is kept relatively small, which can improve the user's viewing experience.

Optionally, the at least two preset preprocessing modes include: no processing, a color enhancement processing mode, a sharpness enhancement processing mode, and a noise reduction processing mode.

Optionally, the video frame attribute parameter information includes a scene category corresponding to the current video frame, and the acquisition module 501 is further configured to: extract a first video frame feature of the current video frame and a second video frame feature of the previous video frame; perform a similarity calculation on the first and second video frame features to determine the similarity between the current video frame and the previous video frame; if the similarity is smaller than a preset similarity threshold, determine that a scene switch has occurred at the current video frame relative to the previous video frame; and determine the scene category of the current video frame.
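The scene-switch check described above can be sketched as follows. The application does not say which frame features or similarity measure are used; this sketch assumes a normalized 16-bin grayscale histogram as the feature, cosine similarity as the measure, and a placeholder threshold of 0.8. Determining the actual scene category (for example, game or animation) would require a separate classifier and is left out.

```python
import math
from typing import List, Sequence

# Hypothetical frame representation: grayscale rows of 0-255 pixel values.
Frame = List[List[int]]


def gray_histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Normalized grayscale histogram, used here as an assumed frame feature."""
    hist = [0.0] * bins
    total = 0
    for row in frame:
        for p in row:
            hist[min(p * bins // 256, bins - 1)] += 1
            total += 1
    return [h / total for h in hist] if total else hist


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def is_scene_switch(current: Frame, previous: Frame, threshold: float = 0.8) -> bool:
    """Report a scene switch when feature similarity falls below the threshold."""
    first_feature = gray_histogram(current)
    second_feature = gray_histogram(previous)
    return cosine_similarity(first_feature, second_feature) < threshold
```

Histogram features are cheap and insensitive to small motion, which suits a per-frame check, but any feature and similarity pair could be substituted.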

Optionally, the processing mode determining module 502 is further configured to determine the color enhancement processing mode as the target preprocessing mode if the scene category of the current video frame is a target scene category.

Optionally, the video frame attribute parameter information further includes at least one of: the sharpness of the current video frame, the resolution of the current video frame, and the frame rate corresponding to the current video frame. In this example, the processing mode determining module 502 is further configured to determine no processing as the target preprocessing mode if the sharpness of the current video frame is greater than a first threshold, the resolution of the current video frame is greater than a second threshold, or the frame rate corresponding to the current video frame is greater than a third threshold. The processing mode determining module 502 is further configured to determine the sharpness enhancement processing mode as the target preprocessing mode if the sharpness of the current video frame is smaller than a fourth threshold, where the fourth threshold is smaller than the first threshold. The processing mode determining module 502 is further configured to determine the noise reduction processing mode as the target preprocessing mode if the noise of the current video frame is greater than a fifth threshold.
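The threshold rules above can be expressed as a function that collects every mode whose switching condition holds; when more than one holds, a priority rule is still needed to pick the target. All threshold values, the use of a pixel count for resolution, normalized 0-to-1 sharpness and noise scores, and the `Thresholds`/`FrameInfo` names are hypothetical placeholders, since the text gives no concrete numbers or units. The scene check uses the game/animation target scene categories named in this section.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Mode(Enum):
    NONE = "no processing"
    COLOR_ENHANCE = "color enhancement"
    SHARPEN = "sharpness enhancement"
    DENOISE = "noise reduction"


@dataclass
class Thresholds:
    # Placeholder values only; the application does not specify any numbers.
    t1_sharpness_high: float = 0.8
    t2_resolution_high: int = 3840 * 2160  # assumed unit: pixel count
    t3_frame_rate_high: float = 60.0
    t4_sharpness_low: float = 0.3
    t5_noise_high: float = 0.2

    def __post_init__(self) -> None:
        # The text requires the fourth threshold to be smaller than the first.
        if not self.t4_sharpness_low < self.t1_sharpness_high:
            raise ValueError("fourth threshold must be smaller than the first")


@dataclass
class FrameInfo:
    sharpness: float
    resolution: int
    frame_rate: float
    noise: float
    scene: Optional[str] = None  # e.g. "game" or "animation"


TARGET_SCENES = {"game", "animation"}


def candidate_modes(info: FrameInfo, t: Thresholds) -> List[Mode]:
    """Return every mode whose switching condition this frame satisfies."""
    modes: List[Mode] = []
    if (info.sharpness > t.t1_sharpness_high
            or info.resolution > t.t2_resolution_high
            or info.frame_rate > t.t3_frame_rate_high):
        modes.append(Mode.NONE)
    if info.sharpness < t.t4_sharpness_low:
        modes.append(Mode.SHARPEN)
    if info.noise > t.t5_noise_high:
        modes.append(Mode.DENOISE)
    if info.scene in TARGET_SCENES:
        modes.append(Mode.COLOR_ENHANCE)
    return modes
```

Because the fourth threshold lies below the first, no frame can satisfy both the "already sharp" and "needs sharpening" conditions on sharpness alone.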

Optionally, the target scene category includes a game scene or an animation scene; that is, the scene category of the current video frame is a target scene category when the current video frame is a game scene or an animation scene.

Optionally, when the video frame attribute parameter information meets at least two preprocessing mode switching conditions, the processing mode determining module 502 is further configured to determine the target preprocessing mode according to a preset priority of each condition.
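A minimal sketch of priority-based resolution, assuming the satisfied conditions have already been mapped to candidate modes. The order in `DEFAULT_PRIORITY` and the function name `resolve_target_mode` are hypothetical; the application only states that each condition has a preset priority, not what that priority is.

```python
from enum import Enum
from typing import Iterable, Optional, Sequence


class Mode(Enum):
    NONE = "no processing"
    COLOR_ENHANCE = "color enhancement"
    SHARPEN = "sharpness enhancement"
    DENOISE = "noise reduction"


# Hypothetical priority order, highest first.
DEFAULT_PRIORITY: Sequence[Mode] = (
    Mode.NONE,
    Mode.DENOISE,
    Mode.SHARPEN,
    Mode.COLOR_ENHANCE,
)


def resolve_target_mode(
    satisfied: Iterable[Mode], priority: Sequence[Mode] = DEFAULT_PRIORITY
) -> Optional[Mode]:
    """Pick the highest-priority mode among those whose conditions are met."""
    hits = set(satisfied)
    for mode in priority:
        if mode in hits:
            return mode
    return None  # no switching condition met
```

Returning `None` when no condition holds lets the caller keep its current mode.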

It will be appreciated that the above division into modules/units is merely a logical division of functions. In an actual implementation, the functions of the above modules may be integrated into hardware entities: for example, the functions of the processing mode determining module 502 and the processing module 503 may be implemented by a processor, the functions of the acquisition module 501 may be implemented by a transceiver, and the programs and instructions implementing the functions of these modules may be stored in a memory.

For example, fig. 5B provides an electronic device 51 that may implement the functions of the foregoing transmitting device. The electronic device 51 includes a processor 511, a transceiver 512, and a memory 513. The transceiver 512 is configured to perform the information acquisition in method 100 and may alternatively be implemented as an image receiving device, such as a camera. The memory 513 may be used to store programs/code preloaded by the video preprocessing device 50, code executed by the processor 511, and so on. When the processor 511 executes the code stored in the memory 513, the electronic device 51 performs some or all of the operations of the video preprocessing method in method 100 described above.

Specific implementation details are described in the embodiments of method 100 above and are not repeated here.

In a specific implementation, corresponding to the foregoing electronic device, an embodiment of the present application further provides a computer storage medium. The computer storage medium provided in the electronic device may store a program which, when executed, implements some or all of the steps of the embodiments of the video preprocessing method, including method 100. The storage medium may be a magnetic disk, an optical disk, a read-only memory (ROM), a random access memory (RAM), or the like.

One or more of the above modules or units may be implemented in software, hardware, or a combination of both. When any of them is implemented in software, the software exists in the form of computer program instructions stored in a memory, and a processor may execute the program instructions to implement the above method flows. The processor may include, but is not limited to, at least one of: a central processing unit (CPU), a microprocessor, a digital signal processor (DSP), a microcontroller unit (MCU), or an artificial intelligence processor, each of which may include one or more cores for executing software instructions to perform operations or processing. The processor may be built into a system on a chip (SoC) or an application-specific integrated circuit (ASIC), or may be a separate semiconductor chip. In addition to the cores for executing software instructions, the processor may further include necessary hardware accelerators, such as field programmable gate arrays (FPGAs), programmable logic devices (PLDs), or logic circuits implementing dedicated logic operations.

When the above modules or units are implemented in hardware, the hardware may be any one or any combination of a CPU, a microprocessor, a DSP, an MCU, an artificial intelligence processor, an ASIC, an SoC, an FPGA, a PLD, dedicated digital circuitry, a hardware accelerator, or a non-integrated discrete device, which may perform the above method flows either by running the necessary software or independently of software.

Further, the structure in fig. 5B may include a bus interface. The bus interface may include any number of interconnected buses and bridges that link together various circuits, including one or more processors, represented by the processor, and memory, represented by the memory. The bus interface may also link together various other circuits, such as peripheral devices, voltage regulators, and power management circuits, which are well known in the art and are therefore not described further herein. The bus interface provides an interface, and the transceiver provides a means for communicating with various other apparatus over a transmission medium. The processor is responsible for managing the bus architecture and general processing, and the memory may store data used by the processor when performing operations.

When the above modules or units are implemented in software, they may be implemented in whole or in part in the form of a computer program product. The computer program product includes one or more computer instructions. When the computer instructions are loaded and executed on a computer, the flows or functions according to the embodiments of the present invention are produced in whole or in part. The computer may be a general-purpose computer, a special-purpose computer, a computer network, or another programmable apparatus. The computer instructions may be stored in a computer-readable storage medium or transmitted from one computer-readable storage medium to another, for example, in a wired manner (e.g., coaxial cable, optical fiber, or digital subscriber line (DSL)) or a wireless manner (e.g., infrared, radio, or microwave). The computer-readable storage medium may be any usable medium accessible to a computer, or a data storage device, such as a server or data center, that integrates one or more usable media. The usable medium may be a magnetic medium (e.g., floppy disk, hard disk, or magnetic tape), an optical medium (e.g., DVD), or a semiconductor medium (e.g., solid state disk (SSD)), among others.

It should be understood that, in the various embodiments of the present application, the sequence numbers of the processes do not imply an order of execution. The execution order of the processes should be determined by their functions and internal logic, and the sequence numbers should not constitute any limitation on the implementation of the embodiments.

The embodiments in this specification are described in a progressive manner: for identical or similar parts, the embodiments may refer to one another, and each embodiment focuses on its differences from the others. In particular, the apparatus and system embodiments are described relatively briefly because they are substantially similar to the method embodiments; for relevant details, refer to the description of the method embodiments.

While preferred embodiments of the present application have been described, those skilled in the art may make additional variations and modifications to these embodiments once they learn of the basic inventive concept. Therefore, the appended claims are intended to be interpreted as covering the preferred embodiments and all variations and modifications that fall within the scope of the present application.

The foregoing embodiments are provided to illustrate the general principles of the present invention and are not intended to limit its scope.

Claims (8)

1. A method of video preprocessing, the method comprising:

acquiring video frame attribute parameter information of a current video frame;

if the video frame attribute parameter information meets the preprocessing mode switching condition, determining a corresponding switched target preprocessing mode from at least two preset preprocessing modes;

preprocessing the current video frame according to the target preprocessing mode, so that video data for encoding is obtained after the video frames of the video to be processed are preprocessed;

wherein the preset at least two preprocessing modes comprise: a color enhancement processing mode, a sharpness enhancement processing mode, and a noise reduction processing mode;

wherein determining, if the video frame attribute parameter information meets the preprocessing mode switching condition, a corresponding switched target preprocessing mode from the at least two preset preprocessing modes comprises:

if the sharpness of the current video frame is smaller than a fourth threshold, determining the sharpness enhancement processing mode as the target preprocessing mode;

if the noise of the current video frame is greater than a fifth threshold, determining the noise reduction processing mode as the target preprocessing mode;

and if the scene category of the current video frame is a game scene or an animation scene, determining the color enhancement processing mode as the target preprocessing mode.

2. The method of claim 1, wherein the at least two preset preprocessing modes further comprise: no processing.

3. The method according to claim 1 or 2, wherein the video frame attribute parameter information includes a scene category corresponding to the current video frame,

the obtaining the video frame attribute parameter information of the current video frame comprises the following steps:

extracting a first video frame feature of the current video frame and a second video frame feature of the previous video frame of the current video frame;

performing a similarity calculation on the first video frame feature and the second video frame feature to determine the similarity between the current video frame and the previous video frame;

if the similarity is smaller than a preset similarity threshold, determining that the current video frame has switched scenes relative to the previous video frame; and

determining a scene category of the current video frame.

4. The method according to claim 1 or 2, wherein if the video frame attribute parameter information satisfies a preprocessing mode switching condition, determining a corresponding switched target preprocessing mode from at least two preset preprocessing modes, further comprises:

if the sharpness of the current video frame is greater than a first threshold, or the resolution of the current video frame is greater than a second threshold, or the frame rate corresponding to the current video frame is greater than a third threshold, determining no processing as the target preprocessing mode;

wherein the fourth threshold is less than the first threshold.

5. The method according to claim 1 or 2, wherein if the video frame attribute parameter information satisfies a preprocessing mode switching condition, determining a corresponding switched target preprocessing mode from at least two preset preprocessing modes, further comprises:

when the video frame attribute parameter information meets at least two preprocessing mode switching conditions, determining the target preprocessing mode according to a preset priority of each condition.

6. A video preprocessing device, the device comprising:

the acquisition module is used for acquiring video frame attribute parameter information of the current video frame;

the processing mode determining module is used for determining a corresponding switched target preprocessing mode from at least two preset preprocessing modes if the video frame attribute parameter information meets the preprocessing mode switching condition;

the processing module is used for preprocessing the current video frame according to the target preprocessing mode, so that video data for encoding is obtained after the video frames of the video to be processed are preprocessed;

wherein the preset at least two preprocessing modes comprise: a color enhancement processing mode, a sharpness enhancement processing mode, and a noise reduction processing mode;

the processing mode determining module is specifically configured to:

if the sharpness of the current video frame is smaller than a fourth threshold, determining the sharpness enhancement processing mode as the target preprocessing mode;

if the noise of the current video frame is greater than a fifth threshold, determining the noise reduction processing mode as the target preprocessing mode;

and if the scene category of the current video frame is a game scene or an animation scene, determining the color enhancement processing mode as the target preprocessing mode.

7. An electronic device, comprising a memory and one or more processors, wherein the memory is configured to store a computer program, and the computer program, when executed by the one or more processors, causes the electronic device to perform the video preprocessing method according to any one of claims 1 to 5.

8. A computer readable storage medium, characterized in that the computer readable storage medium stores a computer program which, when run on a computer, causes the computer to perform the video preprocessing method according to any one of claims 1 to 5.

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110909960X | 2021-08-09 | | |
| CN202110909960 | 2021-08-09 | | |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN113613024A (en) | 2021-11-05 |
| CN113613024B (en) | 2023-04-25 |

Family ID: 78308242

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202110919840.8A (Active, CN113613024B) | Video preprocessing method and device | 2021-08-09 | 2021-08-11 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN113613024B (en) |

Families Citing this family (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114302175A (en) * | 2021-12-01 | 2022-04-08 | 阿里巴巴(中国)有限公司 | Video processing method and device |

Citations (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112805990A (en) * | 2018-11-15 | 2021-05-14 | 深圳市欢太科技有限公司 | Video processing method and device, electronic equipment and computer readable storage medium |

Family Cites Families (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN104581199B (en) * | 2014-12-12 | 2018-02-27 | 百视通网络电视技术发展有限责任公司 | Processing system for video and its processing method |
| CN107481327B (en) * | 2017-09-08 | 2019-03-15 | 腾讯科技(深圳)有限公司 | About the processing method of augmented reality scene, device, terminal device and system |
| CN109905711B (en) * | 2019-02-28 | 2021-02-09 | 深圳英飞拓智能技术有限公司 | Image processing method and system and terminal equipment |
| CN112312231B (en) * | 2019-07-31 | 2022-09-02 | 北京金山云网络技术有限公司 | Video image coding method and device, electronic equipment and medium |
| CN110958469A (en) * | 2019-12-13 | 2020-04-03 | 联想(北京)有限公司 | Video processing method and device, electronic equipment and storage medium |
| CN111698553B (en) * | 2020-05-29 | 2022-09-27 | 维沃移动通信有限公司 | Video processing method and device, electronic equipment and readable storage medium |

- 2021-08-11: CN202110919840.8A (CN), patent/CN113613024B/en, status: Active


Also Published As

| Publication number | Publication date |
|---|---|
| CN113613024A (en) | 2021-11-05 |

Similar Documents

| Publication | Title |
|---|---|
| CN111681167B (en) | Image quality adjusting method and device, storage medium and electronic equipment |
| US9013536B2 (en) | Augmented video calls on mobile devices |
| US10887614B2 (en) | Adaptive thresholding for computer vision on low bitrate compressed video streams |
| JP7359521B2 (en) | Image processing method and device |
| US20150117540A1 (en) | Coding apparatus, decoding apparatus, coding data, coding method, decoding method, and program |
| US8594449B2 (en) | MPEG noise reduction |
| JP2022550565A (en) | IMAGE PROCESSING METHOD, IMAGE PROCESSING APPARATUS, ELECTRONIC DEVICE, AND COMPUTER PROGRAM |
| CN112954393A (en) | Target tracking method, system, storage medium and terminal based on video coding |
| CN113518185A (en) | Video conversion processing method and device, computer readable medium and electronic equipment |
| JP2011091510A (en) | Image processing apparatus and control method therefor |
| US8630500B2 (en) | Method for the encoding by segmentation of a picture |
| CN113613024B (en) | Video preprocessing method and device |
| WO2016197323A1 (en) | Video encoding and decoding method, and video encoder/decoder |
| CN115471413A (en) | Image processing method and device, computer readable storage medium and electronic device |
| WO2022261849A1 (en) | Method and system of automatic content-dependent image processing algorithm selection |
| CN113542864B (en) | Video splash screen area detection method, device and equipment and readable storage medium |
| Xia et al. | Visual sensitivity-based low-bit-rate image compression algorithm |
| US10999582B1 (en) | Semantically segmented video image compression |
| Zhao et al. | Fast CU partition decision strategy based on human visual system perceptual quality |
| CN112488933A (en) | Video detail enhancement method and device, mobile terminal and storage medium |
| US20230326086A1 (en) | Systems and methods for image and video compression |
| CN116980604A (en) | Video encoding method, video decoding method and related equipment |
| CN114266696B (en) | Image processing method, apparatus, electronic device, and computer-readable storage medium |
| CN108668169A (en) | Image information processing method and device, storage medium |
| KR20240038779A (en) | Encoding and decoding methods, and devices |

Legal Events

| Code | Title |
|---|---|
| PB01 | Publication |
| SE01 | Entry into force of request for substantive examination |
| GR01 | Patent grant |