CN110798696B - Live broadcast interaction method and device, electronic equipment and readable storage medium - Google Patents


- Publication number

- CN110798696B (application number CN201911130084.XA)

- Authority

- CN

- China

- Prior art keywords

- interactive

- live broadcast

- content

- interaction

- target


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active


Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/21—Server components or server architectures

- H04N21/218—Source of audio or video content, e.g. local disk arrays

- H04N21/2187—Live feed

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/40—Client devices specifically adapted for the reception of or interaction with content, e.g. set-top-box [STB]; Operations thereof

- H04N21/43—Processing of content or additional data, e.g. demultiplexing additional data from a digital video stream; Elementary client operations, e.g. monitoring of home network or synchronising decoder's clock; Client middleware

- H04N21/439—Processing of audio elementary streams

- H04N21/4394—Processing of audio elementary streams involving operations for analysing the audio stream, e.g. detecting features or characteristics in audio streams

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/40—Client devices specifically adapted for the reception of or interaction with content, e.g. set-top-box [STB]; Operations thereof

- H04N21/47—End-user applications

- H04N21/478—Supplemental services, e.g. displaying phone caller identification, shopping application

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Signal Processing (AREA)

- Databases & Information Systems (AREA)

- Information Transfer Between Computers (AREA)

- Two-Way Televisions, Distribution Of Moving Picture Or The Like (AREA)

Abstract

The embodiments of the application provide a live broadcast interaction method and device, electronic equipment and a readable storage medium. Based on the audio interaction data between an anchor and the audience in a live broadcast room, a live broadcast providing terminal sends target interactive content matched with the audio interaction data to a server. The server generates a corresponding interactive service message from the target interactive content and sends it to each live broadcast watching terminal that has entered the live broadcast room. The live broadcast watching terminal then displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message. As a result, an interactive animation special effect can be played based on the target interactive content matched with the audio interaction data. This enriches the presentation and experience of live broadcast interaction and benefits the traffic operation of the live broadcast platform.

Description

Technical Field

The application relates to the field of internet live broadcasting, and in particular to a live broadcast interaction method and device, an electronic device and a readable storage medium.

Background

During a live broadcast, the anchor generates a large amount of audio interaction data with the audience, and this data represents the interactive content between the anchor and the audience. In current interaction modes, the audio interaction data can only be transmitted directly to the audience's live broadcast watching terminals for playback, as part of the audio and video stream. As a result, live broadcast interaction is presented in a single form and the interactivity between the audience and the anchor is weak. This degrades the audience's experience and affects the traffic operation of the live broadcast platform.

Disclosure of Invention

In view of this, an object of the present application is to provide a live broadcast interaction method, a live broadcast interaction device, an electronic device, and a readable storage medium, which can execute a corresponding interactive animation special effect according to target interaction content matched with audio interaction data, enrich a presentation manner and an interactive experience of live broadcast interaction, and further facilitate traffic operation of a live broadcast platform.

According to one aspect of the application, a live broadcast interaction method is provided and applied to a live broadcast interaction system, the live broadcast interaction system comprises a live broadcast providing terminal, a live broadcast watching terminal and a server in communication connection with the live broadcast providing terminal and the live broadcast watching terminal, and the method comprises the following steps:

the live broadcast providing terminal sends, according to audio interaction data between an anchor and the audience in a live broadcast room, target interactive content matched with the audio interaction data to the server;

the server generates a corresponding interactive service message according to the target interactive content and sends the interactive service message to a live broadcast watching terminal entering the live broadcast room;

and the live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

According to another aspect of the present application, a live broadcast interaction method is provided, which is applied to a live broadcast providing terminal, where the live broadcast providing terminal is in communication connection with a server, and the server is in communication connection with a live broadcast watching terminal, and the method includes:

acquiring audio interaction data between an anchor and the audience in a live broadcast room;

and sending the target interactive content matched with the audio interaction data to the server. The server then generates a corresponding interactive service message from the target interactive content and sends it to a live broadcast watching terminal entering the live broadcast room. The live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

According to another aspect of the present application, a live broadcast interaction method is provided, which is applied to a server, where the server is in communication connection with a live broadcast providing terminal and a live broadcast watching terminal, respectively, and the method includes:

receiving target interactive content that the live broadcast providing terminal sends according to audio interaction data between an anchor and the audience in a live broadcast room and that matches the audio interaction data;

and generating a corresponding interactive service message according to the target interactive content, and sending the interactive service message to a live broadcast watching terminal entering the live broadcast room, so that the live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

According to another aspect of the application, a live broadcast interaction device is provided, and is applied to a live broadcast providing terminal, the live broadcast providing terminal is in communication connection with a server, the server is in communication connection with a live broadcast watching terminal, and the device comprises:

the acquisition module is used for acquiring audio interaction data between an anchor and the audience in a live broadcast room;

and the sending module is used for sending the target interactive content matched with the audio interactive data to the server so that the server generates a corresponding interactive service message according to the target interactive content, and sends the interactive service message to a live broadcast watching terminal entering a live broadcast room, so that the live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

According to another aspect of the present application, a live broadcast interaction device is provided, which is applied to a server, where the server is in communication connection with a live broadcast providing terminal and a live broadcast watching terminal, respectively, and the device includes:

the receiving module is used for receiving target interactive content that the live broadcast providing terminal sends according to audio interaction data between an anchor and the audience in a live broadcast room and that matches the audio interaction data;

and the generating module is used for generating a corresponding interactive service message according to the target interactive content and sending the interactive service message to a live broadcast watching terminal entering the live broadcast room, so that the live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

According to another aspect of the present application, an electronic device is provided. The electronic device includes a machine-readable storage medium and a processor, and the machine-readable storage medium stores machine-executable instructions. When the processor executes these instructions, the electronic device serves either as a live broadcast providing terminal or as a server and implements the corresponding live broadcast interaction method.

According to another aspect of the present application, there is provided a readable storage medium having stored therein machine-executable instructions that, when executed, implement the aforementioned live interaction method.

In any of the above aspects, the live broadcast providing terminal sends, according to the audio interaction data between the anchor and the audience in the live broadcast room, target interactive content matched with the audio interaction data to the server. The server generates a corresponding interactive service message from the target interactive content and sends it to the live broadcast watching terminal entering the live broadcast room. The live broadcast watching terminal then displays the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message. As a result, an interactive animation special effect can be played based on the target interactive content matched with the audio interaction data. This enriches the presentation and experience of live broadcast interaction and benefits the traffic operation of the live broadcast platform.

Drawings

To more clearly illustrate the technical solutions of the embodiments of the present application, the drawings needed in the embodiments are briefly described below. It should be understood that the following drawings only illustrate some embodiments of the present application and should therefore not be considered as limiting the scope. Those skilled in the art can also obtain other related drawings from these drawings without inventive effort.

Fig. 1 is a schematic view illustrating an application scenario of a live broadcast system provided in an embodiment of the present application;

Fig. 2 shows a first flowchart of a live broadcast interaction method provided by an embodiment of the present application;

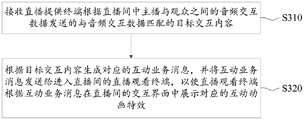

Fig. 3 shows a flowchart of the sub-steps of step S110 shown in Fig. 2;

Fig. 4 shows a flowchart of the sub-steps of step S120 shown in Fig. 2;

Fig. 5 shows a flowchart of the sub-steps of step S130 shown in Fig. 2;

Fig. 6 is a schematic view of an interactive interface in a live broadcast providing terminal according to an embodiment of the present application;

Fig. 7 is a schematic diagram of an interactive animation special effect displayed in an interactive interface of a live broadcast room according to an embodiment of the present application;

Fig. 8 shows a second flowchart of a live broadcast interaction method provided in an embodiment of the present application;

Fig. 9 shows a third flowchart of a live broadcast interaction method provided in an embodiment of the present application;

Fig. 10 is a functional module diagram of a first live broadcast interaction device provided by an embodiment of the present application;

Fig. 11 is a functional module diagram of a second live broadcast interaction device provided by an embodiment of the present application;

Fig. 12 shows a structural diagram of the components of an electronic device for implementing the server and the live broadcast providing terminal shown in Fig. 1 according to an embodiment of the present application.

Detailed Description

To make the purpose, technical solutions and advantages of the embodiments of the present application clearer, the technical solutions in the embodiments are described clearly and completely below with reference to the drawings. The drawings in the present application are for illustrative and descriptive purposes only and are not intended to limit the scope of protection of the present application. In addition, the schematic drawings are not necessarily drawn to scale. The flowcharts used in this application illustrate operations implemented according to some of the embodiments of the present application. The operations of the flowcharts may be performed out of order, and steps with no logical dependency on one another may be performed in reverse order or simultaneously. Under the guidance of this application, one skilled in the art may add one or more other operations to the flowcharts or remove one or more operations from them.

In addition, the described embodiments are only a part of the embodiments of the present application, and not all of the embodiments. The components of the embodiments of the present application, generally described and illustrated in the figures herein, can be arranged and designed in a wide variety of different configurations. Thus, the following detailed description of the embodiments of the present application, presented in the accompanying drawings, is not intended to limit the scope of the claimed application, but is merely representative of selected embodiments of the application. All other embodiments, which can be derived by a person skilled in the art from the embodiments of the present application without making any creative effort, shall fall within the protection scope of the present application.

Referring to Fig. 1, Fig. 1 shows a schematic diagram of an interaction scenario of a live broadcast system 10 provided in an embodiment of the present application. For example, the live broadcast system 10 may serve a service platform such as an internet live broadcasting platform. The live broadcast system 10 may include a server 100, a live broadcast providing terminal 200 and a live broadcast viewing terminal 300. The server 100 is in communication connection with the live broadcast viewing terminal 300 and the live broadcast providing terminal 200, respectively, and provides live broadcast services for both. For example, the server 100 may provide a live interactive service for the anchor of the live broadcast providing terminal 200 and the audience of the live viewing terminal 300. This lets the anchor and the audience interact in a live broadcast room, for example by sending bullet comments (barrage) or giving live gifts.

In some implementation scenarios, the live viewing terminal 300 and the live providing terminal 200 may be used interchangeably. For example, the anchor of the live broadcast providing terminal 200 may use it to provide a live video service to viewers, or to watch live video provided by other anchors as a viewer. As another example, a viewer of the live viewing terminal 300 may use it to watch live video provided by an anchor of interest, or to provide live video to other viewers as an anchor.

In this embodiment, the live broadcast providing terminal 200 and the live broadcast watching terminal 300 may be, but are not limited to, a smartphone, a personal digital assistant, a tablet computer, a personal computer, a notebook computer, a virtual reality terminal device, an augmented reality terminal device and the like. In a specific implementation, zero, one or more live viewing terminals 300 and live providing terminals 200 may access the server 100, and only one of each is shown in Fig. 1. Internet products that provide live broadcast internet services may be installed in the live broadcast providing terminal 200 and the live broadcast watching terminal 300. For example, these internet products may be applications (APPs), web pages, applets and the like related to live broadcast internet services on a computer or smartphone. They may include voice recognition programs, front-end applications, live software programs and the like.

In this embodiment, the server 100 may be a single physical server, or may be a server group including a plurality of physical servers for performing different data processing functions. The set of servers may be centralized or distributed (e.g., server 100 may be a distributed system). In some possible embodiments, such as where the server 100 employs a single physical server, the physical server may be assigned different logical server components based on different live service functions.

It is understood that the live system 10 shown in fig. 1 is only one possible example, and in other possible embodiments, the live system 10 may include only a portion of the components shown in fig. 1 or may include other components.

The live broadcast interaction method provided by the embodiment of the present application is described below by way of example with reference to the application scenario shown in Fig. 1. First, referring to Fig. 2, the live broadcast interaction method provided in this embodiment may be executed by the live broadcast system 10 in Fig. 1. In other embodiments, the order of some steps in the method may be interchanged according to actual needs, or some steps may be omitted. The steps of the live broadcast interaction method performed by the live broadcast system 10 are described in detail below.

In step S110, the live broadcast providing terminal 200 sends the target interactive content matched with the audio interactive data to the server 100 according to the audio interactive data between the anchor and the audience in the live broadcast room.

In step S120, the server 100 generates a corresponding interactive service message according to the target interactive content, and sends the interactive service message to the live viewing terminal 300 entering the live broadcast room.

In step S130, the live viewing terminal 300 displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.

In the live broadcast interaction method provided by this embodiment, the live broadcast providing terminal 200 sends target interactive content to the server 100. This content is matched with the audio interaction data between the anchor and the audience in the live broadcast room. The server 100 generates the corresponding interactive service message from the target interactive content and sends it to the live broadcast watching terminal 300 entering the live broadcast room. The live broadcast watching terminal 300 then displays the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message. As a result, an interactive animation special effect can be played based on the target interactive content matched with the audio interaction data. This enriches the presentation and experience of live broadcast interaction and benefits the traffic operation of the live broadcast platform.
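Steps S110 to S130 can be pictured as a small in-process simulation of the three parties. This is a minimal sketch, not the patent's implementation. The class and method names (`ProviderTerminal`, `Server`, `ViewerTerminal`, `enter_room`, `on_target_content`) are hypothetical. Direct method calls stand in for the network, and the "interactive animation special effect" is stubbed as a string appended to a list.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class InteractiveServiceMessage:
    room_id: str
    text: str


@dataclass
class ViewerTerminal:
    """Hypothetical stand-in for live broadcast watching terminal 300."""
    shown_effects: List[str] = field(default_factory=list)

    def on_message(self, msg: InteractiveServiceMessage) -> None:
        # Step S130: "display" an animation special effect (stubbed as a string).
        self.shown_effects.append(f"[effect] {msg.text}")


@dataclass
class Server:
    """Hypothetical stand-in for server 100; tracks the viewers in each room."""
    rooms: Dict[str, List[ViewerTerminal]] = field(default_factory=dict)

    def enter_room(self, room_id: str, viewer: ViewerTerminal) -> None:
        self.rooms.setdefault(room_id, []).append(viewer)

    def on_target_content(self, room_id: str, target_content: str) -> None:
        # Step S120: wrap the content in a service message and push it to
        # every viewer that has entered the room.
        msg = InteractiveServiceMessage(room_id, target_content)
        for viewer in self.rooms.get(room_id, []):
            viewer.on_message(msg)


class ProviderTerminal:
    """Hypothetical stand-in for live broadcast providing terminal 200."""

    def __init__(self, server: Server, room_id: str,
                 match: Callable[[str], Optional[str]]) -> None:
        self.server = server
        self.room_id = room_id
        self.match = match

    def on_recognized_text(self, text: str) -> None:
        # Step S110: forward only matched content, never the raw audio.
        target = self.match(text)
        if target is not None:
            self.server.on_target_content(self.room_id, target)


if __name__ == "__main__":
    server = Server()
    viewer = ViewerTerminal()
    server.enter_room("room-1", viewer)
    anchor = ProviderTerminal(server, "room-1",
                              lambda t: t if "thank you" in t.lower() else None)
    anchor.on_recognized_text("Thank you XXX for the 1314 gift")
    anchor.on_recognized_text("let me check the next level")
    print(viewer.shown_effects)  # ['[effect] Thank you XXX for the 1314 gift']
```

The server only fans out to viewers registered for the same room. This mirrors "sends the interactive service message to the live broadcast watching terminal entering the live broadcast room".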

In one possible implementation, step S110 may be further implemented by the following sub-steps, as shown in Fig. 3. These sub-steps determine the target interactive content matching the audio interaction data and also reduce the amount of data communicated between the live broadcast providing terminal 200 and the server 100:

In sub-step S111, the live broadcast providing terminal 200 obtains audio interaction data between the anchor and the audience in the live broadcast room.

In sub-step S112, the live broadcast providing terminal 200 recognizes the corresponding audio interaction text content from the audio interaction data.

In sub-step S113, the live broadcast providing terminal 200 extracts the text content that meets a preset interaction condition from the audio interaction text content. It sends this text to the server 100 as the target interactive content matched with the audio interaction data.
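Sub-steps S111 to S113 reduce to "recognize, filter, send only what matched". The sketch below injects the recognizer, the interaction condition and the upload as callables. This is an assumption made so the example stays self-contained, and it does not reproduce the API of any real speech recognition SDK. The function name `provider_step_s110` is hypothetical.

```python
from typing import Callable, Optional


def provider_step_s110(
    audio_chunk: bytes,
    recognize: Callable[[bytes], str],
    extract_target: Callable[[str], Optional[str]],
    send_to_server: Callable[[str], None],
) -> Optional[str]:
    """Sketch of sub-steps S111-S113; every callable is a hypothetical hook."""
    # S111 happened upstream: audio_chunk is the anchor's captured audio.
    # S112: turn the audio interaction data into text with some ASR engine.
    text = recognize(audio_chunk)
    # S113: keep only text that satisfies the preset interaction condition.
    target = extract_target(text)
    if target is not None:
        # Only the short target content goes to the server, never the raw audio.
        send_to_server(target)
    return target


if __name__ == "__main__":
    sent = []
    fake_asr = lambda audio: "Thank you XXX for the 1314 gift"  # stand-in recognizer
    keyword_rule = lambda text: "thank you" if "thank you" in text.lower() else None
    print(provider_step_s110(b"\x00" * 3200, fake_asr, keyword_rule, sent.append))  # thank you
    print(sent)  # ['thank you']
```

When the condition is not met, nothing is uploaded at all. This is the source of the reduced data volume described in this section.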

In this embodiment, the anchor generates a large amount of audio interaction data with the audience during the live broadcast. For example, while watching the audio and video stream of the anchor's live broadcast room, viewers can send bullet comments to interact with the anchor. The anchor can then respond by voice to the comments sent by each viewer, which produces audio interaction data.

In a conventional live broadcast interaction scheme, the audio interaction data can only be transmitted directly, as audio and video, to the audience's live broadcast watching terminal 300 for playback. Live broadcast interaction is therefore presented in a single form and the interactivity between the audience and the anchor is weak. This degrades the audience's experience and affects the traffic operation of the live broadcast platform. In the present embodiment, the audio interaction data between the anchor and the audience in the live broadcast room is therefore not simply sent to the server 100. Instead, after it is acquired, the corresponding audio interaction text content is first recognized from it. For example, common speech recognition engines (such as those from Baidu or iFLYTEK) may be used for this recognition.

Optionally, to increase the data loading speed, the recognized audio interaction text content may be text data in JSON (JavaScript Object Notation) format.
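As an illustration of packaging recognized text as JSON, here is a minimal sketch using Python's standard `json` module. The field names (`room_id`, `text`, `start_ms`, `end_ms`) are hypothetical, since the text only says the content may be JSON-formatted.

```python
import json


def to_json_payload(room_id: str, text: str, start_ms: int, end_ms: int) -> str:
    """Serialize one recognized utterance; the field names are hypothetical."""
    payload = {"room_id": room_id, "text": text, "start_ms": start_ms, "end_ms": end_ms}
    # ensure_ascii=False keeps Chinese text as-is instead of \uXXXX escapes,
    # and compact separators drop the optional whitespace.
    return json.dumps(payload, ensure_ascii=False, separators=(",", ":"))


def from_json_payload(raw: str) -> dict:
    """Parse a payload produced by to_json_payload."""
    return json.loads(raw)


if __name__ == "__main__":
    raw = to_json_payload("r1", "hi", 0, 1)
    print(raw)  # {"room_id":"r1","text":"hi","start_ms":0,"end_ms":1}
```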

The recognized audio interaction text content corresponds to the whole of the audio interaction data, but in practice only part of it may represent the anchor's substantive interactive content. Therefore, the text content meeting the preset interaction condition may be extracted from the audio interaction text content as the target interactive content matched with the audio interaction data, and then sent to the server 100.

Based on the above steps, to improve the subsequent interaction effect, this embodiment does not merely send the audio interaction data to the server 100. Instead, it first determines the target interactive content matched with the audio interaction data and then sends that content to the server 100. This makes it easier to enrich the presentation and experience of live broadcast interaction. Because only the target interactive content, which is a part of the recognized text, is sent to the server 100, the amount of data communicated between the live broadcast providing terminal 200 and the server 100 is reduced. Communication is improved to a certain extent as a result.

In one possible implementation of sub-step S113, an interaction keyword library containing a plurality of interaction keywords may be stored in the live broadcast providing terminal 200. For example, the interaction keywords may be expressions commonly used during live broadcasts, such as "thank you" and "welcome". The live broadcast platform may configure them according to the needs of the actual scenario.

On this basis, sub-step S113 extracts the text content meeting the preset interaction condition and sends it to the server 100 as the target interactive content. To do so, the audio interaction text content may be matched against each interaction keyword in the interaction keyword library. When the audio interaction text content contains interaction text matching any keyword in the library, target interactive content corresponding to that interaction text is generated and sent to the server 100.

For example, assume that user XXX gives the anchor a "1314" gift. After seeing the gift, the anchor says "Thank you XXX for the 1314 gift". The audio interaction text content "Thank you XXX for the 1314 gift" can then be obtained, and the interaction keyword "thank you" is matched in it. Target interactive content such as "thank you XXX", "Thank you XXX for the 1314 gift", "thank you" or "1314 gift" may then be generated and sent to the server 100.
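The keyword matching just described can be sketched as follows. This is an assumption-laden illustration, not the patent's code. The keyword list is hypothetical, and returning the clause that contains the first matched keyword is only one of the target-content choices the example lists.

```python
import re
from typing import Dict, Iterable, Optional

# Hypothetical keyword library; the text says the platform configures it per scenario.
DEFAULT_KEYWORDS = ("thank you", "welcome")

# Clause delimiters for Western and Chinese punctuation (。！？，；).
_CLAUSE_SPLIT = re.compile(r"[.!?,;\u3002\uff01\uff1f\uff0c\uff1b]\s*")


def match_target_content(text: str,
                         keywords: Iterable[str] = DEFAULT_KEYWORDS) -> Optional[Dict[str, str]]:
    """Return the first matched keyword and the clause containing it, or None."""
    keywords = list(keywords)  # allow generators to be scanned once per clause
    for clause in _CLAUSE_SPLIT.split(text):
        lowered = clause.lower()
        for kw in keywords:
            if kw.lower() in lowered:
                return {"keyword": kw, "target_content": clause.strip()}
    return None  # no keyword matched: nothing is sent to the server


if __name__ == "__main__":
    print(match_target_content("Wow. Thank you XXX for the 1314 gift!"))
    # {'keyword': 'thank you', 'target_content': 'Thank you XXX for the 1314 gift'}
```

Plain substring matching is the simplest choice. A production library would likely need word-boundary or segmentation-aware matching, especially for Chinese text.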

Optionally, the above process may be executed by a front-end application (a JavaScript program) running in the live broadcast providing terminal 200. The front-end application provides the interaction function between the live broadcast providing terminal 200 and the server 100. It may also cooperate with the live broadcast software program running in the live broadcast providing terminal 200 to perform other functions, such as displaying special effects.

In one possible implementation of step S120, the way the anchor and the audience interact differs across service scenarios during a live broadcast. Examples include a PK (competition) scenario between different anchors and a scenario in which the anchor live-streams a game. The same interactive service message may therefore take different presentation forms in different service scenarios. Accordingly, as shown in Fig. 4, step S120 may be further implemented by the following sub-steps:

In sub-step S121, the server 100 determines a corresponding service processing policy according to the service scenario of the live broadcast room.

In sub-step S122, the server 100 determines the interactive service message corresponding to the target interactive content according to the service processing policy.

In sub-step S123, the server 100 sends the interactive service message to the live viewing terminal 300 entering the live broadcast room.

In this embodiment, the server 100 may configure a corresponding service processing policy for each service scenario. For example, a policy may be configured for the PK scenario between different anchors. It covers interactive content common in PK scenarios (e.g., "I won") and the interactive service messages such content may involve (e.g., "I beat anchor XXX", "I won the first round"). As another example, a policy may be configured for the scenario in which the anchor live-streams a game. It covers interactive content common in that scenario (e.g., "thank you", "welcome") and the interactive service messages it may involve (e.g., "Thank you viewer XXX for sending the AAAA gift", "Welcome viewer XXX to my live room").
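A per-scene policy table like the one described can be sketched as a dictionary keyed by scene name. The scene keys, template strings and the pass-through default for unknown scenes are all hypothetical assumptions modeled on the PK and game-streaming examples.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ServicePolicy:
    scene: str
    # Maps an interaction keyword to a message template with named placeholders.
    templates: Dict[str, str] = field(default_factory=dict)


# Hypothetical policy table mirroring the PK and game-streaming examples.
POLICIES: Dict[str, ServicePolicy] = {
    "pk": ServicePolicy("pk", {"i won": "I beat anchor {opponent}!"}),
    "game": ServicePolicy("game", {
        "thank you": "Thank you viewer {viewer} for sending the {gift} gift",
        "welcome": "Welcome viewer {viewer} to my live room",
    }),
}

# Assumption: unknown scenes fall back to a policy with no templates (pass-through).
DEFAULT_POLICY = ServicePolicy("default")


def select_policy(scene: str) -> ServicePolicy:
    """Sub-step S121: choose the service processing policy for the room's scene."""
    return POLICIES.get(scene, DEFAULT_POLICY)


if __name__ == "__main__":
    print(select_policy("pk").templates["i won"])  # I beat anchor {opponent}!
```

Keeping policies as data rather than code lets a platform add a scene by editing configuration only. That fits the text's statement that the server "may configure" policies per scenario.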

In this embodiment, after receiving the target interactive content, the server 100 first determines a corresponding service processing policy according to a service scene of the live broadcast room, and then determines an interactive service message corresponding to the target interactive content according to the service processing policy. For example, in one possible implementation, interactive additional content for the target interactive content may be determined in live data of a live broadcast room according to a service processing policy, and corresponding interactive additional content is added to the target interactive content to determine an interactive service message corresponding to the target interactive content.

For example, take the scenario in which the anchor live-streams a game, and assume the target interactive content is "thank you", "thank you viewer XXX", "thank you for the AAA gift" or "thank you for the gift". To ensure the completeness of the interactive service message, the interactive additional content for each of these may be determined from the live data of the live broadcast room. For example, the additional content for "thank you viewer XXX" may be "for sending the AAA gift". The additional content for both "thank you for the AAA gift" and "thank you for the gift" may be "the AAA gift sent by viewer XXX". The generated interactive service message may then be "Thank you viewer XXX for sending the AAA gift".
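The completion step can be sketched by filling a template with fields taken from live data. Two assumptions are made here. The `latest_gift` field in `live_data` is hypothetical, and the sketch treats the most recent gift event as the one the anchor is thanking. The template is a hypothetical game-streaming entry.

```python
from typing import Any, Dict, Optional

# Hypothetical game-streaming templates keyed by interaction keyword.
TEMPLATES: Dict[str, str] = {
    "thank you": "Thank you viewer {viewer} for sending the {gift} gift",
}


def build_service_message(target_content: str, live_data: Dict[str, Any]) -> Optional[str]:
    """Sub-step S122 sketch: complete target content with additional content from live data.

    Assumes live_data["latest_gift"] (a hypothetical field) holds the most recent
    gift event and that this is the gift being thanked.
    """
    lowered = target_content.lower()
    for keyword, template in TEMPLATES.items():
        if keyword in lowered:
            gift = live_data.get("latest_gift")
            if gift is None:
                # No additional content available: forward the content unchanged.
                return target_content
            return template.format(viewer=gift["viewer"], gift=gift["gift"])
    return None  # no template in this policy covers the content


if __name__ == "__main__":
    live = {"latest_gift": {"viewer": "XXX", "gift": "AAA"}}
    print(build_service_message("thank you", live))
    # Thank you viewer XXX for sending the AAA gift
```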

In this way, the interactive service message corresponding to the target interactive content can be determined according to the service scenario of the live broadcast room. Adding the corresponding interactive additional content to the target interactive content also ensures that the message is complete. Together, these further enrich the presentation and experience of live broadcast interaction and benefit the traffic operation of the live broadcast platform.

In one possible embodiment, for step S130, in order to further enrich the presentation and interaction experience of the live interaction, please refer to fig. 5, step S130 may be further implemented by the following sub-steps:

Sub-step S131: after receiving the interactive service message, the live viewing terminal 300 determines a special effect rendering policy corresponding to the interactive service message.

Sub-step S132: an interactive animation special effect corresponding to the interactive service message is generated according to the special effect rendering policy and displayed in the interactive interface of the live broadcast room.

In this embodiment, the live viewing terminal 300 may store special effect rendering policies corresponding to different interactive service messages, and the special effect rendering policies may include corresponding special effect files. For example, the live viewing terminal 300 may download the special effect files from the server 100 in advance for personalized customization.

On this basis, the corresponding special effect file can be obtained by calling a relevant graphics API (Application Programming Interface) according to the special effect rendering policy, and the interactive animation special effect corresponding to the interactive service message is then rendered from that special effect file.
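Sub-steps S131 and S132 might look like the following on the viewing terminal. This is a hedged sketch: the policy fields, the message `"type"` key, the file names, and the functions `select_policy` and `build_effect` are all hypothetical, and the actual graphics API call is deliberately left out because the text does not name one. The sketch stops at producing the draw command a client would hand to that API.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Dict, Tuple


@dataclass(frozen=True)
class EffectPolicy:
    """Special effect rendering policy (hypothetical fields)."""
    effect_file: str                 # effect asset downloaded from the server in advance
    duration_ms: int
    decorations: Tuple[str, ...] = ()


# Hypothetical local policy table, keyed by an assumed message "type" field.
POLICIES: Dict[str, EffectPolicy] = {
    "gift_thanks": EffectPolicy("thanks_banner.json", 3000, ("star",)),
}
DEFAULT_POLICY = EffectPolicy("plain_banner.json", 2000)


def select_policy(message: dict) -> EffectPolicy:
    """Sub-step S131: pick the rendering policy for an interactive service message."""
    return POLICIES.get(message.get("type", ""), DEFAULT_POLICY)


def build_effect(message: dict, asset_dir: Path) -> dict:
    """Sub-step S132: resolve the effect file and describe the animation to draw."""
    policy = select_policy(message)
    return {
        "asset": asset_dir / policy.effect_file,   # file the graphics API would load
        "text": message["text"],
        "duration_ms": policy.duration_ms,
        "decorations": list(policy.decorations),
    }
```

Keeping the policy table local matches the text's note that effect files may be downloaded in advance; unknown message types fall back to a plain banner rather than failing.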

Therefore, the interactive service messages are displayed to audiences in an interactive animation special effect mode, and the representation mode and the interactive experience of live broadcast interaction can be further enriched.

In a possible implementation manner, to improve interaction efficiency and reduce the amount of data exchanged between the live broadcast providing terminal 200 and the server 100, after sending the target interactive content matched with the audio interactive data between the anchor and the audience to the server 100, the live broadcast providing terminal 200 may itself generate the corresponding interactive service message from the target interactive content and display the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to that message. These operations may be executed by a front-end application program running on the live broadcast providing terminal 200; for details of the execution process, refer to the related description above, which is not repeated here.

In another possible implementation manner, after generating the corresponding interactive service message according to the target interactive content, the server 100 may also send the interactive service message to the live broadcast providing terminal 200, and the live broadcast providing terminal 200 displays the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message. The specific process of the live broadcast providing terminal 200 displaying the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message may refer to the above-mentioned related description of the live broadcast watching terminal 300 displaying the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message, and is not repeated here.

In order to more clearly illustrate the technical solution of the embodiment of the present application, the interface display content of the interactive process of the embodiment of the present application will be exemplarily described below with reference to fig. 6 and fig. 7, it should be understood that the interactive interface shown in fig. 6 and fig. 7 is only one possible example, and a person skilled in the art may use other interactive interfaces to display the entire live interactive process after reading the embodiment of the present application, and all of them are within the scope of the present application.

Referring to fig. 6, an interactive interface schematic diagram of the live broadcast providing terminal 200 according to an embodiment of the present application is shown. The interactive interface may include the interactive interface of the live broadcast room and a barrage (bullet comment) sending area, in which the anchor can see barrage interaction data from the audience, for example, a barrage AAAA sent by viewer A, a barrage BBBB sent by viewer B, a barrage CCCC sent by viewer C, and a DDDD gift given by viewer D. The barrage interaction data may be displayed synchronously in the interactive interface of the live broadcast room. While viewing the barrage interaction data, the anchor may choose to thank viewer D for the DDDD gift, for example by speaking audio interactive data such as "thank you D", "thank you for the DDDD gift", or "thank you D for the DDDD gift". The live broadcast providing terminal 200 may recognize the target interactive content in the audio interactive data and send it to the server 100, and the server 100 may generate a corresponding interactive service message and send it to the live broadcast viewing terminal 300.

Referring to fig. 7, on the basis of the above example, a schematic diagram of displaying an interactive animation special effect in the interactive interface of the live broadcast room according to an embodiment of the present application is shown. For each viewer who has entered the live broadcast room, the interactive animation special effect "thank you viewer D for the DDDD gift" is displayed, and five-pointed star effects or other special effects may be arranged around it. Viewer D (fig. 7 shows the interactive interface seen on the live broadcast viewing terminal 300 of viewer D) can thus promptly perceive the anchor's feedback on the DDDD gift that viewer D sent, which enriches the presentation and interactive experience of live broadcast interaction and further facilitates traffic operation of the live broadcast platform.

Based on the same inventive concept, fig. 8 shows a flowchart of another live broadcast interaction method provided in an embodiment of the present application. Unlike the foregoing embodiment, this live broadcast interaction method is executed by the live broadcast providing terminal 200 shown in fig. 1. It should be noted that the steps of the method described next have already been described in the above embodiment; for the details of each step, refer to the above embodiment, which is not repeated here. Only the steps performed by the live broadcast providing terminal 200 are briefly described below.

Step S210, audio interaction data between the anchor and the audience in the live broadcast room is obtained.

Step S220, sending the target interactive content matched with the audio interactive data to the server 100, so that the server 100 generates a corresponding interactive service message according to the target interactive content, and sending the interactive service message to the live viewing terminal 300 entering the live broadcast room, so that the live viewing terminal 300 displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.

In one possible implementation, for step S220, corresponding audio interaction text content may be identified from the audio interaction data, and text content meeting preset interaction conditions is obtained from the audio interaction text content and sent to the server 100 as target interaction content matching the audio interaction data.

In a possible implementation manner, an interactive keyword library containing a plurality of interactive keywords is stored in the live broadcast providing terminal 200. In the step of obtaining text content that satisfies the preset interaction condition from the audio interactive text content and sending it to the server 100 as the target interactive content, the audio interactive text content may be matched against each interactive keyword in the interactive keyword library. When the audio interactive text content includes interactive text that matches any interactive keyword in the library, target interactive content corresponding to that interactive text is generated and sent to the server 100.
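The keyword matching described above can be sketched as follows, assuming the speech recognition step has already produced a plain-text transcript. The function names `find_target_contents` and `report_targets` and the `send` callback standing in for the upload to the server 100 are hypothetical; the sketch uses case-insensitive whole-word matching, which is one reasonable choice of "preset interaction condition", not necessarily the one used in practice.

```python
import re
from typing import Callable, Iterable, List


def find_target_contents(transcript: str, keyword_library: Iterable[str]) -> List[str]:
    """Return the library keywords found in the transcript, earliest occurrence first."""
    text = transcript.lower()
    hits = []
    for keyword in keyword_library:
        # Whole-word, case-insensitive match of the keyword inside the transcript.
        match = re.search(r"\b" + re.escape(keyword.lower()) + r"\b", text)
        if match:
            hits.append((match.start(), keyword))
    hits.sort()
    return [keyword for _, keyword in hits]


def report_targets(transcript: str, keyword_library: Iterable[str],
                   send: Callable[[str], None]) -> int:
    """Send each matched keyword as target interactive content; return how many were sent."""
    targets = find_target_contents(transcript, keyword_library)
    for target in targets:
        send(target)  # hypothetical transport to the server
    return len(targets)
```

For example, with the library `["thank you", "welcome", "follow"]`, the transcript "Welcome everyone, and thank you Alice!" yields `["welcome", "thank you"]`, and nothing is sent for text containing no keyword.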

In a possible implementation manner, after the target interactive content matched with the audio interactive data is sent to the server 100, the live broadcast providing terminal 200 may further generate a corresponding interactive service message according to the target interactive content, and display a corresponding interactive animation special effect in an interactive interface of a live broadcast room according to the interactive service message.

Based on the same inventive concept, fig. 9 shows a flowchart of another live broadcast interaction method provided in this embodiment. Unlike the foregoing embodiment, this live broadcast interaction method is executed by the server 100 shown in fig. 1. It should be noted that the steps of the method described next have already been described in the above embodiment; for the details of each step, refer to the above embodiment, which is not repeated here. Only the steps performed by the server 100 are briefly described below.

Step S310, receiving a target interactive content matched with the audio interactive data sent by the live broadcast providing terminal 200 according to the audio interactive data between the anchor and the audience in the live broadcast room.

Step S320, generating a corresponding interactive service message according to the target interactive content, and sending the interactive service message to the live viewing terminal 300 entering the live broadcast room, so that the live viewing terminal 300 displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.

In a possible implementation manner, in step S320, a corresponding service processing policy may be determined according to a service scene of the live broadcast room, an interactive service message corresponding to the target interactive content may be determined according to the service processing policy, and the interactive service message is sent to the live broadcast viewing terminal 300 entering the live broadcast room.
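One way to organize the scene-dependent step is a registry that maps each service scene to a service processing policy. The sketch below is illustrative only: the scene names, the `recent_gifts` field of the live data, and the functions `game_scene_policy`, `passthrough_policy`, and `make_service_message` are hypothetical, and the formatting of the additional content is an arbitrary choice.

```python
from typing import Callable, Dict, List

LiveData = Dict[str, List[str]]          # hypothetical, e.g. {"recent_gifts": [...]}
Policy = Callable[[str, LiveData], str]


def game_scene_policy(target: str, live: LiveData) -> str:
    """Game scene: append the most recent gift as interactive additional content."""
    gifts = live.get("recent_gifts", [])
    return f"{target} ({gifts[-1]})" if gifts else target


def passthrough_policy(target: str, live: LiveData) -> str:
    """Fallback for scenes without a dedicated policy: keep the keyword as-is."""
    return target


# Hypothetical registry mapping a service scene to its service processing policy.
POLICY_BY_SCENE: Dict[str, Policy] = {"game": game_scene_policy}


def make_service_message(scene: str, target: str, live: LiveData) -> dict:
    """Step S320: choose the policy for the room's scene and build the message."""
    policy = POLICY_BY_SCENE.get(scene, passthrough_policy)
    return {"scene": scene, "text": policy(target, live)}
```

A registry keeps new scenes additive: supporting another live broadcast item means registering one more policy function rather than editing the dispatch logic.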

In a possible implementation manner, determining the interactive service message corresponding to the target interactive content according to the service processing policy may specifically include: determining, according to the service processing policy, interactive additional content for the target interactive content in the live broadcast data of the live broadcast room, and then adding the corresponding interactive additional content to the target interactive content to obtain the interactive service message corresponding to the target interactive content.

In a possible implementation manner, after generating the corresponding interactive service message according to the target interactive content, the server 100 may further send the interactive service message to the live broadcast providing terminal 200, so that the live broadcast providing terminal 200 displays the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.

Based on the same inventive concept, please refer to fig. 10, which shows a functional module diagram of the first live broadcast interaction device 210 according to an embodiment of the present application. In this embodiment, the first live broadcast interaction device 210 may be divided into functional modules according to the method embodiment executed by the live broadcast providing terminal 200. For example, one functional module may be provided for each function of the live broadcast providing terminal 200, or two or more functions may be integrated into one processing module. The integrated module may be implemented in hardware or as a software functional module. It should be noted that the module division in the embodiments of the present application is schematic and is only a logical functional division; other divisions are possible in actual implementation. For example, when the functional modules are divided by function, the first live broadcast interaction device 210 shown in fig. 10 is only a schematic diagram of the device. The first live broadcast interaction device 210 may include an obtaining module 211 and a sending module 212, and the functions of these functional modules are described in detail below.

The obtaining module 211 is configured to obtain audio interaction data between the anchor and the audience in the live broadcast room. It can be understood that the obtaining module 211 may be configured to perform step S210; for a detailed implementation of the obtaining module 211, refer to the content related to step S210.

The sending module 212 is configured to send the target interactive content matched with the audio interactive data to the server 100, so that the server 100 generates a corresponding interactive service message according to the target interactive content, and sends the interactive service message to the live broadcast watching terminal 300 entering the live broadcast room, so that the live broadcast watching terminal 300 displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message. It is understood that the sending module 212 may be configured to perform the step S220, and for the detailed implementation of the sending module 212, reference may be made to the content related to the step S220.
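The module division of fig. 10 can be pictured as two small components wired together. This is a hypothetical sketch: the class names mirror the module names in the text, but the `recognize` speech-to-text hook and the `send` transport are assumed callbacks, and the simple substring test stands in for whatever keyword matching the terminal actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class ObtainingModule:
    """Module 211 (step S210): turns captured audio chunks into text."""
    recognize: Callable[[bytes], str]    # hypothetical speech-to-text hook

    def obtain(self, chunks: Iterable[bytes]) -> List[str]:
        return [self.recognize(chunk) for chunk in chunks]


@dataclass
class SendingModule:
    """Module 212 (step S220): forwards matched keywords to the server."""
    keywords: List[str]
    send: Callable[[str], None]          # hypothetical transport to server 100

    def handle(self, text: str) -> None:
        lowered = text.lower()
        for keyword in self.keywords:
            if keyword.lower() in lowered:   # simple case-insensitive containment test
                self.send(keyword)


@dataclass
class FirstLiveInteractionDevice:
    """Device 210: feeds the output of module 211 into module 212."""
    obtaining: ObtainingModule
    sending: SendingModule

    def run(self, chunks: Iterable[bytes]) -> None:
        for text in self.obtaining.obtain(chunks):
            self.sending.handle(text)
```

Injecting the recognizer and transport as callables keeps each module testable on its own, which fits the text's remark that the division is only a logical one.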

Based on the same inventive concept, please refer to fig. 11, which shows a functional module diagram of the second live broadcast interaction device 110 provided in an embodiment of the present application. In this embodiment, the second live broadcast interaction device 110 may be divided into functional modules according to the method embodiment executed by the server 100. For example, one functional module may be provided for each function of the server 100, or two or more functions may be integrated into one processing module. The integrated module may be implemented in hardware or as a software functional module. It should be noted that the module division in the embodiments of the present application is schematic and is only a logical functional division; other divisions are possible in actual implementation. For example, when the functional modules are divided by function, the second live broadcast interaction device 110 shown in fig. 11 is only a schematic diagram of the device. The second live broadcast interaction device 110 may include a receiving module 111 and a generating module 112, and the functions of these functional modules are described in detail below.

The receiving module 111 is configured to receive the target interactive content that is sent by the live broadcast providing terminal 200 according to the audio interactive data between the anchor and the audience in the live broadcast room and that matches the audio interactive data. It can be understood that the receiving module 111 may be configured to perform step S310; for a detailed implementation of the receiving module 111, refer to the content related to step S310.

The generating module 112 is configured to generate a corresponding interactive service message according to the target interactive content, and send the interactive service message to the live viewing terminal 300 entering the live broadcast room, so that the live viewing terminal 300 displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message. It is understood that the generating module 112 may be configured to perform the step S320, and for a detailed implementation of the generating module 112, reference may be made to the content related to the step S320.
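On the server side, the receiving and generating modules of fig. 11 could be wired as below, with the generated message fanned out to every viewing terminal that has entered the room. This is a hypothetical sketch: `SecondLiveInteractionDevice`, `join`, the `compose` callback (standing in for the service-processing-policy step), and per-viewer `deliver` callables are illustrative names, not an actual server API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Deliver = Callable[[dict], None]   # pushes one message to one viewing terminal


class SecondLiveInteractionDevice:
    """Device 110: receiving module 111 plus generating module 112 (hypothetical wiring)."""

    def __init__(self, compose: Callable[[str, str], str]) -> None:
        # compose(room_id, target) applies the room's service processing policy.
        self.compose = compose
        self.viewers: DefaultDict[str, List[Deliver]] = defaultdict(list)

    def join(self, room_id: str, deliver: Deliver) -> None:
        """Register a live broadcast viewing terminal that entered the room."""
        self.viewers[room_id].append(deliver)

    def receive(self, room_id: str, target: str) -> int:
        """Module 111 (step S310): accept target content from the providing terminal."""
        return self.generate(room_id, target)

    def generate(self, room_id: str, target: str) -> int:
        """Module 112 (step S320): build the message and push it to every viewer in the room."""
        message = {"room": room_id, "text": self.compose(room_id, target)}
        receivers = self.viewers.get(room_id, [])
        for deliver in receivers:
            deliver(message)
        return len(receivers)
```

The return value (number of terminals reached) is only a convenience for checking the fan-out; a room with no registered viewers simply receives nothing.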

Based on the same inventive concept, please refer to fig. 12, which shows a schematic block diagram of the structure of an electronic device 400 that may be used to implement the server 100 or the live broadcast providing terminal 200 according to an embodiment of the present application. The electronic device 400 may include a machine-readable storage medium 420 and a processor 430.

In this embodiment, the machine-readable storage medium 420 and the processor 430 are both located in the electronic device 400 as separate components. However, it should be understood that the machine-readable storage medium 420 may also be separate from the electronic device 400 and accessed by the processor 430 through a bus interface. Alternatively, the machine-readable storage medium 420 may be integrated into the processor 430, for example as a cache and/or general-purpose registers.

The processor 430 is the control center of the electronic device 400. It connects the various parts of the electronic device 400 through various interfaces and lines, and performs the functions of the electronic device 400 and processes data by running or executing the software programs and/or modules stored in the machine-readable storage medium 420 and calling the data stored there, thereby monitoring the electronic device 400 as a whole. Optionally, the processor 430 may include one or more processing cores; for example, the processor 430 may integrate an application processor, which mainly handles the operating system, user interface, and applications, and a modem processor, which mainly handles wireless communication. It will be appreciated that the modem processor may also not be integrated into the processor 430.

The processor 430 may be a general-purpose Central Processing Unit (CPU), a microprocessor, an Application-Specific Integrated Circuit (ASIC), or one or more Integrated circuits for controlling program execution of the live interactive method provided by the above method embodiments.

The machine-readable storage medium 420 may be, but is not limited to, a ROM or other type of static storage device that can store static information and instructions, a RAM or other type of dynamic storage device that can store information and instructions, an Electrically Erasable Programmable Read-Only Memory (EEPROM), a Compact Disc Read-Only Memory (CD-ROM) or other optical disc storage (including compact discs, laser discs, optical discs, digital versatile discs, Blu-ray discs, etc.), magnetic disk storage media or other magnetic storage devices, or any other medium that can be used to carry or store desired program code in the form of instructions or data structures and that can be accessed by a computer. The machine-readable storage medium 420 may exist independently and be connected to the processor 430 via a communication bus, or it may be integrated with the processor. The machine-readable storage medium 420 is used to store machine-executable instructions for performing the solutions of the present application, and the processor 430 is configured to execute the machine-executable instructions stored in the machine-readable storage medium 420 to implement the method embodiment executed by the server 100 or the method embodiment executed by the live broadcast providing terminal 200.

Since the electronic device 400 provided in the embodiment of the present application is another implementation form of the method embodiment executed by the server 100 or the method embodiment executed by the live broadcast providing terminal 200, and the electronic device 400 may be configured to execute the live broadcast interaction method provided in the method embodiment executed by the server 100 or the method embodiment executed by the live broadcast providing terminal 200, for technical effects that can be obtained by the electronic device 400, reference may be made to the method embodiment, and details are not described here.

Further, the present application also provides a readable storage medium containing computer executable instructions, which when executed, can be used to implement the method embodiment performed by the foregoing server 100 or the method embodiment performed by the foregoing live broadcast providing terminal 200.

Of course, the storage medium provided in the embodiments of the present application and containing computer-executable instructions is not limited to the above method operations, and may also perform related operations in the live broadcast interaction method provided in any embodiment of the present application.

Embodiments of the present application are described with reference to flowchart illustrations and/or block diagrams of methods, apparatus and computer program products according to embodiments of the application. It will be understood that each flow and/or block of the flowchart illustrations and/or block diagrams, and combinations of flows and/or blocks in the flowchart illustrations and/or block diagrams, can be implemented by computer program instructions. These computer program instructions may be provided to a processor of a general purpose computer, special purpose computer, embedded processor, or other programmable data processing apparatus to produce a machine, such that the instructions, which execute via the processor of the computer or other programmable data processing apparatus, create means for implementing the functions specified in the flowchart flow or flows and/or block diagram block or blocks.

While the present application has been described in connection with various embodiments, other variations to the disclosed embodiments can be understood and effected by those skilled in the art in practicing the claimed application, from a review of the drawings, the disclosure, and the appended claims. In the claims, the word "comprising" does not exclude other elements or steps, and the word "a" or "an" does not exclude a plurality. A single processor or other unit may fulfill the functions of several items recited in the claims. The mere fact that certain measures are recited in mutually different dependent claims does not indicate that a combination of these measures cannot be used to advantage.

The above description is only for various embodiments of the present application, but the scope of the present application is not limited thereto, and any person skilled in the art can easily conceive of changes or substitutions within the technical scope of the present application, and all such changes or substitutions are included in the scope of the present application. Therefore, the protection scope of the present application shall be subject to the protection scope of the claims.

Claims (16)

1. A live broadcast interaction method is applied to a live broadcast system, the live broadcast system comprises a live broadcast providing terminal, a live broadcast watching terminal and a server which is in communication connection with the live broadcast providing terminal and the live broadcast watching terminal, and the method comprises the following steps:

the live broadcast providing terminal sends target interactive content matched with the audio interactive data to the server according to the audio interactive data between an anchor and audiences in a live broadcast room, wherein the target interactive content is an interactive keyword in an interactive keyword library;

the server determines a corresponding service processing strategy according to the service scene of the live broadcast room; according to the service processing strategy, interactive additional content for the target interactive content is determined in the live broadcast data of the live broadcast room; the service scene represents the current live broadcast project of the anchor, and the interactive additional content is text content matched with the service scene;

the server adds corresponding interactive additional content to the target interactive content to determine an interactive service message corresponding to the target interactive content, and sends the interactive service message to a live broadcast viewing terminal entering the live broadcast room;

and the live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

2. The live broadcast interaction method of claim 1, wherein the step of the live broadcast providing terminal sending the target interaction content matched with the audio interaction data to the server according to the audio interaction data between the anchor and the audience in the live broadcast room comprises:

the live broadcast providing terminal obtains audio interactive data between an anchor and audiences in a live broadcast room;

identifying corresponding audio interaction text content from the audio interaction data;

and obtaining the text content meeting the preset interaction condition from the audio interaction text content, and sending the text content serving as the target interaction content matched with the audio interaction data to the server.

3. The live interaction method as claimed in claim 2, wherein the step of obtaining text content satisfying preset interaction conditions from the audio interaction text content as target interaction content matching the audio interaction data and sending the target interaction content to the server comprises:

matching the audio interactive text content with each interactive keyword in the interactive keyword library respectively;

when the audio interactive text content comprises an interactive text matched with any interactive keyword in the interactive keyword library, generating target interactive content corresponding to the interactive text;

and sending the target interactive content to the server.

4. The live broadcast interaction method of claim 1, wherein the step of the live broadcast viewing terminal displaying the corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message comprises:

after receiving the interactive service message, the live broadcast watching terminal determines a special effect rendering strategy corresponding to the interactive service message;

and generating an interactive animation special effect corresponding to the interactive service message according to the special effect rendering strategy, and displaying the interactive animation special effect in an interactive interface of the live broadcast room.

5. The live interaction method as claimed in any one of claims 1 to 4, wherein after the step of the live providing terminal sending the target interaction content matching the audio interaction data to the server according to the audio interaction data between the anchor and the audience in the live room, the method further comprises:

and the live broadcast providing terminal generates a corresponding interactive service message according to the target interactive content and displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

6. The live interaction method as claimed in any one of claims 1 to 4, wherein after the step of generating the corresponding interactive service message according to the target interactive content by the server, the method further comprises:

the server sends the interactive service message to the live broadcast providing terminal;

and the live broadcast providing terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message.

7. A live broadcast interaction method is applied to a live broadcast providing terminal, the live broadcast providing terminal is in communication connection with a server, the server is in communication connection with a live broadcast watching terminal, and the method comprises the following steps:

acquiring audio interaction data between an anchor and audiences in a live broadcast room;

sending the target interactive content matched with the audio interactive data to the server so that the server determines a corresponding service processing strategy according to the service scene of the live broadcast room; according to the service processing strategy, interactive additional content for the target interactive content is determined in the live broadcast data of the live broadcast room; adding corresponding interactive additional content to the target interactive content to determine an interactive service message corresponding to the target interactive content, wherein the service scene represents a current live broadcast project of the anchor, and the interactive additional content is text content matched with the service scene; and sending the interactive service message to a live broadcast watching terminal entering the live broadcast room, so that the live broadcast watching terminal displays a corresponding interactive animation special effect in an interactive interface of the live broadcast room according to the interactive service message, wherein the target interactive content is an interactive keyword in an interactive keyword library.

8. The live interaction method as claimed in claim 7, wherein the step of sending the target interaction content matching the audio interaction data to the server comprises:

identifying corresponding audio interaction text content from the audio interaction data;

and obtaining the text content meeting the preset interaction condition from the audio interaction text content, and sending the text content serving as the target interaction content matched with the audio interaction data to the server.

9. The live broadcast interaction method as claimed in claim 8, wherein an interaction keyword library is stored in the live broadcast providing terminal, the interaction keyword library includes a plurality of interaction keywords, and the step of obtaining text contents satisfying a preset interaction condition from the audio interaction text contents as target interaction contents matched with the audio interaction data and sending the target interaction contents to the server includes:

matching the audio interactive text content against each interactive keyword in the interactive keyword library;

when the audio interactive text content contains interactive text that matches any interactive keyword in the interactive keyword library, generating target interactive content corresponding to that interactive text; and

sending the target interactive content to the server.
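The keyword-library matching of claim 9 might look like the following minimal sketch. It assumes plain case-insensitive substring search and returns hits in the order they occur in the recognized text; the patent does not state the matching rule, and `match_keywords` is a hypothetical name. Since claim 7 defines the target interactive content as a keyword from the library, the function returns the matched library keywords themselves.

```python
from typing import List, Sequence, Tuple


def match_keywords(text: str, keyword_library: Sequence[str]) -> List[str]:
    """Return library keywords found in `text`, ordered by first occurrence.

    Assumption: matching is case-insensitive substring search; the patent
    does not specify the rule.
    """
    lowered = text.lower()
    hits: List[Tuple[int, str]] = []
    for keyword in keyword_library:
        pos = lowered.find(keyword.lower())
        if pos != -1:
            hits.append((pos, keyword))
    # Earliest mention first; the stable sort keeps library order on ties.
    hits.sort(key=lambda item: item[0])
    return [keyword for _, keyword in hits]


if __name__ == "__main__":
    library = ["gift", "thank you", "666"]
    print(match_keywords("Thank you for the gift!", library))
```

For a large library, a multi-pattern matcher such as Aho-Corasick would avoid one scan per keyword; the linear loop is used here only for clarity.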

10. The live broadcast interaction method according to any one of claims 7 to 8, wherein after the step of sending the target interactive content matched with the audio interactive data to the server, the method further comprises:

generating a corresponding interactive service message according to the target interactive content, and displaying a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.
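Claim 10 has the providing terminal build the interactive service message itself and play the effect locally. A minimal sketch, assuming a hypothetical keyword-to-effect table and a `play_effect` callback standing in for the client's animation layer; neither the table nor the effect IDs come from the patent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class InteractiveServiceMessage:
    keyword: str    # target interactive content (an interactive keyword)
    effect_id: str  # animation special effect to play; IDs are hypothetical


# Hypothetical keyword -> effect table; the patent does not define one.
DEFAULT_EFFECTS: Dict[str, str] = {"gift": "confetti", "666": "fireworks"}


def show_locally(
    keyword: str,
    play_effect: Callable[[str], None],
    effects: Dict[str, str] = DEFAULT_EFFECTS,
) -> Optional[InteractiveServiceMessage]:
    """Build a message for `keyword` on the providing terminal and play its
    effect; return None when no effect is configured for the keyword."""
    effect_id = effects.get(keyword)
    if effect_id is None:
        return None
    message = InteractiveServiceMessage(keyword=keyword, effect_id=effect_id)
    play_effect(message.effect_id)
    return message


if __name__ == "__main__":
    print(show_locally("gift", lambda effect: print("playing", effect)))
```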

11. A live broadcast interaction method, applied to a server that is in communication connection with both a live broadcast providing terminal and a live broadcast watching terminal, the method comprising:

receiving target interactive content that is sent by the live broadcast providing terminal according to audio interactive data between the anchor and the audience in a live broadcast room and that matches the audio interactive data, wherein the target interactive content is an interactive keyword in an interactive keyword library;

determining a corresponding service processing strategy according to the service scene of the live broadcast room;

determining, according to the service processing strategy, interactive additional content for the target interactive content from the live broadcast data of the live broadcast room;

adding the corresponding interactive additional content to the target interactive content to determine an interactive service message corresponding to the target interactive content, wherein the service scene represents the anchor's current live broadcast item, and the interactive additional content is text content matched with the service scene; and

sending the interactive service message to a live broadcast watching terminal that has entered the live broadcast room, so that the live broadcast watching terminal displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.
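The server-side flow of claim 11 (pick a strategy by service scene, derive additional text from the room's live data, attach it to the keyword, push the message to viewers) can be sketched as follows. Everything concrete here is an assumption for illustration: the scene names `"singing"` and `"gaming"`, the live-data keys `song` and `game`, the dictionary message format, and the `LiveRoom` and `handle_target_content` names are all hypothetical. Each viewer is modeled as a delivery callback rather than a real network connection.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Mapping

Deliver = Callable[[dict], None]
# A "service processing strategy" is modeled as: (keyword, live data) -> extra text.
Strategy = Callable[[str, Mapping[str, str]], str]


@dataclass
class LiveRoom:
    room_id: str
    service_scene: str             # the anchor's current live item, e.g. "singing"
    live_data: Mapping[str, str]   # hypothetical room data (song title, game name, ...)
    viewers: List[Deliver] = field(default_factory=list)


# Hypothetical scenes and live-data keys, for illustration only.
STRATEGIES: Dict[str, Strategy] = {
    "singing": lambda keyword, data: f"Now singing: {data.get('song', 'unknown')}",
    "gaming": lambda keyword, data: f"Now playing: {data.get('game', 'unknown')}",
}


def no_extra(keyword: str, data: Mapping[str, str]) -> str:
    return ""  # fallback when the scene has no strategy


def handle_target_content(room: LiveRoom, keyword: str) -> dict:
    strategy = STRATEGIES.get(room.service_scene, no_extra)  # pick strategy by scene
    extra = strategy(keyword, room.live_data)                # additional text content
    message = {"room_id": room.room_id, "keyword": keyword, "extra": extra}
    for deliver in room.viewers:                             # push to every viewer
        deliver(message)
    return message


if __name__ == "__main__":
    inbox: List[dict] = []
    room = LiveRoom("r1", "singing", {"song": "Moonlight"}, [inbox.append])
    print(handle_target_content(room, "encore"))
```

Keeping strategies in a lookup table keyed by scene means a new live item only needs a new entry, which is one plausible reading of "service processing strategy"; the patent does not prescribe this structure.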

12. The live broadcast interaction method according to claim 11, wherein after the step of generating the corresponding interactive service message according to the target interactive content, the method further comprises:

sending the interactive service message to the live broadcast providing terminal, so that the live broadcast providing terminal displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.
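Claim 12 extends the delivery so that the anchor's own providing terminal also receives the message. A minimal sketch, again modeling each terminal as a hypothetical delivery callback; `fan_out` is an invented name and the ordering (viewers first, then the provider) is an arbitrary choice, not something the claim specifies.

```python
from typing import Callable, Iterable

Deliver = Callable[[dict], None]


def fan_out(message: dict, viewers: Iterable[Deliver], provider: Deliver) -> int:
    """Send the message to every watching terminal and then to the anchor's
    providing terminal; return the total number of deliveries."""
    count = 0
    for deliver in viewers:
        deliver(message)
        count += 1
    provider(message)  # claim 12: the providing terminal also gets the message
    return count + 1


if __name__ == "__main__":
    log = []
    n = fan_out({"keyword": "gift"}, [log.append, log.append], log.append)
    print(n, log)
```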

13. A live broadcast interaction device, characterized in that it is applied to a live broadcast providing terminal, the live broadcast providing terminal being in communication connection with a server and the server being in communication connection with a live broadcast watching terminal, the device comprising:

an acquisition module, configured to acquire audio interactive data between the anchor and the audience in a live broadcast room; and

a sending module, configured to send the target interactive content matched with the audio interactive data to the server, so that the server determines a corresponding service processing strategy according to the service scene of the live broadcast room, determines, according to the service processing strategy, interactive additional content for the target interactive content from the live broadcast data of the live broadcast room, and adds the corresponding interactive additional content to the target interactive content to determine an interactive service message corresponding to the target interactive content, wherein the service scene represents the anchor's current live broadcast item and the interactive additional content is text content matched with the service scene; and so that the server sends the interactive service message to a live broadcast watching terminal that has entered the live broadcast room, whereupon the live broadcast watching terminal displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message; wherein the target interactive content is an interactive keyword in an interactive keyword library.

14. A live broadcast interaction device, applied to a server that is in communication connection with both a live broadcast providing terminal and a live broadcast watching terminal, the device comprising:

a receiving module, configured to receive target interactive content that is sent by the live broadcast providing terminal according to audio interactive data between the anchor and the audience in a live broadcast room and that matches the audio interactive data, wherein the target interactive content is an interactive keyword in an interactive keyword library; and

a generating module, configured to determine a corresponding service processing strategy according to the service scene of the live broadcast room; determine, according to the service processing strategy, interactive additional content for the target interactive content from the live broadcast data of the live broadcast room; and add the corresponding interactive additional content to the target interactive content to determine an interactive service message corresponding to the target interactive content, wherein the service scene represents the anchor's current live broadcast item, and the interactive additional content is text content matched with the service scene;

wherein the generating module is further configured to send the interactive service message to a live broadcast watching terminal that has entered the live broadcast room, so that the live broadcast watching terminal displays a corresponding interactive animation special effect in the interactive interface of the live broadcast room according to the interactive service message.

15. An electronic device, comprising a machine-readable storage medium and a processor, wherein the machine-readable storage medium stores machine-executable instructions, and the processor, when executing the machine-executable instructions, implements, as a live broadcast providing terminal, the live broadcast interaction method of any one of claims 7 to 10, or implements, as a server, the live broadcast interaction method of any one of claims 11 to 12.

16. A readable storage medium storing machine-executable instructions which, when executed by a processor, implement the live broadcast interaction method of any one of claims 7 to 10 or the live broadcast interaction method of any one of claims 11 to 12.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201911130084.XA (CN110798696B) | 2019-11-18 | 2019-11-18 | Live broadcast interaction method and device, electronic equipment and readable storage medium |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201911130084.XA (CN110798696B) | 2019-11-18 | 2019-11-18 | Live broadcast interaction method and device, electronic equipment and readable storage medium |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN110798696A | 2020-02-14 |
| CN110798696B | 2022-09-30 |

Family ID: 69445161

Family Applications (1)

| Application Number | Status | Priority Date | Filing Date | Title |
|---|---|---|---|---|
| CN201911130084.XA (CN110798696B) | Active | 2019-11-18 | 2019-11-18 | Live broadcast interaction method and device, electronic equipment and readable storage medium |

Country Status (1)

| Country | Publication |
|---|---|
| CN (1) | CN110798696B |

Families Citing this family (10)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN111277761B * | 2020-03-05 | 2022-03-01 | 北京达佳互联信息技术有限公司 | Video shooting method, device and system, electronic equipment and storage medium |
| CN112291504B * | 2020-03-27 | 2022-10-28 | 北京字节跳动网络技术有限公司 | Information interaction method and device and electronic equipment |
| CN111934984B * | 2020-07-30 | 2023-05-12 | 北京达佳互联信息技术有限公司 | Message feedback method and device, electronic equipment and storage medium |
| CN111954063B * | 2020-08-24 | 2022-11-04 | 北京达佳互联信息技术有限公司 | Content display control method and device for video live broadcast room |
| CN112836469A * | 2021-01-27 | 2021-05-25 | 北京百家科技集团有限公司 | Information rendering method and device |
| CN113014935B * | 2021-02-20 | 2023-05-09 | 北京达佳互联信息技术有限公司 | Interaction method and device of live broadcasting room, electronic equipment and storage medium |
| CN113259704B * | 2021-05-19 | 2023-06-09 | 杭州米络星科技(集团)有限公司 | Live broadcast room initialization method, device, equipment and storage medium |
| CN113453030B * | 2021-06-11 | 2023-01-20 | 广州方硅信息技术有限公司 | Audio interaction method and device in live broadcast, computer equipment and storage medium |
| CN114173139B * | 2021-11-08 | 2023-11-24 | 北京有竹居网络技术有限公司 | Live broadcast interaction method, system and related device |
| CN114168018A * | 2021-12-08 | 2022-03-11 | 北京字跳网络技术有限公司 | Data interaction method, data interaction device, electronic equipment, storage medium and program product |

Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN106804007A * | 2017-03-20 | 2017-06-06 | 合网络技术(北京)有限公司 | Method, system and equipment for automatically matching special effects in network live broadcasting |
| CN109618181A * | 2018-11-28 | 2019-04-12 | 网易(杭州)网络有限公司 | Live broadcast interaction method and device, electronic equipment and storage medium |

Family Cites Families (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2017137948A1 * | 2016-02-10 | 2017-08-17 | Vats Nitin | Producing realistic body movement using body images |

Application timeline (2019): 2019-11-18, CN application CN201911130084.XA (CN110798696B), status Active.


Also Published As

| Publication number | Publication date |
|---|---|
| CN110798696A | 2020-02-14 |

Similar Documents

| Publication | Title |
|---|---|
| CN110798696B | Live broadcast interaction method and device, electronic equipment and readable storage medium |
| CN108184144B | Live broadcast method and device, storage medium and electronic equipment |
| CN111131851B | Game live broadcast control method and device, computer storage medium and electronic equipment |
| CN112087655B | Method and device for presenting virtual gift and electronic equipment |
| CN108449409B | Animation pushing method, device, equipment and storage medium |
| CN108171160B | Task result identification method and device, storage medium and electronic equipment |
| CN112929678B | Live broadcast method, live broadcast device, server side and computer readable storage medium |
| CN113727130B | Message prompting method, system and device for live broadcasting room and computer equipment |
| CN109005422B | Video comment processing method and device |
| US20110264536A1 | Distance dependent advertising |
| CN111079529B | Information prompting method and device, electronic equipment and storage medium |
| CN112584224A | Information display and processing method, device, equipment and medium |
| CN114095744B | Video live broadcast method and device, electronic equipment and readable storage medium |
| CN112218108B | Live broadcast rendering method and device, electronic equipment and storage medium |
| CN110856005B | Live stream display method and device, electronic equipment and readable storage medium |
| CN113824977B | Live broadcast room virtual gift giving method, system, device, equipment and storage medium |
| CN114501100A | Live broadcast page skipping method and system |
| CN111800661A | Live broadcast room display control method, electronic device and storage medium |
| US20190035428A1 | Video processing architectures which provide looping video |
| CN111711850A | Method and device for displaying bullet screen and electronic equipment |
| CN111475240A | Data processing method and system |
| CN116708853A | Interaction method and device in live broadcast and electronic equipment |
| CN113727125B | Live broadcast room screenshot method, device, system, medium and computer equipment |
| CN110166801B | Media file processing method and device and storage medium |
| CN109999490B | Method and system for reducing networking cloud application delay |

Legal Events

| Code | Title |
|---|---|
| PB01 | Publication |
| SE01 | Entry into force of request for substantive examination |
| GR01 | Patent grant |