CN109934179B - Human body action recognition method based on automatic feature selection and integrated learning algorithm


- Publication number

- CN109934179B (application CN201910203015.0A)

- Authority

- CN

- China

- Prior art keywords

- human body

- data

- feature

- information set

- learning algorithm

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Fee Related

Landscapes

- Image Analysis (AREA)

Abstract

The invention discloses a human body action recognition method based on automatic feature selection and an ensemble learning algorithm, comprising the following steps: Step A, acquiring a data information set of human body actions; Step B, resampling the data information set of human body actions, and constructing a sample feature space from the resampling point data, the time domain features of the window data, and the frequency domain features of the window data; Step C, training on the sample data in the sample feature space using a Kate-tree-based feature evaluation algorithm and an ensemble learning algorithm to obtain a trained double-layer model comprising a feature selection layer and an action recognition layer; and Step D, classifying and recognizing human body actions with the trained double-layer model, thereby achieving automatic feature selection and human body action recognition. The invention automatically selects the features that benefit the model, reduces additional manual work, and improves engineering efficiency; using an ensemble learning algorithm for human body action recognition gives a short training time and a high recognition rate.

Description

Technical Field

The invention relates to the field of sports science and artificial intelligence, in particular to a human body action recognition method based on automatic feature selection and an integrated learning algorithm.

Background

With the development of Internet of Things technology, wearable fitness and medical sensing devices are emerging rapidly. These devices collect and share human physiological data; the data can be processed and analyzed in the cloud, and the results sent to a doctor, who then gives diagnosis and rehabilitation advice. This closed loop can run continuously every day: by recognizing and recording a person's daily actions, behavior habits can be understood and daily routine and exercise intensity analyzed more accurately, so that a healthy activity plan can be drawn up for each person. The key technology here is human body action recognition, which is applied in disease prevention and control, rehabilitation training, energy consumption prediction, health guidance, and other areas.

Traditional human body action recognition uses the "Cut Point" method, but its recognition accuracy is low: the accuracy depends heavily on how the cut points are chosen, and it is especially poor for static actions. With the development of artificial intelligence, machine learning methods have been introduced into human body action recognition. The machine learning algorithms currently used for it mainly include decision trees, artificial neural networks, hidden Markov models, and support vector machines. In these studies, however, the feature selection process and the action recognition process are completely separate and independent, and some studies perform no feature selection at all. It is well known that data and features determine the upper limit of a model's recognition performance, while the algorithm only approaches that limit, so feature selection is essential to human body action recognition. In addition, regarding action recognition models, existing research shows that a single learner such as a decision tree or a support vector machine cannot guarantee good performance on every human body action recognition problem, whereas weak learners can be combined into a strong learner of arbitrarily high accuracy given enough data; current research shows that ensemble learning models recognize better than single learners.

However, stand-alone feature selection is a manual operation, usually based on personal experience and existing research results. It may retain redundant features that are useless to the recognition model, or remove important features that strongly influence it; both lower the recognition rate. If the model could select features from the original data automatically, keeping the features of higher importance according to some evaluation mechanism, its recognition rate would improve and the inconvenience of manual operation would be reduced, improving engineering efficiency. At present, no research uses a model that combines automatic feature selection with an action recognition algorithm for human body action recognition.

The invention aims to use human body action data collected by wearable devices to train a human body action recognition model based on a Kate tree feature evaluation algorithm and an ensemble learning algorithm; the model selects features automatically and recognizes human body actions.

Disclosure of Invention

In the prior art, in the human body action recognition method based on the machine learning algorithm, a feature selection process and an action recognition process are two separate and independent processes, feature selection is independently and artificially performed, and features selected based on subjectivity and experience influence the recognition effect of a model. The invention aims to provide a human body action recognition method based on automatic feature selection and an integrated learning algorithm aiming at the defects in the prior art, which can realize automatic feature selection and human body action recognition, can automatically select the features favorable for a model, can obtain higher recognition rate, reduces additional manual operation and improves engineering efficiency.

In order to solve the technical problems, the technical scheme adopted by the invention is as follows:

A human body action recognition method based on automatic feature selection and an ensemble learning algorithm, characterized by comprising the following steps:

Step A, obtaining a data information set of human body actions;

Step B, setting a sliding window in the data information set of human body actions, resampling the data information set within the sliding window, and constructing a sample feature space from the resampling point data, the time domain features of the window data, and the frequency domain features of the window data;

Step C, training on the sample data in the sample feature space using a Kate-tree-based feature evaluation algorithm and an ensemble learning algorithm to obtain a trained double-layer model, namely the RAFs model, which comprises a feature selection layer and an action recognition layer;

Step D, classifying and recognizing human body actions with the trained double-layer (RAFs) model to achieve automatic feature selection and human body action recognition.

As a preferable mode, in the step a, the obtaining of the data set of the human body motion includes: firstly, obtaining an original data set of human body actions (for example, an original data set of human body actions is collected by installing an inertial sensor at a human body joint); then, the raw data is preprocessed, so that a data information set of the human body motion is obtained.

As a preferable mode, the step B includes:

setting a sliding window in a data information set of human body actions, setting a sliding distance and a resampling time interval, and resampling the data information set in the sliding window;

computing time domain features and frequency domain features in the sliding window;

and constructing a sample feature space by utilizing the resampling point data, the time domain feature corresponding to the window data and the frequency domain feature corresponding to the window data.

As a preferable mode, the step C includes:

Step C1, setting the data information set of human body actions as $T$, the sample feature space as $F$, the number of features as $K$, the random subspace coefficient as $\alpha$, the number of Kate trees as $M$, and the feature selection coefficient as $\beta$;

Step C2, randomly selecting $\alpha K$ features from the feature space $F$ to create a sub-feature space $F_{sub} = \{f_1, f_2, \ldots, f_j\}$, $(j = 1, 2, \ldots, \alpha K)$;

Step C3, mapping the data information set $T$ using the features in the sub-feature space $F_{sub}$ to obtain a new data set $T_i$, $i = 1, 2, \ldots, M$, i.e.:

Step C4, training Kate tree $CT_i$ using the new data set $T_i$;

Step C5, repeating steps C2 to C4 for $i$ from 1 to $M$ to obtain $M$ Kate trees $CT_i$;

Step C6, if the feature $f_j$ has a node in Kate tree $CT_i$ and the depth of that node in the $i$-th Kate tree is $d_{ij}$, then the importance evaluation value $E_{ij}$ of the $j$-th feature in the $i$-th Kate tree is:

if the feature $f_j$ has no node in Kate tree $CT_i$, then $E_{ij} = 0$;

Step C7, repeating step C6 $M \times K$ times, for $i$ from 1 to $M$ and $j$ from 1 to $K$, to obtain the importance evaluation value $E_{ij}$ of each feature in each Kate tree;

Step C8, for $j$ from 1 to $K$, calculating the total evaluation value $E_j$ of each feature node over all Kate trees:

Step C9, sorting the features in the sample feature space $F$ according to $E_j$, and selecting the first $\beta K$ features with the highest importance evaluation values to form a new feature space $F_{selected}$, $F_{selected} = \{f_1, f_2, \ldots, f_{\beta K}\}$

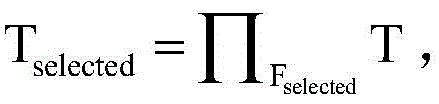

Step C10, mapping the data information set $T$ using the features in the new feature space $F_{selected}$ to obtain a new set $T_{selected}$, that is:

Step C11, inputting the new data set $T_{selected}$ into the second layer of the model, namely the XGBoost action recognition layer, and training the human body action recognition layer to obtain the final trained double-layer model.
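Steps C2 to C10 can be sketched in a few lines of Python. This is a minimal illustration rather than the patented implementation: tree training is left out, each trained tree is represented only by a hypothetical dictionary mapping a feature index to the depth of its node, and because the source formulas for $E_{ij}$ and $E_j$ are not reproduced in this text, `depth_score` (shallower nodes score higher) and the summation over trees are both assumptions.

```python
import random


def random_subspace(num_features, alpha, rng):
    """Step C2: draw int(alpha * K) distinct feature indices at random."""
    return sorted(rng.sample(range(num_features), int(alpha * num_features)))


def project(dataset, feature_indices):
    """Steps C3 and C10: keep only the chosen feature columns of every sample."""
    return [[row[j] for j in feature_indices] for row in dataset]


def depth_score(depth):
    """ASSUMED stand-in for E_ij: the source formula is not available here."""
    return 1.0 / (1.0 + depth)


def select_features(tree_depths, num_features, beta):
    """Steps C6 to C9 under the assumptions above.

    tree_depths[i] maps a feature index j to the depth d_ij of its node in
    tree i (hypothetical representation); features absent from a tree add 0.
    """
    totals = [0.0] * num_features
    for depths in tree_depths:
        for j, d in depths.items():
            totals[j] += depth_score(d)  # ASSUMED: E_j is the sum of E_ij over trees
    ranked = sorted(range(num_features), key=lambda j: totals[j], reverse=True)
    return ranked[: int(beta * num_features)], totals


# Two toy "trees" over K = 4 features; keep the top beta * K = 2 features.
selected, totals = select_features([{0: 0, 2: 1}, {2: 0, 3: 2}], num_features=4, beta=0.5)
```

In a full pipeline the depth dictionaries would come from the trained trees, and `project(T, selected)` would produce the data passed to the action recognition layer.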

As a preferable mode, in the step A, the preprocessing process includes: using mean filtering to remove noise in the original data set.

As a preferable mode, in the step A, the preprocessing process includes: removing the first 10 seconds of data at the beginning and the last 10 seconds of data at the end of the original data set, so as to discard useless data from the action preparation stage and the action finishing stage.

Preferably, the time domain features include one or more of a mean, a minimum, a maximum, a median, a variance, a standard deviation, a skewness, a kurtosis, a zero-crossing number, and an acceleration coordinate axis correlation index.

Preferably, the frequency domain features include one or more of spectral energy, dominant frequency, and amplitude corresponding to the dominant frequency.
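The listed features can be computed for one window of one axis roughly as follows. This is a sketch under stated assumptions: the population form of variance, skewness and kurtosis, the use of Pearson correlation for the axis correlation index, and the definition of spectral energy as the sum of squared DFT magnitudes divided by the window length are all choices made for illustration, since the source formulas are not reproduced in this text. The function names are hypothetical.

```python
import cmath
import math
import statistics


def time_domain_features(x):
    """Per-axis statistics for one window of samples."""
    n = len(x)
    mean = statistics.fmean(x)
    var = statistics.pvariance(x, mu=mean)  # population variance (assumed convention)
    std = math.sqrt(var)
    centered = [v - mean for v in x]
    # Standardized third and fourth moments; defined as 0 for a constant window.
    skew = sum(c ** 3 for c in centered) / (n * std ** 3) if std > 0 else 0.0
    kurt = sum(c ** 4 for c in centered) / (n * std ** 4) if std > 0 else 0.0
    # Zero crossings counted as strict sign changes between neighbouring samples.
    zc = sum(1 for a, b in zip(x, x[1:]) if a * b < 0)
    return {"mean": mean, "min": min(x), "max": max(x),
            "median": statistics.median(x), "variance": var, "std": std,
            "skewness": skew, "kurtosis": kurt, "zc": zc}


def axis_correlation(x, y):
    """Pearson correlation, an ASSUMED stand-in for the axis correlation index."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def frequency_domain_features(x, fs):
    """Spectral energy, dominant frequency (Hz) and its amplitude via a naive DFT."""
    n = len(x)
    mags = []
    for k in range(1, n // 2 + 1):  # skip the DC bin
        coeff = sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))
        mags.append(abs(coeff))
    k_dom = max(range(len(mags)), key=mags.__getitem__) + 1
    return {"se": sum(m * m for m in mags) / n,  # ASSUMED energy definition
            "df": k_dom * fs / n,
            "dfm": mags[k_dom - 1]}


# A 2 Hz sine sampled at 20 Hz for 10 seconds.
sine = [math.sin(2 * math.pi * 2 * t / 20) for t in range(200)]
freq = frequency_domain_features(sine, fs=20)
```

The naive DFT keeps the sketch dependency-free; an FFT routine would be the usual choice for long windows.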

Compared with the prior art, the invention selects features automatically using a Kate-tree-based feature importance evaluation algorithm, so the features that benefit the model are chosen automatically, the inconvenience of manual feature selection is avoided, additional manual work is reduced, and engineering efficiency is improved; at the same time, human body action recognition is performed with an ensemble learning algorithm, which has low time complexity, greatly reduces training time, and improves the recognition rate of human body actions.

Drawings

FIG. 1 is a model framework diagram of the present invention.

Fig. 2 is a comparison graph of recognition effects before and after feature selection.

Detailed Description

The invention builds a model using an ensemble learning algorithm from machine learning. Its structure is shown in FIG. 1, and it is called the RAFs (human motion recognition model based on automatic feature selection) model. The model has two layers: the first layer performs feature selection using a Kate-tree-based feature evaluation algorithm, and the second layer performs human body action recognition using the XGBoost (eXtreme Gradient Boosting) algorithm.

The embodiment of the human body action recognition method based on automatic feature selection and an integrated learning algorithm comprises the following steps:

step A, obtaining a data information set of human body actions: firstly, obtaining an original data set of human body actions (for example, an original data set of human body actions is collected by installing an inertial sensor at a human body joint); then, the raw data is preprocessed, so that a data information set of the human body motion is obtained.

The preprocessing process comprises the following steps: removing noise in the original data set by mean filtering; and removing the first 10 seconds of data at the beginning and the last 10 seconds of data at the end of the original data set, so as to discard useless data from the action preparation stage and the action finishing stage.
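The two preprocessing operations can be sketched as below. The filter width is an assumption (the text does not state one), as is the choice to truncate the averaging window at the ends of the signal; `mean_filter` and `trim_edges` are hypothetical helper names.

```python
def mean_filter(signal, width=5):
    """Centered moving average; the window is truncated at both ends.

    width is an ASSUMED parameter, not a value taken from the source.
    """
    half = width // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def trim_edges(signal, fs, seconds=10):
    """Drop the first and last `seconds` of a recording sampled at fs Hz."""
    cut = int(round(seconds * fs))
    return signal[cut:len(signal) - cut] if len(signal) > 2 * cut else []


smoothed = mean_filter([0, 0, 3, 0, 0], width=3)
trimmed = trim_edges(list(range(100)), fs=2)  # 50 s of data at 2 Hz
```

Whether filtering or trimming runs first is not specified in the text; trimming first avoids smoothing samples that are discarded anyway.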

Step B: a sliding window is set in the data information set of human body actions, the data within the sliding window is resampled, and a sample feature space is constructed from the resampling point data, the time domain features of the window data, and the frequency domain features of the window data. For resampling, a sliding window 10 seconds long with a sliding distance of 5 seconds is set in the data information set, and the data is resampled within each 10-second window. Provided that the inertial sensor samples at no less than 20 Hz, the resampling time interval is set to 0.5 s, which yields 19 resampled points per coordinate axis in each 10-second window. Both the time domain features and the frequency domain features are calculated over the 10-second sliding window. The sample feature space $F = \{f_1, f_2, \ldots, f_n\}$ is then constructed from the resampling point data, the time domain features of the window data, and the frequency domain features of the window data, where $f_i$ is the $i$-th original feature of the sample; the specific information of each element of $F$ is shown in Table 1.
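The windowing and resampling described here can be sketched as follows. One assumption is needed to reproduce the count of 19 points: the resampled points are taken at 0.5 s, 1.0 s, ..., 9.5 s after the window start, so the two window boundaries are excluded. The helper names are hypothetical.

```python
def sliding_windows(n_samples, fs, window_s=10.0, stride_s=5.0):
    """Return (start, end) sample indices of every full window."""
    win = int(round(window_s * fs))
    step = int(round(stride_s * fs))
    return [(start, start + win) for start in range(0, n_samples - win + 1, step)]


def resample_window(signal, start, fs, window_s=10.0, interval_s=0.5):
    """Pick one sample every interval_s seconds inside the window.

    ASSUMED: window boundaries are excluded, giving 10 / 0.5 - 1 = 19 points.
    """
    n_points = int(round(window_s / interval_s)) - 1
    return [signal[start + int(round(k * interval_s * fs))]
            for k in range(1, n_points + 1)]


fs = 20                          # Hz, the minimum rate mentioned in the text
signal = list(range(25 * fs))    # 25 seconds of one axis
windows = sliding_windows(len(signal), fs)
points = resample_window(signal, windows[0][0], fs)
```

With three axes this gives 3 × 19 = 57 resampled values per window, which matches the range $f_1$ to $f_{57}$ described for Table 1.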

In Table 1, $f_1$ to $f_{57}$ are the resampling points of the X, Y, and Z axes in the 10-second time window; $X_i$ is the X-axis data at the $i$-th resampling point in the window, and $Y_i$ and $Z_i$ are defined in the same way. $f_{58}$ to $f_{87}$ are the time domain features calculated for the X, Y, and Z axes in the 10-second time window, including mean (Mean), minimum (Min), maximum (Max), median (Median), variance (Variance), standard deviation (Std), skewness (Skewness), kurtosis (Kurtosis), number of zero crossings (Zc), and acceleration axis correlation index (Cac). For example, Cac(XY) is the correlation index between the X axis and the Y axis in a given 10-second time window, calculated as follows:

Table 1. Information on each element of the feature space

$f_{88}$ to $f_{96}$ are the frequency domain features calculated for the X, Y, and Z axes in the 10-second time window, including spectral energy (Se), dominant frequency (Df), and the amplitude corresponding to the dominant frequency (Dfm), where the spectral energy is calculated as follows:

Step C: the sample data in the sample feature space is trained on using a Kate-tree-based feature evaluation algorithm and an ensemble learning algorithm to obtain a trained double-layer model, namely the RAFs model, which comprises a feature selection layer and an action recognition layer.

Specifically, the step C includes:

Step C1, setting the data information set of human body actions as $T$, the sample feature space as $F$, the number of features as $K$, the random subspace coefficient as $\alpha$, the number of Kate trees as $M$, and the feature selection coefficient as $\beta$;

Step C2, randomly selecting $\alpha K$ features from the feature space $F$ to create a sub-feature space $F_{sub} = \{f_1, f_2, \ldots, f_j\}$, $(j = 1, 2, \ldots, \alpha K)$;

Step C3, mapping the data information set $T$ using the features in the sub-feature space $F_{sub}$ to obtain a new data set $T_i$, $i = 1, 2, \ldots, M$, i.e.:

Step C4, training Kate tree $CT_i$ using the new data set $T_i$;

Step C5, repeating steps C2 to C4 for $i$ from 1 to $M$ to obtain $M$ Kate trees $CT_i$;

Step C6, if the feature $f_j$ has a node in Kate tree $CT_i$ and the depth of that node in the $i$-th Kate tree is $d_{ij}$, then the importance evaluation value $E_{ij}$ of the $j$-th feature in the $i$-th Kate tree is:

if the feature $f_j$ has no node in Kate tree $CT_i$, then $E_{ij} = 0$;

Step C7, repeating step C6 $M \times K$ times, for $i$ from 1 to $M$ and $j$ from 1 to $K$, to obtain the importance evaluation value $E_{ij}$ of each feature in each Kate tree;

Step C8, for $j$ from 1 to $K$, calculating the total evaluation value $E_j$ of each feature node over all Kate trees:

Step C9, sorting the features in the sample feature space $F$ according to $E_j$, and selecting the first $\beta K$ features with the highest importance evaluation values to form a new feature space $F_{selected}$, $F_{selected} = \{f_1, f_2, \ldots, f_{\beta K}\}$

Step C10, mapping the data information set $T$ using the features in the new feature space $F_{selected}$ to obtain a new set $T_{selected}$, that is:

Step C11, inputting the new data set $T_{selected}$ into the second layer of the model, namely the XGBoost action recognition layer, and training the human body action recognition layer to obtain the final RAFs model.

Step D: human body actions are classified and recognized with the trained RAFs model, achieving automatic feature selection and human body action recognition.

To further illustrate the implementation of the present invention, the following experiments are now used to verify the beneficial effects of the present invention:

The data set used in this experiment is the open-source PAMAP2 data set from the UCI Machine Learning Repository, which contains movement data from 9 volunteers (8 male, 1 female) with an average age of 27.2 years. The inertial sensors are placed on the wrist (Wrist), chest (Chest), and ankle (Ankle), and sample at 100 Hz. The data set covers 18 daily and complex actions; only the data of 8 actions are used in the invention, as detailed in Table 2.

TABLE 2 Experimental data information Table

1) Two-thirds of each action data is selected from the data set as a training set, one-third of each action data is selected as a testing set, and the selection operation is random selection, so that the training set and the testing set are ensured to contain 8 action data.

2) The training set is set as T, the sample feature space is set as F, the number of sample features is 288, the coefficient of the random subspace is 0.7, the coefficient of the feature selection is 0.7, and the number of Kate trees is 140.

3)i=0。

4)i=i+1。

5) Randomly select 201 features from the sample feature space $F$ to construct a sub-feature space $F_{sub} = \{f_1, f_2, \ldots, f_{201}\}$.

6) Map the training set $T$ using the features in the sub-feature space $F_{sub}$ to obtain a new set $T_i$, $i = 1, 2, \ldots, 140$, i.e. $T_i = \Pi_{F_{sub}}(T)$, where $\Pi$ represents the mapping operation.

7) Train Kate tree $CT_i$ using the new data set $T_i$.

8) Repeat 4) to 7) until $i$ equals 140, resulting in 140 Kate trees $CT_i$.

9)i=0。

10)i=i+1。

11)j=0。

12)j=j+ 1。

13) Calculate the importance evaluation value of the feature node in the Kate tree. If the feature $f_j$ has a node in Kate tree $CT_i$ and the depth of that node in the $i$-th Kate tree is $d_{ij}$, then the importance evaluation value of the $j$-th feature in the $i$-th Kate tree is $E_{ij}$. If the feature $f_j$ has no node in Kate tree $CT_i$, then $E_{ij} = 0$.

14) Repeat 13) until $j$ equals 288 and $i$ equals 140, finally obtaining the evaluation value $E_{ij}$ of each feature in each Kate tree.

15)j=0。

16)j=j+ 1。

17) Calculate the total evaluation value $E_j$ of the $j$-th feature over all 140 Kate trees.

18) Repeat 17) until $j$ equals 288, finally obtaining the total evaluation value $E_j$ of each feature, 288 values in total.

19) Sort the 288 features in the feature space $F$ according to $E_j$, and select the first 201 features with the highest importance evaluation values to form a new feature space $F_{selected} = \{f_1, f_2, \ldots, f_{201}\}$.

20) Map the data set $T$ using the features in the new feature space $F_{selected}$ to obtain a new set $T_{selected}$, i.e. $T_{selected} = \Pi_{F_{selected}}(T)$, where $\Pi$ represents the mapping operation.

21) Input the new data set $T_{selected}$ into the second layer of the model, the XGBoost action recognition layer, and train the human body action recognition layer to obtain the final RAFs model. The final parameter settings of the model are shown in Table 3.
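Steps 1) and 2) of the procedure above can be illustrated with a short sketch: a random split that keeps two-thirds of each action for training, and the arithmetic that turns the coefficient 0.7 and 288 features into 201 selected features. The rounding rule for the split and the truncation used for the feature count are assumptions, and `stratified_split` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=2 / 3, seed=0):
    """Randomly split sample indices so every action keeps the same train share."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = int(round(len(indices) * train_fraction))  # ASSUMED rounding rule
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


labels = ["walk"] * 9 + ["run"] * 6
train_idx, test_idx = stratified_split(labels)

# 0.7 * 288 = 201.6, truncated to the 201 features used in steps 5) and 19).
n_subspace = int(0.7 * 288)
```

Splitting per action, rather than over the pooled samples, is what guarantees that both sets contain all 8 actions.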

TABLE 3 RAFs model parameter setting table

The F1 value is selected as the evaluation index of the model. The F1 value combines precision and recall and is calculated as follows:

$$F1 = \frac{2 \times precision \times recall}{precision + recall}$$

The higher the F1 value, the better the recognition performance of the model.
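As a small worked example of this metric, the sketch below computes precision, recall and F1 per action and then averages them. Averaging with equal weight per class (a macro average) is an assumption, since the text only speaks of an "average F1 value"; the labels are made-up toy data.

```python
def f1_per_class(y_true, y_pred):
    """Return {label: F1} using F1 = 2 * P * R / (P + R)."""
    scores = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        total = precision + recall
        scores[c] = 2 * precision * recall / total if total else 0.0
    return scores


def mean_f1(y_true, y_pred):
    """ASSUMED averaging: unweighted mean of the per-class F1 values."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


y_true = ["walk", "walk", "run", "run"]
y_pred = ["walk", "run", "run", "run"]
scores = f1_per_class(y_true, y_pred)
average = mean_f1(y_true, y_pred)
```

Here "walk" has precision 1 and recall 0.5, while "run" has precision 2/3 and recall 1, so the two classes score differently even though only one sample is misclassified.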

The RAFs model was tested using the test set with an average F1 value of 0.9 before feature selection and an average F1 value of 0.94 after feature selection, with specific experimental results as shown in table 4. A comparison of experimental data before and after feature selection is shown in fig. 2.

TABLE 4 Experimental data sheet before and after feature selection of RAFs model

As can be seen from the experimental data in Table 4 and FIG. 2, the RAFs model of the present invention realizes automatic feature selection, and improves the recognition rate of the model, and the recognition effect is far better than that of other models.

While the present invention has been described with reference to the embodiments shown in the drawings, the present invention is not limited to the embodiments, which are illustrative and not restrictive, and it will be apparent to those skilled in the art that various changes and modifications can be made therein without departing from the spirit and scope of the invention as defined in the appended claims.

Claims (7)

1. A human body action recognition method based on automatic feature selection and an integrated learning algorithm is characterized by comprising the following steps:

step A, obtaining a data information set of human body actions;

step B, setting a sliding window in the data information set of the human body action, resampling the data information set of the human body action in the sliding window, and constructing a sample characteristic space by utilizing the resampling point data, the time domain characteristic corresponding to the window data and the frequency domain characteristic corresponding to the window data;

step C, training sample data in a sample feature space by using a characteristic evaluation algorithm and an ensemble learning algorithm based on a Kate tree to obtain a trained double-layer model, wherein the double-layer model comprises a feature selection layer and an action recognition layer;

step D, classifying and identifying the human body actions by using the trained double-layer model to realize automatic feature selection and human body action identification;

the step C comprises the following steps:

step C1, setting the data information set of human body actions as $T$, the sample feature space as $F$, the number of features as $K$, the random subspace coefficient as $\alpha$, the number of Kate trees as $M$, and the feature selection coefficient as $\beta$;

step C2, randomly selecting $\alpha K$ features from the feature space $F$ to create a sub-feature space $F_{sub} = \{f_1, f_2, \ldots, f_j\}$, $(j = 1, 2, \ldots, \alpha K)$;

step C3, mapping the data information set $T$ using the features in the sub-feature space $F_{sub}$ to obtain a new data set $T_i$, $i = 1, 2, \ldots, M$, i.e.:

step C4, training Kate tree $CT_i$ using the new data set $T_i$;

step C5, repeating steps C2 to C4 for $i$ from 1 to $M$ to obtain $M$ Kate trees $CT_i$;

step C6, if the feature $f_j$ has a node in Kate tree $CT_i$ and the depth of that node in the $i$-th Kate tree is $d_{ij}$, then the importance evaluation value $E_{ij}$ of the $j$-th feature in the $i$-th Kate tree is:

if the feature $f_j$ has no node in Kate tree $CT_i$, then $E_{ij} = 0$;

step C7, repeating step C6 $M \times K$ times, for $i$ from 1 to $M$ and $j$ from 1 to $K$, to obtain the importance evaluation value $E_{ij}$ of each feature in each Kate tree;

step C8, for $j$ from 1 to $K$, calculating the total evaluation value $E_j$ of each feature node over all Kate trees:

step C9, sorting the features in the sample feature space $F$ according to $E_j$, and selecting the first $\beta K$ features with the highest importance evaluation values to form a new feature space $F_{selected}$, $F_{selected} = \{f_1, f_2, \ldots, f_{\beta K}\}$

step C10, mapping the data information set $T$ using the features in the new feature space $F_{selected}$ to obtain a new set $T_{selected}$, that is:

step C11, inputting the new data set $T_{selected}$ into an XGBoost action recognition layer to train the human body action recognition layer, so as to obtain the final trained double-layer model.

2. The human body motion recognition method based on automatic feature selection and integrated learning algorithm as claimed in claim 1, wherein in the step a, the process of obtaining the data information set of the human body motion comprises: firstly, obtaining an original data set of human body actions; then, the raw data is preprocessed, so that a data information set of the human body motion is obtained.

3. The human motion recognition method based on automatic feature selection and integrated learning algorithm as claimed in claim 1, wherein said step B comprises:

setting a sliding window in a data information set of human body actions, setting a sliding distance and a resampling time interval, and resampling the data information set in the sliding window;

computing time domain features and frequency domain features in the sliding window;

and constructing a sample feature space by utilizing the resampling point data, the time domain feature corresponding to the window data and the frequency domain feature corresponding to the window data.

4. The human body motion recognition method based on automatic feature selection and integrated learning algorithm as claimed in claim 2, wherein in the step a, the preprocessing process comprises: mean filtering is used to remove noise in the original data set.

5. The human body motion recognition method based on automatic feature selection and integrated learning algorithm as claimed in claim 2, wherein in the step a, the preprocessing process comprises: the first 10 seconds of data at the very beginning and the last 10 seconds of data at the very end in the original data set are removed.

6. The human motion recognition method based on automatic feature selection and integrated learning algorithm according to claim 1 or 3, wherein the time domain features comprise one or more of mean, minimum, maximum, median, variance, standard deviation, skewness, kurtosis, number of zero crossings, acceleration coordinate axis correlation index.

7. The method for human motion recognition based on automatic feature selection and integrated learning algorithm according to claim 1 or 3, wherein the frequency domain features comprise one or more of spectral energy, dominant frequency, and amplitude corresponding to the dominant frequency.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910203015.0A CN109934179B (en) | 2019-03-18 | 2019-03-18 | Human body action recognition method based on automatic feature selection and integrated learning algorithm |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910203015.0A CN109934179B (en) | 2019-03-18 | 2019-03-18 | Human body action recognition method based on automatic feature selection and integrated learning algorithm |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN109934179A (en) | 2019-06-25 |
| CN109934179B (en) | 2022-08-02 |

Family

ID=66987359

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN201910203015.0A Expired - Fee Related CN109934179B (en) | 2019-03-18 | 2019-03-18 | Human body action recognition method based on automatic feature selection and integrated learning algorithm |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN109934179B (en) |

Families Citing this family (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN110689041A (en) * | 2019-08-20 | 2020-01-14 | 陈羽旻 | Multi-target behavior action recognition and prediction method, electronic equipment and storage medium |
| CN110674875A (en) * | 2019-09-25 | 2020-01-10 | 电子科技大学 | Pedestrian motion mode identification method based on deep hybrid model |
| CN111028339B (en) * | 2019-12-06 | 2024-03-29 | 国网浙江省电力有限公司培训中心 | Behavior modeling method and device, electronic equipment and storage medium |
| CN111199187A (en) * | 2019-12-11 | 2020-05-26 | 中国科学院计算机网络信息中心 | Animal behavior identification method based on algorithm, corresponding storage medium and electronic device |

Family Cites Families (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2012006578A2 (en) * | 2010-07-08 | 2012-01-12 | The Regents Of The University Of California | End-to-end visual recognition system and methods |
| US8306257B2 (en) * | 2011-01-31 | 2012-11-06 | Seiko Epson Corporation | Hierarchical tree AAM |
| US20160026917A1 (en) * | 2014-07-28 | 2016-01-28 | Causalytics, LLC | Ranking of random batches to identify predictive features |
| CN105931224A (en) * | 2016-04-14 | 2016-09-07 | 浙江大学 | Pathology identification method for routine scan CT image of liver based on random forests |
| CN108921197A (en) * | 2018-06-01 | 2018-11-30 | 杭州电子科技大学 | A kind of classification method based on feature selecting and Integrated Algorithm |
| CN109034398B (en) * | 2018-08-10 | 2023-09-12 | 深圳前海微众银行股份有限公司 | Gradient lifting tree model construction method and device based on federal training and storage medium |

Also Published As

| Publication number | Publication date |
|---|---|
| CN109934179A (en) | 2019-06-25 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN109934179B (en) | | Human body action recognition method based on automatic feature selection and integrated learning algorithm |
| Zhu et al. | | Using deep learning for energy expenditure estimation with wearable sensors |
| Khan et al. | | Accelerometer signal-based human activity recognition using augmented autoregressive model coefficients and artificial neural nets |
| CN111461176A (en) | | Multi-mode fusion method, device, medium and equipment based on normalized mutual information |
| CN115563484A (en) | | Street greening quality detection method based on physiological awakening identification |
| CN107832737A (en) | | Electrocardiogram interference identification method based on artificial intelligence |
| CN103699873A (en) | | Lower-limb flat ground walking gait recognition method based on GA-BP (Genetic Algorithm-Back Propagation) neural network |
| CN113197579A (en) | | Intelligent psychological assessment method and system based on multi-mode information fusion |
| CN109544518A (en) | | A kind of method and its system applied to the assessment of skeletal maturation degree |
| CN110755085B (en) | | Motion function evaluation method and equipment based on joint mobility and motion coordination |
| CN113974612B (en) | | Automatic evaluation method and system for upper limb movement function of stroke patient |
| CN105868532B (en) | | A kind of method and system of intelligent evaluation heart aging degree |
| CN110575141A (en) | | Epilepsy detection method based on generation countermeasure network |
| CN113171080B (en) | | Energy metabolism evaluation method and system based on wearable sensing information fusion |
| CN111462082A (en) | | Focus picture recognition device, method and equipment and readable storage medium |
| Arozi et al. | | EMG signal processing of Myo armband sensor for prosthetic hand input using RMS and ANFIS |
| Aydemir et al. | | A new method for activity monitoring using photoplethysmography signals recorded by wireless sensor |
| CN116504362A (en) | | Sleep adjustment scheme generation method, device, equipment and storage medium |
| CN114224343B (en) | | Cognitive disorder detection method, device, equipment and storage medium |
| Li et al. | | Tfformer: A time frequency information fusion based cnn-transformer model for osa detection with single-lead ecg |
| CN114299996A (en) | | AdaBoost algorithm-based speech analysis method and system for key characteristic parameters of symptoms of frozen gait of Parkinson's disease |
| CN111916179A (en) | | Method for carrying out 'customized' diet nourishing model based on artificial intelligence self-adaption individual physical sign |
| CN108985278A (en) | | A kind of construction method of the gait function assessment models based on svm |
| Hu et al. | | Automatic heart sound classification using one dimension deep neural network |
| CN115633961A (en) | | Construction method and system based on dynamic weighted decision fusion high-fear recognition model |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |
| | CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 20220802 |