CN109035287B - Foreground image extraction method and device and moving vehicle identification method and device - Google Patents


- Publication number

- CN109035287B (application CN201810720684.0A)

- Authority

- CN

- China

- Prior art keywords

- image

- foreground

- target

- foreground image

- moving


- Legal status

- Active



Classifications

All classes fall under G (PHYSICS) > G06 (COMPUTING; CALCULATING OR COUNTING), in either G06T (IMAGE DATA PROCESSING OR GENERATION, IN GENERAL) or G06V (IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING).

- G06T7/194: Segmentation; Edge detection involving foreground-background segmentation (under G06T7/00 Image analysis > G06T7/10 Segmentation; Edge detection)

- G06T5/70: Denoising; Smoothing (under G06T5/00 Image enhancement or restoration)

- G06T7/215: Motion-based segmentation (under G06T7/00 Image analysis > G06T7/20 Analysis of motion)

- G06T7/254: Analysis of motion involving subtraction of images (under G06T7/00 Image analysis > G06T7/20 Analysis of motion)

- G06V20/41: Higher-level, semantic clustering, classification or understanding of video scenes, e.g. detection, labelling or Markovian modelling of sport events or news items (under G06V20/00 Scenes; Scene-specific elements > G06V20/40 Scenes; Scene-specific elements in video content)

- G06V2201/07: Target detection (under G06V2201/00 Indexing scheme relating to image or video recognition or understanding)

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Multimedia (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Computational Linguistics (AREA)

- Software Systems (AREA)

- Image Analysis (AREA)

Abstract

The invention relates to a foreground image extraction method and device. The method comprises: acquiring a first foreground image obtained by performing inter-frame differencing on a target image; acquiring a second foreground image obtained by performing background differencing on the target image; extracting the contour of the moving target from the first foreground image and determining the moving region of the moving target according to the contour; and adding the first foreground image and the second foreground image to obtain an initial foreground image, then extracting the foreground image of the moving target from the initial foreground image according to the moving region. Adding the two foreground images combines their common pixel features; the first foreground image yields an accurate contour of the moving target and therefore accurately reflects its moving region in the image; and extracting the foreground image within that moving region improves the accuracy of foreground image extraction. A moving vehicle identification method and apparatus are also provided.

Description

Technical Field

The present invention relates to the field of image processing technologies, and in particular, to a foreground image extraction method, a foreground image extraction device, a moving vehicle identification method, a moving vehicle identification device, a computer device, and a computer-readable storage medium.

Background

With the development of image processing technology, image detection and recognition have been widely applied to moving objects. For example, intelligent transportation systems use detection and recognition based on video analysis to detect and identify moving vehicles in traffic video images in real time.

A moving target can be detected and identified by extracting its foreground image. In the conventional technique, however, foreground extraction is easily affected by interference such as noise and shadows in the image. For example, when the video image sequence of a moving target is heavily noisy, the error rate rises when the extracted foreground image is used to detect the moving target; and a foreground extraction method that is easily disturbed by shadows detects moving targets with low accuracy at night, so the detection is inaccurate.

Disclosure of Invention

In view of the low accuracy of the conventional technique, it is necessary to provide a foreground image extraction method, a foreground image extraction device, a moving vehicle identification method, a moving vehicle identification device, a computer device, and a computer-readable storage medium.

A foreground image extraction method comprises the following steps:

acquiring a first foreground image obtained by performing inter-frame differencing on a target image, the target image being an image that contains a moving target;

acquiring a second foreground image obtained by performing background differencing on the target image;

extracting the contour of the moving target from the first foreground image, and determining a moving region of the moving target according to the contour; and

adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving region of the moving target.

In this foreground image extraction method, the initial foreground image is obtained by adding the first and second foreground images, so that the common pixel features of the two foreground images are combined and the influence of interference such as noise and shadow on foreground extraction is avoided. The first foreground image yields an accurate contour of the moving target and therefore accurately reflects the moving region of the moving target in each frame. Extracting the foreground image of the moving target from the initial foreground image within that moving region improves the accuracy of foreground extraction and, in turn, the accuracy of detecting and identifying the moving target.

In one embodiment, there is provided a moving vehicle identification method, including the steps of:

extracting a target image containing a moving target from a traffic flow video image sequence, where the moving target includes a moving vehicle;

acquiring a first foreground image obtained by performing inter-frame differencing on the target image, and acquiring a second foreground image obtained by performing background differencing on the target image;

extracting the contour of the moving target from the first foreground image, and determining a moving region of the moving target according to the contour;

adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving region of the moving target; and

identifying the moving vehicle in the traffic flow video image sequence according to the foreground image.

In this moving vehicle identification method, the initial foreground image is obtained by adding the first and second foreground images of the target image taken from the traffic flow video image sequence, so that the common pixel features of the two foreground images are combined and the influence of interference such as noise and shadow on foreground extraction is avoided. The first foreground image yields an accurate contour of the moving target and therefore accurately reflects the moving region of the moving target in each frame, and extracting the foreground image of the moving target from the initial foreground image within that region improves the accuracy of foreground extraction. Because this foreground image is used to detect and identify moving vehicles in the traffic flow video image sequence, the accuracy of detecting and identifying moving vehicles also improves.

In one embodiment, there is provided a foreground image extraction apparatus including:

a first foreground acquisition module, configured to acquire a first foreground image obtained by performing inter-frame differencing on a target image, the target image being an image that contains a moving target;

a second foreground acquisition module, configured to acquire a second foreground image obtained by performing background differencing on the target image;

a moving region determination module, configured to extract the contour of the moving target from the first foreground image and determine the moving region of the moving target according to the contour; and

a moving foreground extraction module, configured to add the first foreground image and the second foreground image to obtain an initial foreground image, and to extract the foreground image of the moving target from the initial foreground image according to the moving region of the moving target.

Like the method, this foreground image extraction device adds the first and second foreground images to obtain the initial foreground image, which combines their common pixel features and avoids the influence of noise, shadow and similar interference on foreground extraction. It uses the first foreground image to obtain an accurate contour, and hence an accurate moving region, of the moving target in each frame, and extracts the foreground image of the moving target within that region, which improves the accuracy of foreground extraction and of detecting and identifying the moving target.

In one embodiment, there is provided a moving vehicle identification device comprising:

a target image extraction module, configured to extract a target image containing a moving target from a traffic flow video image sequence, where the moving target includes a moving vehicle;

a foreground image acquisition module, configured to acquire a first foreground image obtained by performing inter-frame differencing on the target image, and a second foreground image obtained by performing background differencing on the target image;

a moving region obtaining module, configured to extract the contour of the moving target from the first foreground image and determine the moving region of the moving target according to the contour;

a foreground image extraction module, configured to add the first foreground image and the second foreground image to obtain an initial foreground image, and to extract the foreground image of the moving target from the initial foreground image according to the moving region of the moving target; and

a moving vehicle identification module, configured to identify moving vehicles in the traffic flow video image sequence according to the foreground image.

Like the corresponding method, this moving vehicle identification device adds the first and second foreground images of the target image taken from the traffic flow video image sequence, which combines their common pixel features and avoids the influence of noise, shadow and similar interference on foreground extraction. It uses the first foreground image to obtain an accurate contour and moving region of the moving target in each frame, extracts the foreground image within that region, and uses this foreground image to detect and identify moving vehicles in the sequence, which improves both the accuracy of foreground extraction and the accuracy of detecting and identifying moving vehicles.

A computer device comprising a memory, a processor and a computer program stored on the memory and executable on the processor, the processor implementing the foreground image extraction method or the moving vehicle identification method as described in any one of the above embodiments when executing the computer program.

By running the computer program on the processor, the computer device improves the accuracy of foreground image extraction and of detecting and identifying the moving target.

A computer-readable storage medium on which a computer program is stored, which when executed by a processor implements the foreground image extraction method or the moving vehicle identification method as described in any one of the above embodiments.

Through the stored computer program, the computer-readable storage medium improves the accuracy of foreground image extraction and of detecting and identifying the moving target.

Drawings

FIG. 1 is a schematic flow chart of a foreground image extraction method in one embodiment;

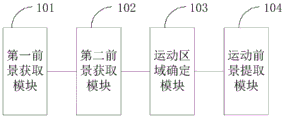

FIG. 2 is a schematic diagram of a foreground image extraction apparatus in one embodiment;

FIG. 3 is a schematic flow chart of a moving vehicle identification method in one embodiment;

FIG. 4 is a schematic structural diagram of a moving vehicle identification device in one embodiment;

FIG. 5 is a diagram of the internal structure of a computer device in one embodiment.

Detailed Description

In order to make the objects, technical solutions and advantages of the present invention more apparent, the present invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described herein are merely illustrative of the invention and are not intended to limit the invention.

It should be noted that the terms "first" and "second" in the embodiments of the present invention are used only to distinguish similar objects and do not denote a particular order. Where permitted, the objects distinguished by "first" and "second" may be interchanged, so that the embodiments described herein can be implemented in orders other than those illustrated or described.

In one embodiment, a foreground image extraction method is provided. Referring to FIG. 1, which is a schematic flow chart of the foreground image extraction method in one embodiment, the method may include the following steps:

Step S101, acquiring a first foreground image obtained by performing inter-frame differencing on a target image.

The target image is an image containing a moving target and may be a frame of a video image sequence. The first foreground image is a foreground image of the target image that contains the moving target. It may be obtained by inter-frame difference processing, which extracts the moving foreground by performing a difference operation between the target image and its adjacent frames.

In this step, the target image and its adjacent frames are obtained and processed with the inter-frame difference method to obtain the first foreground image.

Step S102, a second foreground image obtained by carrying out background difference on the target image is obtained.

In this step, the second foreground image is a foreground image of the target image that contains the moving target. It may be obtained by performing background subtraction on the target image, which extracts the moving region by subtracting a background image from the current frame.

The background image may be set to the first frame of the video image sequence that contains the target image, or it may be built from per-pixel background statistics of the current frame. For example, if a pixel of the real-time foreground image of the current frame has been detected as foreground multiple times before the current time, that pixel is set as a background pixel, and the background image is generated from these background pixels.
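The foreground-count rule for building the background can be sketched as follows. This is a minimal, dependency-free sketch under stated assumptions, not the patent's implementation: images are lists of lists of gray values, "multiple times" is read as a run of consecutive foreground detections, an absorbed pixel takes the current frame's gray value, and the name `absorb_static_foreground` and its `max_fg_count` limit are hypothetical.

```python
def absorb_static_foreground(background, frame, fg_mask, fg_count, max_fg_count=50):
    """Turn pixels that keep being detected as foreground into background.

    background, frame, fg_mask, fg_count: equally sized lists of lists.
    fg_mask holds 1 (foreground) or 0 (background) for the current frame.
    max_fg_count is a hypothetical limit; the source only says "multiple times".
    Returns new (background, fg_count) maps; the inputs are left unchanged.
    """
    new_bg = [row[:] for row in background]
    new_count = [row[:] for row in fg_count]
    for y, mask_row in enumerate(fg_mask):
        for x, is_fg in enumerate(mask_row):
            if not is_fg:
                # Assumption: a background detection resets the run.
                new_count[y][x] = 0
                continue
            new_count[y][x] += 1
            if new_count[y][x] >= max_fg_count:
                # Persistent "foreground" is absorbed into the background.
                new_bg[y][x] = frame[y][x]
                new_count[y][x] = 0
    return new_bg, new_count
```

Called once per frame with the latest foreground mask, the returned count map is fed back in on the next call.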

In this step, the background image of the target image is obtained, and the target image and its background image are processed with the background difference method to obtain the second foreground image.

Step S103, extracting the contour of the moving target from the first foreground image, and determining the moving region of the moving target according to the contour.

The contour of the moving target is the outline of the moving target in the target image. It can be marked in the target image with pixels of a uniform value and describes contour information such as the range of pixels that the moving target occupies in the target image.

The contour of the moving target is extracted from the first foreground image, and the moving region of the moving target in the target image is determined from the contour; the moving region may be the bounding rectangle of the contour. The moving region delimits the main range of motion of the moving target, effectively shields the moving target from interference by other pixels in the target image, and reduces the probability of falsely extracting foreground, which helps improve the accuracy of extracting the foreground image of the moving target.
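A dependency-free sketch of turning a binary first foreground image into moving regions follows. It swaps explicit contour tracing for 8-connected flood fill, which gives the same bounding rectangle as the outer contour of each blob; with OpenCV the usual route would instead be `cv2.findContours` followed by `cv2.boundingRect`. The function name `moving_regions` and the list-of-lists mask representation are assumptions for illustration.

```python
from collections import deque


def moving_regions(mask):
    """Bounding rectangles (x_min, y_min, x_max, y_max) of 8-connected blobs.

    mask: list of lists holding 1 for foreground and 0 for background.
    Rectangles are inclusive and returned in raster-scan order of discovery.
    """
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Flood-fill one blob and track its extent.
            seen[y][x] = True
            queue = deque([(x, y)])
            x0 = x1 = x
            y0 = y1 = y
            while queue:
                cx, cy = queue.popleft()
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = cx + dx, cy + dy
                        if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            boxes.append((x0, y0, x1, y1))
    return boxes
```

In practice a minimum-area filter on the boxes would likely be needed to drop isolated noise pixels; the source does not mention one.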

Step S104, adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving region of the moving target.

In this step, the initial foreground image may be obtained by adding the pixel values of corresponding pixels in the first and second foreground images, so that the common pixel features of the two foreground images are combined and the influence of interference such as noise and shadow on foreground extraction is avoided. Specifically, the initial foreground image compensates for two weaknesses. The conventional inter-frame difference method misjudges a moving target that suddenly appears in the target image, which lowers the accuracy of the foreground image. The conventional background difference method cannot effectively capture the features of the moving target when the scene changes dynamically, which leaves holes in the target and also lowers the accuracy of the foreground image. The foreground image of the moving target is then extracted from the initial foreground image within the moving region, which prevents other pixels of the initial foreground image from interfering with the extraction, further reduces the probability of errors, and improves the accuracy of extracting the foreground image of the moving target.
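The addition and region-restricted extraction of step S104 can be sketched as below, assuming both foreground images are 0/1 masks of equal size and that regions are inclusive rectangles `(x_min, y_min, x_max, y_max)`. The source does not say how the summed values (0, 1 or 2) are turned back into a foreground, so the hypothetical `min_votes` parameter makes that choice explicit: 1 keeps pixels flagged by either image, 2 keeps only pixels both images agree on. The name `combine_and_crop` is likewise hypothetical.

```python
def combine_and_crop(first_fg, second_fg, regions, min_votes=1):
    """Add two binary foregrounds and keep only pixels inside moving regions.

    first_fg, second_fg: equally sized lists of lists of 0/1 values.
    regions: inclusive (x_min, y_min, x_max, y_max) rectangles.
    min_votes: hypothetical rule for how large the sum must be to count.
    Returns a 0/1 mask; everything outside the regions is set to 0.
    """
    h = len(first_fg)
    w = len(first_fg[0]) if h else 0
    # Pixel-wise addition gives the initial foreground (values 0, 1 or 2).
    initial = [[first_fg[y][x] + second_fg[y][x] for x in range(w)] for y in range(h)]
    out = [[0] * w for _ in range(h)]
    for x0, y0, x1, y1 in regions:
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                out[y][x] = 1 if initial[y][x] >= min_votes else 0
    return out
```

For example, with `first_fg=[[1, 0, 0], [0, 1, 0]]`, `second_fg=[[0, 1, 0], [0, 1, 1]]` and a single region `(0, 0, 1, 1)`, the pixel at the right edge is dropped because it lies outside the region even though the background difference flagged it.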


In one embodiment, acquiring the first foreground image by performing inter-frame differencing on the target image in step S101 may include:

subtracting each of the two adjacent frames from the target image to obtain two difference images; binarizing each difference image according to a set segmentation threshold to obtain two binary images; and multiplying the two binary images to obtain the first foreground image.

In this embodiment, the first foreground image is obtained by three-frame differencing of the target image and its two adjacent frames.

After the video image sequence has been low-pass filtered, the target image and its two adjacent frames are extracted. The gray values of corresponding pixels of the target image and each adjacent frame are subtracted to obtain two difference images, each difference image is binarized with the set segmentation threshold to obtain two binary images, and the two binary images are multiplied to obtain the first foreground image.
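The text does not say which low-pass filter is applied to the sequence. As an assumption only, a 3x3 mean (box) filter with edge replication is a simple choice, sketched here on a list-of-lists gray image; the name `mean_filter_3x3` is hypothetical.

```python
def mean_filter_3x3(img):
    """3x3 box (mean) filter with edge replication; returns integer gray values."""
    h = len(img)
    w = len(img[0]) if h else 0
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp coordinates so border pixels reuse their nearest neighbor.
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total // 9  # integer mean of the 9 samples
    return out
```

A single bright pixel is spread over its neighborhood, which is how such a filter suppresses isolated noise before differencing.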

Specifically, let f(x, y, t-1), f(x, y, t) and f(x, y, t+1) be the gray values of pixel (x, y) at times t-1, t and t+1, respectively, where f(x, y, t-1) corresponds to the frame preceding the target image, f(x, y, t) to the target image, and f(x, y, t+1) to the frame following it. The two difference images can then be expressed as:

$$\mathrm{diff}(x,y,t)=\lvert f(x,y,t)-f(x,y,t-1)\rvert$$

$$\mathrm{diff}(x,y,t+1)=\lvert f(x,y,t+1)-f(x,y,t)\rvert$$

Here diff(x, y, t) is the difference image at time t and diff(x, y, t+1) is the difference image at time t+1. Each difference image is binarized with a threshold suitable for segmentation, which separates the foreground of the target image from the background. Let TH be the set segmentation threshold that decides whether each pixel belongs to the foreground or to the background; the corresponding binary images are then:

$$R(x,y,t)=\begin{cases}1, & \mathrm{diff}(x,y,t)>TH\\ 0, & \text{otherwise}\end{cases}\qquad R(x,y,t+1)=\begin{cases}1, & \mathrm{diff}(x,y,t+1)>TH\\ 0, & \text{otherwise}\end{cases}$$

The foreground image of the target image (the first foreground image) is:

$$H_{\mathrm{temporal\_foreground}}(x,y,t)=R(x,y,t)\cdot R(x,y,t+1)$$
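The three-frame difference above maps directly to code. This is a minimal sketch assuming list-of-lists gray images and binary values of 1 and 0 (so the product of the two binary images acts as a logical AND); the name `three_frame_difference` is hypothetical.

```python
def three_frame_difference(prev, cur, nxt, th):
    """First (temporal) foreground via three-frame differencing.

    prev, cur, nxt: gray images f(x, y, t-1), f(x, y, t), f(x, y, t+1)
    as equally sized lists of lists. th: segmentation threshold TH.
    Returns the 0/1 mask H_temporal_foreground(x, y, t).
    """
    h = len(cur)
    w = len(cur[0]) if h else 0
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            d1 = abs(cur[y][x] - prev[y][x])  # diff(x, y, t)
            d2 = abs(nxt[y][x] - cur[y][x])   # diff(x, y, t+1)
            r1 = 1 if d1 > th else 0          # R(x, y, t)
            r2 = 1 if d2 > th else 0          # R(x, y, t+1)
            out[y][x] = r1 * r2               # product acts as logical AND
    return out
```

A pixel survives only if it changed both from the previous frame to the target image and from the target image to the next frame, which is what suppresses the "ghost" left by two-frame differencing.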

The scheme of this embodiment effectively obtains the first foreground image of the target image. Obtaining it with the three-frame difference algorithm captures useful information such as the contours, corners and texture of the target image, which helps ensure accurate extraction of the foreground image of the moving target.

In one embodiment, acquiring the second foreground image by background differencing of the target image in step S102 may include:

calculating a background image of the target image from an initial background image and a background update factor, and performing a difference operation between the background image and the target image to obtain the second foreground image.

This embodiment performs background difference processing on the target image using a pre-stored initial background image and a pre-stored background update factor. The initial background image is the image from which the current background image of the target image is computed; the background update factor is the weighting factor of the background update and reflects how fast the background is updated. The current background image is calculated from these two quantities, and the pixel values of corresponding pixels of the background image and the target image are then differenced to obtain the second foreground image.

Generally, the more complex the background model, the more accurately it extracts the foreground image and the better it detects moving targets. This embodiment, however, takes the real-time requirement into account and performs the background difference operation with an efficient background model, so that the second foreground image is obtained quickly and extraction efficiency improves.

In one embodiment, the step of calculating the background image of the target image according to the initial background image and the background update factor in the above embodiments may further include:

Step S201, setting the first frame of the image sequence as the initial background image.

The image sequence is an image sequence containing the target image, for example a video image sequence. In this step, the first frame may be extracted from a pre-stored image sequence and set as the initial background image, which further improves the efficiency of obtaining the background image.

Step S202, obtaining the background update factor, and determining the gray values of all pixels of the initial background image and the target image.

This step obtains the pre-stored background update factor and the gray values of all pixels of the initial background image and the target image.

Step S203, calculating the gray value of each pixel of the background image from the gray values of the corresponding pixels of the initial background image and the target image with the following formula:

$$H_{\mathrm{background}}(x,y)=(1-\alpha)\,H_{\mathrm{background}}(x,y,t_0)+\alpha\,f(x,y)$$

where $H_{\mathrm{background}}(x,y)$ is the gray value of each pixel of the background image; $\alpha$ is the background update factor, typically 0.005; $H_{\mathrm{background}}(x,y,t_0)$ is the gray value of each pixel of the initial background image; $f(x,y)$ is the gray value of each pixel of the target image; and $x$ and $y$ are the horizontal and vertical coordinates of the pixel.

Step S204, obtaining the background image from the gray values of its pixels.

In this step, the background image of the target image is formed from the pixel gray values calculated in step S203.
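Steps S201 to S204 amount to a per-pixel weighted average of the initial background and the target image. A minimal sketch of step S203, assuming list-of-lists gray images; the name `update_background` is hypothetical and the default `alpha=0.005` is the value given in the text.

```python
def update_background(initial_bg, frame, alpha=0.005):
    """Per pixel: H_background = (1 - alpha) * initial_bg + alpha * f."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(bg_row, f_row)]
        for bg_row, f_row in zip(initial_bg, frame)
    ]
```

With the default factor, a background pixel of 100 seen against a target pixel of 200 moves only to about 100.5, which is why a small α makes the background adapt slowly and resist being overwritten by passing vehicles.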

Taking the image sequence as an example, let $H_{\mathrm{background}}(x,y,t)$ be the gray value of pixel $(x,y)$ in the background image after the update at time $t$. The first input frame is taken as the initial background image at relative time $t=1$, denoted $t_0$, so that $H_{\mathrm{background}}(x,y,t_0)=f(x,y,t_0)$. For $t\neq 1$, the updated background image is expressed as:

$$H_{\mathrm{background}}(x,y,t)=(1-\alpha)\,H_{\mathrm{background}}(x,y,t_0)+\alpha\,f(x,y,t)$$

Here $\alpha$ is the weighting factor of the background update; its magnitude reflects how fast the background is updated, and it may be set to 0.005.
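Applied over a whole sequence, the update can be sketched as follows. The formula as printed always weights the initial background $H_{\mathrm{background}}(x,y,t_0)$; a common reading of a "background update" instead feeds each updated background into the next step (a running average). That recursive reading is an assumption here, so the hypothetical `recursive` flag lets the sketch follow either interpretation; `background_sequence` is also a hypothetical name.

```python
def background_sequence(frames, alpha=0.005, recursive=True):
    """One background estimate per frame; frames[0] is the initial background.

    frames: list of equally sized gray images (lists of lists).
    recursive=True  -> H(t) = (1 - alpha) * H(t-1) + alpha * f(t)  (assumption)
    recursive=False -> H(t) = (1 - alpha) * H(t0)  + alpha * f(t)  (as printed)
    """
    initial = [row[:] for row in frames[0]]  # H_background(x, y, t0) = f(x, y, t0)
    current = initial
    backgrounds = [initial]
    for frame in frames[1:]:
        base = current if recursive else initial
        current = [
            [(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(base, frame)
        ]
        backgrounds.append(current)
    return backgrounds
```

OpenCV's `cv2.accumulateWeighted` implements the recursive form in place, if a library routine is preferred.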

The technical solution of this embodiment extracts the background image of the target image: the first frame is set as the initial background image, and the background update factor is used to obtain the current background image of the target image quickly, which further improves the efficiency of background extraction.

In an embodiment, further, the step of performing a difference operation on the background image and the target image to obtain the second foreground image in the above embodiment may include:

subtracting the target image and the background image to obtain a background difference image; and carrying out binarization processing on the background differential image according to a set segmentation threshold value to obtain a second foreground image.

The embodiment mainly performs difference operation on pixel values of corresponding pixel points of a target image and a background image to obtain a background difference image, and performs binarization processing on the background difference image through a segmentation threshold value to obtain a second foreground image.

Specifically, the background difference image is obtained by taking the absolute difference between the current real-time image at time $t$ (which corresponds to the target image) and the current real-time background image at time $t$:

$$F(x, y, t) = \left| f(x, y, t) - H_{\text{background}}(x, y, t) \right|$$

where $F(x, y, t)$ is the gray value of each pixel of the background difference image, $H_{\text{background}}(x, y, t)$ is the gray value of each pixel of the background image, and $f(x, y, t)$ is the gray value of each pixel of the target image.

The background difference image $F(x, y, t)$ is then binarized with the set segmentation threshold $TH$ to obtain the second foreground image of the target image, whose per-pixel gray value is denoted $H_{\text{spatial\_foreground}}(x, y, t)$.
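A minimal sketch of the background difference and binarization, assuming gray images stored as lists of rows. The section does not reproduce the binarization formula, so the output labels (1 for foreground, 0 for background) and the strict comparison $F > TH$ are assumptions; the function names are hypothetical.

```python
from typing import List

Image = List[List[float]]


def background_difference(frame: Image, background: Image) -> Image:
    """F(x, y, t) = |f(x, y, t) - H_background(x, y, t)| for every pixel."""
    return [
        [abs(f - b) for f, b in zip(f_row, b_row)]
        for f_row, b_row in zip(frame, background)
    ]


def binarize(diff: Image, threshold: float) -> List[List[int]]:
    """Label a pixel 1 (foreground) if its difference exceeds TH, else 0."""
    return [[1 if v > threshold else 0 for v in row] for row in diff]


def second_foreground(frame: Image, background: Image, threshold: float) -> List[List[int]]:
    """Background difference followed by binarization."""
    return binarize(background_difference(frame, background), threshold)
```

For example, `second_foreground([[10, 200], [50, 52]], [[12, 100], [50, 20]], 30)` gives the difference image `[[2, 100], [0, 32]]` and therefore the binary image `[[0, 1], [0, 1]]`.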

The technical scheme of this embodiment applies background difference and binarization to the target image and its background image, thereby extracting the second foreground image of the target image.

In one embodiment, the method may further include the steps of:

Step S301, obtaining the coordinates and gray values of all pixels in an image to be binarized;

in this step, the image to be binarized may include the two inter-frame difference images obtained by subtracting each of the two adjacent frames from the target image, and the background difference image obtained by subtracting the background image from the target image;

this step mainly obtains the coordinates and gray values of all pixels of the image to be binarized.

Step S302, calculating the mean and variance of the gray values of the pixels according to their coordinates and gray values;

Step S303, setting a segmentation threshold according to the mean and the variance.

Taking the two inter-frame difference images and the background difference image as an example, assume that each image to be binarized has size $M \times N$. The inter-frame difference images $diff(x, y, t)$ and the background difference image $F(x, y, t)$ can be written uniformly as a difference image $D(x, y, t)$, whose mean $\mu_t$ and variance $\sigma_t^2$ are computed as:

$$\mu_t = \frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N} D(x, y, t), \qquad \sigma_t^2 = \frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N}\bigl(D(x, y, t) - \mu_t\bigr)^2$$

Then, the difference image $D(x, y, t)$ is segmented into a foreground image and a relatively static background image using a threshold determined by $\mu_t$, $\sigma_t$ and a weight factor $K$.

Here $K$ is a weight factor, generally in the range 1.0 to 2.0; $K = 1.5$ was used when verifying this scheme. If $K$ is too large, most foreground pixels of the moving target are classified as background pixels; if it is too small, the opposite happens.
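The threshold computation in steps S301 to S303 can be sketched as below. The section does not spell out how the threshold is formed from the mean and variance; the sketch assumes the common form $TH = \mu_t + K\sigma_t$, which matches the stated effect of $K$ (a larger $K$ raises the threshold, so more pixels fall into the background). Function names are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]


def adaptive_threshold(diff: Image, k: float = 1.5) -> float:
    """Threshold from the mean and (population) variance of an M x N
    difference image D. Assumed form: TH = mu + k * sigma."""
    values = [v for row in diff for v in row]
    n = len(values)
    mu = sum(values) / n
    variance = sum((v - mu) ** 2 for v in values) / n
    return mu + k * math.sqrt(variance)


def segment(diff: Image, k: float = 1.5) -> List[List[int]]:
    """Split D into foreground (1) and relatively static background (0)."""
    th = adaptive_threshold(diff, k)
    return [[1 if v > th else 0 for v in row] for row in diff]
```

For $D = [[2, 4], [2, 4]]$ the mean is 3 and the variance is 1, so with $K = 1.5$ the threshold is 4.5. Because the threshold follows the statistics of each difference image, it adapts to frames with more or less overall change.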

The technical scheme of this embodiment sets the binarization segmentation threshold dynamically from the pixel statistics of the image to be binarized, such as the mean and variance of its gray values. This improves the accuracy of the segmentation threshold and thus helps extract the foreground image accurately.

In one embodiment, the step of determining the motion region of the moving object according to the contour in step S103 may include:

determining the coordinates of each pixel point of the moving target according to the contour; acquiring a circumscribed rectangular area of the moving target according to the coordinates of each pixel point of the moving target; and determining a motion area according to the circumscribed rectangular area.

In this embodiment, the circumscribed rectangle of the moving target is obtained from the coordinates of the pixels of the moving target, and the motion area of the moving target is determined from this rectangle.

The contour of the moving target marks its position and extent in the target image, so the position of each point of the moving target can be determined from the contour. The position coordinates of these pixels are extracted from the target image, the circumscribed rectangle of the contour is calculated from them, and the area framed by this rectangle in the target image is set as the motion area of the moving target.

Taking an image sequence as an example, suppose that the foreground image of each frame contains the contours of several moving objects, and let $(X_i, Y_i)$ be the coordinate vector of the contour of the $i$-th moving object, which records the position of that contour in the planar field of view. Because the motion area of a moving object changes over time, the contour coordinates obtained in each frame of the sequence differ; representing them as coordinate vectors allows the contours of moving objects in the foreground negative film to be distinguished at different moments. In the target image at time $t$, the motion area of the moving objects is represented by a mask formed from the circumscribed rectangles of these contours.

Here $H_{\text{temporal\_foreground\_mask}}(x, y, t)$ denotes the gray value of each pixel in the motion area of the moving objects; each white rectangular area in this mask exactly and completely covers the contour of the moving object at the corresponding position in the foreground image.
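The following sketch turns a binary first foreground image into the motion-area mask. It substitutes 8-connected component labelling for contour extraction (each component's pixel coordinates play the role of the contour coordinates $(X_i, Y_i)$), takes the minimum and maximum coordinates as the circumscribed rectangle, and fills every rectangle with 1. The labelling choice, the 1/0 mask values and all names are assumptions for illustration.

```python
from collections import deque
from typing import List, Tuple

Binary = List[List[int]]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def bounding_boxes(binary: Binary) -> List[Box]:
    """Circumscribed rectangle of each 8-connected group of foreground (1) pixels.

    Connected-component labelling stands in for contour extraction here.
    """
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for y in range(h):
        for x in range(w):
            if binary[y][x] != 1 or seen[y][x]:
                continue
            # Breadth-first search collects the pixel coordinates of one object.
            seen[y][x] = True
            queue = deque([(x, y)])
            xs, ys = [], []
            while queue:
                cx, cy = queue.popleft()
                xs.append(cx)
                ys.append(cy)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = cx + dx, cy + dy
                        if 0 <= nx < w and 0 <= ny < h and binary[ny][nx] == 1 and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes


def motion_area_mask(height: int, width: int, boxes: List[Box]) -> Binary:
    """Mask with 1 inside every circumscribed rectangle and 0 elsewhere."""
    mask = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                mask[y][x] = 1
    return mask
```

A rectangle is a coarse but cheap envelope: it also covers pixels between the object's parts, which is what lets the later multiplication recover foreground pixels that the contour alone would miss.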

According to this technical scheme, the motion area of the moving target is determined by the circumscribed rectangle of its contour. This effectively shields the moving target from interference by other pixels in the target image, lowers the probability of falsely extracting the foreground image, and improves the accuracy of foreground extraction.

In one embodiment, the step of extracting the foreground image of the moving object from the initial foreground image according to the moving region of the moving object in step S104 may include:

generating a foreground negative film of a first foreground image according to the motion area of the moving target; and multiplying the foreground negative film and the initial foreground image to obtain a foreground image of the moving target.

In this embodiment, the foreground negative film (a mask) is an image that records the motion area and reflects, in real time, the motion area of the moving object in the current frame. After the motion region of the first foreground image is obtained, the pixels of the first foreground image corresponding to the motion region are set to 1 to obtain the foreground negative film, and the foreground image of the moving target is then obtained by the following formula:

$$H_{\text{final\_foreground}} = H_{\text{temporal\_foreground\_mask}} \cdot H_{\text{initial\_motion\_foreground}}$$

where $H_{\text{temporal\_foreground\_mask}}$ represents the foreground negative film, $H_{\text{initial\_motion\_foreground}}$ represents the initial foreground image, $H_{\text{final\_foreground}}$ represents the foreground image of the moving target, and the product is taken pixel by pixel.
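When the foreground negative film holds 1 inside the motion area and 0 elsewhere (an assumption), the product above keeps initial-foreground values inside the motion area and zeroes everything outside it. A minimal sketch, assuming images stored as lists of rows (the function name is hypothetical):

```python
from typing import List

Mask = List[List[int]]


def apply_foreground_mask(mask: Mask, initial_foreground: Mask) -> Mask:
    """H_final = H_mask * H_initial, multiplied pixel by pixel."""
    if len(mask) != len(initial_foreground) or any(
        len(a) != len(b) for a, b in zip(mask, initial_foreground)
    ):
        raise ValueError("mask and initial foreground must have the same size")
    return [
        [m * v for m, v in zip(m_row, v_row)]
        for m_row, v_row in zip(mask, initial_foreground)
    ]
```

For example, masking `[[1, 1], [0, 1]]` with `[[1, 0], [1, 1]]` gives `[[1, 0], [0, 1]]`: the pixel at the top right lies outside the motion area and is removed.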

In the scheme of this embodiment, the foreground negative film of the first foreground image is generated and multiplied with the initial foreground image to obtain the foreground image of the moving target. This corrects the initial foreground image, reduces the probability of erroneous extraction results, and improves the accuracy and robustness of foreground extraction; the algorithm is also simple to design and easy to implement.

In an embodiment, an apparatus for extracting a foreground image is provided. Referring to fig. 2, which is a schematic structural diagram of the apparatus for extracting a foreground image in an embodiment, the apparatus may include:

a first foreground obtaining module 101, configured to obtain a first foreground image obtained by performing inter-frame difference on a target image; the target image is an image carrying a moving target;

a second foreground obtaining module 102, configured to obtain a second foreground image obtained by performing background difference on the target image;

a motion region determining module 103, configured to extract a contour of the moving object from the first foreground image, and determine a motion region of the moving object according to the contour;

a moving foreground extracting module 104, configured to perform an addition operation on the first foreground image and the second foreground image to obtain an initial foreground image, and extract a foreground image of the moving target from the initial foreground image according to a moving region of the moving target.

In this foreground image extraction device, the initial foreground image is obtained by adding the first foreground image and the second foreground image, which combines the common pixel characteristics of the two foreground images and helps avoid the influence of interference factors such as noise and shadow on foreground extraction. The first foreground image yields an accurate contour of the moving target that accurately reflects its motion area in each frame, and extracting the foreground image of the moving target from the initial foreground image using this motion area improves the accuracy of foreground extraction and, in turn, the accuracy of detecting and identifying the moving target.

The foreground image extraction device of the present invention corresponds one-to-one to the foreground image extraction method of the present invention. For specific limitations of the device, refer to the limitations of the method above; the technical features and beneficial effects described in the method embodiments all apply to the device embodiments. The modules in the foreground image extraction device may be implemented wholly or partly by software, hardware, or a combination thereof. They may be embedded in, or independent of, a processor of a computer device in hardware form, or stored in a memory of the computer device in software form, so that the processor can invoke and execute the operations corresponding to each module.

In one embodiment, a moving vehicle identification method is provided. Referring to fig. 3, which is a flowchart of the moving vehicle identification method in one embodiment, the method may include the following steps:

step S401, extracting a target image carrying a moving target from a traffic flow video image sequence; wherein the moving object comprises a moving vehicle.

The traffic flow video images are first low-pass filtered, which retains useful feature information such as contours, corners and textures. Adjusting the filter bandwidth yields smoother traffic flow video images, and setting the standard deviation parameter σ of the filter distribution balances noise suppression against blurring. The target image carrying the moving target is then extracted from the processed traffic flow video image sequence.
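The text names a low-pass filter with a distribution standard deviation σ but not a specific filter; a Gaussian filter is a natural reading and is assumed here. The sketch applies a separable Gaussian blur in pure Python with edge-clamped borders and a kernel radius of ceil(3σ), both of which are also assumptions; the function names are hypothetical.

```python
import math
from typing import List, Optional

Image = List[List[float]]


def gaussian_kernel(sigma: float, radius: Optional[int] = None) -> List[float]:
    """1-D Gaussian weights normalised to sum to 1 (default radius: ceil(3 * sigma))."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_blur(image: Image, sigma: float = 1.0) -> Image:
    """Separable Gaussian low-pass filter; border pixels are clamped (repeated)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(image), len(image[0])
    # Horizontal pass: weighted sum over neighbours in the same row.
    tmp = [
        [sum(k[i + r] * image[y][min(max(x + i, 0), w - 1)] for i in range(-r, r + 1))
         for x in range(w)]
        for y in range(h)
    ]
    # Vertical pass: weighted sum over neighbours in the same column.
    return [
        [sum(k[i + r] * tmp[min(max(y + i, 0), h - 1)][x] for i in range(-r, r + 1))
         for x in range(w)]
        for y in range(h)
    ]
```

A larger σ widens the kernel, suppressing more noise but also blurring contours, corners and textures more, which is the trade-off the text describes. A flat image passes through unchanged because the weights sum to 1.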

Step S402, acquiring a first foreground image obtained by performing inter-frame difference on the target image, and acquiring a second foreground image obtained by performing background difference on the target image.

The target image refers to an image carrying a moving target, such as a moving vehicle, in a traffic flow video image sequence. The first foreground image refers to a foreground image carrying the moving target in the target image; it can be obtained by processing the target image with the inter-frame difference method, which extracts the moving foreground image by performing a difference operation between the target image and its adjacent frame images.

The second foreground image is a foreground image carrying a moving target in a traffic flow video image sequence, the second foreground image can be obtained by processing the target image through a background difference method, and the background difference method is a method for extracting a moving image by performing difference operation on a current frame image and a background image.

For the background image, the first frame of the traffic flow video image sequence containing the target image may be set as the background image. Alternatively, background statistics may be collected over the pixels of the current frame and the background image set according to the statistical result: for example, if a pixel of the real-time foreground image of the current frame has been detected as foreground multiple times before the current moment, that pixel is set as a background pixel, and the background image is generated from such background pixels.
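The statistics-based alternative can be sketched with per-pixel counters. The text only says "multiple times", so the `min_count` parameter, the choice to copy the current frame's value into the background for such pixels, and all names are hypothetical.

```python
from typing import List

Image = List[List[float]]
Counts = List[List[int]]


def update_counts(counts: Counts, foreground: List[List[int]]) -> Counts:
    """Add 1 to the counter of every pixel labelled foreground (1) in this frame."""
    return [[c + f for c, f in zip(c_row, f_row)] for c_row, f_row in zip(counts, foreground)]


def refresh_background(background: Image, frame: Image, counts: Counts, min_count: int) -> Image:
    """Treat pixels detected as foreground at least `min_count` times as
    background pixels, taking their value from the current frame; keep the
    other background pixels unchanged."""
    return [
        [f if c >= min_count else b for b, f, c in zip(b_row, f_row, c_row)]
        for b_row, f_row, c_row in zip(background, frame, counts)
    ]
```

This lets an object that stops moving (for example, a parked vehicle) be absorbed into the background instead of being reported as foreground indefinitely.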

In this step, the target image and its adjacent frame image may be obtained, and the inter-frame difference method processing may be performed on the target image and its adjacent frame image to obtain a first foreground image, and the background image of the target image may be obtained, and the background difference method processing may be performed on the target image and its background image to obtain a second foreground image.

Step S403, extracting a contour of the moving object from the first foreground image, and determining a moving area of the moving object according to the contour.

The contour of the moving object refers to a contour line of the moving object, such as a moving vehicle, in the target image, and can be marked in the target image through pixel points with uniform pixel values, and can be used for marking contour information such as a pixel point range occupied by the moving object in the target image.

The contour of the moving target is extracted from the first foreground image, and the motion area of the moving target in the target image is determined from the contour; the motion area may be the circumscribed rectangular pixel frame of the contour. The motion area delimits the main range of motion of the moving target, effectively shields it from interference by other pixels in the target image, lowers the probability of false foreground extraction, and helps improve the accuracy of extracting the foreground image of the moving target.

Step S404, the first foreground image and the second foreground image are added to obtain an initial foreground image, and the foreground image of the moving target is extracted from the initial foreground image according to the moving area of the moving target.

In this step, the initial foreground image may be obtained by adding the pixel values of corresponding pixels in the first and second foreground images, which combines the common pixel characteristics of the two images and helps avoid the influence of interference factors such as noise and shadow on foreground extraction. Specifically, the initial foreground image compensates for the traditional inter-frame difference method, whose foreground accuracy drops when it misjudges a moving target, such as a moving vehicle, that suddenly appears in the target image. It also compensates for the traditional background difference method, which cannot effectively capture the feature data of the moving target when the dynamic scene changes, so that holes appear in the target and the accuracy of the foreground image is low. The foreground image of the moving target is then extracted from the initial foreground image using the motion area of the moving target. This prevents other pixels of the initial foreground image from interfering with the extraction, further reduces the probability of errors during foreground extraction, and improves the accuracy of extracting the foreground image of the moving target.
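Step S404 can be sketched as follows, assuming 0/1 foreground images and a 0/1 motion-area mask stored as lists of rows. The text specifies only an "addition operation"; the sketch clips the sum to 1 so that the initial foreground stays binary (equivalent to a logical OR), which is an assumption. Function names are hypothetical.

```python
from typing import List

Binary = List[List[int]]


def add_foregrounds(first: Binary, second: Binary) -> Binary:
    """Initial foreground: pixel-wise sum of the two foreground images, clipped to 1."""
    return [[min(a + b, 1) for a, b in zip(r1, r2)] for r1, r2 in zip(first, second)]


def extract_moving_foreground(first: Binary, second: Binary, motion_mask: Binary) -> Binary:
    """Keep initial-foreground pixels only inside the motion area (pixel-wise product)."""
    initial = add_foregrounds(first, second)
    return [[m * v for m, v in zip(m_row, v_row)] for m_row, v_row in zip(motion_mask, initial)]
```

With `first = [[1, 0, 1, 0]]`, `second = [[1, 1, 0, 0]]` and `motion_mask = [[1, 1, 0, 0]]`, the initial foreground is `[[1, 1, 1, 0]]` and the result is `[[1, 1, 0, 0]]`: the pixel found only by background difference survives because it lies inside the motion area, while the isolated pixel outside it is discarded.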

And step S405, identifying the moving vehicle in the traffic flow video image sequence according to the foreground image.

After the foreground images carrying moving targets are extracted from the traffic flow video image sequence, this step identifies the moving vehicles among all the moving targets in the foreground images.

In this moving vehicle identification method, the initial foreground image is obtained by adding the first and second foreground images of the target image in the traffic flow video image sequence, which combines the common pixel characteristics of the two foreground images and helps avoid the influence of interference factors such as noise and shadow on foreground extraction. The first foreground image yields an accurate contour of the moving target that accurately reflects its motion area in each frame, and extracting the foreground image of the moving target from the initial foreground image using this motion area improves the accuracy of foreground extraction. Using this foreground image to detect and identify moving vehicles in the traffic flow video image sequence therefore also improves the accuracy of moving vehicle detection and identification.

In one embodiment, the step of acquiring the first foreground image obtained by performing inter-frame difference on the target image in step S402 may include:

subtracting each of the two adjacent frames from the target image to obtain two inter-frame difference images; binarizing each difference image according to a set segmentation threshold to obtain two binary images; and multiplying the two binary images to obtain the first foreground image.
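The inter-frame difference above can be sketched as a three-frame difference. Images are assumed to be lists of rows of gray values, the fixed threshold is for illustration only (the adaptive threshold of steps S601 to S603 could be used instead), and the names are hypothetical.

```python
from typing import List

Image = List[List[float]]
Binary = List[List[int]]


def abs_diff(a: Image, b: Image) -> Image:
    """Pixel-wise absolute difference of two equal-sized gray images."""
    return [[abs(p - q) for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def binarize(diff: Image, threshold: float) -> Binary:
    """1 where the difference exceeds the threshold, else 0."""
    return [[1 if v > threshold else 0 for v in row] for row in diff]


def first_foreground(prev_frame: Image, frame: Image, next_frame: Image, threshold: float) -> Binary:
    """Difference the target frame with each neighbour, binarize both
    difference images, and multiply the two binary images pixel by pixel."""
    b1 = binarize(abs_diff(frame, prev_frame), threshold)
    b2 = binarize(abs_diff(next_frame, frame), threshold)
    return [[p * q for p, q in zip(r1, r2)] for r1, r2 in zip(b1, b2)]
```

For an object moving one pixel per frame, such as rows `[100, 0, 0]`, `[0, 100, 0]`, `[0, 0, 100]` with threshold 30, each single difference image marks two pixels, but the product keeps only the object's position in the target frame: `[[0, 1, 0]]`.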

In one embodiment, the step of acquiring the second foreground image obtained by performing background difference on the target image in step S402 may include:

calculating a background image of the target image according to an initial background image and a background update factor, and performing a difference operation on the background image and the target image to obtain the second foreground image.

In one embodiment, the step of calculating the background image of the target image according to the initial background image and the background update factor in the above embodiments may further include:

Step S501, setting the first frame of the traffic flow video image sequence as the initial background image.

In this step, the first frame can be extracted from the pre-stored traffic flow video image sequence and set as the initial background image, which further improves the efficiency of background extraction.

Step S502, obtaining a background updating factor; and determining the gray values of all pixel points of the initial background image and the target image.

This step mainly obtains a pre-stored background update factor and the gray values of all pixels of the initial background image and the target image.

Step S503, calculating the gray value of each pixel of the background image from the gray values of the corresponding pixels of the initial background image and the target image using the following formula:

$$H_{\text{background}}(x, y) = (1 - \alpha)\, H_{\text{background}}(x, y, t_0) + \alpha\, f(x, y)$$

where $H_{\text{background}}(x, y)$ represents the gray value of each pixel of the background image, $\alpha$ represents the background update factor (generally 0.005), $H_{\text{background}}(x, y, t_0)$ represents the gray value of each pixel of the initial background image, $f(x, y)$ represents the gray value of each pixel of the target image, and $x$ and $y$ are the abscissa and ordinate of the pixel.

Step S504, a background image is obtained according to the gray value of each pixel point of the background image.

In this step, the background image of the target image is obtained according to the gray value of each pixel of the background image obtained in step S503.

In a further embodiment, the step of performing a difference operation on the background image and the target image to obtain the second foreground image in the above embodiment may include:

subtracting the background image from the target image to obtain a background difference image; and binarizing the background difference image according to a set segmentation threshold to obtain the second foreground image.

In one embodiment, the method further comprises the following steps:

Step S601, obtaining the coordinates and gray values of all pixels in an image to be binarized;

in this step, the image to be binarized may include the two inter-frame difference images obtained by subtracting each of the two adjacent frames from the target image in the traffic flow video, and the background difference image obtained by subtracting the background image from the target image in the traffic flow video;

this step mainly obtains the coordinates and gray values of all pixels of the image to be binarized.

Step S602, calculating the mean and variance of the gray values of the pixels according to their coordinates and gray values;

Step S603, setting a segmentation threshold according to the mean and the variance.

In one embodiment, the step of determining the motion region of the moving object according to the contour in step S403 may include:

determining the coordinates of each pixel point of the moving target according to the contour; acquiring a circumscribed rectangular area of the moving target according to the coordinates of each pixel point of the moving target; and determining a motion area according to the circumscribed rectangular area.

In one embodiment, the step of extracting the foreground image of the moving object from the initial foreground image according to the moving region of the moving object in step S404 may include:

generating a foreground negative film of a first foreground image according to the motion area of the moving target; and multiplying the foreground negative film and the initial foreground image to obtain a foreground image of the moving target.

In one embodiment, a moving vehicle identification device is provided. Referring to fig. 4, which is a schematic structural diagram of the moving vehicle identification device in one embodiment, the device may include:

a target image extraction module 401, configured to extract a target image carrying a moving target from a traffic flow video image sequence, wherein the moving target includes a moving vehicle;

a foreground image obtaining module 402, configured to obtain a first foreground image obtained by performing inter-frame difference on the target image; acquiring a second foreground image obtained by carrying out background difference on the target image;

a moving region obtaining module 403, configured to extract a contour of the moving object from the first foreground image, and determine a moving region of the moving object according to the contour;

a foreground image extracting module 404, configured to perform an addition operation on the first foreground image and the second foreground image to obtain an initial foreground image, and extract a foreground image of the moving target from the initial foreground image according to a moving region of the moving target;

and a moving vehicle identification module 405, configured to identify a moving vehicle in the traffic flow video image sequence according to the foreground image.

In this moving vehicle identification device, the initial foreground image is obtained by adding the first and second foreground images of the target image in the traffic flow video image sequence, which combines the common pixel characteristics of the two foreground images and helps avoid the influence of interference factors such as noise and shadow on foreground extraction. The first foreground image yields an accurate contour of the moving target that accurately reflects its motion area in each frame, and extracting the foreground image of the moving target from the initial foreground image using this motion area improves the accuracy of foreground extraction. Using this foreground image to detect and identify moving vehicles in the traffic flow video image sequence therefore also improves the accuracy of moving vehicle detection and identification.

The moving vehicle identification device of the present invention corresponds to the moving vehicle identification method of the present invention. For specific limitations of the device, refer to the limitations of the method above; the technical features and beneficial effects described in the method embodiments all apply to the device embodiments. The modules in the moving vehicle identification device may be implemented wholly or partly by software, hardware, or a combination thereof. They may be embedded in, or independent of, a processor of a computer device in hardware form, or stored in a memory of the computer device in software form, so that the processor can invoke and execute the operations corresponding to each module.

In one embodiment, a computer device is provided. The computer device may be a terminal, and its internal structure may be as shown in fig. 5, which is an internal structure diagram of the computer device in one embodiment. The computer device includes a processor, a memory, a network interface, a display screen, and an input device connected by a system bus. The processor provides computing and control capabilities. The memory includes a non-volatile storage medium and an internal memory; the non-volatile storage medium stores an operating system and a computer program, and the internal memory provides an environment for running them. The network interface communicates with an external terminal through a network connection. When executed by the processor, the computer program implements the foreground image extraction method or the moving vehicle identification method. The display screen may be a liquid crystal display or an electronic ink display, and the input device may be a touch layer covering the display screen; a key, trackball or touchpad on the housing of the computer device; or an external keyboard, touchpad or mouse.

Those skilled in the art will appreciate that the configuration shown in fig. 5 is a block diagram of only a portion of the configuration associated with aspects of the present invention and is not intended to limit the computing devices to which aspects of the present invention may be applied, and that a particular computing device may include more or less components than those shown, or may combine certain components, or have a different arrangement of components.

In one embodiment, a computer device is provided, comprising a memory, a processor, and a computer program stored on the memory and executable on the processor, the processor implementing the following steps when executing the computer program:

acquiring a first foreground image obtained by performing interframe difference on a target image; acquiring a second foreground image obtained by carrying out background difference on a target image; extracting the contour of the moving target from the first foreground image, and determining the moving area of the moving target according to the contour; and performing addition operation on the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving area of the moving target.

In one embodiment, the processor, when executing the computer program, further performs the steps of:

subtracting each of the two adjacent frames from the target image to obtain two inter-frame difference images; binarizing each difference image according to a set segmentation threshold to obtain two binary images; and multiplying the two binary images to obtain the first foreground image.

In one embodiment, the processor, when executing the computer program, further performs the steps of:

calculating a background image of the target image according to an initial background image and a background update factor, and performing a difference operation on the background image and the target image to obtain the second foreground image.

In one embodiment, the processor, when executing the computer program, further performs the steps of:

setting the first frame of an image sequence as the initial background image; acquiring a background update factor; determining the gray values of all pixels of the initial background image and the target image; calculating the gray value of each pixel of the background image from the gray values of the corresponding pixels of the initial background image and the target image using $H_{\text{background}}(x, y) = (1 - \alpha)\, H_{\text{background}}(x, y, t_0) + \alpha\, f(x, y)$; and acquiring the background image according to the gray values of the pixels of the background image.

In one embodiment, the processor, when executing the computer program, further performs the steps of:

subtracting the background image from the target image to obtain a background difference image; and binarizing the background difference image according to a set segmentation threshold to obtain the second foreground image.

In one embodiment, the processor, when executing the computer program, further performs the steps of:

acquiring coordinates and gray values of all pixel points in an image to be binarized; calculating the mean value and the variance of the gray value of each pixel point according to the coordinates and the gray value of each pixel point; and setting a segmentation threshold according to the mean and the variance.

In one embodiment, the processor, when executing the computer program, further performs the steps of:

determining the coordinates of each pixel point of the moving target according to the contour; acquiring the circumscribed rectangle of the moving target from those coordinates; and determining the motion area according to the circumscribed rectangle.
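The circumscribed rectangle is simply the extent of the contour's pixel coordinates, as the minimal sketch below shows. In OpenCV, `cv2.findContours` followed by `cv2.boundingRect` yields the same rectangle expressed as (x, y, width, height). The NumPy version avoids that dependency. The function name and the inclusive (x_min, y_min, x_max, y_max) convention are assumptions.

```python
import numpy as np


def bounding_rectangle(contour_points) -> tuple:
    """Return (x_min, y_min, x_max, y_max) of the circumscribed rectangle.

    contour_points: iterable of (x, y) pixel coordinates on the target's contour.
    """
    pts = np.asarray(contour_points)
    if pts.ndim != 2 or pts.shape[1] != 2 or pts.shape[0] == 0:
        raise ValueError("expected a non-empty sequence of (x, y) points")
    x_min, y_min = pts.min(axis=0)
    x_max, y_max = pts.max(axis=0)
    return int(x_min), int(y_min), int(x_max), int(y_max)


contour = [(3, 5), (7, 2), (4, 9), (6, 6)]
motion_area = bounding_rectangle(contour)
```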

In one embodiment, the processor, when executing the computer program, further performs the steps of:

generating a foreground negative film (an image that records the motion area) of the first foreground image according to the motion area of the moving target; and multiplying the foreground negative film by the initial foreground image to obtain the foreground image of the moving target.
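A minimal sketch of the "foreground negative film" as a binary mask. It assumes the motion areas are inclusive rectangles of the form (x_min, y_min, x_max, y_max), with 1 inside every rectangle and 0 elsewhere. Multiplying by this mask keeps the initial foreground only inside the motion areas. Both function names are hypothetical.

```python
import numpy as np


def foreground_negative(shape, rectangles) -> np.ndarray:
    """Build the foreground negative: 1 inside every motion rectangle, 0 elsewhere.

    rectangles: iterable of inclusive (x_min, y_min, x_max, y_max) boxes.
    """
    mask = np.zeros(shape, dtype=np.uint8)
    for x_min, y_min, x_max, y_max in rectangles:
        # NumPy indexes rows by y and columns by x; +1 makes the bounds inclusive.
        mask[y_min:y_max + 1, x_min:x_max + 1] = 1
    return mask


def extract_moving_target(initial_foreground: np.ndarray, rectangles) -> np.ndarray:
    """Multiply the initial foreground by the negative so only motion areas survive."""
    return initial_foreground * foreground_negative(initial_foreground.shape, rectangles)


initial_foreground = np.ones((5, 5), dtype=np.uint8)
result = extract_moving_target(initial_foreground, [(1, 1, 2, 3)])
```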

In one embodiment, a computer device is provided, comprising a memory, a processor, and a computer program stored on the memory and executable on the processor, the processor implementing the following steps when executing the computer program:

extracting a target image carrying a moving target from a traffic flow video image sequence; acquiring a first foreground image obtained by performing inter-frame difference on the target image; acquiring a second foreground image obtained by performing background difference on the target image; extracting the contour of the moving target from the first foreground image, and determining a moving area of the moving target according to the contour; adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving area of the moving target; and identifying moving vehicles in the traffic flow video image sequence according to the foreground image.

The computer device of any of the above embodiments, by running the computer program on the processor, can improve the accuracy of foreground image extraction as well as the accuracy of moving target detection and identification.

It will be understood by those skilled in the art that all or part of the processes of the foreground image extraction method and the moving vehicle identification method for implementing the above embodiments may be implemented by a computer program, which may be stored in a non-volatile computer-readable storage medium, and when executed, may include the processes of the above embodiments of the methods. Any reference to memory, storage, databases, or other media used in embodiments provided herein may include non-volatile and/or volatile memory. Non-volatile memory can include read-only memory (ROM), Programmable ROM (PROM), Electrically Programmable ROM (EPROM), Electrically Erasable Programmable ROM (EEPROM), or flash memory. Volatile memory can include Random Access Memory (RAM) or external cache memory. By way of illustration and not limitation, RAM is available in a variety of forms such as Static RAM (SRAM), Dynamic RAM (DRAM), Synchronous DRAM (SDRAM), Double Data Rate SDRAM (DDRSDRAM), Enhanced SDRAM (ESDRAM), Synchronous Link DRAM (SLDRAM), Rambus Direct RAM (RDRAM), direct bus dynamic RAM (DRDRAM), and memory bus dynamic RAM (RDRAM).

Accordingly, in one embodiment, there is provided a computer readable storage medium having a computer program stored thereon, the computer program when executed by a processor implementing the steps of:

acquiring a first foreground image obtained by performing inter-frame difference on a target image; acquiring a second foreground image obtained by performing background difference on the target image; extracting the contour of the moving target from the first foreground image, and determining the moving area of the moving target according to the contour; and adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving area of the moving target.
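The overall combination can be illustrated with small binary arrays. The text does not state how the sum of the two binary foregrounds is normalized, so this sketch assumes the sum (0, 1 or 2) is clipped back to 0/1, which is equivalent to a pixel-wise logical OR. The toy arrays show one plausible case: the inter-frame result marks only part of the target, the background-difference result covers the whole target but also contains a stray noise pixel, and the motion-area mask removes that noise. The function name is hypothetical.

```python
import numpy as np


def fuse_foregrounds(first_fg: np.ndarray, second_fg: np.ndarray) -> np.ndarray:
    """Add two 0/1 foreground images and clip the sum (0, 1 or 2) back to 0/1."""
    total = first_fg.astype(np.uint16) + second_fg.astype(np.uint16)
    return np.clip(total, 0, 1).astype(np.uint8)


# First foreground (inter-frame difference): only part of the target is detected.
first_fg = np.zeros((6, 6), dtype=np.uint8)
first_fg[1, 1] = first_fg[3, 3] = 1

# Second foreground (background difference): the full target plus a noise pixel.
second_fg = np.zeros((6, 6), dtype=np.uint8)
second_fg[1:4, 1:4] = 1
second_fg[5, 5] = 1

# Foreground negative: the bounding rectangle of the first foreground's pixels.
negative = np.zeros((6, 6), dtype=np.uint8)
negative[1:4, 1:4] = 1

initial_fg = fuse_foregrounds(first_fg, second_fg)
target_fg = initial_fg * negative
```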

In one embodiment, the computer program when executed by the processor further performs the steps of:

taking the difference between the target image and each of its two adjacent frames to obtain two difference images; binarizing each difference image according to a set segmentation threshold to obtain two binary images; and multiplying the two binary images to obtain a first foreground image.

In one embodiment, the computer program when executed by the processor further performs the steps of:

calculating a background image of the target image according to an initial background image and a background update factor, and performing a difference operation on the background image and the target image to obtain a second foreground image.

In one embodiment, the computer program when executed by the processor further performs the steps of:

setting the first frame of an image sequence as the initial background image; acquiring a background update factor; determining the gray values of all pixel points of the initial background image and the target image; calculating the gray value of each pixel point of the background image from the gray values of the corresponding pixel points of the initial background image and the target image, using the background update formula set out in claim 4; and acquiring the background image according to the gray value of each pixel point of the background image.

In one embodiment, the computer program when executed by the processor further performs the steps of:

subtracting the background image from the target image to obtain a background difference image; and binarizing the background difference image according to a set segmentation threshold to obtain a second foreground image.

In one embodiment, the computer program when executed by the processor further performs the steps of:

acquiring the coordinates and gray values of all pixel points in the image to be binarized; calculating the mean and the variance of the gray values over all pixel points according to their coordinates and gray values; and setting the segmentation threshold according to the mean and the variance.

In one embodiment, the computer program when executed by the processor further performs the steps of:

determining the coordinates of each pixel point of the moving target according to the contour; acquiring the circumscribed rectangle of the moving target from those coordinates; and determining the motion area according to the circumscribed rectangle.

In one embodiment, the computer program when executed by the processor further performs the steps of:

generating a foreground negative film (an image that records the motion area) of the first foreground image according to the motion area of the moving target; and multiplying the foreground negative film by the initial foreground image to obtain the foreground image of the moving target.

In one embodiment, a computer-readable storage medium is provided, having a computer program stored thereon, which when executed by a processor, performs the steps of:

extracting a target image carrying a moving target from a traffic flow video image sequence; acquiring a first foreground image obtained by performing inter-frame difference on the target image; acquiring a second foreground image obtained by performing background difference on the target image; extracting the contour of the moving target from the first foreground image, and determining a moving area of the moving target according to the contour; adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving area of the moving target; and identifying moving vehicles in the traffic flow video image sequence according to the foreground image.

The computer-readable storage medium of any of the above embodiments, through the computer program stored on it, can improve the accuracy of foreground image extraction as well as the accuracy of moving target detection and identification.

The technical features of the embodiments described above may be combined arbitrarily. For brevity, not every possible combination of these technical features has been described; however, any such combination should be considered within the scope of this specification as long as it contains no contradiction.

The above embodiments express only several implementations of the present invention. Although they are described in specific detail, they shall not be construed as limiting the scope of the invention. It should be noted that a person skilled in the art can make several variations and improvements without departing from the inventive concept, and these all fall within the scope of protection of the present invention. Therefore, the scope of protection of this patent shall be subject to the appended claims.

Claims (10)

1. A foreground image extraction method is characterized by comprising the following steps:

acquiring a first foreground image obtained by performing interframe difference on a target image; the target image is an image carrying a moving target;

acquiring a second foreground image obtained by carrying out background difference on the target image;

extracting the contour of the moving target from the first foreground image, and determining a moving area of the moving target according to the contour;

adding the first foreground image and the second foreground image to obtain an initial foreground image, and extracting the foreground image of the moving target from the initial foreground image according to the moving area of the moving target, this extracting step comprising: determining, according to the motion area of the moving target, the pixel points in the first foreground image that correspond to the motion area, and generating a foreground negative film of the first foreground image from those pixel points, wherein the foreground negative film is an image that records the motion area; and multiplying the foreground negative film by the initial foreground image to obtain the foreground image of the moving target.

2. The foreground image extraction method of claim 1, wherein the step of obtaining a first foreground image obtained by performing inter-frame difference on the target image comprises:

taking the difference between the target image and each of its two adjacent frames to obtain two difference images;

binarizing each difference image according to a set segmentation threshold to obtain two binary images;

and multiplying the two binary images to obtain the first foreground image.

3. The foreground image extraction method of claim 1, wherein the step of obtaining a second foreground image by performing background difference on the target image comprises:

calculating a background image of the target image according to an initial background image and a background update factor, and performing a difference operation on the background image and the target image to obtain the second foreground image.

4. The foreground image extraction method of claim 3, wherein the step of calculating the background image of the target image according to the initial background image and the background update factor comprises:

setting a first frame image in an image sequence as the initial background image; wherein the sequence of images carries the target image;

acquiring the background updating factor; determining the gray values of all pixel points of the initial background image and the target image;

calculating the gray value of each pixel point of the background image by adopting the following formula according to the gray value of each pixel point:

wherein Hbackground(x, y) represents the gray value of each pixel point of the background image, α represents the background update factor, the initial-background term represents the gray value of each pixel point of the initial background image, f(x, y) represents the gray value of each pixel point of the target image, x represents the abscissa of the pixel point, and y represents the ordinate of the pixel point;

acquiring the background image according to the gray value of each pixel point of the background image;

the step of performing a difference operation on the background image and the target image to obtain a second foreground image comprises:

subtracting the background image from the target image to obtain a background difference image;

and binarizing the background difference image according to a set segmentation threshold to obtain the second foreground image.

5. The foreground image extraction method of any one of claims 1 to 4, wherein the step of determining the motion area of the moving target according to the contour comprises:

determining the coordinates of each pixel point of the moving target according to the contour;

acquiring a circumscribed rectangular area of the moving target according to the coordinates of each pixel point of the moving target;

and determining the motion area according to the circumscribed rectangular area.

6. The foreground image extraction method of claim 3 or 4, further comprising the steps of:

acquiring coordinates and gray values of all pixel points in an image to be binarized;

calculating the mean and the variance of the gray values over all pixel points according to the coordinates and gray value of each pixel point;

and setting a segmentation threshold according to the mean and the variance.

7. A moving vehicle identification method, comprising the steps of:

extracting a target image carrying a moving target from a traffic flow video image sequence, wherein the moving target comprises a moving vehicle;