
Featured Models

These models are deployed for industry-leading speed in production workloads. They are well suited to role-play, engaging discussion, and creative work, and they handle content without restrictions.

meta-llama/llama-3.1-8b-instruct

Meta's latest class of models, Llama 3.1, launched with a variety of sizes and configurations. The 8B instruct-tuned version is particularly fast and efficient. It has demonstrated strong performance in human evaluations, outperforming several leading closed-source models.

16384 context

input: $0.05/M tokens

output: $0.05/M tokens

NEW

meta-llama/llama-3.1-70b-instruct

Meta's latest class of models, Llama 3.1, has launched with a variety of sizes and configurations. The 70B instruct-tuned version is optimized for high-quality dialogue use cases. It has demonstrated strong performance in human evaluations compared to leading closed-source models.

32768 context

input: $0.34/M tokens

output: $0.39/M tokens

NEW

meta-llama/llama-3.1-405b-instruct

Meta's latest class of models, Llama 3.1, launched with a variety of sizes and configurations. This 405B instruct-tuned version is optimized for high-quality dialogue use cases. It has demonstrated strong performance compared to leading closed-source models, including GPT-4o and Claude 3.5 Sonnet, in evaluations.

32768 context

input: $2.75/M tokens

output: $2.75/M tokens

NEW

meta-llama/llama-3-8b-instruct

Meta's Llama 3 class of models launched in a variety of sizes and flavors. This 8B instruct-tuned version was optimized for high-quality dialogue use cases. It has demonstrated strong performance compared to leading closed-source models in human evaluations.

8192 context

input: $0.04/M tokens

output: $0.04/M tokens

meta-llama/llama-3-70b-instruct

Meta's Llama 3 class of models launched in a variety of sizes and flavors. This 70B instruct-tuned version was optimized for high-quality dialogue use cases. It has demonstrated strong performance compared to leading closed-source models in human evaluations.

8192 context

input: $0.51/M tokens

output: $0.74/M tokens

HOT

gryphe/mythomax-l2-13b

The idea behind this merge is that each layer is composed of several tensors, which are in turn responsible for specific functions. Using MythoLogic-L2's robust understanding as its input and Huginn's extensive writing capability as its output seems to have resulted in a model that excels at both, confirming my theory. (More details to be released at a later time.)
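The description only hints at the merge recipe, and the full details are not given here. To illustrate the general idea of favoring one parent model near the input and the other near the output, here is a minimal sketch of a layer-wise gradient merge in plain Python. It is not Gryphe's actual method: the linear weight schedule, the hypothetical `gradient_merge` function, and the toy layout (each layer as a mapping from tensor name to a flat list of floats) are all assumptions made for illustration.

```python
from typing import Dict, List

# Toy representation (assumption): tensor name -> flattened weights for one layer.
Layer = Dict[str, List[float]]


def gradient_merge(input_model: List[Layer], output_model: List[Layer]) -> List[Layer]:
    """Blend two same-shaped models layer by layer.

    The first layer is taken entirely from input_model, the last entirely from
    output_model, and the layers in between are linear interpolations whose
    output_model share rises evenly with depth.
    """
    if len(input_model) != len(output_model):
        raise ValueError("models must have the same number of layers")
    n = len(input_model)
    merged: List[Layer] = []
    for i, (layer_a, layer_b) in enumerate(zip(input_model, output_model)):
        t = i / (n - 1) if n > 1 else 0.0  # share of output_model in this layer
        merged_layer: Layer = {}
        for name, weights_a in layer_a.items():
            weights_b = layer_b[name]
            if len(weights_a) != len(weights_b):
                raise ValueError(f"tensor {name!r} has mismatched sizes")
            merged_layer[name] = [(1 - t) * a + t * b for a, b in zip(weights_a, weights_b)]
        merged.append(merged_layer)
    return merged
```

With three layers, the first layer copies the input-side model, the last copies the output-side model, and the middle layer is an even blend. A real merge would apply this to full checkpoints and might use a different schedule for each tensor type.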

4096 context

input: $0.09/M tokens

output: $0.09/M tokens

HOT

google/gemma-2-9b-it

Gemma 2 9B by Google is an advanced, open-source language model that sets a new standard for efficiency and performance in its size class.

Designed for a wide variety of tasks, it empowers developers and researchers to build innovative applications, while maintaining accessibility, safety, and cost-effectiveness.

8192 context

input: $0.08/M tokens

output: $0.08/M tokens

mistralai/mistral-nemo

A 12B-parameter model with a 128k-token context length, built by Mistral in collaboration with NVIDIA. The model is multilingual, supporting English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi. It supports function calling and is released under the Apache 2.0 license.

131072 context

input: $0.17/M tokens

output: $0.17/M tokens

microsoft/wizardlm-2-8x22b

WizardLM-2 8x22B is Microsoft AI's most advanced Wizard model. It demonstrates highly competitive performance compared to leading proprietary models, and it consistently outperforms all existing state-of-the-art open-source models.

65535 context

input: $0.62/M tokens

output: $0.62/M tokens

HOT

mistralai/mistral-7b-instruct

A high-performing, industry-standard 7.3B parameter model, with optimizations for speed and context length.

32768 context

input: $0.059/M tokens

output: $0.059/M tokens

microsoft/wizardlm-2-7b

WizardLM-2 7B is the smaller variant of Microsoft AI's latest Wizard model. It is the fastest of the family and achieves performance comparable to leading open-source models 10x its size. It is a fine-tune of Mistral 7B Instruct.

32768 context

input: $0.06/M tokens

output: $0.06/M tokens

openchat/openchat-7b

OpenChat 7B comes from OpenChat, a library of open-source language models fine-tuned with C-RLFT (Conditioned Reinforcement Learning Fine-Tuning), a strategy inspired by offline reinforcement learning. It was trained on mixed-quality data without preference labels.
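As we understand it, C-RLFT treats each data source as a coarse-grained reward class, conditions the model on that class through the prompt, and then trains with an ordinary supervised loss weighted by the class reward, which is why no preference labels are needed. The toy sketch below shows those two ingredients. The tag format produced by the hypothetical `condition_prompt` helper and the reward values in `SOURCE_REWARDS` are illustrative assumptions, not OpenChat's published settings.

```python
from typing import Dict, List, Tuple

# Illustrative coarse-grained rewards per data source (assumed values).
SOURCE_REWARDS: Dict[str, float] = {"expert": 1.0, "suboptimal": 0.1}


def condition_prompt(source: str, prompt: str) -> str:
    """Prefix a prompt with a hypothetical class tag naming its data source."""
    return f"<{source}> {prompt}"


def weighted_nll(batch: List[Tuple[str, float]],
                 rewards: Dict[str, float] = SOURCE_REWARDS) -> float:
    """Mean of per-example negative log-likelihoods, each scaled by its source's reward.

    batch holds (source, nll) pairs; lower-quality sources contribute less to the loss.
    """
    if not batch:
        raise ValueError("empty batch")
    return sum(rewards[source] * nll for source, nll in batch) / len(batch)
```

At inference time, the model would be prompted with the expert tag so that it imitates the higher-quality class.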

4096 context

input: $0.06/M tokens

output: $0.06/M tokens

nousresearch/hermes-2-pro-llama-3-8b

Hermes 2 Pro is an upgraded, retrained version of Nous Hermes 2, consisting of an updated and cleaned version of the OpenHermes 2.5 Dataset, as well as a newly introduced Function Calling and JSON Mode dataset developed in-house.
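When Hermes 2 Pro is used through a plain text endpoint, its function calls have to be extracted from the generated text. The sketch below assumes, based on our reading of the Nous Research model card, that the model wraps each call in `<tool_call>...</tool_call>` tags containing a JSON object with `name` and `arguments` fields; check the card before relying on this format. `parse_tool_calls` is a hypothetical helper name.

```python
import json
import re
from typing import Any, Dict, List

# Assumed output format: <tool_call>{"name": ..., "arguments": {...}}</tool_call>
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(.*?)\s*</tool_call>", re.DOTALL)


def parse_tool_calls(text: str) -> List[Dict[str, Any]]:
    """Return every tool call found in the model output, in order of appearance."""
    calls = []
    for payload in TOOL_CALL_RE.findall(text):
        data = json.loads(payload)  # raises json.JSONDecodeError on malformed output
        calls.append({"name": data["name"], "arguments": data.get("arguments", {})})
    return calls
```

Each parsed call can then be dispatched to your own function, with the result fed back to the model in a follow-up turn.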

8192 context

input: $0.14/M tokens

output: $0.14/M tokens

sao10k/l3-70b-euryale-v2.1

This uncensored Llama 3 model is a powerhouse of creativity, excelling in both roleplay and story writing. It offers a liberating roleplay experience free from restrictions, and it stands out for its immense creativity, with a vast array of unique ideas and plots that make it a treasure trove for anyone seeking originality. Its unrestricted nature lets the full breadth of imagination unfold during roleplay, much like an enhanced, big-brained version of Stheno. For creative minds seeking a boundless platform for imaginative expression, it is an ideal choice.

16000 context

input: $1.48/M tokens

output: $1.48/M tokens

cognitivecomputations/dolphin-mixtral-8x22b

Dolphin 2.9 is designed for instruction following, conversation, and coding. This model is a fine-tune of Mixtral 8x22B Instruct. It features a 64k context length and was fine-tuned with a 16k sequence length using ChatML templates. The model is uncensored and stripped of alignment and bias, so it requires an external alignment layer for ethical use.
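Because Dolphin 2.9 was fine-tuned on ChatML templates, raw prompts sent to it (for instance through the Completions endpoint) should follow that layout. The sketch below renders a list of role-tagged messages into ChatML using the standard `<|im_start|>` and `<|im_end|>` markers. `to_chatml` is a hypothetical helper, and whether Novita applies a chat template automatically for chat requests is not stated on this page.

```python
from typing import Dict, List


def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role/content messages as a ChatML prompt string."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Open an assistant turn so the model continues as the assistant.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)
```

Leaving `add_generation_prompt` on is usually what you want for inference; turning it off is useful when formatting complete conversations, for example as training data.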

16000 context

input: $0.9/M tokens

output: $0.9/M tokens

jondurbin/airoboros-l2-70b

This is a fine-tuned Llama 2 model designed to support longer and more detailed writing prompts, as well as next-chapter generation. It also includes an experimental role-playing instruction set with multi-round dialogues, character interactions, and varying numbers of participants.

4096 context

input: $0.5/M tokens

output: $0.5/M tokens

lzlv_70b

A Mythomax/MLewd_13B-style merge of selected 70B models: several Llama 2 70B fine-tunes combined for roleplaying and creative work. The goal was to create a model that combines creativity with intelligence for an enhanced experience.

4096 context

input: $0.58/M tokens

output: $0.78/M tokens

nousresearch/nous-hermes-llama2-13b

Nous-Hermes-Llama2-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. This model was fine-tuned by Nous Research, with Teknium and Emozilla leading the fine-tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors.

4096 context

input: $0.17/M tokens

output: $0.17/M tokens

teknium/openhermes-2.5-mistral-7b

OpenHermes 2.5 Mistral 7B is a state-of-the-art Mistral fine-tune and a continuation of the OpenHermes 2 model, trained on additional code datasets.

4096 context

input: $0.17/M tokens

output: $0.17/M tokens

sophosympatheia/midnight-rose-70b

A merge with a complex family tree, this model was crafted for roleplaying and storytelling. Midnight Rose is a successor to Rogue Rose and Aurora Nights and improves upon them both. It tends to produce lengthy output by default and is the best creative-writing merge sophosympatheia has produced so far.

4096 context

input: $0.8/M tokens

output: $0.8/M tokens

starcannon-v2

8192 context

input: $1.48/M tokens

output: $1.48/M tokens

qwen/qwen-2.5-72b-instruct

Qwen2.5 is the latest series of Qwen large language models. For Qwen2.5, we release a number of base language models and instruction-tuned language models ranging from 0.5 to 72 billion parameters.

32000 context

input: $0.38/M tokens

output: $0.4/M tokens

sao10k/l31-70b-euryale-v2.2

Euryale L3.1 70B v2.2 is a model focused on creative roleplay from Sao10k. It is the successor of Euryale L3 70B v2.1.

16000 context

input: $1.48/M tokens

output: $1.48/M tokens

qwen/qwen-2-7b-instruct

Qwen2 is the newest series in the Qwen large language model family. Qwen2 7B is a transformer-based model that demonstrates exceptional performance in language understanding, multilingual capabilities, programming, mathematics, and reasoning.

32768 context

input: $0.054/M tokens

output: $0.054/M tokens

qwen/qwen-2-72b-instruct

Qwen2 is the latest series in the Qwen family of large language models. Qwen2 72B is a transformer-based model that excels in language comprehension, multilingual capabilities, programming, mathematics, and reasoning.

32768 context

input: $0.34/M tokens

output: $0.39/M tokens
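Prices in the listing are quoted per million tokens, with input (prompt) and output (completion) tokens priced separately, so a request costs (input tokens × input price + output tokens × output price) / 1,000,000. The sketch below computes that and also checks a request against a model's context window, assuming the window must hold the prompt plus the requested completion. The `PRICING` table copies a few rows from the listing and will go stale if prices change; `ModelPricing`, `estimate_cost`, and `fits_context` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ModelPricing:
    context_tokens: int
    input_usd_per_m: float   # USD per million input tokens
    output_usd_per_m: float  # USD per million output tokens


# A few rows copied from the listing above; prices may change.
PRICING: Dict[str, ModelPricing] = {
    "meta-llama/llama-3.1-8b-instruct": ModelPricing(16384, 0.05, 0.05),
    "meta-llama/llama-3.1-70b-instruct": ModelPricing(32768, 0.34, 0.39),
    "gryphe/mythomax-l2-13b": ModelPricing(4096, 0.09, 0.09),
    "mistralai/mistral-nemo": ModelPricing(131072, 0.17, 0.17),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated request cost in USD."""
    p = PRICING[model]
    return (input_tokens * p.input_usd_per_m + output_tokens * p.output_usd_per_m) / 1_000_000


def fits_context(model: str, input_tokens: int, max_output_tokens: int) -> bool:
    """Assumes the context window must hold the prompt and the completion together."""
    return input_tokens + max_output_tokens <= PRICING[model].context_tokens
```

For example, a 2,000-token prompt with a 500-token reply on Llama 3.1 70B works out to (2,000 × 0.34 + 500 × 0.39) / 1,000,000 = $0.000875, while a 3,600-token prompt with `max_tokens=512` would not fit MythoMax's 4,096-token window.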

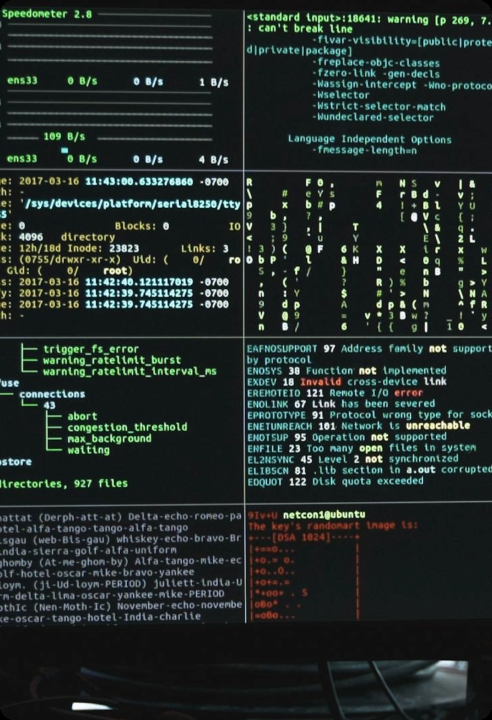

An example Completions API call looks like the following:

To learn more, you can view the full API reference documentation for the Chat API.


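```python
import os

from openai import OpenAI

client = OpenAI(
    base_url='https://api.novita.ai/v3/openai',
    # Get the Novita AI API Key by referring: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key
    # Reading it from NOVITA_API_KEY is an assumption; any way of supplying the key works.
    api_key=os.environ['NOVITA_API_KEY'],
)

completion_res = client.completions.create(
    model='gryphe/mythomax-l2-13b',  # model ID as listed above
    prompt='A chat between a curious user and an artificial intelligence assistant',
    stream=True,
    max_tokens=512,
)

# With stream=True the response is an iterator of chunks; print each text fragment as it arrives.
for chunk in completion_res:
    if chunk.choices:
        print(chunk.choices[0].text, end='', flush=True)
```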
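The Completions call sends a single raw prompt. For chat-style requests, the same OpenAI SDK provides `client.chat.completions.create`, which takes a list of role-tagged `messages` and streams `delta` fragments. The sketch below assumes Novita's OpenAI-compatible base URL also serves this chat endpoint; `make_client`, `stream_chat`, and the `NOVITA_API_KEY` variable name are our own illustrative choices.

```python
import os
from typing import Dict, Iterator, List


def make_client():
    """Build an OpenAI-compatible client pointed at Novita's endpoint."""
    from openai import OpenAI  # imported lazily so stream_chat can be tested without the SDK

    # NOVITA_API_KEY is an assumed environment variable name.
    return OpenAI(base_url="https://api.novita.ai/v3/openai", api_key=os.environ["NOVITA_API_KEY"])


def stream_chat(client, model: str, messages: List[Dict[str, str]],
                max_tokens: int = 512) -> Iterator[str]:
    """Yield text fragments from a streamed chat completion."""
    stream = client.chat.completions.create(
        model=model,
        messages=messages,
        stream=True,
        max_tokens=max_tokens,
    )
    for chunk in stream:
        # Some chunks (for example the final one) may carry no choices or no content.
        if chunk.choices and chunk.choices[0].delta.content:
            yield chunk.choices[0].delta.content
```

To use it, iterate over `stream_chat(make_client(), "gryphe/mythomax-l2-13b", [{"role": "user", "content": "Hello!"}])` and print each fragment as it arrives. Taking the client as a parameter keeps the streaming logic independent of how the client is configured.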

Novita LLM API Benefits

Deploy models to production faster and more cheaply, with greater reliability and scalability, on our serverless platform than by building your own infrastructure.

Focus your energy on growing your application and serving your customers, and entrust your LLM infrastructure to the Novita team.

Reliable

Cost-Effective

Auto Scaling

Ideal App Scenarios

AI Companion Chat

AI Novel Generation

AI Summarization

AI Code Generation

Q&A

How can the Novita LLM API accelerate generative AI app development for AI startups?

The Novita LLM API is designed to help AI startups build generative AI applications rapidly by providing fast access to top open-source models. Our model API is comprehensive, user-friendly, and scales automatically, and we are the most cost-effective provider of open-source model APIs, with 24/7 service capabilities.

How Can New Users Get Started with the Novita LLM API?

We offer new users free credits to explore our open-source large language models at no cost. For API access, please refer to our tutorial. If you need further assistance or have specific questions, join our Discord community or schedule a voice call with our team at https://meet.brevo.com/novita-ai/contact-sales.

How Does Novita AI Ensure Your Data Privacy and Security?

At Novita AI, we prioritize your data privacy and security. Rest assured, we do not store user prompt data for model training purposes. We respect and safeguard the confidentiality of your information.