Neural style transfer is an algorithm that combines the content of one image with the style of another using a convolutional neural network (CNN). Given a content image and a style image, the goal is to generate a target image that minimizes both its content difference from the content image and its style difference from the style image.

To minimize the content difference, we forward propagate the content image and the target image through a pretrained VGGNet and extract feature maps from multiple convolutional layers. The target image is then updated to minimize the mean-squared error between its feature maps and those of the content image.
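The content-loss update described above can be sketched roughly as follows. This is a minimal illustration, not the code in `main.py`: `TinyFeatureNet` is a hypothetical, randomly initialised stand-in for the pretrained VGGNet (so the sketch runs without downloading weights), and the image size, learning rate, and step count are arbitrary assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical stand-in for the pretrained VGGNet: a small random conv stack.
class TinyFeatureNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.layers = nn.ModuleList([
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
        ])

    def forward(self, x):
        # Collect the feature map produced after each convolutional layer.
        features = []
        for layer in self.layers:
            x = F.relu(layer(x))
            features.append(x)
        return features

net = TinyFeatureNet().eval()
for p in net.parameters():
    p.requires_grad_(False)  # the network is fixed; only the target image is optimised

content = torch.rand(1, 3, 32, 32)                      # placeholder content image
target = torch.rand(1, 3, 32, 32, requires_grad=True)   # image being generated
optimizer = torch.optim.Adam([target], lr=0.02)         # assumed optimiser settings

# The content features do not change, so compute them once.
with torch.no_grad():
    content_feats = net(content)

losses = []
for _ in range(20):
    # Sum of per-layer mean-squared errors between target and content feature maps.
    loss = sum(F.mse_loss(f_t, f_c) for f_t, f_c in zip(net(target), content_feats))
    optimizer.zero_grad()
    loss.backward()   # gradients flow to the target image's pixels
    optimizer.step()
    losses.append(loss.item())
```

Note that the optimiser is given the target image itself rather than the network's weights, which is what "the target image is updated" means in practice.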

As with the content loss, we forward propagate the style image and the target image through the VGGNet and extract convolutional feature maps. To generate a texture that matches the style of the style image, we update the target image by minimizing the mean-squared error between the Gram matrix of the style image's feature maps and the Gram matrix of the target image's feature maps, which matches the feature correlations of the two images. See here for how to compute the style loss.
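As a rough illustration of the Gram-matrix comparison, the sketch below computes a style loss for a single layer. The helper names `gram_matrix` and `style_loss` are hypothetical, the feature maps are random placeholders rather than VGGNet outputs, and dividing by `c * h * w` is one common normalisation assumed here, not something the text above specifies.

```python
import torch
import torch.nn.functional as F

def gram_matrix(feature_map):
    # feature_map: (channels, height, width) taken from one convolutional layer.
    c, h, w = feature_map.shape
    flat = feature_map.reshape(c, h * w)
    # Entry (i, j) is the inner product of channels i and j over all positions.
    return flat @ flat.t()

def style_loss(target_feat, style_feat):
    # MSE between the two Gram matrices, scaled by the feature-map size (assumed).
    c, h, w = target_feat.shape
    return F.mse_loss(gram_matrix(target_feat), gram_matrix(style_feat)) / (c * h * w)

torch.manual_seed(0)
style_feat = torch.rand(4, 5, 5)                        # placeholder style features
target_feat = torch.rand(4, 5, 5, requires_grad=True)   # placeholder target features

g = gram_matrix(style_feat)
loss = style_loss(target_feat, style_feat)
loss.backward()  # in the full method, this gradient reaches the target image

# Shuffling spatial positions leaves the Gram matrix unchanged.
perm = torch.randperm(25)
shuffled = style_feat.reshape(4, 25)[:, perm].reshape(4, 5, 5)
g_shuffled = gram_matrix(shuffled)
```

Because the Gram matrix sums over all spatial positions, it records which channels fire together but not where, which is why matching it reproduces texture rather than layout.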

$ pip install -r requirements.txt

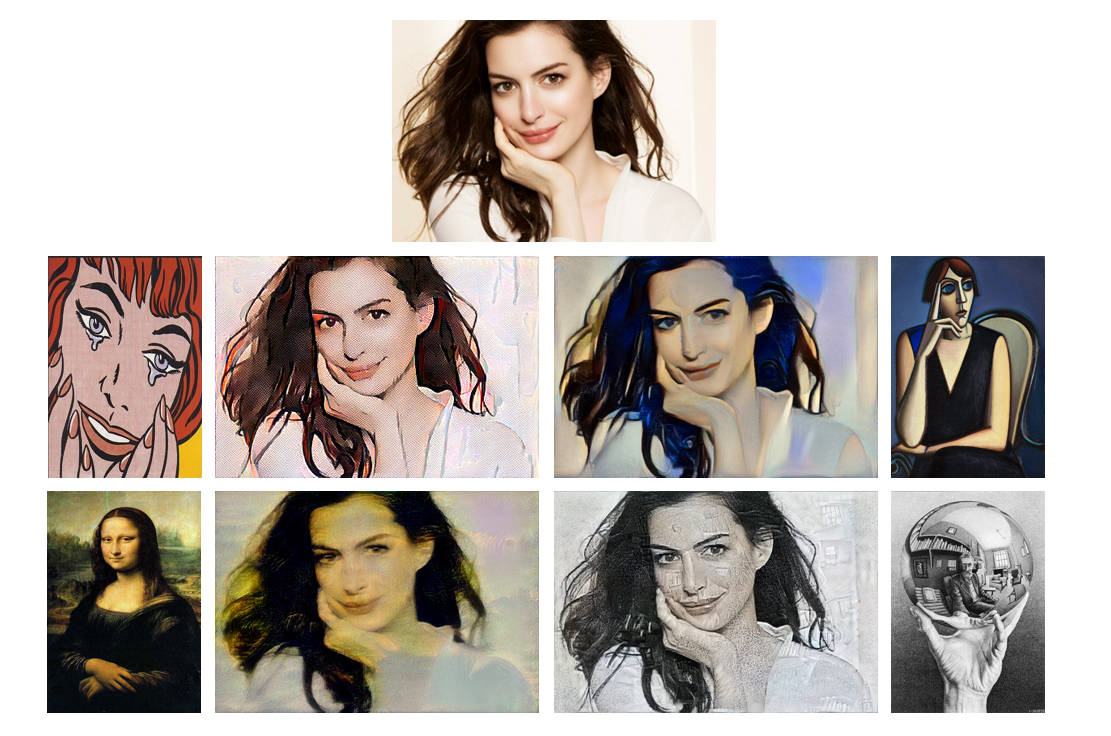

$ python main.py --content='png/content.png' --style='png/style.png'

The image above shows the result of applying various styles of artwork to Anne Hathaway's photograph.