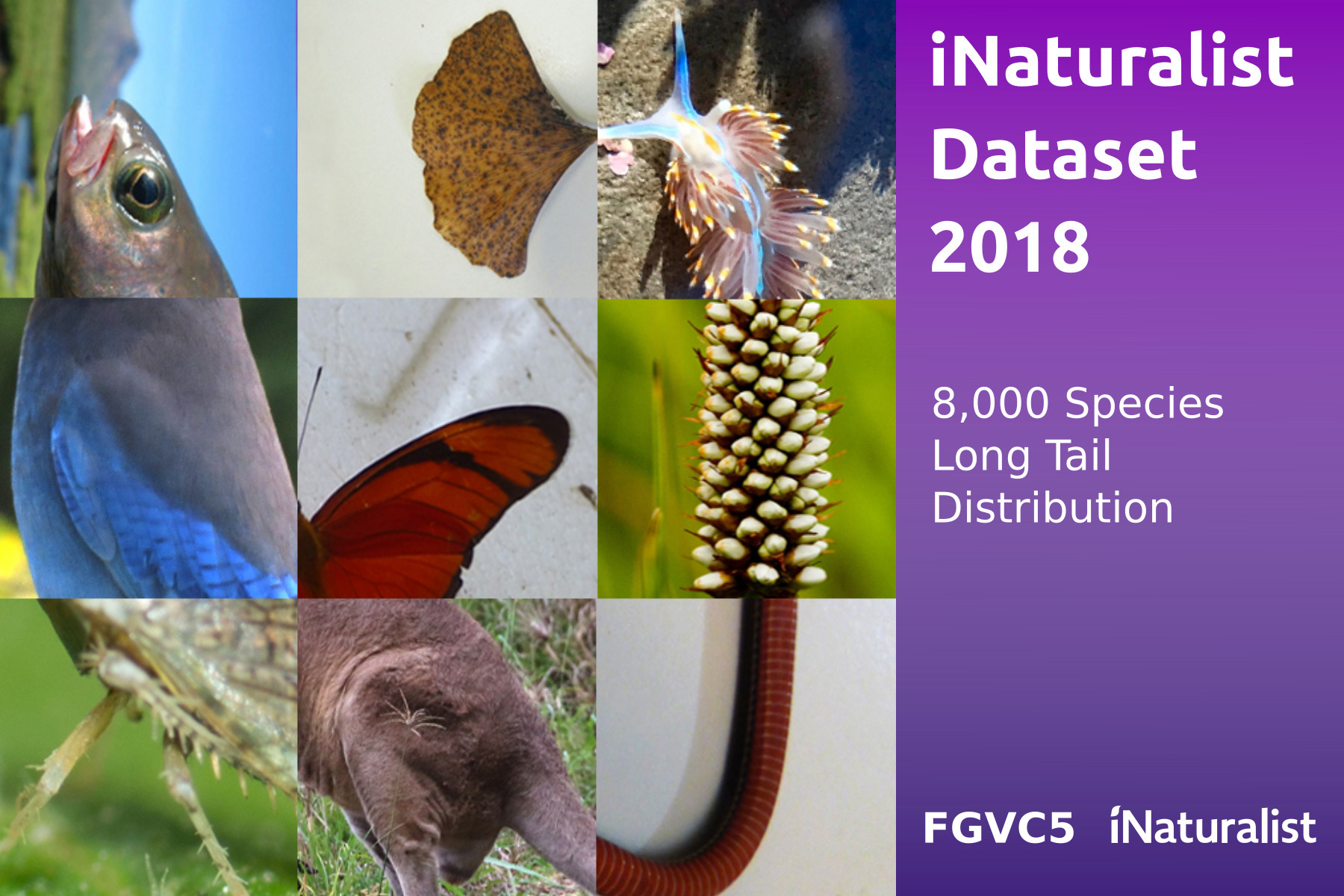

The 2018 competition is part of the FGVC^5 workshop at CVPR.

Please open an issue if you have questions or problems with the dataset.

February 15th, 2021:

- AWS Open Data download links now freely available. See the Data section below.

August 17th, 2020:

- AWS S3 download links were created due to problems with the original Google and Caltech links. The dataset files are in a "requester pays" bucket, so you will need to download them through an AWS API. See the Data section below.

August 16th, 2019:

- Additional metadata in the form of latitude, longitude, date, and user_id for each of the images in the train and validation sets can be found here.

June 23rd, 2018:

- Un-obfuscated names are released. Simply replace the `categories` list in the dataset files with the list found in this file.
- Thanks to everyone who attended and participated in the FGVC5 workshop! Slides from the competition overview and presentations from the top two teams can be found here.
- A video of the validation images can be viewed here.

April 10th, 2018:

- Bounding boxes have been added to the 2017 dataset, see here.

We are using Kaggle to host the leaderboard. Check out the competition page here.

| Event | Date |
|---|---|
| Data Released | February, 2018 |
| Submission Server Open | February, 2018 |
| Submission Deadline | June, 2018 |
| Winners Announced | June, 2018 |

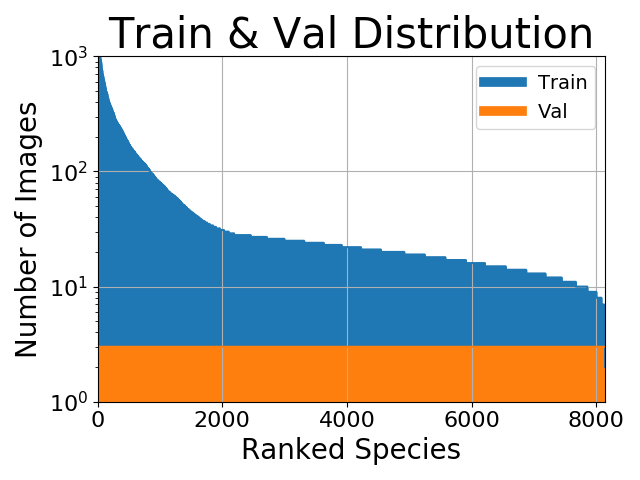

There are a total of 8,142 species in the dataset, with 437,513 training images, 24,426 validation images, and 149,394 test images.

| Super Category | Category Count | Train Images | Val Images |
|---|---|---|---|
| Plantae | 2,917 | 118,800 | 8,751 |
| Insecta | 2,031 | 87,192 | 6,093 |
| Aves | 1,258 | 143,950 | 3,774 |
| Actinopterygii | 369 | 7,835 | 1,107 |
| Fungi | 321 | 6,864 | 963 |
| Reptilia | 284 | 22,754 | 852 |
| Mollusca | 262 | 8,007 | 786 |
| Mammalia | 234 | 20,104 | 702 |
| Animalia | 178 | 5,966 | 534 |
| Amphibia | 144 | 11,156 | 432 |
| Arachnida | 114 | 4,037 | 342 |
| Chromista | 25 | 621 | 75 |
| Protozoa | 4 | 211 | 12 |
| Bacteria | 1 | 16 | 3 |
| Total | 8,142 | 437,513 | 24,426 |

Click on the image below to view a video showing images from the validation set.

We follow a similar metric to the classification tasks of the ILSVRC. For each image $i$, an algorithm will produce 3 labels $l_{i,j}$, $j = 1, 2, 3$. We allow 3 labels because some categories are disambiguated with additional data provided by the observer, such as latitude, longitude and date. For a small percentage of images, it might also be the case that multiple categories occur in an image (e.g. a photo of a bee on a flower). For this competition each image has one ground truth label $g_i$, and the error for that image is:

$$e_i = \min_{j} d(l_{i,j}, g_i)$$

where

$$d(x, y) = \begin{cases} 0 & \text{if } x = y \\ 1 & \text{otherwise} \end{cases}$$

The overall error score for an algorithm is the average error over all $N$ test images:

$$\text{score} = \frac{1}{N} \sum_{i} e_i$$
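As a rough illustration, the metric described above can be sketched in Python. The function names `image_error` and `top3_error` and the dictionary-based inputs are assumptions made for this example and are not part of any official evaluation code. Counting an image with no prediction as an error is also an assumption of the sketch.

```python
from typing import Mapping, Sequence


def image_error(predicted: Sequence[int], truth: int) -> int:
    """Return 0 if the ground truth label is among the predicted labels, else 1."""
    return 0 if truth in predicted else 1


def top3_error(predictions: Mapping[int, Sequence[int]],
               ground_truth: Mapping[int, int]) -> float:
    """Average the per-image error over every image in ground_truth.

    Assumption of this sketch: an image without a prediction counts as an error.
    """
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    total = 0
    for image_id, truth in ground_truth.items():
        # Only the first 3 guesses are considered for each image.
        labels = predictions.get(image_id, ())[:3]
        total += image_error(labels, truth)
    return total / len(ground_truth)


if __name__ == "__main__":
    # Made-up image ids and category ids, for illustration only.
    preds = {1: [5, 9, 2], 2: [7, 7, 7], 3: [4, 0, 1]}
    truth = {1: 2, 2: 3, 3: 4}
    print(top3_error(preds, truth))  # one of the three images is missed
```

Because the per-image error is either 0 or 1, the score is simply the fraction of images whose ground truth label is absent from the three guesses.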

The 2018 competition differs from the 2017 Competition in several ways:

- The 2017 dataset categories contained mostly species, but also had a few additional taxonomic ranks (e.g. genus, subspecies, and variety). The 2018 categories are all species.
- The 2018 dataset contains kingdom, phylum, class, order, family, and genus taxonomic information for all species. However, we have obfuscated all taxonomic names (including the species name) to hinder participants from performing web searches to collect additional data.
- The 2018 dataset contains some species and images that are found in the 2017 dataset. However, we will not provide a mapping between the two datasets.
- The 2018 competition allows for 3 guesses per test image, whereas the 2017 competition allowed 5.

Participants are welcome to use the iNaturalist 2017 Competition dataset as an additional data source. There is an overlap between the 2017 species and the 2018 species; however, we do not provide a mapping between the two datasets. Besides using the 2017 dataset, participants are restricted from collecting additional natural world data for the 2018 competition. Pretrained models may be used to construct the algorithms (e.g. ImageNet pretrained models, or iNaturalist 2017 pretrained models). Please specify any and all external data used for training when uploading results.

The general rule is that participants should only use the provided training and validation images (with the exception of the allowed pretrained models) to train a model to classify the test images. We do not want participants crawling the web in search of additional data for the target categories. Participants should be in the mindset that this is the only data available for these categories.

Participants are allowed to collect additional annotations (e.g. bounding boxes, keypoints) on the provided training and validation sets. Teams should specify that they collected additional annotations when submitting results.

We follow the annotation format of the COCO dataset and add additional fields. The annotations are stored in the JSON format and are organized as follows:

    {
      "info" : info,
      "images" : [image],
      "categories" : [category],
      "annotations" : [annotation],
      "licenses" : [license]
    }

    info{
      "year" : int,
      "version" : str,
      "description" : str,
      "contributor" : str,
      "url" : str,
      "date_created" : datetime
    }

    image{
      "id" : int,
      "width" : int,
      "height" : int,
      "file_name" : str,
      "license" : int,
      "rights_holder" : str
    }

    category{
      "id" : int,
      "name" : str,
      "supercategory" : str,
      "kingdom" : str,
      "phylum" : str,
      "class" : str,
      "order" : str,
      "family" : str,
      "genus" : str
    }

    annotation{
      "id" : int,
      "image_id" : int,
      "category_id" : int
    }

    license{
      "id" : int,
      "name" : str,
      "url" : str
    }
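A minimal sketch of reading a file in this format with Python's standard `json` module, and grouping image file names by category, might look like the following. The helper names `load_dataset` and `index_annotations` are hypothetical, and the example values are made up; only the field names come from the format described above.

```python
import json
from collections import defaultdict


def load_dataset(path: str) -> dict:
    """Read one annotation file (e.g. the extracted train2018.json) into a dict."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def index_annotations(dataset: dict) -> dict:
    """Map each category id to the file names of the images labelled with it."""
    file_names = {img["id"]: img["file_name"] for img in dataset["images"]}
    by_category = defaultdict(list)
    for ann in dataset["annotations"]:
        by_category[ann["category_id"]].append(file_names[ann["image_id"]])
    return dict(by_category)


if __name__ == "__main__":
    # Tiny hand-made stand-in for a real annotation file (illustrative values only).
    example = {
        "images": [
            {"id": 1, "file_name": "train_val2018/Aves/7/a.jpg"},
            {"id": 2, "file_name": "train_val2018/Aves/7/b.jpg"},
            {"id": 3, "file_name": "train_val2018/Fungi/4/c.jpg"},
        ],
        "annotations": [
            {"id": 10, "image_id": 1, "category_id": 7},
            {"id": 11, "image_id": 2, "category_id": 7},
            {"id": 12, "image_id": 3, "category_id": 4},
        ],
    }
    for category_id, names in index_annotations(example).items():
        print(category_id, names)
```

For a real file, `index_annotations(load_dataset("train2018.json"))` would be the equivalent call, assuming the archive has been extracted into the working directory.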

The submission format for the Kaggle competition is a csv file with the following format:

    id,predicted
    12345,0 78 23
    67890,83 13 42

The `id` column corresponds to the test image id. The `predicted` column corresponds to 3 category ids, separated by spaces. You should have one row for each test image. Please sort your predictions from most confident to least confident, from left to right; this allows us to study top-1, top-2, and top-3 accuracy.
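One possible way to produce such a file from per-image class scores is sketched below using Python's standard `csv` module. The helper names `top3` and `write_submission` are hypothetical, and the sketch assumes that position `k` in each score list holds the score for category id `k`; adapt that mapping to your own model output.

```python
import csv
import io
from typing import List, Mapping, Sequence, TextIO


def top3(scores: Sequence[float]) -> List[int]:
    """Return the indices of the 3 highest scores, most confident first."""
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    return order[:3]


def write_submission(scores_by_image: Mapping[int, Sequence[float]],
                     out: TextIO) -> None:
    """Write one `id,predicted` row per test image, ordered by image id."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["id", "predicted"])
    for image_id in sorted(scores_by_image):
        labels = top3(scores_by_image[image_id])
        # The 3 category ids are joined with spaces inside a single column.
        writer.writerow([image_id, " ".join(str(label) for label in labels)])


if __name__ == "__main__":
    # Made-up scores over 4 categories for two test images.
    scores = {
        12345: [0.10, 0.70, 0.05, 0.15],
        67890: [0.20, 0.50, 0.30, 0.00],
    }
    buffer = io.StringIO()
    write_submission(scores, buffer)
    print(buffer.getvalue(), end="")
```

Writing to a text stream rather than a fixed path keeps the sketch easy to test; passing an open file object (opened with `newline=""`) writes the same rows to disk.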

By downloading this dataset you agree to the following terms:

- You will abide by the iNaturalist Terms of Service

- You will use the data only for non-commercial research and educational purposes.

- You will NOT distribute the above images.

- The California Institute of Technology makes no representations or warranties regarding the data, including but not limited to warranties of non-infringement or fitness for a particular purpose.

- You accept full responsibility for your use of the data and shall defend and indemnify the California Institute of Technology, including its employees, officers and agents, against any and all claims arising from your use of the data, including but not limited to your use of any copies of copyrighted images that you may create from the data.

The dataset is freely available through the AWS Open Data Program. Download the dataset files here:

- All training and validation images [120GB]
  - s3://ml-inat-competition-datasets/2018/train_val2018.tar.gz
  - Running `md5sum train_val2018.tar.gz` should produce `b1c6952ce38f31868cc50ea72d066cc3`
  - Images have a max dimension of 800px and have been converted to JPEG format
  - Untarring the images creates a directory structure like `train_val2018/super category/category/image.jpg`. This may take a while.
- Training annotations [26MB]
  - s3://ml-inat-competition-datasets/2018/train2018.json.tar.gz
  - Running `md5sum train2018.json.tar.gz` should produce `bfa29d89d629cbf04d826a720c0a68b0`
- Validation annotations [26MB]
  - s3://ml-inat-competition-datasets/2018/val2018.json.tar.gz
  - Running `md5sum val2018.json.tar.gz` should produce `f2ed8bfe3e9901cdefceb4e53cd3775d`
- Location annotations (train and val) [11MB]
  - s3://ml-inat-competition-datasets/2018/inat2018_locations.zip
  - Running `md5sum inat2018_locations.zip` should produce `1704763abc47b75820aa5a3d93c6c0f3`
- Test images [40GB]
  - s3://ml-inat-competition-datasets/2018/test2018.tar.gz
  - Running `md5sum test2018.tar.gz` should produce `4b71d44d73e27475eefea68886c7d1b1`
  - Images have a max dimension of 800px and have been converted to JPEG format
  - Untarring the images creates a directory structure like `test2018/image.jpg`.
- Test image info [6.3MB]
  - s3://ml-inat-competition-datasets/2018/test2018.json.tar.gz
  - Running `md5sum test2018.json.tar.gz` should produce `fc717a7f53ac72ed8b250221a08a4502`
- Un-obfuscated category names
  - s3://ml-inat-competition-datasets/2018/categories.json.tar.gz

Example s3cmd usage for downloading the training and validation images:

    pip install s3cmd

    s3cmd \
      --access_key XXXXXXXXXXXXXXXXXXXX \
      --secret_key XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX \
      get s3://ml-inat-competition-datasets/2018/train_val2018.tar.gz .
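Once a file has been downloaded, its checksum can also be verified without the `md5sum` tool, for example on systems where it is unavailable. The following is a minimal Python sketch using the standard `hashlib` module. The helper names `md5_of_file` and `verify_download` are hypothetical, the expected values are copied from the list above, and the files are assumed to sit in the current working directory.

```python
import hashlib
import os

# Expected checksums, copied from the download list in this README.
EXPECTED_MD5 = {
    "train_val2018.tar.gz": "b1c6952ce38f31868cc50ea72d066cc3",
    "train2018.json.tar.gz": "bfa29d89d629cbf04d826a720c0a68b0",
    "val2018.json.tar.gz": "f2ed8bfe3e9901cdefceb4e53cd3775d",
    "inat2018_locations.zip": "1704763abc47b75820aa5a3d93c6c0f3",
    "test2018.tar.gz": "4b71d44d73e27475eefea68886c7d1b1",
    "test2018.json.tar.gz": "fc717a7f53ac72ed8b250221a08a4502",
}


def md5_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Compute the MD5 hex digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        # Chunked reads keep memory use flat even for the 120GB archive.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: str, expected_md5: str) -> bool:
    """Return True if the file's MD5 digest matches the expected value."""
    return md5_of_file(path) == expected_md5.lower()


if __name__ == "__main__":
    for name, expected in EXPECTED_MD5.items():
        if not os.path.exists(name):
            print(f"{name}: not found, skipping")
        elif verify_download(name, expected):
            print(f"{name}: OK")
        else:
            print(f"{name}: checksum mismatch, consider downloading again")
```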

A pretrained InceptionV3 model in PyTorch is available here.