by Kaisiyuan Wang, Hang Zhou, Qianyi Wu, Jiaxiang Tang, Zhiliang Xu, Borong Liang, Tianshu Hu, Errui Ding, Jingtuo Liu, Ziwei Liu, Jingdong Wang.

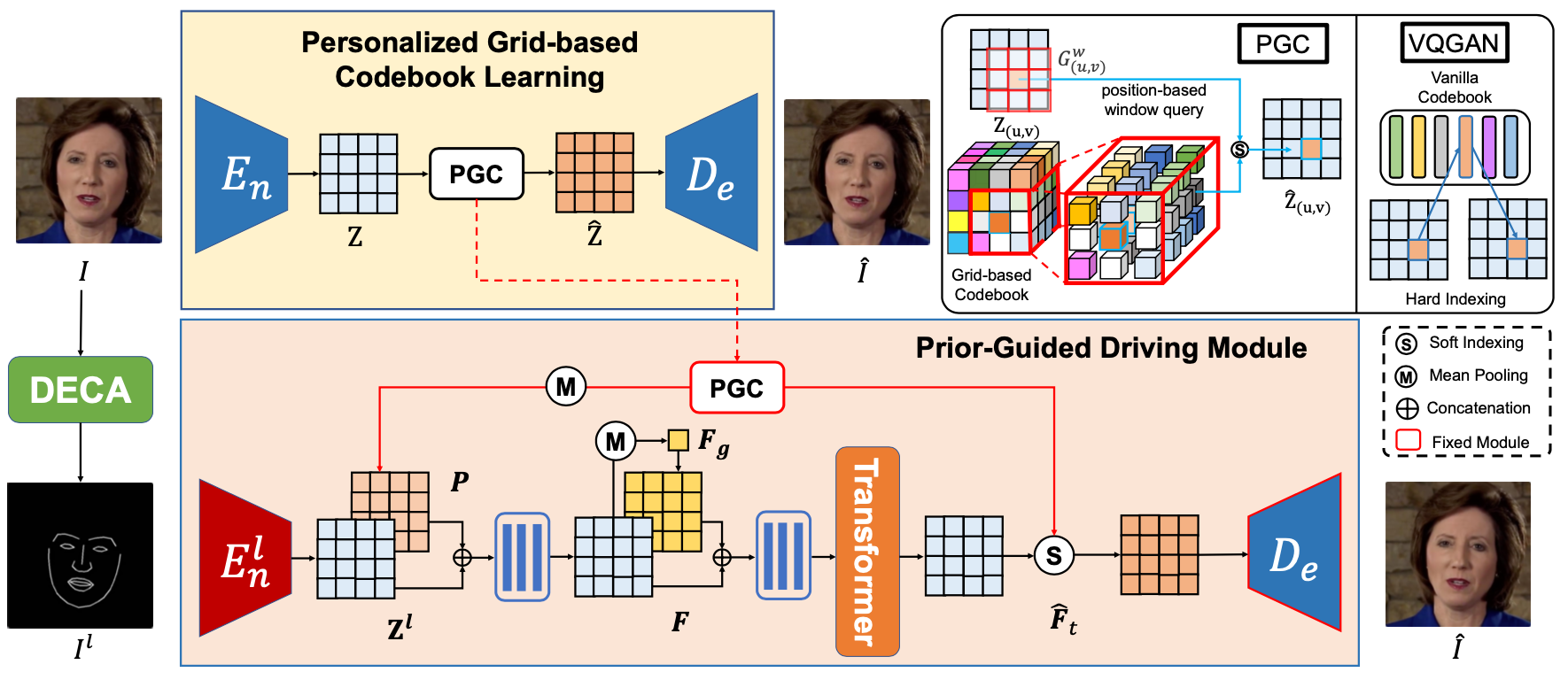

This repository is a PyTorch implementation of our SIGGRAPH 2023 paper "Efficient Video Portrait Reenactment via Grid-based Codebook".

This repository is based on PyTorch, so please follow the official instructions here to install it. The code is tested with PyTorch 1.7 and Python 3.6 on Ubuntu 16.04. In addition, our pre-processing depends on DECA (Detailed Expression Capture and Animation), a 3D reconstruction approach, to produce the 2D facial landmarks used as driving signals. Please follow here to install it.

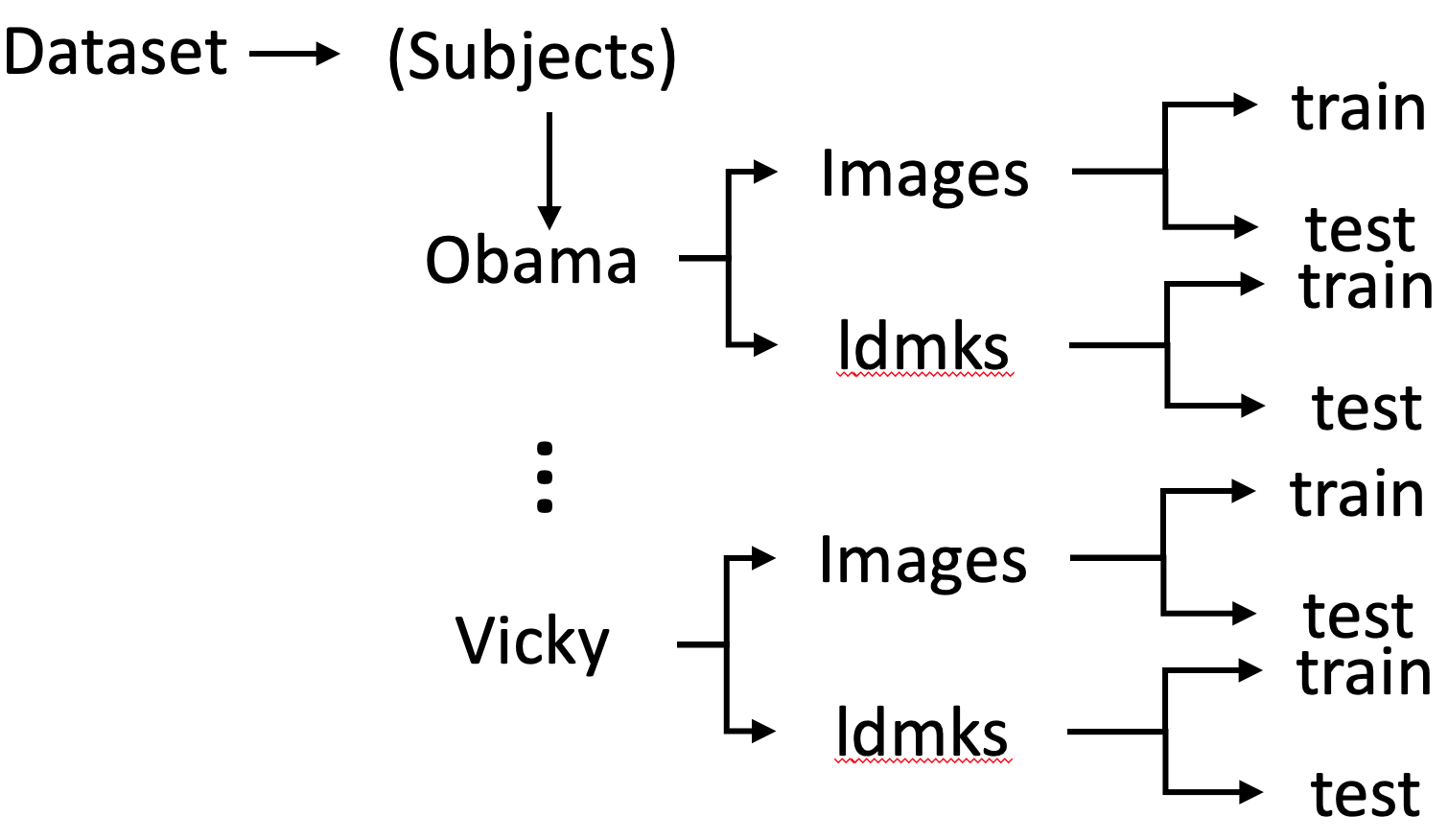

- First, find the pre-trained models of a portrait (e.g., Obama or Vicky) from here. Download that portrait's folder from both "Grid_Codebook" and "Reenactment", then put them in a newly created folder named "Pretrained". The pre-trained models of each portrait include one codebook model and two reenactment models.

- We provide a set of test examples of Obama in "Dataset/Obama/heatmap/test". You can set this directory as the data root in test_grid.py under "Reenactment".

- Run the following commands to synthesize a high-fidelity facial animation:

cd Reenactment

sh demo.sh

- The generated videos are stored in the "Results" folder.
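Before running the demo, it can help to confirm that the downloaded folders landed where the scripts expect them. The sketch below is a minimal check and is not part of this repository. The helper name `missing_paths` is hypothetical, and only the two paths named in this README are listed; anything deeper under "Pretrained" is left out because its exact layout is not documented here.

```python
from pathlib import Path


def missing_paths(repo_root, relative_paths):
    """Return the entries of relative_paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in relative_paths if not (root / p).exists()]


if __name__ == "__main__":
    # Assumed layout: only "Pretrained" and the Obama test heatmaps are
    # named in this README, so only those are checked.
    required = [
        "Pretrained",
        "Dataset/Obama/heatmap/test",
    ]
    for path in missing_paths(".", required):
        print("missing:", path)
```

Run it from the repository root. No output means both folders were found.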

Given a portrait video (with a static background), first extract the frames and crop the face region at a resolution of 512 x 512 by running

python pre_processing.py -i [video_path, xxx.mp4] -o [data_path, /%05d.jpg]

The cropping is sometimes imperfect, because some portraits have unusual facial structures. Feel free to adjust the ratio value in this script so that the entire head is included.
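To illustrate what a crop ratio typically controls, here is a minimal sketch of expanding a detected face box into a square crop that stays inside the frame. It is an assumption about the general technique, not the logic in pre_processing.py; the function name `square_crop_box`, its parameters, and the default ratio of 1.5 are all hypothetical.

```python
def square_crop_box(face_box, image_size, ratio=1.5):
    """Expand a face box into a square crop box clamped to the image.

    face_box:   (left, top, right, bottom) in pixels
    image_size: (width, height) in pixels
    ratio:      crop side relative to the longer side of face_box
    """
    left, top, right, bottom = face_box
    width, height = image_size
    # Side of the square, capped so it always fits inside the image.
    side = int(round(max(right - left, bottom - top) * ratio))
    side = min(side, width, height)
    # Center the square on the face, then shift it back inside the image.
    cx = (left + right) / 2.0
    cy = (top + bottom) / 2.0
    x0 = int(round(cx - side / 2.0))
    y0 = int(round(cy - side / 2.0))
    x0 = max(0, min(x0, width - side))
    y0 = max(0, min(y0, height - side))
    return x0, y0, x0 + side, y0 + side
```

A larger ratio keeps more of the hair, neck, and shoulders in view. The resulting box would then be resized to 512 x 512 with the image library of your choice.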

You can either select videos from HDTF or record a video yourself. The processed data should be stored in the "Dataset" folder, which is structured as below:

cd Grid_Codebook_Modeling

python main.py --base configs/obama_sliding_4.yaml -t True --gpu [gpu_id]

The training data folder can be set in "Grid_Codebook_Modeling/config/lrw_train.yaml".

cd Reenactment

python train_grid.py --data_root [your_dataset_path] -m [subject_name] --load_path [pretrained_model_path] --gpu [gpu_id]
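When training several subjects, it may be convenient to assemble this command from a script. The sketch below only builds the argument list using the flags shown in the command above. The helper name `train_grid_command` and the example values are hypothetical, and the paths are placeholders rather than files shipped with the repository.

```python
import shlex


def train_grid_command(data_root, subject, load_path, gpu_id):
    """Build the argument list for the train_grid.py call."""
    return [
        "python", "train_grid.py",
        "--data_root", str(data_root),
        "-m", str(subject),
        "--load_path", str(load_path),
        "--gpu", str(gpu_id),
    ]


if __name__ == "__main__":
    # Placeholder values: replace them with your own dataset and model paths.
    cmd = train_grid_command("../Dataset/Obama", "Obama", "../Pretrained/Obama", 0)
    # Print a shell-safe version of the command for inspection.
    print(" ".join(shlex.quote(part) for part in cmd))
    # To launch it instead: subprocess.run(cmd, cwd="Reenactment", check=True)
```

Passing the arguments as a list avoids quoting problems when a path contains spaces.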

During Stage 1 training, you will notice that our VPGC converges much faster than a VQGAN based on the vanilla codebook. A comparison of intermediate training results is shown below:

If you find this code useful for your research, please cite our paper:

@inproceedings{10.1145/3588432.3591509,
author = {Wang, Kaisiyuan and Zhou, Hang and Wu, Qianyi and Tang, Jiaxiang and Xu, Zhiliang and Liang, Borong and Hu, Tianshu and Ding, Errui and Liu, Jingtuo and Liu, Ziwei and Wang, Jingdong},
title = {Efficient Video Portrait Reenactment via Grid-Based Codebook},
year = {2023},
isbn = {9798400701597},
publisher = {Association for Computing Machinery},
address = {New York, NY, USA},
url = {https://doi.org/10.1145/3588432.3591509},
doi = {10.1145/3588432.3591509},
abstract = {While progress has been made in the field of portrait reenactment, the problem of how to efficiently produce high-fidelity and accurate videos remains. Recent studies build direct mappings between driving signals and their predictions, leading to failure cases when synthesizing background textures and detailed local motions. In this paper, we propose the Video Portrait via Grid-based Codebook (VPGC) framework, which achieves efficient and high-fidelity portrait modeling. Our key insight is to query driving signals in a position-aware textural codebook with an explicit grid structure. The grid-based codebook stores delicate textural information locally according to our observations on video portraits, which can be learned efficiently and precisely. We subsequently design a Prior-Guided Driving Module to predict reliable features from the driving signals, which can be later decoded back to high-quality video portraits by querying the codebook. Comprehensive experiments are conducted to validate the effectiveness of our approach.},
booktitle = {ACM SIGGRAPH 2023 Conference Proceedings},
articleno = {66},
numpages = {9},
keywords = {Facial Animation, Video Synthesis},
location = {Los Angeles, CA, USA},
series = {SIGGRAPH '23}
}

This repo is built on the VQGAN code, and we also borrow some architecture code from ViT and LSP.