[1] Mingxing Tan and Quoc V. Le. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. ICML 2019. Arxiv link: https://arxiv.org/abs/1905.11946.

Updates

- [Mar 2020] Released mobile/IoT device friendly EfficientNet-lite models: README.
- [Feb 2020] Released EfficientNet checkpoints trained with NoisyStudent: paper.
- [Nov 2019] Released EfficientNet checkpoints trained with AdvProp: paper.
- [Oct 2019] Released EfficientNet-CondConv models with conditionally parameterized convolutions: README, paper.
- [Oct 2019] Released EfficientNet models trained with RandAugment: paper.
- [Aug 2019] Released EfficientNet-EdgeTPU models: README and blog post.
- [Jul 2019] Released EfficientNet checkpoints trained with AutoAugment: paper, blog post.
- [May 2019] Released EfficientNets code and weights: blog post.

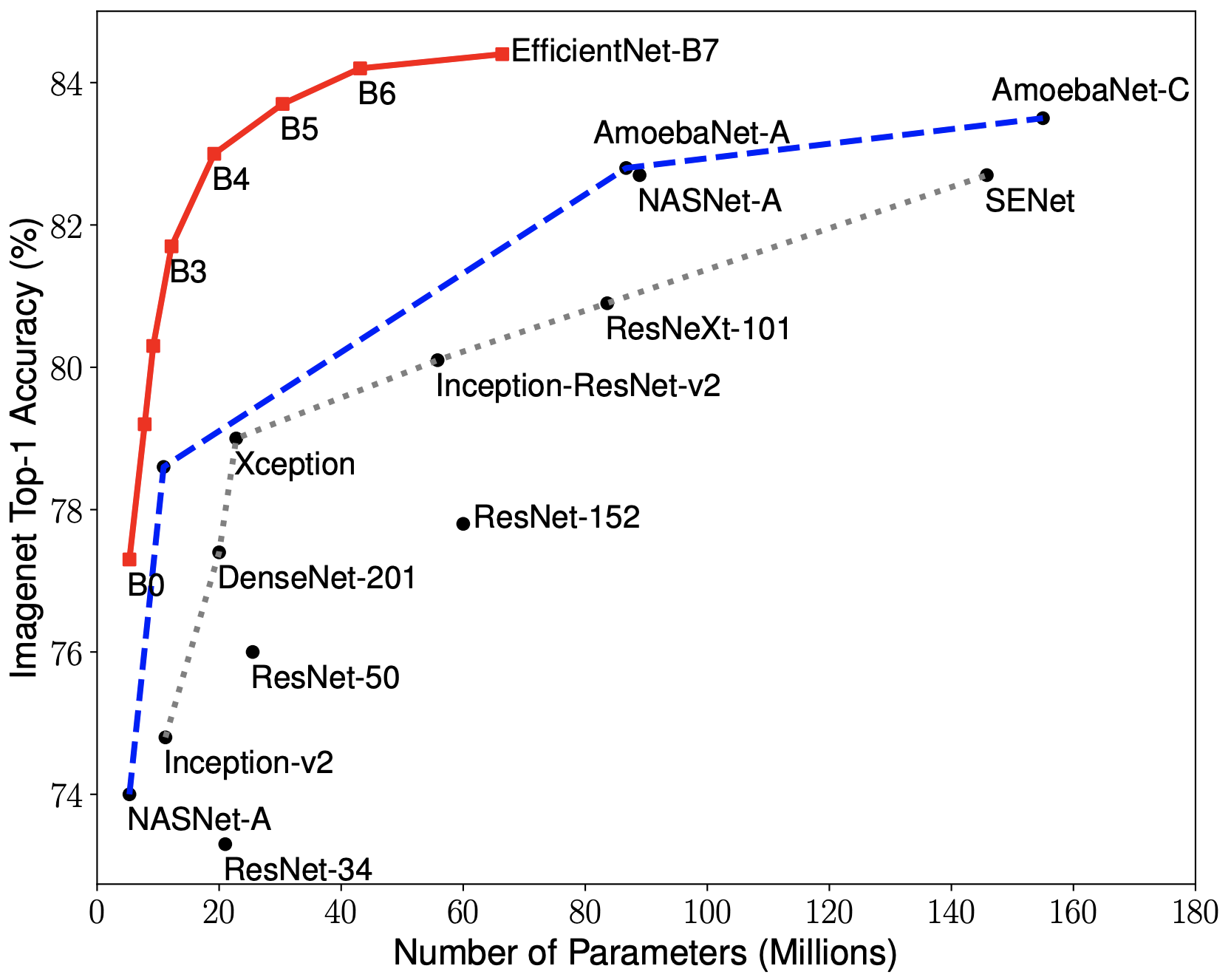

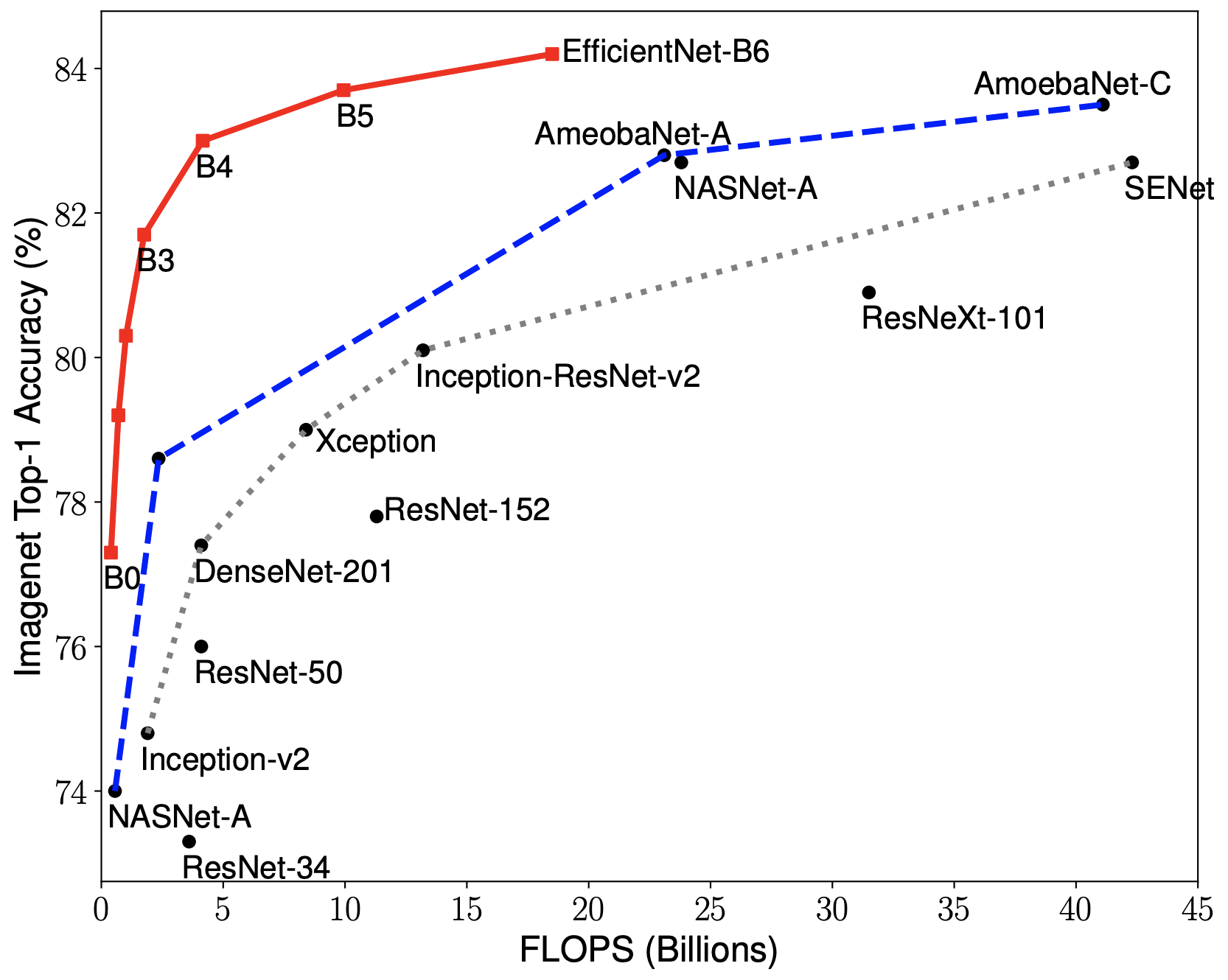

EfficientNets are a family of image classification models that achieve state-of-the-art accuracy while being an order of magnitude smaller and faster than previous models.

We develop EfficientNets based on AutoML and compound scaling. In particular, we first use the AutoML MNAS Mobile framework to develop a mobile-size baseline network, named EfficientNet-B0; then, we use the compound scaling method to scale up this baseline to obtain EfficientNet-B1 to B7.
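
As a rough illustration of compound scaling, the sketch below applies the base coefficients reported in the EfficientNet paper (depth 1.2, width 1.1, resolution 1.15) for a given compound coefficient `phi`. The function names are hypothetical and not part of this repository, and the released B1 to B7 models use rounded, per-model multipliers and resolutions, so the numbers here will not match them exactly.

```python
# Base coefficients reported in the EfficientNet paper for the B0 baseline.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution


def compound_scale(phi, base_resolution=224):
    """Return (depth_mult, width_mult, resolution) for compound coefficient phi."""
    depth_mult = ALPHA ** phi
    width_mult = BETA ** phi
    resolution = int(round(base_resolution * GAMMA ** phi))
    return depth_mult, width_mult, resolution


def flops_growth(phi):
    """Approximate FLOPS multiplier: (alpha * beta^2 * gamma^2) ** phi."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


if __name__ == "__main__":
    for phi in range(4):
        d, w, r = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution {r}, FLOPS x{flops_growth(phi):.2f}")
```

Because `ALPHA * BETA**2 * GAMMA**2` is close to 2, each increment of `phi` roughly doubles the FLOPS budget in this idealized formula.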


EfficientNets achieve state-of-the-art accuracy on ImageNet with an order of magnitude better efficiency:

- In the high-accuracy regime, our EfficientNet-B7 achieves state-of-the-art 84.4% top-1 / 97.1% top-5 accuracy on ImageNet with 66M parameters and 37B FLOPS, being 8.4x smaller and 6.1x faster on CPU inference than the previous best, GPipe.
- In the middle-accuracy regime, our EfficientNet-B1 is 7.6x smaller and 5.7x faster on CPU inference than ResNet-152, with similar ImageNet accuracy.
- Compared with the widely used ResNet-50, our EfficientNet-B4 improves the top-1 accuracy from 76.3% to 82.6% (+6.3%) under a similar FLOPS constraint.

To train EfficientNet on ImageNet, we hold out 25,022 randomly picked images (image filenames, or 20 out of 1024 total shards) as a 'minival' split, and conduct early stopping based on this 'minival' split. The final accuracy is reported on the original ImageNet validation set.
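
The shard-level variant of this hold-out can be sketched as follows. This is only an illustration: the seed, the helper names, and the shard naming pattern are assumptions, and it will not reproduce the exact 'minival' split used for the released checkpoints.

```python
import random

NUM_SHARDS = 1024   # total training shards, as stated above
NUM_MINIVAL = 20    # shards held out as 'minival'


def split_shards(seed=0):
    """Randomly hold out NUM_MINIVAL shard indices; return (train, minival)."""
    rng = random.Random(seed)
    minival = sorted(rng.sample(range(NUM_SHARDS), NUM_MINIVAL))
    held_out = set(minival)
    train = [i for i in range(NUM_SHARDS) if i not in held_out]
    return train, minival


def shard_name(index):
    # Hypothetical naming pattern; adjust to match your own TFRecord files.
    return f"train-{index:05d}-of-{NUM_SHARDS:05d}"


if __name__ == "__main__":
    train, minival = split_shards()
    print(len(train), "training shards;", len(minival), "minival shards")
    print("first minival shard:", shard_name(minival[0]))
```

Early stopping would then monitor accuracy on the held-out shards only, leaving the original validation set untouched for the final report.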

We provide the following EfficientNet checkpoints:

- With baseline ResNet preprocessing, we achieve similar results to the original ICML paper.

- With AutoAugment preprocessing, we achieve higher accuracy than the original ICML paper.

- With RandAugment preprocessing, accuracy is further improved.

- With AdvProp, state-of-the-art results (w/o extra data) are achieved.

- With NoisyStudent, state-of-the-art results (w/ extra JFT-300M unlabeled data) are achieved.

| | B0 | B1 | B2 | B3 | B4 | B5 | B6 | B7 | B8 | L2-475 | L2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline preprocessing | 76.7% (ckpt) | 78.7% (ckpt) | 79.8% (ckpt) | 81.1% (ckpt) | 82.5% (ckpt) | 83.1% (ckpt) | | | | | |
| AutoAugment (AA) | 77.1% (ckpt) | 79.1% (ckpt) | 80.1% (ckpt) | 81.6% (ckpt) | 82.9% (ckpt) | 83.6% (ckpt) | 84.0% (ckpt) | 84.3% (ckpt) | | | |
| RandAugment (RA) | | | | | | 83.7% (ckpt) | | 84.7% (ckpt) | | | |
| AdvProp + AA | 77.6% (ckpt) | 79.6% (ckpt) | 80.5% (ckpt) | 81.9% (ckpt) | 83.3% (ckpt) | 84.3% (ckpt) | 84.8% (ckpt) | 85.2% (ckpt) | 85.5% (ckpt) | | |
| NoisyStudent + RA | 78.8% (ckpt) | 81.5% (ckpt) | 82.4% (ckpt) | 84.1% (ckpt) | 85.3% (ckpt) | 86.1% (ckpt) | 86.4% (ckpt) | 86.9% (ckpt) | - | 88.2% (ckpt) | 88.4% (ckpt) |

*To train EfficientNets with AutoAugment (code), simply add option "--augment_name=autoaugment". If you use these checkpoints, you can cite this paper.

**To train EfficientNets with RandAugment (code), simply add option "--augment_name=randaugment". For EfficientNet-B5 also add "--randaug_num_layers=2 --randaug_magnitude=17". For EfficientNet-B7 or EfficientNet-B8 also add "--randaug_num_layers=2 --randaug_magnitude=28". If you use these checkpoints, you can cite this paper.

* AdvProp training code coming soon. Please set "--advprop_preprocessing=True" for using AdvProp checkpoints. If you use AdvProp checkpoints, you can cite this paper.

* NoisyStudent training code coming soon. L2-475 means the same L2 architecture with input image size 475 (Please set "--input_image_size=475" for using this checkpoint). If you use NoisyStudent checkpoints, you can cite this paper.

*Note that AdvProp and NoisyStudent performance is derived from baselines that do not use a held-out eval set. These numbers will be updated in the future.
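
The augmentation options quoted in the notes above can be collected per model with a small helper. The sketch below only assembles the flag strings mentioned in this README; the helper and table names are hypothetical and not part of the repository.

```python
# Extra RandAugment settings quoted in the notes above, keyed by model name.
RANDAUG_OVERRIDES = {
    "efficientnet-b5": {"randaug_num_layers": 2, "randaug_magnitude": 17},
    "efficientnet-b7": {"randaug_num_layers": 2, "randaug_magnitude": 28},
    "efficientnet-b8": {"randaug_num_layers": 2, "randaug_magnitude": 28},
}


def augment_flags(model_name, augment_name):
    """Return the command-line flags for the chosen augmentation policy."""
    flags = [f"--augment_name={augment_name}"]
    if augment_name == "randaugment":
        # Only B5, B7 and B8 have extra settings listed in this README.
        for key, value in RANDAUG_OVERRIDES.get(model_name, {}).items():
            flags.append(f"--{key}={value}")
    return flags


if __name__ == "__main__":
    print(" ".join(augment_flags("efficientnet-b5", "randaugment")))
    print(" ".join(augment_flags("efficientnet-b0", "autoaugment")))
```

The resulting strings can be appended to the training command shown later in this document.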

A quick way to use these checkpoints is to run:

    $ export MODEL=efficientnet-b0
    $ wget https://storage.googleapis.com/cloud-tpu-checkpoints/efficientnet/ckpts/${MODEL}.tar.gz
    $ tar xf ${MODEL}.tar.gz
    $ wget https://upload.wikimedia.org/wikipedia/commons/f/fe/Giant_Panda_in_Beijing_Zoo_1.JPG -O panda.jpg
    $ wget https://storage.googleapis.com/cloud-tpu-checkpoints/efficientnet/eval_data/labels_map.json
    $ python eval_ckpt_main.py --model_name=$MODEL --ckpt_dir=$MODEL --example_img=panda.jpg --labels_map_file=labels_map.json
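
To show how a labels map is typically used when reading classification output, here is a minimal sketch. It assumes `labels_map.json` is a JSON object mapping class-index strings to human-readable label names, and that you already have a list of per-class probabilities; both helper functions are hypothetical and are not taken from `eval_ckpt_main.py`.

```python
import json


def load_labels(path):
    """Load a JSON object mapping class-index strings to label text."""
    with open(path) as f:
        raw = json.load(f)
    return {int(k): v for k, v in raw.items()}


def top_k(probs, labels, k=5):
    """Return the k most probable (label, probability) pairs, best first."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(labels[i], probs[i]) for i in ranked[:k]]


if __name__ == "__main__":
    # Toy example with three made-up classes instead of the real label file.
    toy_labels = {0: "cat", 1: "giant panda", 2: "dog"}
    for label, prob in top_k([0.05, 0.90, 0.05], toy_labels, k=2):
        print(f"{label}: {prob:.2f}")
```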

Please refer to the following colab for more instructions on how to obtain and use those checkpoints.

eval_ckpt_example.ipynb: A colab example to load EfficientNet pretrained checkpoints files and use the restored model to classify images.

    import efficientnet_builder
    features, endpoints = efficientnet_builder.build_model_base(images, 'efficientnet-b0')

- Use `features` for classification finetuning.
- Use `endpoints['reduction_i']` for detection/segmentation, as the last intermediate feature with reduction level `i`. For example, if the input image has resolution 224x224, then:
  - `endpoints['reduction_1']` has resolution 112x112
  - `endpoints['reduction_2']` has resolution 56x56
  - `endpoints['reduction_3']` has resolution 28x28
  - `endpoints['reduction_4']` has resolution 14x14
  - `endpoints['reduction_5']` has resolution 7x7
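
The resolutions listed above follow from halving the spatial size at each reduction level. The sketch below computes them for an arbitrary input size; the helper name is hypothetical, and rounding up at each level is an assumption (it mimics stride-2 layers with "same" padding) that only matters when the input size is not divisible by 32.

```python
def endpoint_resolutions(input_size, num_levels=5):
    """Map 'reduction_i' to its spatial size, halving (rounding up) per level."""
    sizes = {}
    size = input_size
    for level in range(1, num_levels + 1):
        size = -(-size // 2)  # ceiling division by 2
        sizes[f"reduction_{level}"] = size
    return sizes


if __name__ == "__main__":
    for name, size in endpoint_resolutions(224).items():
        print(f"endpoints['{name}'] has resolution {size}x{size}")
```

A detection or segmentation head can use such a table to check that the feature maps it consumes have the strides it expects.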

To train this model on Cloud TPU, you will need:

- A GCE VM instance with an associated Cloud TPU resource

- A GCS bucket to store your training checkpoints (the "model directory")

- TensorFlow version >= 1.13 installed on both the GCE VM and the Cloud TPU.

Then train the model:

    $ export PYTHONPATH="$PYTHONPATH:/path/to/models"
    $ python main.py --tpu=TPU_NAME --data_dir=DATA_DIR --model_dir=MODEL_DIR
    # TPU_NAME is the name of the TPU node, the same name that appears when you run gcloud compute tpus list, or ctpu ls.
    # MODEL_DIR is a GCS location (a URL starting with gs://) where both the GCE VM and the associated Cloud TPU have write access.
    # DATA_DIR is a GCS location to which both the GCE VM and the associated Cloud TPU have read access.

For more instructions, please refer to our tutorial: https://cloud.google.com/tpu/docs/tutorials/efficientnet