This repository is the official implementation of the paper "How Attentive are Graph Attention Networks?"
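The paper contrasts two attention scoring functions. GAT computes e(h_i, h_j) = LeakyReLU(aᵀ [W h_i ‖ W h_j]), while GATv2 computes e(h_i, h_j) = aᵀ LeakyReLU(W [h_i ‖ h_j]). In both cases the scores are normalized with a softmax over the neighbors j of node i. Below is a minimal pure-Python sketch of the two scorers. It is not the code from this repository, PyTorch Geometric, or DGL. The helper names (`gat_score`, `gatv2_score`, `attention`), the single attention head, the default LeakyReLU slope of 0.2, and the toy graph and weights are all illustrative assumptions.

```python
import math
from functools import partial
from typing import Callable, Dict, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def leaky_relu(x: float, negative_slope: float = 0.2) -> float:
    return x if x > 0 else negative_slope * x


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Dense matrix-vector product m @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def gat_score(h_i: Vector, h_j: Vector, W: Matrix, a: Vector) -> float:
    """GAT: e(h_i, h_j) = LeakyReLU(a^T [W h_i || W h_j]), with W of shape d' x d."""
    z = matvec(W, h_i) + matvec(W, h_j)  # list '+' concatenates: [W h_i || W h_j]
    return leaky_relu(sum(a_k * z_k for a_k, z_k in zip(a, z)))


def gatv2_score(h_i: Vector, h_j: Vector, W: Matrix, a: Vector) -> float:
    """GATv2: e(h_i, h_j) = a^T LeakyReLU(W [h_i || h_j]), with W of shape d' x 2d."""
    z = matvec(W, h_i + h_j)  # list '+' concatenates: [h_i || h_j]
    return sum(a_k * leaky_relu(z_k) for a_k, z_k in zip(a, z))


def attention(score: Callable[[Vector, Vector], float], h: List[Vector],
              neighbors: Dict[int, List[int]], i: int) -> Dict[int, float]:
    """alpha_ij: softmax of score(h_i, h_j) over the neighbors j of node i."""
    e = {j: score(h[i], h[j]) for j in neighbors[i]}
    top = max(e.values())  # shift by the max for numerical stability
    exps = {j: math.exp(v - top) for j, v in e.items()}
    total = sum(exps.values())
    return {j: x / total for j, x in exps.items()}


# Hypothetical toy graph with 1-d features: queries 0 and 1 both attend over keys 2 and 3.
h = [[1.0], [-3.0], [2.0], [-1.0]]
neighbors = {0: [2, 3], 1: [2, 3]}
gat = partial(gat_score, W=[[1.0]], a=[0.5, -1.0])
gatv2 = partial(gatv2_score, W=[[1.0, 1.0], [-1.0, -1.0]], a=[1.0, 1.0])

for name, fn in [("GAT", gat), ("GATv2", gatv2)]:
    for i in (0, 1):
        alpha = attention(fn, h, neighbors, i)
        print(name, "query", i, "-> top neighbor", max(alpha, key=alpha.get))
```

With these toy weights, GAT ranks neighbor 3 first for both queries. This happens because aᵀ [W h_i ‖ W h_j] splits into a term in h_i plus a term in h_j, and LeakyReLU is monotonic, so the ranking of keys cannot depend on the query. The paper calls this static attention. GATv2 applies the nonlinearity before multiplying by a, so node 0 prefers neighbor 2 while node 1 prefers neighbor 3.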

GATv2 is now available as part of the PyTorch Geometric library!

https://pytorch-geometric.readthedocs.io/en/latest/modules/nn.html#torch_geometric.nn.conv.GATv2Conv

An implementation is also included in the main directory of this repository.

GATv2 is now available as part of the DGL library!

https://docs.dgl.ai/en/latest/api/python/nn.pytorch.html#gatv2conv

An implementation is also included in this repository.

GATv2 is now available as part of Google's TensorFlow GNN library!

The code for reproducing the DictionaryLookup experiments can be found in the `dictionary_lookup` directory.

The rest of the code for reproducing the experiments in the paper will be made publicly available.

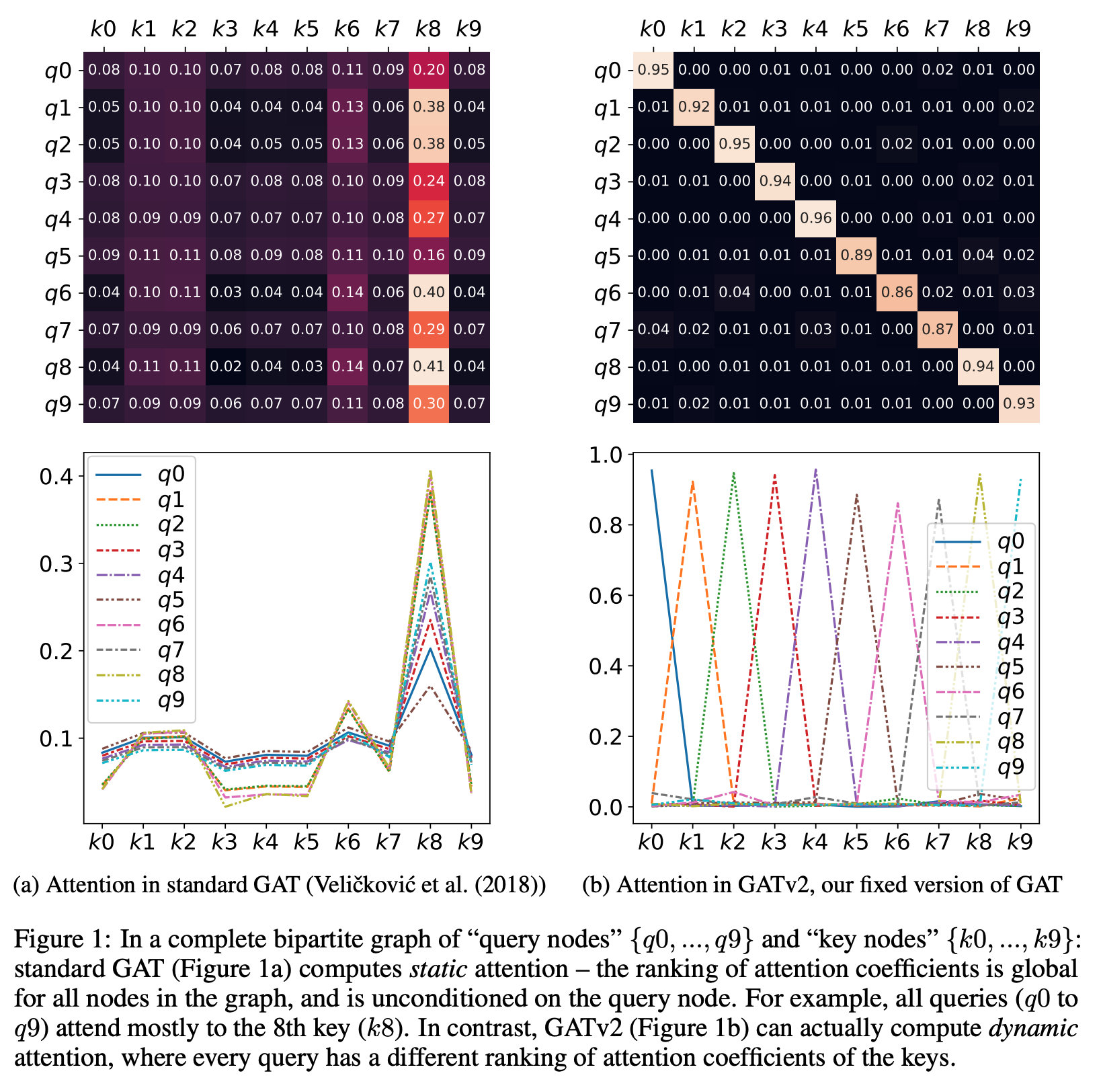

To cite "How Attentive are Graph Attention Networks?", please use:

@article{brody2021attentive,
  title={How Attentive are Graph Attention Networks?},
  author={Brody, Shaked and Alon, Uri and Yahav, Eran},
  journal={arXiv preprint arXiv:2105.14491},
  year={2021}
}