This repository extends the paper "Large Language Models Are Zero-Shot Time Series Forecasters" by Nate Gruver, Marc Finzi, Shikai Qiu and Andrew Gordon Wilson (NeurIPS 2023).

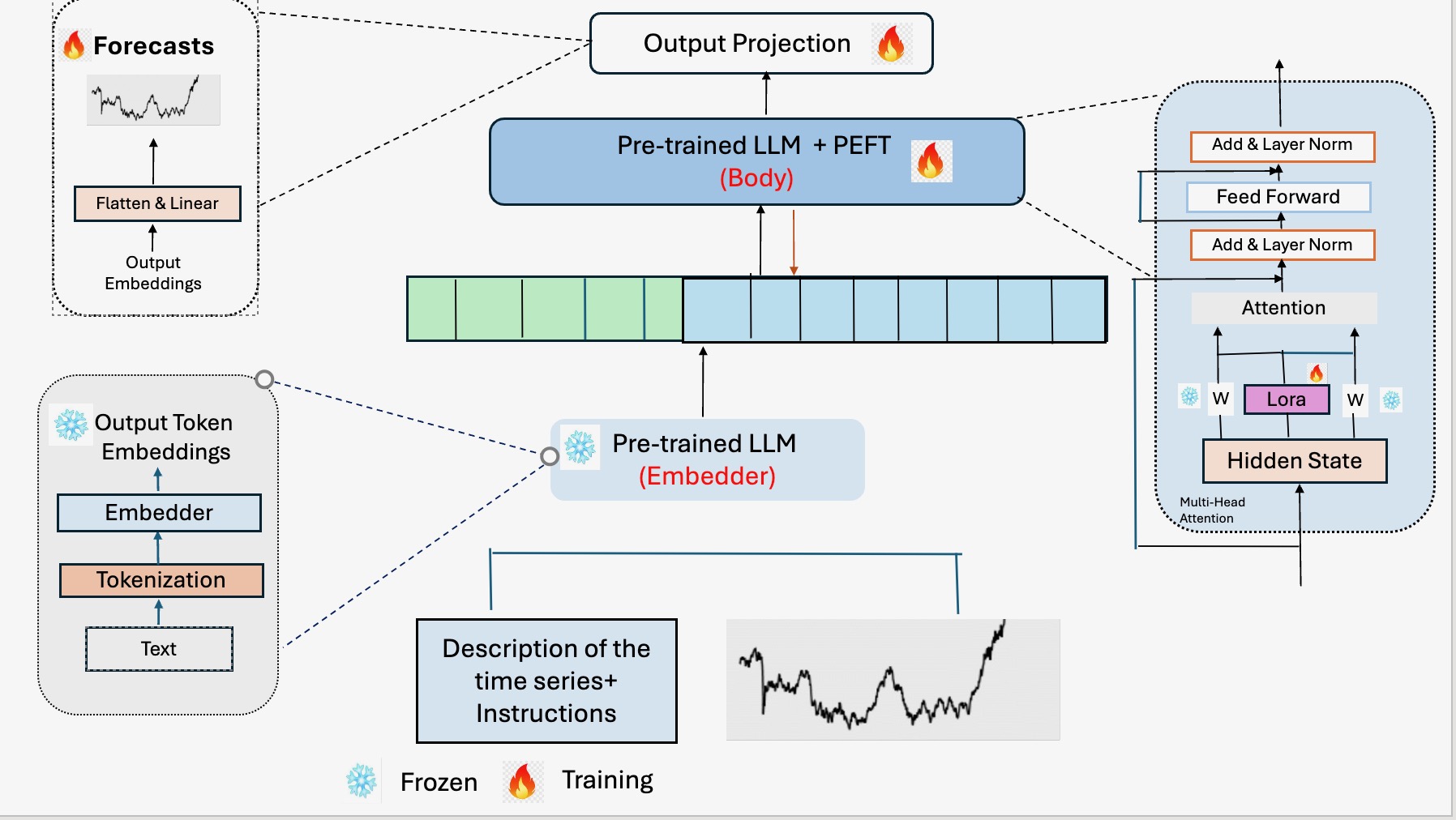

Schematic of our time series forecasting pipeline. It consists of three stages: (a) training the output projections on samples generated at time points from dynamical systems, (b) training the LLM adapter for fine-tuning, and (c) using the trained model for probabilistic time series forecasting.

- Raunak Dey

- Chandramani Lu

- Ravi Chepuri

- You (contributions welcome)! Please shoot me an email at Raunak Dey

Run the following command to install all dependencies in a conda environment named `llmtime`. If you don't have CUDA 11.8, change the CUDA version for torch.

    source install.sh

After installation, activate the environment with

    conda activate llmtime

If you prefer not to use conda, you can install the dependencies listed in `install.sh` manually.

Add your OpenAI API key to `~/.bashrc` with

    echo "export OPENAI_API_KEY=<your key>" >> ~/.bashrc

Finally, if you use a different OpenAI API base, set it in your `~/.bashrc` with

    echo "export OPENAI_API_BASE=<your base url>" >> ~/.bashrc
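To confirm that the variables are visible to Python, a small check along these lines may help. This is only a sketch: `read_openai_settings` is a hypothetical helper and not part of this repository, and the fallback base URL is the standard public OpenAI endpoint, assumed here as the default.

```python
import os

# Assumed default: the public OpenAI endpoint, used when no base is exported.
DEFAULT_API_BASE = "https://api.openai.com/v1"


def read_openai_settings(env=None):
    """Return the API key and base URL found in the environment.

    `env` defaults to os.environ; passing a plain dict makes the check testable.
    """
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it in ~/.bashrc and open a new shell."
        )
    return {
        "api_key": key,
        "api_base": env.get("OPENAI_API_BASE", DEFAULT_API_BASE),
    }
```

Changes to `~/.bashrc` only take effect in new shells, or after running `source ~/.bashrc` in the current one.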

Here are some tips for using LLMTime:

- Performance is not too sensitive to the data scaling hyperparameters `alpha`, `beta`, `basic`. A good default is `alpha=0.95, beta=0.3, basic=False`. For data exhibiting symmetry around 0 (e.g. a sine wave), we recommend setting `basic=True` to avoid shifting the data.

- The recently released `gpt-3.5-turbo-instruct` seems to require a lower temperature (e.g. 0.3) than other models, and tends to not outperform `text-davinci-003` from our limited experiments.

- Tuning hyperparameters based on validation likelihoods, as done by `get_autotuned_predictions_data`, will often yield better test likelihoods, but won't necessarily yield better samples.
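To make the role of the scaling hyperparameters more concrete, here is a minimal sketch of one plausible scaler. It assumes that `alpha` is a quantile used to set the scale, that `beta` controls how far the data is shifted below its minimum, and that `basic=True` skips the shift. `make_scaler` is a hypothetical name, and the repository's own implementation may differ in detail.

```python
import numpy as np


def make_scaler(history, alpha=0.95, beta=0.3, basic=False):
    """Return (transform, inverse) functions fitted to a 1-D history.

    Assumed behaviour, for illustration only:
      basic=True  -> divide by the alpha-quantile of |x| (no shift)
      basic=False -> shift by min - beta * range, then divide by the
                     alpha-quantile of the shifted data
    """
    history = np.asarray(history, dtype=float)
    if basic:
        offset = 0.0
        # Floor the scale so a near-constant series does not blow up.
        scale = max(np.quantile(np.abs(history), alpha), 1e-2)
    else:
        offset = history.min() - beta * (history.max() - history.min())
        scale = np.quantile(history - offset, alpha)
        if scale == 0:
            scale = 1.0  # constant series: leave the magnitude unchanged

    def transform(x):
        return (np.asarray(x, dtype=float) - offset) / scale

    def inverse(z):
        return np.asarray(z, dtype=float) * scale + offset

    return transform, inverse


# A sine wave is symmetric around 0, so basic=True keeps 0 mapped to 0.
series = np.sin(np.linspace(0, 4 * np.pi, 200))
to_scaled, from_scaled = make_scaler(series, basic=True)
scaled = to_scaled(series)
```

With `basic=False` the same series is shifted to be strictly positive before scaling, which is the shift the tip above suggests avoiding for data symmetric around 0.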

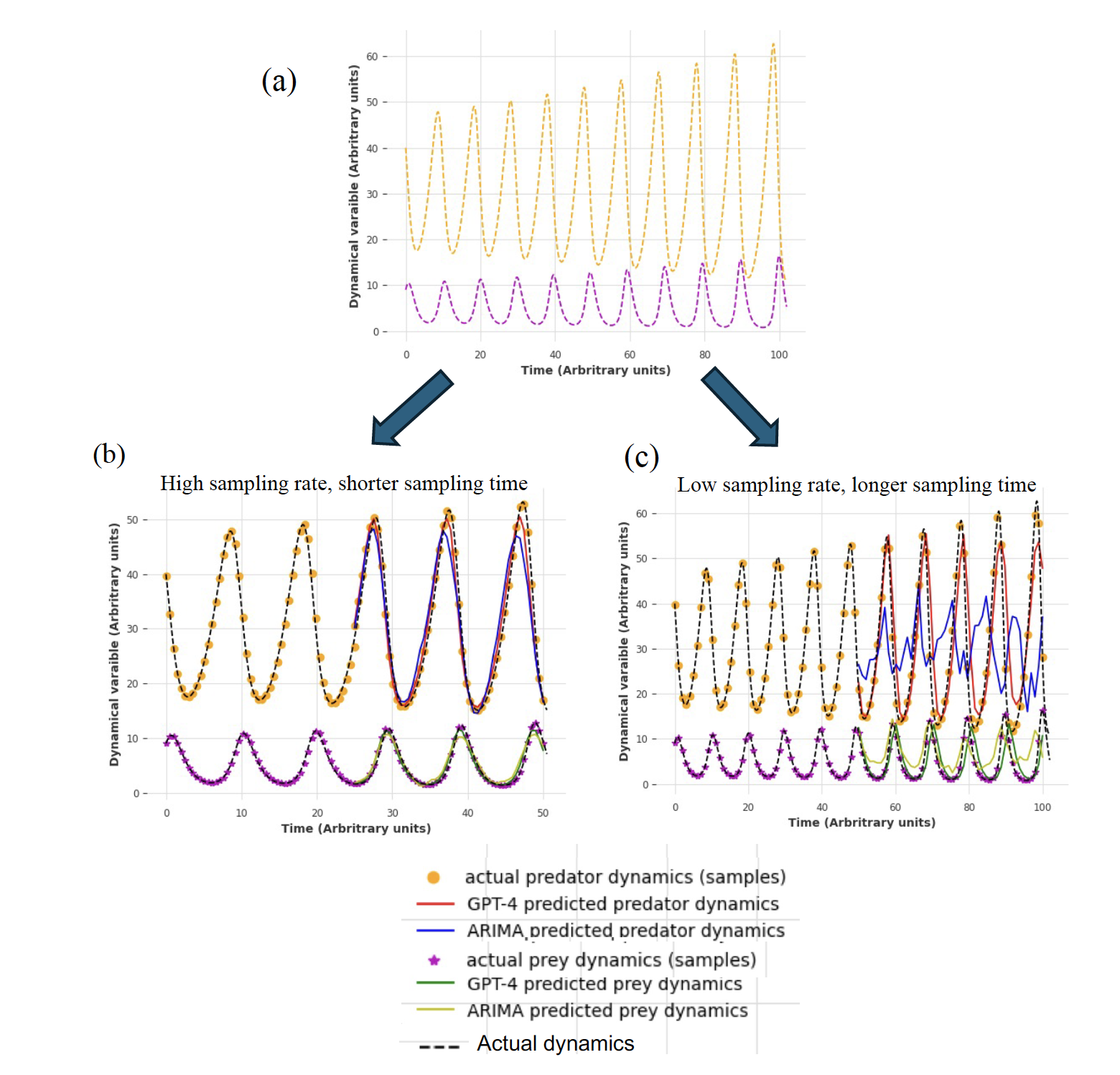

Lotka-Volterra: the characteristic timescale matters. We can aggregate tokens when a higher sampling rate is not necessary.

- Time series tokenized with an LLM can be used for probabilistic forecasting.

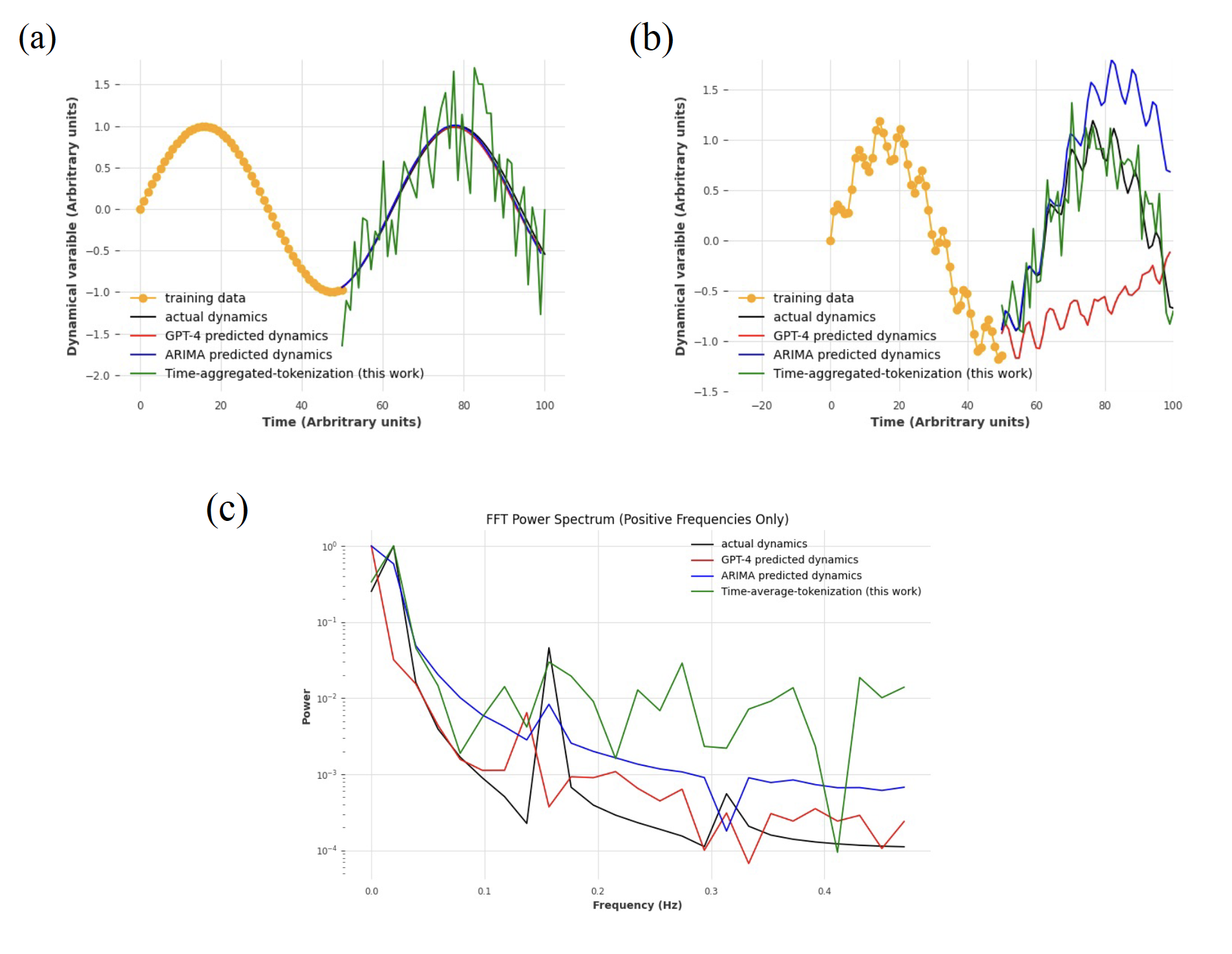
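As a rough illustration of how a numeric series can be turned into text and how sampled completions become a probabilistic forecast, the sketch below loosely follows the digit-by-digit encoding described in the cited paper (fixed precision, decimal point dropped, digits separated by spaces, time steps separated by commas). The function names are hypothetical, and the exact separators used by a given model in this repository may differ.

```python
import numpy as np


def serialize(values, precision=2):
    """Encode numbers as space-separated digits, e.g. 1.5 -> '1 5 0'."""
    chunks = []
    for v in values:
        scaled = int(round(abs(v) * 10**precision))
        digits = " ".join(str(scaled))
        chunks.append(("- " if v < 0 else "") + digits)
    return " , ".join(chunks)


def deserialize(text, precision=2):
    """Invert serialize(); incomplete trailing chunks are skipped."""
    values = []
    for chunk in text.split(","):
        chunk = chunk.replace(" ", "")
        if chunk in ("", "-"):
            continue
        values.append(int(chunk) / 10**precision)
    return values


def forecast_band(samples, lower=0.05, upper=0.95):
    """Summarise sampled forecasts (n_samples x horizon) by quantiles."""
    samples = np.asarray(samples, dtype=float)
    lo, median, hi = np.quantile(samples, [lower, 0.5, upper], axis=0)
    return lo, median, hi


encoded = serialize([0.12, 1.5, -0.3])
decoded = deserialize(encoded)
```

Each sampled completion would be decoded with `deserialize`, and stacking several of them gives the array that `forecast_band` summarises into a median and an interval.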

- For an oscillatory dynamical system, GPT-4 can miss the low-frequency component (which was less dominant in our experiment) and focus on the more dominant high-frequency component. With fine-tuned Llama, we can capture the low-frequency component while ignoring the high-frequency one, because our method aggregates within smaller segments.

- Due to the aggregation of textual information, our method requires less computing power and can be more effectively fine-tuned.

- GPT-4 is better at capturing long-term memory in the time series, with the caveat that it needs a large context window for time series with longer memory. In that case, our method can be more effective in capturing these long-term dependencies.

- When individual components of the multivariate time series are modelled independently, GPT-4 is better than standard statistical techniques, as they ignore the cross-correlation effects.

- We think it is more effective to sample at a higher rate over a shorter duration (keeping the number of training points constant), provided the governing dynamics remain unchanged. A sampling interval longer than the characteristic timescale of the dynamical process will lead to erroneous forecasts.
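The effect of sampling too coarsely can be seen on a toy example. The sketch below uses a pure sine wave as a stand-in for the dynamical systems discussed above (an assumption made purely for illustration) and keeps the number of points fixed while changing the sampling interval. The helper names are hypothetical.

```python
import numpy as np


def sample_sine(freq, dt, n):
    """Sample sin(2*pi*freq*t) at n points spaced dt apart."""
    t = np.arange(n) * dt
    return np.sin(2 * np.pi * freq * t)


def dominant_frequency(samples, dt):
    """Return the frequency of the largest peak in the one-sided spectrum."""
    samples = np.asarray(samples, dtype=float)
    spectrum = np.abs(np.fft.rfft(samples - samples.mean()))
    freqs = np.fft.rfftfreq(len(samples), d=dt)
    return freqs[np.argmax(spectrum)]


n_points = 200   # same number of training points in both cases
true_freq = 1.0  # one oscillation per unit time

# Fine sampling: 20 points per period over a short window.
fine = dominant_frequency(sample_sine(true_freq, 0.05, n_points), 0.05)

# Coarse sampling: interval of 0.9, close to the period itself.
coarse = dominant_frequency(sample_sine(true_freq, 0.9, n_points), 0.9)

print(f"fine sampling   -> apparent frequency {fine:.3f}")
print(f"coarse sampling -> apparent frequency {coarse:.3f}")
```

With the fine interval the apparent frequency matches the true one, while the coarse interval aliases the oscillation to a much slower one, so any model trained on those samples would be fitting the wrong dynamics.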