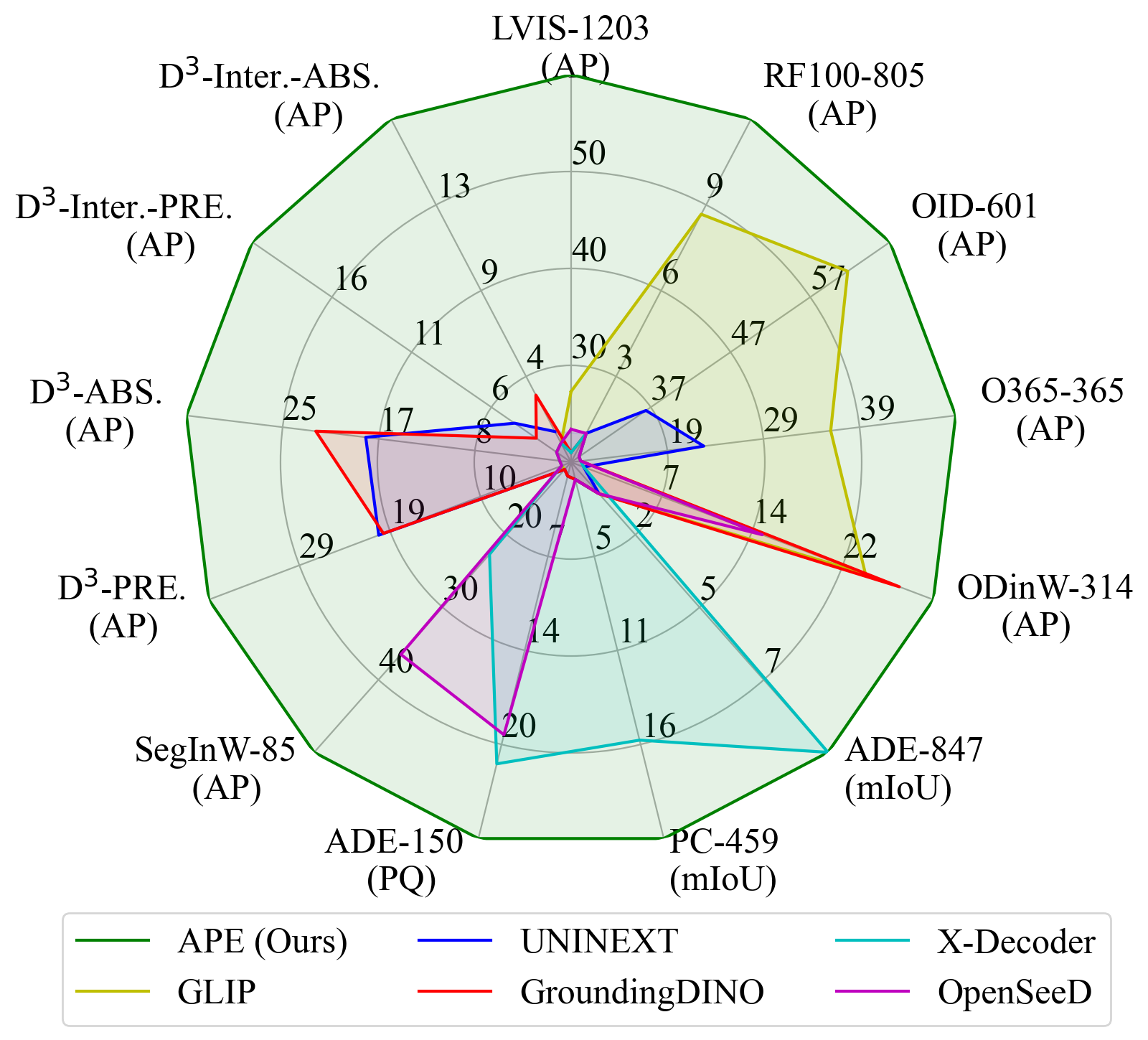

- High Performance. State-of-the-art (SotA) or competitive performance on 160 datasets with a single model.

- Perception in the Wild. Detects and segments everything with thousands of vocabulary terms or language descriptions, all at once.

- Flexible. Supports both foreground objects and background stuff for instance segmentation and semantic segmentation.

- 2024.04.07: Released checkpoints for APE-Ti, with a backbone of only 6M parameters!

- 2024.02.27: APE has been accepted to CVPR 2024!

- 2023.12.05: Released training code!

- 2023.12.05: Released checkpoints for APE-L!

- 2023.12.05: Released inference code and demo!

- Release inference code and demo.

- Release checkpoints.

- Release training code.

- Add clean docs.

- Clone the APE repository from GitHub:

```bash
git clone https://github.com/shenyunhang/APE
cd APE
```

- Install the required dependencies and APE:

```bash
pip3 install -r requirements.txt
python3 -m pip install -e .
```

Web UI demo:

```bash
pip3 install gradio
# Run from the directory that contains the APE clone.
cd APE/demo
python3 app.py
```

The demo detects available GPUs and runs on a single GPU if at least one is found.

Please feel free to try our Online Demo!

Follow the instructions here to prepare the following datasets:

| Name | COCO | LVIS | Objects365 | Openimages | VisualGenome | SA-1B | RefCOCO | GQA | PhraseCut | Flickr30k |
|---|---|---|---|---|---|---|---|---|---|---|
| Train | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Test | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ |

| Name | ODinW | SegInW | Roboflow100 | ADE20k | ADE-full | BDD10k | Cityscapes | PC459 | PC59 | VOC | D3 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Train | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Test | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

Note that we do not use `coco_2017_train` for training. Instead, we augment `lvis_v1_train` with annotations from COCO while keeping its image set unchanged, and register the result as `lvis_v1_train+coco` for instance segmentation and `lvis_v1_train+coco_panoptic_separated` for panoptic segmentation.
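To make the "same images, more annotations" idea concrete, here is a minimal pure-Python sketch. The function name `augment_with_coco`, the record layout (`image_id`, `annotations`) and the toy data are hypothetical and not taken from APE, and category mapping between COCO and LVIS is deliberately left out. In detectron2-style code bases, a merged list like this would then be exposed under a dataset name through a loader function registered with `DatasetCatalog.register`.

```python
from copy import deepcopy
from typing import Dict, List


def augment_with_coco(lvis_records: List[dict],
                      coco_anns_by_image: Dict[int, List[dict]]) -> List[dict]:
    """Hypothetical helper: append COCO annotations to LVIS records.

    The image set comes from `lvis_records` only, so COCO annotations for
    images outside the LVIS split are ignored. Category mapping is omitted.
    """
    merged = []
    for record in lvis_records:
        new_record = deepcopy(record)  # leave the input records untouched
        extra = coco_anns_by_image.get(record["image_id"], [])
        new_record["annotations"] = new_record["annotations"] + deepcopy(extra)
        merged.append(new_record)
    return merged


# Toy data: two LVIS images; COCO has annotations for image 1 and for
# image 3, which is not part of the LVIS split.
lvis = [
    {"image_id": 1, "annotations": [{"category": "lvis:mug"}]},
    {"image_id": 2, "annotations": []},
]
coco = {
    1: [{"category": "coco:cup"}],
    3: [{"category": "coco:dog"}],
}

merged = augment_with_coco(lvis, coco)
print([r["image_id"] for r in merged])  # [1, 2]
print(len(merged[0]["annotations"]))    # 2
```

Image 3 never appears in the output, which is the "keep the image set unchanged" property described above.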

We provide scripts to evaluate all models. Before running them, adjust the checkpoint location and the number of GPUs in each script.

```bash
scripts/eval_APE-L_D.sh
scripts/eval_APE-L_C.sh
scripts/eval_APE-L_B.sh
scripts/eval_APE-L_A.sh
scripts/eval_APE-Ti.sh
```

APE-L_D

```bash
python3 demo/demo_lazy.py \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitl_eva02_clip_vlf_lsj1024_cp_16x4_1080k.py \
--input image1.jpg image2.jpg image3.jpg \
--output /path/to/output/dir \
--confidence-threshold 0.1 \
--text-prompt 'person,car,chess piece of horse head' \
--with-box \
--with-mask \
--with-sseg \
--opts \
train.init_checkpoint=/path/to/APE-D/checkpoint \
model.model_language.cache_dir="" \
model.model_vision.select_box_nums_for_evaluation=500 \
model.model_vision.text_feature_bank_reset=True \
```

To disable `xformers`, append the following override to the `--opts` list:

```bash
model.model_vision.backbone.net.xattn=False \
```

To use the PyTorch version of `MultiScaleDeformableAttention`, append the following overrides to the `--opts` list:

```bash
model.model_vision.transformer.encoder.pytorch_attn=True \
model.model_vision.transformer.decoder.pytorch_attn=True \
```
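The entries after `--opts` are dotted `key=value` overrides applied on top of the config file. The sketch below is a simplified, hypothetical stand-in for that mechanism (in detectron2-based projects the real work is typically done by `LazyConfig.apply_overrides`); `apply_overrides` here is an illustrative name. It parses values as Python literals when possible and keeps everything else as strings. Unlike a strict config system, it silently creates keys that do not exist yet.

```python
import ast
from typing import Any, Dict, List


def apply_overrides(cfg: Dict[str, Any], overrides: List[str]) -> Dict[str, Any]:
    """Apply dotted 'a.b.c=value' overrides to a nested dict in place."""
    for item in overrides:
        key, value = item.split("=", 1)
        *parents, leaf = key.split(".")
        node = cfg
        for name in parents:
            node = node.setdefault(name, {})  # create missing levels
        try:
            # Python literals such as True, 500 or 0.1 keep their type.
            node[leaf] = ast.literal_eval(value)
        except (ValueError, SyntaxError):
            # Anything else, e.g. a file path or an empty value, stays a string.
            node[leaf] = value
    return cfg


cfg: Dict[str, Any] = {}
apply_overrides(cfg, [
    "train.init_checkpoint=/path/to/APE-D/checkpoint",
    # The shell removes the quotes in cache_dir="", so the program sees "cache_dir=".
    "model.model_language.cache_dir=",
    "model.model_vision.select_box_nums_for_evaluation=500",
    "model.model_vision.text_feature_bank_reset=True",
])
print(cfg["model"]["model_vision"])
# {'select_box_nums_for_evaluation': 500, 'text_feature_bank_reset': True}
```

Literal parsing is what lets `500` arrive as an integer and `True` as a boolean, while the checkpoint path passes through unchanged.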

Download the pretrained EVA weights into `models/`:

```bash
git lfs install
git clone https://huggingface.co/QuanSun/EVA-CLIP models/QuanSun/EVA-CLIP/
git clone https://huggingface.co/BAAI/EVA models/BAAI/EVA/
git clone https://huggingface.co/Yuxin-CV/EVA-02 models/Yuxin-CV/EVA-02/
```

Resize the patch size from 14 to 16:
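Before running the conversion, it may help to see what it changes. Switching a ViT checkpoint from patch size 14 to 16 touches the patch-embedding kernel (14×14 to 16×16 per channel) and the grid of position embeddings. Presumably this is why the script takes `--image_size`: at 224 pixels the token grid shrinks from 16×16 to 14×14, and at 336 pixels from 24×24 to 21×21. The pure-Python sketch below shows that arithmetic and a bilinear kernel resize; `grid_size` and `resize_bilinear` are hypothetical names, and this is not the code of `tools/eva_interpolate_patch_14to16.py`, which may use a different interpolation mode and operates on real tensors.

```python
from typing import List


def grid_size(image_size: int, patch_size: int) -> int:
    """Number of patches along one side of a square input image."""
    return image_size // patch_size


def resize_bilinear(kernel: List[List[float]], out_size: int) -> List[List[float]]:
    """Resize a square 2-D kernel with bilinear interpolation (align_corners=True)."""
    in_size = len(kernel)
    scale = (in_size - 1) / (out_size - 1)
    out = []
    for i in range(out_size):
        y = i * scale
        y0 = int(y)
        y1 = min(y0 + 1, in_size - 1)
        wy = y - y0
        row = []
        for j in range(out_size):
            x = j * scale
            x0 = int(x)
            x1 = min(x0 + 1, in_size - 1)
            wx = x - x0
            top = kernel[y0][x0] * (1 - wx) + kernel[y0][x1] * wx
            bottom = kernel[y1][x0] * (1 - wx) + kernel[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


# Token grids before and after the change, for the two image sizes used below.
for size in (224, 336):
    print(size, grid_size(size, 14), grid_size(size, 16))
# 224 16 14
# 336 24 21

# A 14x14 kernel whose values grow linearly from left to right (0..13)
# keeps that ramp, spread over 16 columns, after resizing.
ramp = [[float(c) for c in range(14)] for _ in range(14)]
resized = resize_bilinear(ramp, 16)
print(len(resized), len(resized[0]))  # 16 16
```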

```bash
python3 tools/eva_interpolate_patch_14to16.py --input models/QuanSun/EVA-CLIP/EVA02_CLIP_E_psz14_plus_s9B.pt --output models/QuanSun/EVA-CLIP/EVA02_CLIP_E_psz14to16_plus_s9B.pt --image_size 224
python3 tools/eva_interpolate_patch_14to16.py --input models/QuanSun/EVA-CLIP/EVA01_CLIP_g_14_plus_psz14_s11B.pt --output models/QuanSun/EVA-CLIP/EVA01_CLIP_g_14_plus_psz14to16_s11B.pt --image_size 224
python3 tools/eva_interpolate_patch_14to16.py --input models/QuanSun/EVA-CLIP/EVA02_CLIP_L_336_psz14_s6B.pt --output models/QuanSun/EVA-CLIP/EVA02_CLIP_L_336_psz14to16_s6B.pt --image_size 336
python3 tools/eva_interpolate_patch_14to16.py --input models/Yuxin-CV/EVA-02/eva02/pt/eva02_Ti_pt_in21k_p14.pt --output models/Yuxin-CV/EVA-02/eva02/pt/eva02_Ti_pt_in21k_p14to16.pt --image_size 224
```

Single node:

```bash
python3 tools/train_net.py \
--num-gpus 8 \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitl_eva02_clip_vlf_lsj1024_cp_16x4_1080k_mdl.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitl_eva02_clip_vlf_lsj1024_cp_16x4_1080k_mdl_`date +'%Y%m%d_%H%M%S'`
```

Multiple nodes:
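In the multi-node commands, `${HOST_NUM}`, `${HOST_GPU_NUM}` and `${INDEX}` are placeholders to set on each machine: the number of machines, the number of GPUs per machine, and this machine's rank starting from 0. `${MASTER_IP}` and `${MASTER_PORT}` should point to the machine with rank 0, and every machine runs the same command with its own `${INDEX}`. The sketch below assumes the convention used by detectron2's launcher, where each process's global rank is `machine_rank * gpus_per_machine + local_rank`; the function name `distributed_layout` is hypothetical.

```python
from typing import List, Tuple


def distributed_layout(num_machines: int, gpus_per_machine: int,
                       machine_rank: int) -> Tuple[int, List[int]]:
    """Return the world size and the global ranks owned by one machine."""
    if not 0 <= machine_rank < num_machines:
        raise ValueError("machine_rank must be in [0, num_machines)")
    world_size = num_machines * gpus_per_machine
    ranks = [machine_rank * gpus_per_machine + local_rank
             for local_rank in range(gpus_per_machine)]
    return world_size, ranks


# Example values: HOST_NUM=2 machines with HOST_GPU_NUM=8 GPUs each.
for index in range(2):
    print(index, distributed_layout(2, 8, index))
# 0 (16, [0, 1, 2, 3, 4, 5, 6, 7])
# 1 (16, [8, 9, 10, 11, 12, 13, 14, 15])
```

Because ranks are derived from `${INDEX}`, two machines accidentally given the same index would claim the same ranks, so each machine needs a distinct value.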

```bash
python3 tools/train_net.py \
--dist-url="tcp://${MASTER_IP}:${MASTER_PORT}" \
--num-gpus ${HOST_GPU_NUM} \
--num-machines ${HOST_NUM} \
--machine-rank ${INDEX} \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitl_eva02_clip_vlf_lsj1024_cp_16x4_1080k_mdl.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitl_eva02_clip_vlf_lsj1024_cp_16x4_1080k_mdl_`date +'%Y%m%d_%H'`0000
```

Single node:

```bash
python3 tools/train_net.py \
--num-gpus 8 \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k_`date +'%Y%m%d_%H%M%S'`
```

Multiple nodes:
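The single-node commands stamp the output directory down to the second (`%Y%m%d_%H%M%S`), while the multi-node commands use `date +'%Y%m%d_%H'` followed by a literal `0000`. A plausible reason, which is an assumption rather than something stated here, is that machines launched a few seconds apart should agree on a single output directory. The sketch below mimics both formats with Python's `strftime`; `output_suffix` is a hypothetical name and the timestamps are made up.

```python
from datetime import datetime


def output_suffix(now: datetime, per_hour: bool) -> str:
    """Mimic the two `date` formats used in the training commands."""
    if per_hour:
        # Equivalent of $(date +'%Y%m%d_%H')0000 in the multi-node commands.
        return now.strftime("%Y%m%d_%H") + "0000"
    # Equivalent of $(date +'%Y%m%d_%H%M%S') in the single-node commands.
    return now.strftime("%Y%m%d_%H%M%S")


# Two machines launched a few seconds apart within the same hour.
node0 = datetime(2024, 1, 1, 10, 15, 3)
node1 = datetime(2024, 1, 1, 10, 15, 41)
print(output_suffix(node0, False), output_suffix(node1, False))
# 20240101_101503 20240101_101541
print(output_suffix(node0, True), output_suffix(node1, True))
# 20240101_100000 20240101_100000
```

The hourly format only helps when all launches fall within the same hour; machines started on either side of an hour boundary would still write to different directories.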

```bash
python3 tools/train_net.py \
--dist-url="tcp://${MASTER_IP}:${MASTER_PORT}" \
--num-gpus ${HOST_GPU_NUM} \
--num-machines ${HOST_NUM} \
--machine-rank ${INDEX} \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k_`date +'%Y%m%d_%H'`0000
```

Single node:

```bash
python3 tools/train_net.py \
--num-gpus 8 \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k_`date +'%Y%m%d_%H%M%S'`
```

Multiple nodes:
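In these commands `--dist-url` should be a URL of the form `tcp://HOST:PORT`. A string such as `tcp:https://HOST:PORT` still parses with scheme `tcp`, but with no host or port at all, which a quick check with Python's standard `urllib.parse.urlsplit` makes visible. The helper name `check_dist_url`, the address and the port below are hypothetical example values.

```python
from typing import Tuple
from urllib.parse import urlsplit


def check_dist_url(url: str) -> Tuple[str, int]:
    """Return (host, port) for a tcp://HOST:PORT URL, or raise ValueError."""
    parts = urlsplit(url)
    if parts.scheme != "tcp" or parts.hostname is None or parts.port is None:
        raise ValueError(f"expected tcp://HOST:PORT, got {url!r}")
    return parts.hostname, parts.port


print(check_dist_url("tcp://10.0.0.1:29500"))  # ('10.0.0.1', 29500)

try:
    # Here "https://..." ends up in the path, so no host or port is found.
    check_dist_url("tcp:https://10.0.0.1:29500")
except ValueError as err:
    print(err)
```

Running such a check on the expanded `${MASTER_IP}:${MASTER_PORT}` value before launching can catch a malformed URL early, instead of waiting for the processes to fail to rendezvous.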

```bash
python3 tools/train_net.py \
--dist-url="tcp://${MASTER_IP}:${MASTER_PORT}" \
--num-gpus ${HOST_GPU_NUM} \
--num-machines ${HOST_NUM} \
--machine-rank ${INDEX} \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_REFCOCO/ape_deta/ape_deta_vitl_eva02_vlf_lsj1024_cp_1080k_`date +'%Y%m%d_%H'`0000
```

Single node:

```bash
python3 tools/train_net.py \
--num-gpus 8 \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VG/ape_deta/ape_deta_vitl_eva02_lsj1024_cp_720k.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VG/ape_deta/ape_deta_vitl_eva02_lsj1024_cp_720k_`date +'%Y%m%d_%H%M%S'`
```

Multiple nodes:

```bash
python3 tools/train_net.py \
--dist-url="tcp://${MASTER_IP}:${MASTER_PORT}" \
--num-gpus ${HOST_GPU_NUM} \
--num-machines ${HOST_NUM} \
--machine-rank ${INDEX} \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VG/ape_deta/ape_deta_vitl_eva02_lsj1024_cp_720k.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VG/ape_deta/ape_deta_vitl_eva02_lsj1024_cp_720k_`date +'%Y%m%d_%H'`0000
```

Single node:

```bash
python3 tools/train_net.py \
--num-gpus 8 \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitt_eva02_vlf_lsj1024_cp_16x4_1080k_mdl.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitt_eva02_vlf_lsj1024_cp_16x4_1080k_mdl_`date +'%Y%m%d_%H%M%S'`
```

Multiple nodes:

```bash
python3 tools/train_net.py \
--dist-url="tcp://${MASTER_IP}:${MASTER_PORT}" \
--num-gpus ${HOST_GPU_NUM} \
--num-machines ${HOST_NUM} \
--machine-rank ${INDEX} \
--resume \
--config-file configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitt_eva02_vlf_lsj1024_cp_16x4_1080k_mdl.py \
train.output_dir=output/APE/configs/LVISCOCOCOCOSTUFF_O365_OID_VGR_SA1B_REFCOCO_GQA_PhraseCut_Flickr30k/ape_deta/ape_deta_vitt_eva02_vlf_lsj1024_cp_16x4_1080k_mdl_`date +'%Y%m%d_%H'`0000
```

Download the released checkpoints from Hugging Face:

```bash
git lfs install
git clone https://huggingface.co/shenyunhang/APE
```

| # | Name | Checkpoint | Config |
|---|---|---|---|
| 1 | APE-L_A | HF link | link |
| 2 | APE-L_B | HF link | link |
| 3 | APE-L_C | HF link | link |
| 4 | APE-L_D | HF link | link |
| 5 | APE-Ti | HF link | link |

If you find our work helpful for your research, please consider citing the following BibTeX entry.

```bibtex
@inproceedings{APE,
  title={Aligning and Prompting Everything All at Once for Universal Visual Perception},
  author={Shen, Yunhang and Fu, Chaoyou and Chen, Peixian and Zhang, Mengdan and Li, Ke and Sun, Xing and Wu, Yunsheng and Lin, Shaohui and Ji, Rongrong},
  booktitle={CVPR},
  year={2024}
}
```