Shan Jia, Mingzhen Huang, Zhou Zhou, Yan Ju, Jialing Cai, Siwei Lyu

University at Buffalo, State University of New York, NY, USA

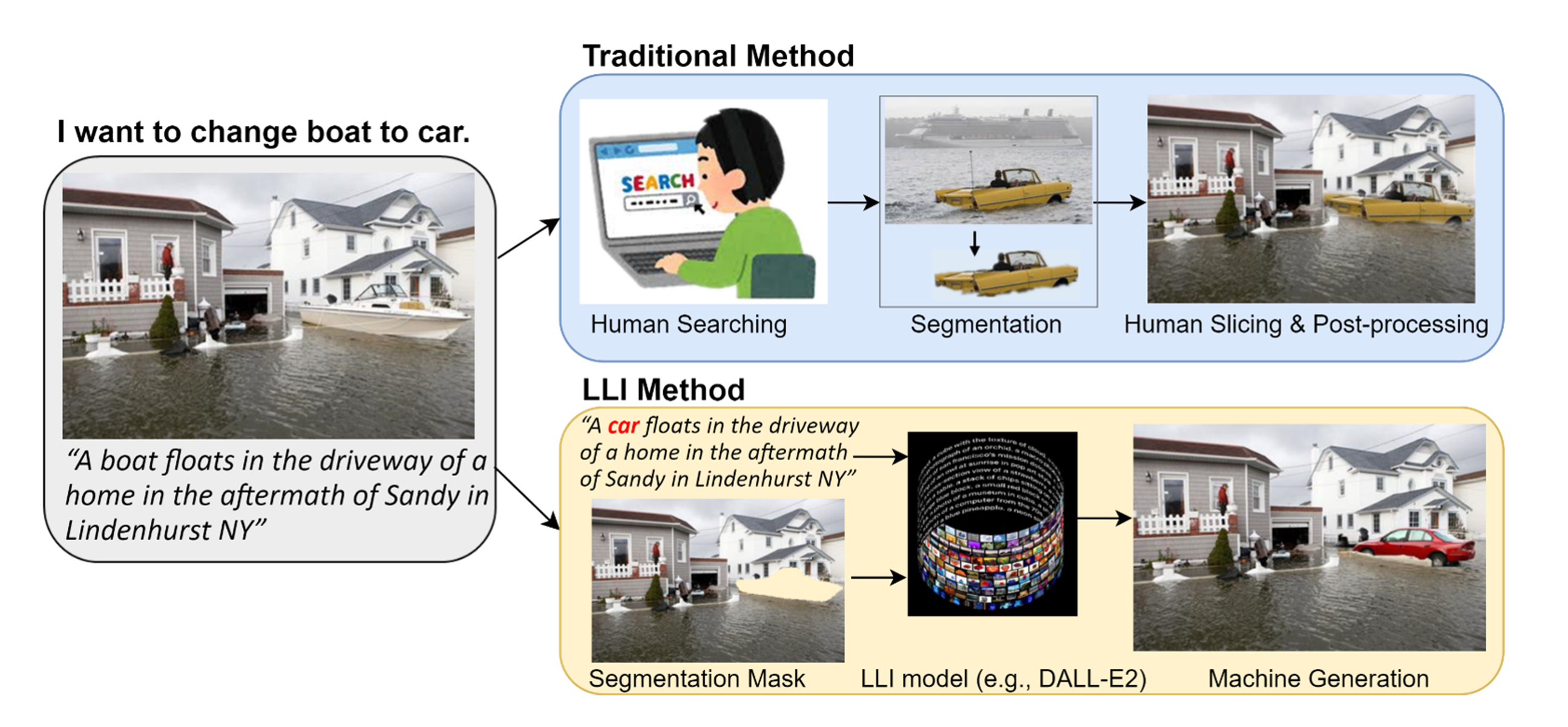

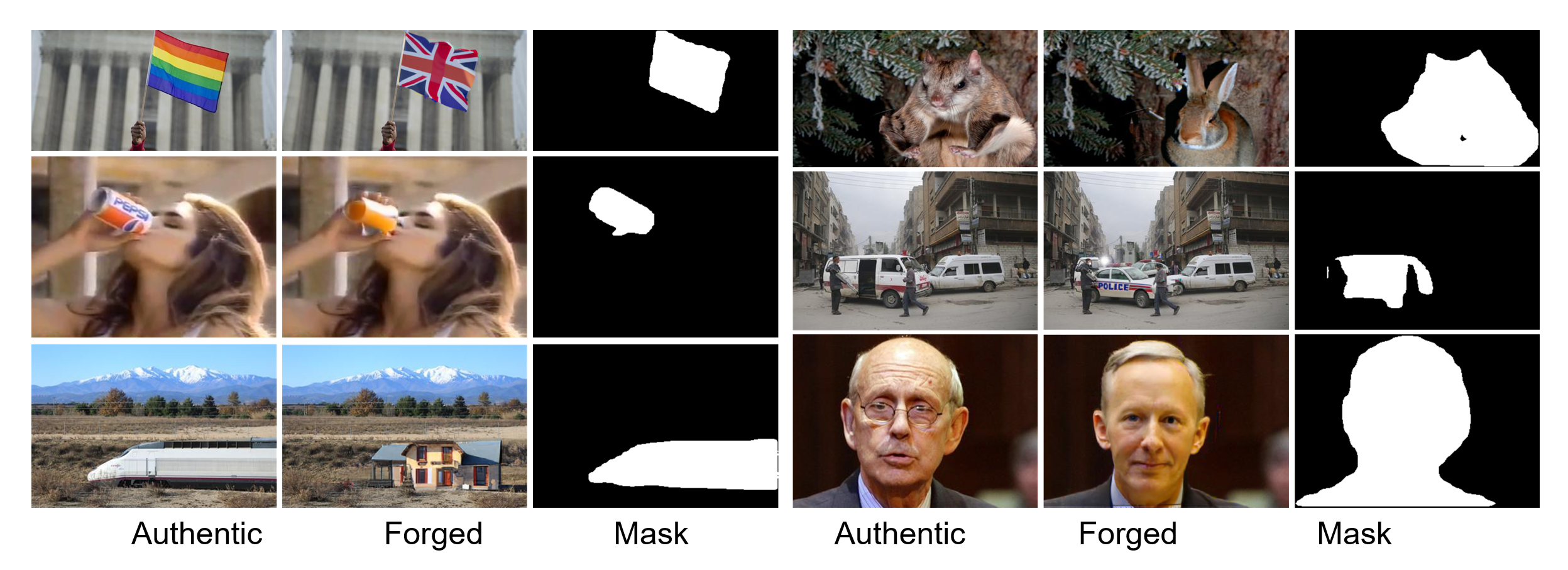

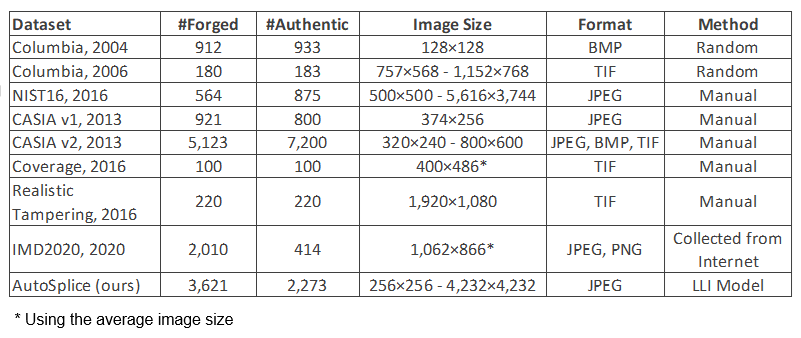

The AutoSplice dataset was proposed in the CVPR 2023 Workshop on Media Forensics paper "AutoSplice: A Text-prompt Manipulated Image Dataset for Media Forensics". It leverages the DALL-E 2 [1] language-image model to automatically generate and splice masked regions guided by a text prompt, and consists of 5,894 manipulated and authentic images.

The database contains 3,621 images created by locally or globally manipulating real-world images, along with 2,273 authentic images.

The dataset is provided in three JPEG compression versions, each including the manipulation masks and captions:

- JPEG-100, near-lossless compression with a JPEG quality factor of 100;

- JPEG-90, mildly lossy compression with a JPEG quality factor of 90;

- JPEG-75, lossy compression with a JPEG quality factor of 75, matching the authentic images derived from the Visual News dataset [2]; this version is used for fine-tuning tasks.
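For readers who want to apply comparable compression settings to their own images, the sketch below re-encodes one image at the three quality factors listed above. It assumes the Pillow library; `QUALITY_FACTORS` and `encode_jpeg_variants` are hypothetical names used for illustration only, and this is not the authors' preprocessing code.

```python
import io

from PIL import Image

# Quality factors of the three AutoSplice versions, as listed above.
# Hypothetical mapping for illustration, not part of the dataset release.
QUALITY_FACTORS = {"JPEG-100": 100, "JPEG-90": 90, "JPEG-75": 75}


def encode_jpeg_variants(image: Image.Image) -> dict[str, bytes]:
    """Re-encode one image at each quality factor and return the JPEG bytes.

    Hypothetical helper (assumes Pillow); not the authors' pipeline.
    """
    rgb = image.convert("RGB")  # JPEG cannot store an alpha channel
    variants = {}
    for name, quality in QUALITY_FACTORS.items():
        buffer = io.BytesIO()
        rgb.save(buffer, format="JPEG", quality=quality)
        variants[name] = buffer.getvalue()
    return variants


if __name__ == "__main__":
    # Synthetic 64x64 gradient standing in for a real photo.
    demo = Image.new("RGB", (64, 64))
    demo.putdata([(x * 4, y * 4, (x + y) * 2) for y in range(64) for x in range(64)])
    for name, data in encode_jpeg_variants(demo).items():
        print(name, len(data), "bytes")
```

Lower quality factors discard more high-frequency detail, so the same image typically yields smaller files and stronger compression artifacts as the quality factor decreases.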

To access the AutoSplice dataset, please fill out this Google Form. Once the form is accepted, the download link will be sent to you within 48 hours. If you have any questions, please send an email to [[email protected]].

The AutoSplice dataset is released only for academic research. Researchers from educational institutions may use this database freely for noncommercial purposes.

If you use this dataset, please cite the following paper:

```bibtex
@inproceedings{jia2023autosplice,
  title={AutoSplice: A Text-prompt Manipulated Image Dataset for Media Forensics},
  author={Jia, Shan and Huang, Mingzhen and Zhou, Zhou and Ju, Yan and Cai, Jialing and Lyu, Siwei},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
  pages={893--903},
  year={2023}
}
```

Footnotes

1. Ramesh, Aditya, et al. "Hierarchical text-conditional image generation with CLIP latents." arXiv preprint arXiv:2204.06125 (2022).

2. Liu, Fuxiao, et al. "Visual News: Benchmark and challenges in news image captioning." Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (2021).