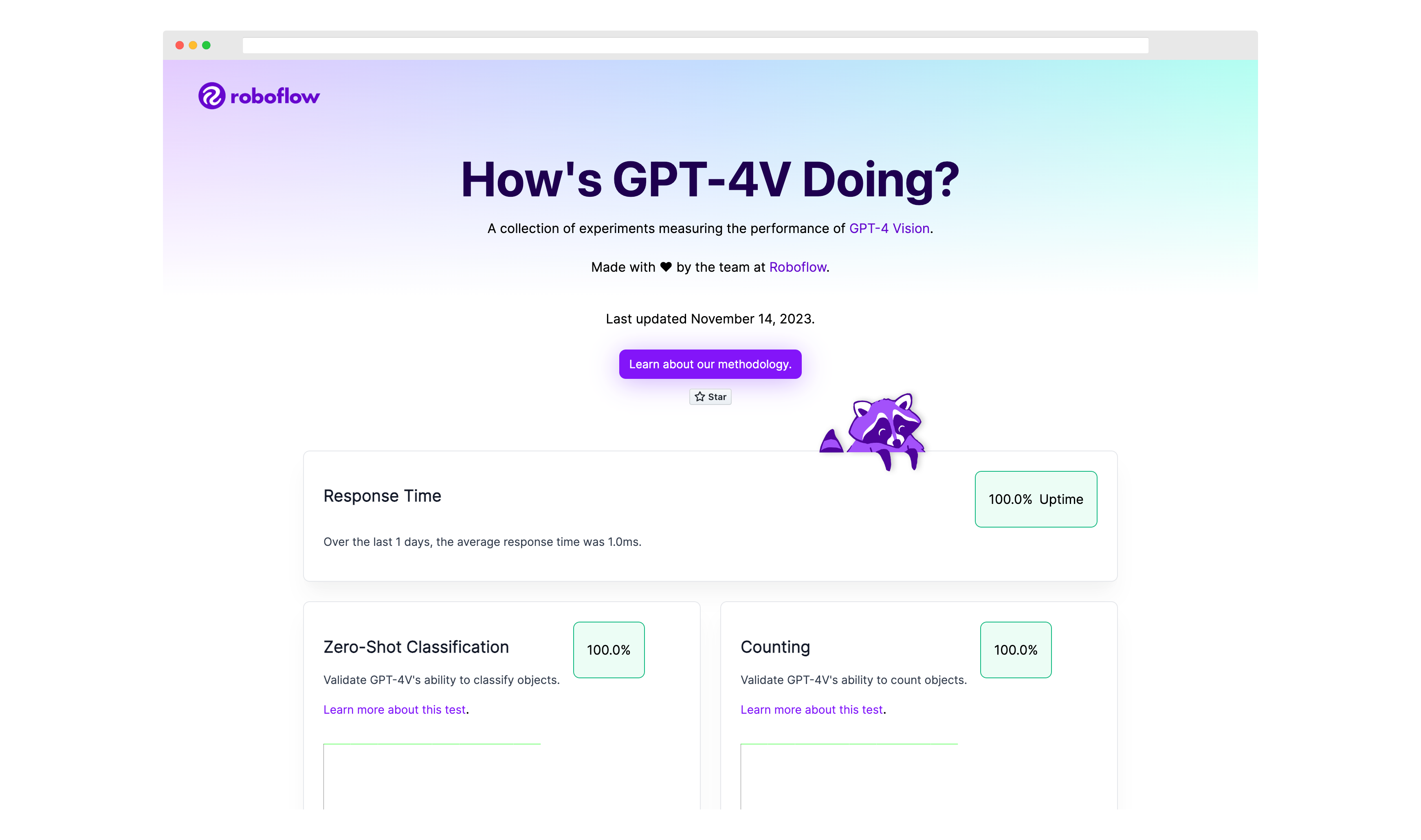

An open-source monitor that tracks how GPT-4V performs on image prompts over time.

This project is not affiliated with OpenAI.

See the companion foundation-vision-benchmark repository for more information about qualitatively evaluating foundation vision models, as we do on this website.

Given the vast array of possibilities with vision models, no set of tests, this one included, can comprehensively evaluate what a model can do; this repository is a starting point for exploration.

We would love your help in making this repository even better! Whether you want to add a new experiment or have any suggestions for improvement, feel free to open an issue or pull request.

We welcome additions to the list of tests. If you contribute a test, we will run it daily.

To contribute a test, first fork this repository. Then, clone your fork locally:

`git clone <your fork>`

`cd gpt-checkup`

Here is the structure of the project:

- `tests`: Contains the tests that are run daily.

- `images`: Contains the images used in the tests.

- `results`: Contains the test results saved from each day's run.

- `web.py`: Contains the code used to run the tests and create the website.

- `template.html`: The template for the website, used to generate the `index.html` file.

Then, create a new file in the tests directory with the name of the test you want to add. Use the mathocr.py file as an example.

In your test, you will need to specify:

- A test name

- A test ID

- The question you are answering (for display on the website only)

- The prompt to send to the model

- The image to send to the model

- A description of the method used in the test (for display on the website only)
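The fields above can be pictured as a simple record. The sketch below is hypothetical: the class name `VisionTest`, its attribute names, and the example values are illustrative only and are not the project's actual API, so copy the real structure from mathocr.py when you write your test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VisionTest:
    """Hypothetical record holding the fields every test must specify."""

    name: str      # human-readable test name, e.g. "Math OCR"
    test_id: str   # unique identifier for the test
    question: str  # question the test answers (website display only)
    prompt: str    # prompt sent to the model
    image: str     # path to an image stored in the images directory
    method: str    # description of the method (website display only)


# Hypothetical example; the image path and wording are illustrative only.
math_ocr = VisionTest(
    name="Math OCR",
    test_id="math_ocr",
    question="Can the model read a printed math equation?",
    prompt="Read the equation in this image and return it as LaTeX.",
    image="images/math.png",
    method="Compare the model's LaTeX output against a known answer.",
)
```

Freezing the dataclass keeps a test's definition from being changed accidentally while the suite runs.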

Add the image(s) you want to use in your test(s) in the images directory.
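web.py already handles sending images to the model, and the sketch below is not its code. It shows one common way to pair a prompt with an image from the images directory, assuming the OpenAI Chat Completions message format, where an image can be passed inline as a base64 data URL. The function name `build_vision_message` is hypothetical.

```python
import base64
import mimetypes
from pathlib import Path


def build_vision_message(prompt: str, image_path: str) -> dict:
    """Build one user message pairing a text prompt with an inline image.

    The image bytes are embedded as a base64 data URL so no separate
    upload or hosting step is needed.
    """
    # Infer the MIME type from the file extension (e.g. .png -> image/png).
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {image_path}")

    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }
```

Checking the extension first means a stray non-image file in the images directory fails loudly instead of being sent to the model.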

Add the name of the test class you created to the `test_list` list in the `web.py` file and to the imports in the `tests/__init__.py` file.
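Registration matters because only the classes listed in `test_list` get run. The loop below is a hypothetical sketch of that idea, not the project's code: the `MathOCR` stub, its `name` attribute and `run` method, and the `run_all` helper are all assumptions. It prints progress lines in the same form as the console messages shown when the tests run.

```python
from __future__ import annotations


class MathOCR:
    """Hypothetical stand-in for a test class defined in the tests directory."""

    name = "Math OCR"

    def run(self) -> dict:
        # A real test would call the model here; this stub returns a fixed result.
        return {"test": self.name, "passed": True}


# In the real project, test classes are imported through tests/__init__.py
# and then listed in web.py; a class missing from this list is never run.
test_list = [MathOCR]


def run_all(tests: list) -> list[dict]:
    """Instantiate and run every registered test, printing progress."""
    results = []
    for test_class in tests:
        test = test_class()
        print(f"Running {test.name} test...")
        results.append(test.run())
    return results
```

Calling `run_all(test_list)` prints `Running Math OCR test...` and returns one result dictionary per registered test.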

Before you run the tests locally, you will need to set up an OpenAI API key. Refer to the official OpenAI documentation for instructions on how to retrieve your OpenAI API key.

Export your key into a variable called OPENAI_API_KEY in your environment:

`export OPENAI_API_KEY=<your key>`

Then, run the tests:

`python3 web.py`

You will see messages printed in your console as each test runs:

Running Document OCR test...

Running Handwriting OCR test...

Running Structured Data OCR test...

Running Math OCR test...

Running Object Detection test...

Running Graph Understanding test...

...

When the script finishes, the results of all tests appear in the `index.html` file. A new file containing a JSON representation of the test results is also added to the `results` directory.
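To inspect a run without opening the website, you can load the newest file from the results directory. This is a sketch that assumes each run writes a single `.json` file there and picks the newest one by modification time; the helper name `load_latest_results` is hypothetical, and the JSON layout is returned as-is because its structure isn't documented here.

```python
import json
from pathlib import Path


def load_latest_results(results_dir: str = "results"):
    """Load and return the most recently modified JSON file in results_dir.

    Assumes each run writes one .json file; no particular structure is
    assumed for its contents, so the parsed object is returned unchanged.
    """
    files = sorted(
        Path(results_dir).glob("*.json"),
        key=lambda p: p.stat().st_mtime,  # oldest first, newest last
    )
    if not files:
        raise FileNotFoundError(f"No JSON result files in {results_dir!r}")
    with files[-1].open(encoding="utf-8") as f:
        return json.load(f)
```

Run it from the repository root after `python3 web.py` finishes, for example `print(load_latest_results())`. Sorting by modification time avoids assuming anything about how result files are named.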