Author: Panji Brotoisworo

Contact: [email protected]

Tweet2Map is a Python script that mines Metro Manila Development Authority (MMDA) tweets (@mmda) into a usable database for traffic accident research in Metro Manila. You need your own Twitter API tokens to use this script. It uses the Tweepy library to connect to the Twitter API, Geopandas and Shapely for the spatial join, and RegEx for text parsing. For more information, please visit the project page on my blog. This is a personal project and is in no way affiliated with the MMDA.
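To give a rough idea of the RegEx parsing step, here is a minimal sketch. The tweet layout, the pattern, the field names, and the `parse_tweet` helper are all assumptions made for illustration; the sample text is made up, and the actual patterns used by Tweet2Map may differ.

```python
import re

# Assumed tweet layout; real MMDA wording may differ from this pattern.
PATTERN = re.compile(
    r"MMDA ALERT:\s*(?P<type>.+?)\s+at\s+(?P<location>.+?)\s+"
    r"(?P<direction>[NSEW]B)\s+involving\s+(?P<involved>.+?)\s+"
    r"as of\s+(?P<time>\d{1,2}:\d{2}\s*[AP]M)",
    re.IGNORECASE,
)


def parse_tweet(text):
    """Return a dict of incident fields, or None if the text does not match."""
    match = PATTERN.search(text)
    if match is None:
        return None
    return {key: value.strip() for key, value in match.groupdict().items()}


# Made-up sample text in the assumed layout.
sample = (
    "MMDA ALERT: Vehicular accident at EDSA Ortigas SB "
    "involving car and bus as of 7:45 AM. 1 lane occupied."
)
record = parse_tweet(sample)
print(record)
```

Tweets that do not fit the pattern return `None`, so they can be set aside for manual review instead of being stored with wrong fields.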

- Spellchecker using the Peter Norvig algorithm to fix typos and wrong spelling of locations and other information

- Permutations to try different combinations of locations

  - For example, if the script cannot find EDSA ORTIGAS MRT, it will try EDSA MRT ORTIGAS, and so on

- Natural Language Processing to replace RegEx
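The permutation idea from the list above could be sketched roughly as follows. This is only an illustration: `find_location`, the dictionary-based location table, and the placeholder coordinates are assumptions, not the script's actual code.

```python
from itertools import permutations


def find_location(name, known_locations):
    """Try every word ordering of `name` until one is a key in `known_locations`.

    Returns a (matched_name, coordinates) tuple, or None if nothing matches.
    """
    words = name.upper().split()
    # The first permutation is the original order, so exact matches win first.
    for candidate_words in permutations(words):
        candidate = " ".join(candidate_words)
        if candidate in known_locations:
            return candidate, known_locations[candidate]
    return None


# Hypothetical database entry with placeholder coordinates.
known = {"EDSA MRT ORTIGAS": (0.0, 0.0)}
match = find_location("EDSA ORTIGAS MRT", known)
print(match)
```

The number of orderings grows factorially with the number of words, so in practice it may be worth capping this at short location names.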

It is recommended that you install a Python 3.8 virtual environment. At minimum, a 3.6 environment may still work. Once the environment is set up, install the following libraries:

tweepy pandas geopandas rtree

Run main.py to initialize and create the config file.

Create a Twitter developer account and get your own Twitter API tokens here. Afterwards, you have two options for entering your API tokens into Tweet2Map. You can manually enter the tokens in the config.ini file, or you can pass them via the CLI using these arguments:

-consumer_secret -consumer_key -access_token -access_secret
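As a rough sketch of how CLI arguments like these could be copied into a config file, the example below uses only the standard library. The `twitter` section name and the helper functions are assumptions for illustration; check the config.ini generated by main.py for the real layout.

```python
import argparse
import configparser

TOKEN_NAMES = ["consumer_key", "consumer_secret", "access_token", "access_secret"]


def build_parser():
    """Create a parser that accepts one optional argument per token."""
    parser = argparse.ArgumentParser(description="Token setup (sketch)")
    for name in TOKEN_NAMES:
        parser.add_argument(f"-{name}", default=None)
    return parser


def update_config(config, args):
    """Copy any tokens given on the command line into the config object."""
    section = "twitter"  # hypothetical section name
    if not config.has_section(section):
        config.add_section(section)
    for name in TOKEN_NAMES:
        value = getattr(args, name)
        if value is not None:
            config.set(section, name, value)
    return config


# Simulated command line with placeholder values.
args = build_parser().parse_args(["-consumer_key", "KEY", "-access_token", "TOKEN"])
config = update_config(configparser.ConfigParser(), args)
print(dict(config["twitter"]))
```

Tokens that are not passed on the command line are left untouched, so the two input methods can be mixed.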

Start downloading and caching tweets for later processing by running main.py without any arguments. This mode is designed to run automatically on a schedule, so you can set it up once and come back when you are ready to process the tweets and add them to the database.

Run the processing script by adding the -p argument as seen below:

python main.py -p

This will download the latest tweets and also load all the cached tweets. It checks for duplicates by tweet ID across the newly downloaded tweets, the cached tweets, and the processed tweets already in the incident database.
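The duplicate check can be pictured with a small sketch like the one below. It assumes each tweet is a dictionary with an `id` key and that the IDs already in the incident database are available as a set; the function name and data shapes are illustrative, not taken from the script.

```python
def drop_duplicates(new_tweets, cached_tweets, processed_ids):
    """Return cached and new tweets whose IDs have not been seen before."""
    seen = set(processed_ids)  # IDs already stored in the incident database
    unique = []
    for tweet in list(cached_tweets) + list(new_tweets):
        tweet_id = tweet["id"]
        if tweet_id in seen:
            continue  # already processed, or already queued in this run
        seen.add(tweet_id)
        unique.append(tweet)
    return unique


# Placeholder data: tweet 1 is already processed, tweet 2 appears twice.
cached = [{"id": 1, "text": "a"}, {"id": 2, "text": "b"}]
new = [{"id": 2, "text": "b"}, {"id": 3, "text": "c"}]
pending = drop_duplicates(new, cached, processed_ids={1})
print([tweet["id"] for tweet in pending])
```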

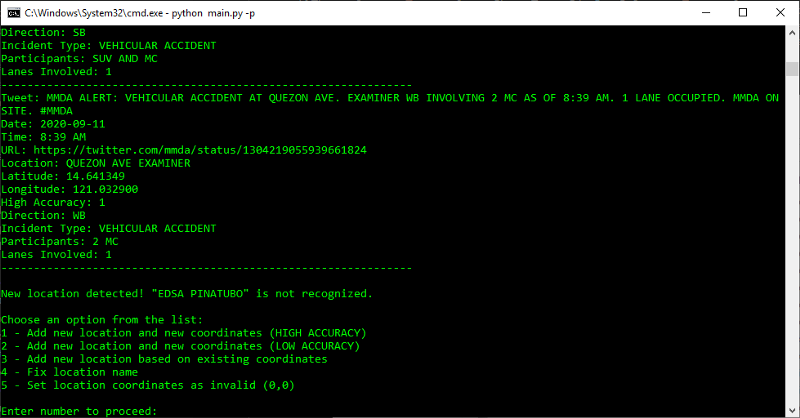

You will inevitably run into new locations that are not in the database and you will encounter this prompt:

You can check the database for an existing location. Often there are many different names for the same location. In this case, there were no good matches, so we go back to the prompt by typing "BREAK".

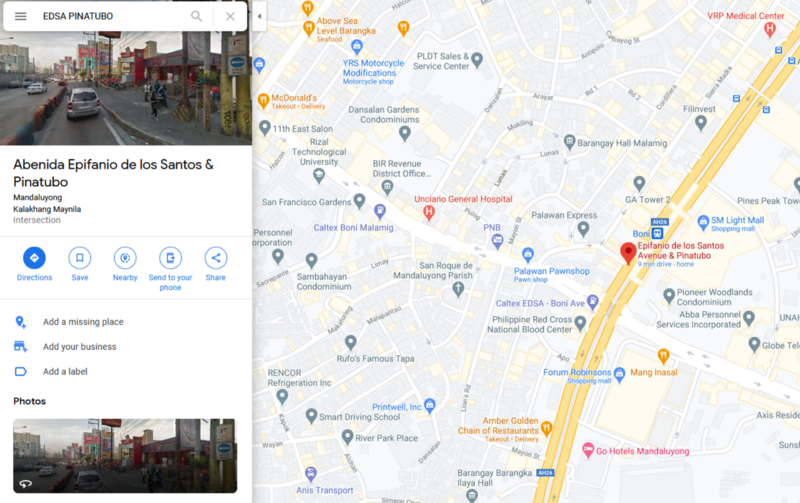

You can then search for the location on Google Maps. In this case, "EDSA PINATUBO" resulted in a very precise location, which we can add to the database.

We get the coordinates by right-clicking the location and clicking "What's here?". This reveals the coordinates, which can be copied and pasted into the terminal.

Paste the coordinates into the prompt, then type "Y" to confirm.
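A pasted coordinate string could be validated along these lines. This sketch assumes the text is a decimal "latitude, longitude" pair; `parse_coordinates` and the sample values are placeholders, not the script's own code.

```python
def parse_coordinates(text):
    """Parse a 'latitude, longitude' string into a pair of floats."""
    parts = [part.strip() for part in text.split(",")]
    if len(parts) != 2:
        raise ValueError("expected two comma-separated numbers")
    latitude, longitude = float(parts[0]), float(parts[1])
    if not (-90 <= latitude <= 90 and -180 <= longitude <= 180):
        raise ValueError("coordinates out of range")
    return latitude, longitude


# Placeholder values, not the coordinates of a real database entry.
coords = parse_coordinates("14.5, 121.0")
print(coords)
```

Rejecting malformed input at the prompt keeps bad points out of the location database, where they would otherwise distort the spatial join.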