A video of our results can be seen in

This code is based on the Generative Inpainting [1][2] papers and their repository.

Building on the Generative Inpainting network, we proposed adaptations for inpainting disparity images. Our results were published at IV 2019, and this repository contains the code used in that publication.

To use this code, please follow the installation instructions from the original repository, since the dependencies are the same.

The /training_data directory contains the disparity images used for training, and /data_flist contains the .flist files that list those images.
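If you want to train on your own disparity images, the .flist files have to be regenerated. The sketch below assumes the format used by the upstream Generative Inpainting code, a plain-text file with one image path per line. The function names, the accepted extensions, the 10% validation split and the output file names are illustrative assumptions, not part of this repository; check the training configuration for the file names it actually reads.

```python
import os
import random

# Extensions treated as images (assumption: adjust to the files in /training_data).
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg")


def collect_images(root):
    """Return a sorted list of image paths found anywhere under `root`."""
    paths = []
    for dirpath, _, filenames in os.walk(root):
        for name in filenames:
            if name.lower().endswith(IMAGE_EXTENSIONS):
                paths.append(os.path.join(dirpath, name))
    return sorted(paths)


def write_flists(root, train_flist, val_flist, val_fraction=0.1, seed=0):
    """Shuffle the images under `root` (reproducibly, via `seed`) and write
    hypothetical train/validation .flist files with one path per line.

    Returns (number of training paths, number of validation paths)."""
    paths = collect_images(root)
    random.Random(seed).shuffle(paths)
    n_val = int(len(paths) * val_fraction)
    splits = [(val_flist, paths[:n_val]), (train_flist, paths[n_val:])]
    for flist, subset in splits:
        with open(flist, "w") as f:
            f.write("\n".join(subset) + ("\n" if subset else ""))
    return len(paths) - n_val, n_val
```

For example, `write_flists("training_data", "data_flist/train.flist", "data_flist/val.flist")` writes both lists in one call. Shuffling with a fixed seed keeps the split reproducible between runs.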

For training, the command is:

```bash
python3 train.py
```

For testing, use:

```bash
python3 test.py --image 'input_image' --mask 'removed_area_mask' --output 'output_image' --checkpoint_dir model_logs/'trained_model_dir'
```

For the paper, all training and testing were done on a GeForce GTX 1080 Ti.

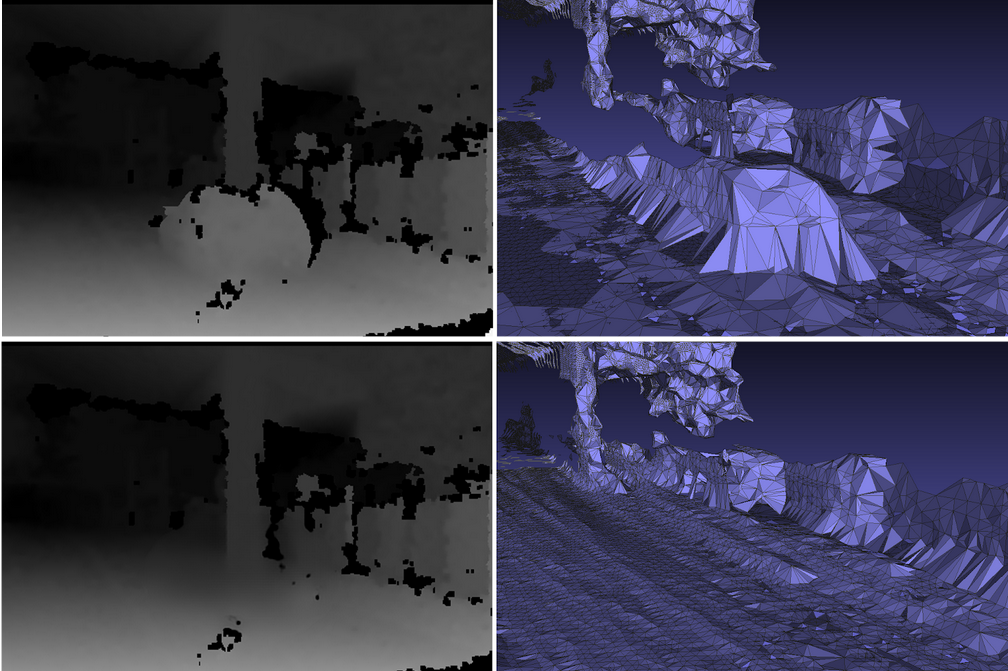

Depth map object removal example and the respective 3D mesh reconstruction:

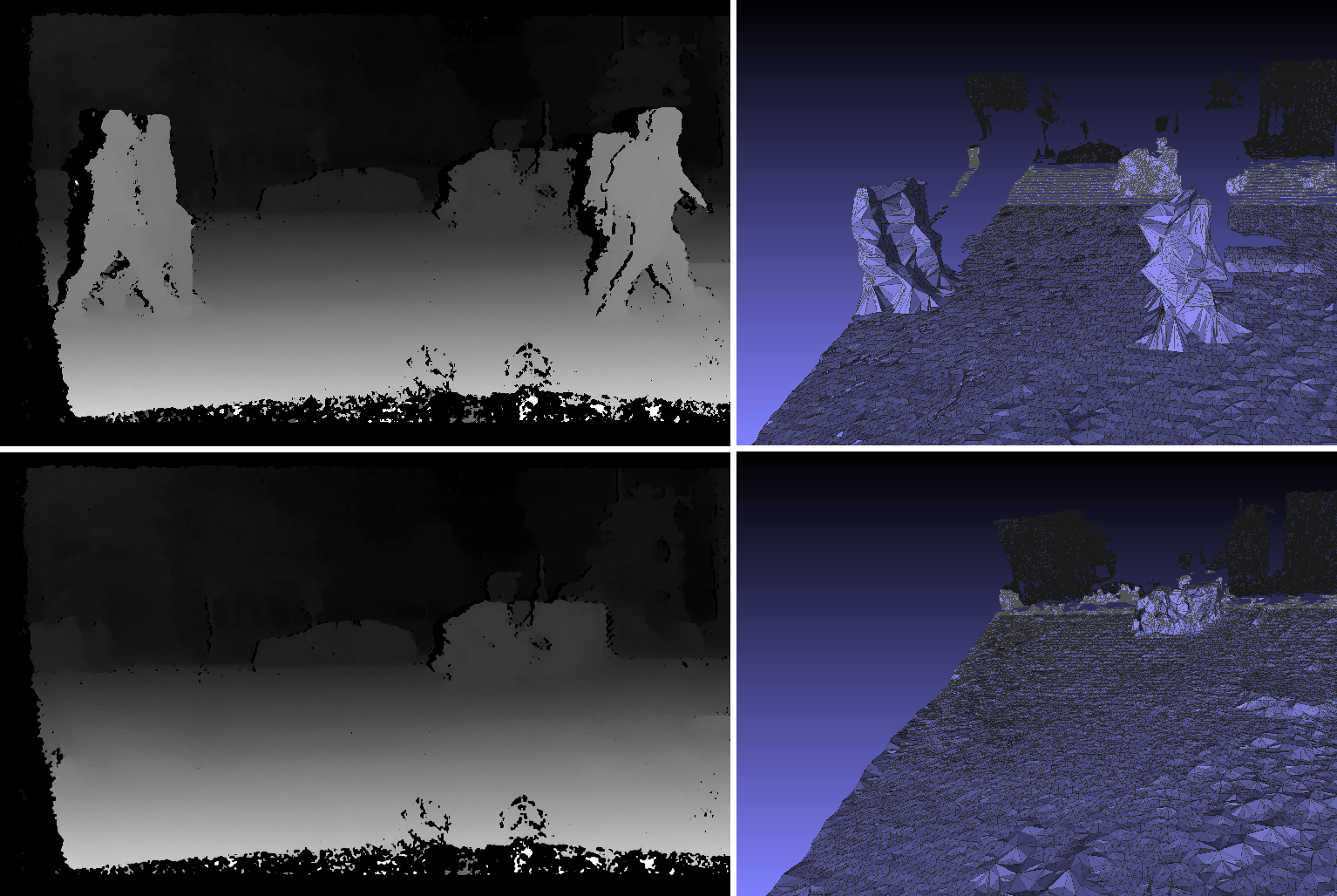

Object Removal from KITTI Images:

Object Removal from CityScapes Images:

License: Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0)

The software is for educational and academic research purposes only.

Please cite this work as:

```bibtex
@INPROCEEDINGS{8814157,
  author={L. P. N. {Matias} and M. {Sons} and J. R. {Souza} and D. F. {Wolf} and C. {Stiller}},
  booktitle={2019 IEEE Intelligent Vehicles Symposium (IV)},
  title={VeIGAN: Vectorial Inpainting Generative Adversarial Network for Depth Maps Object Removal},
  year={2019},
  pages={310-316},
  doi={10.1109/IVS.2019.8814157},
  ISSN={1931-0587},
  month={June},
}
```