- Compiled and edited by: Mahmoud Parsian

- Last updated date: 6/26/2023 (June 26, 2023)

Table of Contents

- Prelude

- Introduction

- Algorithm

- List of Algorithms

- Example of a Simple Algorithm

- Types of Algorithms

- Greedy Algorithm

- Recursive Algorithms

- Algorithm Complexity

- Distributed Algorithm

- Access Control List (ACL)

- Apache Software Foundation (ASF)

- Partitioner

- Aggregation

- Data Aggregation

- Data Governance

- What is Data Visualization?

- Jupyter Notebook

- Analytics

- Data Analytics

- Data Analysis Process

- Data Lake

- Data Science

- Data Science Process

- Anonymization

- API

- Application

- Data Sizes

- Behavioural Analytics

- ODBC

- JDBC

- Big Data

- Big Data Engineering

- Big Data Life Cycle

- Big Data Modeling

- Big Data Platforms and Solutions

- Biometrics

- Data Modelling

- Design Patterns

- Data Set

- Data Type

- Composite Data Type

- Abstract Data Type

- Apache Lucene

- Apache Solr

- Apache Hadoop

- What is the difference between Hadoop and RDBMS?

- Replication

- Replication Factor

- What makes Hadoop Fault tolerant?

- Big Data Formats

- Parquet Files

- Columnar vs. Row Oriented Databases

- Tez

- Apache HBase

- Google Bigtable

- Hadoop Distributed File System - HDFS

- Scalability

- Amazon S3

- Amazon Athena

- Google BigQuery

- Commodity Server

- Fault Tolerance and Data Replication

- High-Performance-Computing (HPC)

- History of MapReduce

- Classic MapReduce Books and Papers

- Apache Spark Books

- MapReduce

- MapReduce Terminology

- MapReduce Architecture

- What is an Example of a Mapper in MapReduce

- What is an Example of a Reducer in MapReduce

- What is an Example of a Combiner in MapReduce

- Partition

- Parallel Computing

- Difference between Concurrency and Parallelism?

- How does MapReduce work?

- Word Count in MapReduce

- Word Count in PySpark

- Finding Average in MapReduce

- What is an Associative Law

- What is a Commutative Law

- Monoid

- Monoids as a Design Principle for Efficient MapReduce Algorithms

- What Does it Mean that "Average of Average is Not an Average"

- Advantages of MapReduce

- What is a MapReduce Job

- Disadvantages of MapReduce

- Job Flow in MapReduce

- Hadoop vs. Spark

- Apache Spark

- Apache Spark Components

- Apache Spark in Large-Scale Sorting

- DAG in Spark

- Spark Concepts and Key Terms

- Spark Driver Program

- Apache Spark Ecosystem

- Spark as a superset of MapReduce

- Why is Spark powerful?

- What is a Spark RDD

- What are Spark Mappers?

- What are Spark Reducers?

- Data Structure

- Difference between Spark Action and Transformation

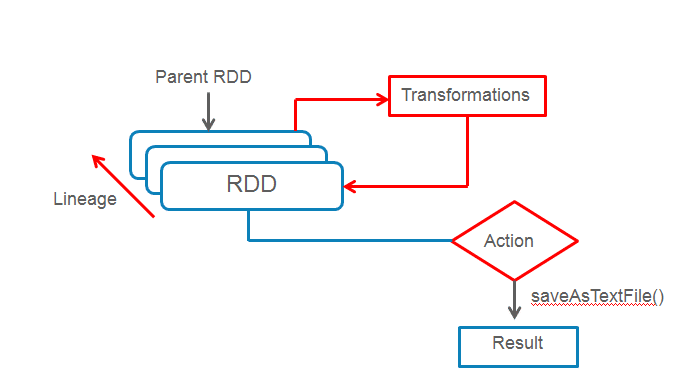

- What is a Lineage in Spark?

- What are Spark operations or functions?

- Spark Transformation

- Spark Programming Model

- What is SparkContext in PySpark

- What is SparkSession in PySpark

- What is Lazy Binding in Spark?

- Difference between reduceByKey() and combineByKey()

- What is an example of combineByKey()

- What is an example of reduceByKey()

- What is an example of groupByKey()

- Difference of groupByKey() and reduceByKey()

- What is a DataFrame?

- What is a Spark DataFrame?

- Spark RDD Example

- Spark DataFrame Example

- Join Operation in MapReduce

- Join Operation in Spark

- Spark Partitioning

- Physical Data Partitioning

- GraphFrames

- Example of a GraphFrame

- Advantages of using Spark

- GraphX

- Cluster

- Cluster Manager

- Master node

- Worker node

- Cluster computing

- Concurrency

- Histogram

- Structured data

- Unstructured data

- Correlation analysis

- Data aggregation tools

- Data analyst

- Database

- Database Management System

- Data cleansing

- Data mining

- Data virtualization

- De-identification

- ETL - Extract, Transform and Load

- Failover

- Graph Databases

- Grid computing

- Key-Value Databases

- (key, value)

- Java

- Python

- Tuples in Python

- Lists in Python

- Difference between Tuples and Lists in Python

- JavaScript

- In-memory

- Latency

- Location data

- Machine Learning

- Internet of Things

- Metadata

- Natural Language Processing (NLP)

- Network analysis

- Workflow

- Schema

- Difference between Tuple and List in Python

- Object Databases

- Pattern Recognition

- Predictive analysis

- Privacy

- Query

- Regression analysis

- Real-time data

- Scripting

- Sentiment Analysis

- SQL

- Time series analysis

- Variability

- What are the 4 Vs of Big Data?

- Variety

- Velocity

- Veracity

- Volume

- XML Databases

- Big Data Scientist

- Classification analysis

- Cloud computing

- Distributed computing

- Clustering analysis

- Database-as-a-Service

- Database Management System (DBMS)

- Distributed File System

- Document Store Databases

- NoSQL

- Scala

- Columnar Database

- Data Analyst

- Data Scientist

- Data Model and Data Modelling

- Data Model

- Hive

- Load Balancing

- Log File

- Parallel Processing

- Server (or node)

- Abstraction layer

- Cloud

- Data Ingestion

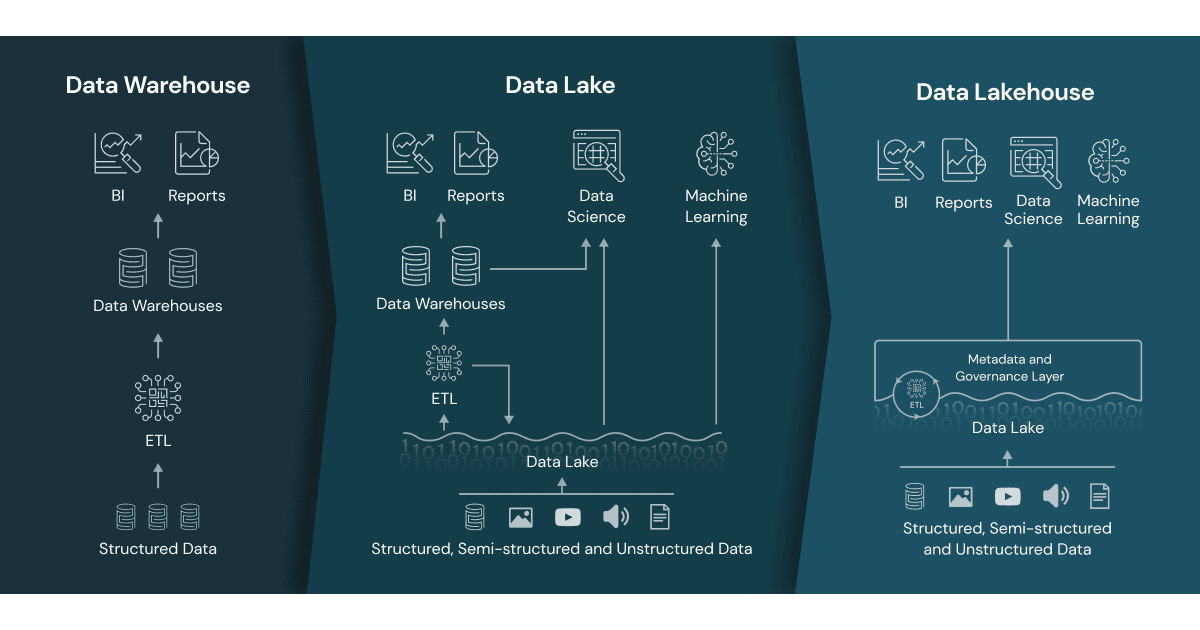

- Data Warehouse

- Open-Source

- Relational Database

- RDBMS

- Input Splits in Hadoop

- Sort and Shuffle function in MapReduce

- NoSQL Database

- PySpark

- Boolean Predicate

- Cartesian Product

- Python Lambda

- Data transformation

- Data Curation

- What is Bioinformatics?

- Software Framework

- Software Library

- Difference between a Library and Framework

- Software Engineering

- Spark Streaming

- Spark SQL

- Spark Programming Languages

- spark-packages.org

- DATA LAKEHOUSE

- Snowflake

- What is synchronization?

- References

- List of Books by Mahmoud Parsian

- This glossary is written for my students taking Big Data Modeling & Analytics at Santa Clara University.

- This is not a regular glossary: it is a detailed glossary for my students to learn the basics of key terms in big data, MapReduce, and PySpark (Python API for Apache Spark).

"... This book will be a great resource for both readers looking to implement existing algorithms in a scalable fashion and readers who are developing new, custom algorithms using Spark. ..." (Foreword by Dr. Matei Zaharia, original creator of Apache Spark)

Big data is a vast and complex field that is constantly evolving. For that reason, it is important to understand both the basic common terms and the more technical vocabulary, so that your understanding can evolve with the field. The intention is to put these definitions and concepts in one single place for ease of exploring, searching, and learning.

A big data environment involves many tools and technologies:

- Data preparation from multiple sources

- Engine for large-scale data analytics (such as Spark)

- ETL processes to analyze and prepare data for query engines

- Relational database systems

- Query engines such as Amazon Athena, Google BigQuery, Snowflake

- much more...

The purpose of this glossary is to shed some light on the fundamental definitions of big data, MapReduce, and Spark. This document is a list of terms, words, concepts, and examples found in or relating to big data, MapReduce, and Spark.

Why are algorithms called algorithms? The word comes from the name of the Persian mathematician Muhammad ibn Musa al-Khwarizmi, who was born around AD 780. The term algorithm is derived from the Latinized form of his name.

- An algorithm is a mathematical formula that can perform certain analyses on data

- An algorithm is a procedure used for solving a problem or performing a computation.

- An algorithm is a set of well-defined steps to solve a problem

- For example, given a set of words, sort them in ascending order

- For example, given a set of text documents, find the frequency of every unique word

- For example, given a set of numbers, find (minimum, maximum) of given numbers

An algorithm is a step-by-step set of operations that can be performed to solve a particular class of problems. The steps may involve calculation, processing, or reasoning. To be effective, an algorithm must reach a solution in finite space and time. As an example, Google uses algorithms extensively to rank page results and autocomplete user queries. An algorithm is typically implemented in a programming language such as Python, Java, or SQL.

In the big data world, an algorithm can be implemented using a compute engine such as MapReduce or Spark.

In The Art of Computer Programming, a famous computer scientist, Donald E. Knuth, defines an algorithm as a set of steps, or rules, with five basic properties:

- Finiteness: an algorithm must always terminate after a finite number of steps. An algorithm must start and stop. The rules an algorithm applies must also conclude in a reasonable amount of time. What "reasonable" is depends on the nature of the algorithm, but in no case can an algorithm take an infinite amount of time to complete its task. Knuth calls this property the finiteness of an algorithm.

- Definiteness: each step of an algorithm must be precisely defined. The actions that an algorithm performs cannot be open to multiple interpretations; each step must be precise and unambiguous. Knuth terms this quality definiteness. An algorithm cannot iterate a "bunch" of times. The number of times must be precisely expressed, for example 2, 1000000, or a randomly chosen number.

- Input: an algorithm has zero or more inputs. An algorithm starts its computation from an initial state. This state may be expressed as input values given to the algorithm before it starts.

- Output: an algorithm has one or more outputs. An algorithm must produce a result with a specific relation to the inputs.

- Effectiveness: an algorithm is also generally expected to be effective. The steps an algorithm takes must be sufficiently simple that they could be expressed "on paper"; these steps must make sense for the quantities used. Knuth terms this property effectiveness.
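The following minimal Python sketch (not from the original text) shows all five properties in one place. It implements Euclid's algorithm for the greatest common divisor, which Knuth himself uses as the first example in The Art of Computer Programming. The function name `gcd` is our own choice; Python's standard library also provides `math.gcd`.

```python
# A sketch of Euclid's algorithm; the name gcd() is our own (hypothetical) choice.
def gcd(m, n):
    """Greatest common divisor of two positive integers m and n."""
    # Input: two positive integers.
    if m <= 0 or n <= 0:
        raise ValueError('m and n must be positive integers')
    # Each step is precise (definiteness) and simple enough to do on paper
    # (effectiveness): divide, keep the remainder, and repeat.
    while n != 0:
        # The new n is m % n, which is smaller than the old n,
        # so the loop must stop (finiteness).
        m, n = n, m % n
    # Output: one value with a specific relation to the inputs.
    return m
```

For example, `gcd(544, 119)` returns 17.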

- Sum of list of numbers

- Average of list of numbers

- Median of list of numbers

- Finding the standard deviation for a set of numbers

- Mode of list of numbers: the most frequent number, that is, the number that occurs the highest number of times.

- Minimum and Maximum of list of numbers

- Cartesian product of two sets

- Given a list of numbers, find (number-of-zeros, number-of-negatives, number-of-positives)

- Word Count: given a set of text documents, find frequency of every unique word in these documents

- Given a set of integer numbers, identify prime and non-prime numbers

- Anagrams Count: given a set of text documents, find frequency of every unique anagram in these documents

- Given users, movies, and ratings (1 to 5), find the Top-10 movies rated higher than 2.

- DNA base count for FASTA and FASTQ files

- Given a list of numbers, sort them in ascending order

- Given a list of strings, sort them in descending order

- Sorting algorithms (BubbleSort, QuickSort, ...)

- T-Test algorithm

- Tree traversal algorithms

- Suffix Tree Algorithms

- Red–Black tree algorithms

- Dijkstra's Algorithm

- Merge Sort

- Quicksort

- Depth First Search

- Breadth-First Search

- Linear Search

- Binary Search

- Minimum Spanning Tree Algorithms

- Bloom Filter

- All-Pairs Shortest Paths: Floyd-Warshall Algorithm

- Kmers for DNA sequences

- Huffman Coding Compression Algorithm

- Bioinformatics Algorithms

- The Knuth-Morris-Pratt algorithm

- Connected Components

- Finding Unique Triangles in a graph
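Several of the simple algorithms listed above need only one pass over the data. The sketch below shows two of them in Python: (number-of-zeros, number-of-negatives, number-of-positives) and (minimum, maximum). The function names are our own choices.

```python
# A sketch of two single-pass algorithms; function names are hypothetical.
def count_signs(numbers):
    """Return (number-of-zeros, number-of-negatives, number-of-positives)."""
    zeros = negatives = positives = 0
    for x in numbers:            # one pass over the input: O(n) time
        if x == 0:
            zeros += 1
        elif x < 0:
            negatives += 1
        else:
            positives += 1
    return (zeros, negatives, positives)

def min_max(numbers):
    """Return (minimum, maximum) of a non-empty list in a single pass."""
    it = iter(numbers)
    try:
        lo = hi = next(it)       # the first element initializes both
    except StopIteration:
        raise ValueError('min_max() requires a non-empty list')
    for x in it:
        if x < lo:
            lo = x
        elif x > hi:
            hi = x
    return (lo, hi)
```

For example, `count_signs([0, -3, 5, 0, 7, -1, 2])` returns `(2, 2, 3)`, and `min_max([5, -2, 9, 0])` returns `(-2, 9)`.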

US Change Algorithm: Convert some amount of money (in cents/pennies) to fewest number of coins. Here we assume that the penny, nickel, dime, and quarter are the circulating coins that we use today.

FACTs on US Coins:

- One dollar = 4 quarters = 100 pennies

- One quarter = 25 pennies

- One dime = 10 pennies

- One nickel = 5 pennies

- Input: An amount of money, $M$, in pennies (as an integer)

- Output: The smallest number of quarters $q$, dimes $d$, nickels $n$, and pennies $p$ whose values add to $M$. The following rules must be satisfied: $25q + 10d + 5n + p = M$, and $(q + d + n + p)$ is as small as possible, i.e., $\min(q + d + n + p)$.

- Algorithm: Greedy algorithm: a greedy algorithm is any algorithm that follows the problem-solving heuristic of making the locally optimal choice at each stage. According to the National Institute of Standards and Technology (NIST), a greedy algorithm is one that always takes the best immediate, or local, solution while finding an answer. Greedy algorithms find the overall, or globally, optimal solution for some optimization problems, but may find less-than-optimal solutions for some instances of other problems.

A greedy algorithm is designed to achieve an optimum solution for a given problem (here, the US Change problem). In the greedy approach, decisions are made from the given solution domain. Being greedy, the algorithm always picks the choice that seems closest to an optimum solution at the current step (here, the largest coin that still fits).

- Algorithm Implementation: The following is a basic US Change Algorithm in Python. In this algorithm, we use the `divmod(arg_1, arg_2)` built-in function, which returns a tuple containing the quotient and the remainder when `arg_1` (dividend) is divided by `arg_2` (divisor). We use a greedy algorithm, which finds the optimal solution for US coins:
  - first, find the number of quarters (x 25),
  - second, find the number of dimes (x 10),
  - third, find the number of nickels (x 5), and
  - finally, find the number of pennies (x 1).

US Change Algorithm in Python:

```python
# M : number of pennies
# returns (q, d, n, p)
# where
#   q = number of quarters
#   d = number of dimes
#   n = number of nickels
#   p = number of pennies
# such that
#   25q + 10d + 5n + p = M and
#   (q + d + n + p) is as small as possible.
#
def change(M):
    # step-1: make sure that M is an integer
    if not isinstance(M, int):
        print('M is not an integer')
        return (0, 0, 0, 0)
    #end-if

    # here: M is an integer type
    # step-2: make sure M > 0
    if M < 1:
        return (0, 0, 0, 0)

    # step-3: first, find quarters as q
    q, p = divmod(M, 25)
    if p == 0:
        return (q, 0, 0, 0)
    # here 0 < p < 25

    # step-4: find dimes
    d, p = divmod(p, 10)
    if p == 0:
        return (q, d, 0, 0)
    # here 0 < p < 10

    # step-5: find nickels and pennies
    n, p = divmod(p, 5)
    # here 0 <= p < 5

    # step-6: return the final result
    return (q, d, n, p)
#end-def
```

Basic testing of the change() function:

```python
>>> change(None)
M is not an integer
(0, 0, 0, 0)
>>> change([1, 2, 3])
M is not an integer
(0, 0, 0, 0)
>>> change('x')
M is not an integer
(0, 0, 0, 0)
>>> change(2.4)
M is not an integer
(0, 0, 0, 0)
>>> change(0)
(0, 0, 0, 0)
>>> change(141)
(5, 1, 1, 1)
>>> change(30)
(1, 0, 1, 0)
>>> change(130)
(5, 0, 1, 0)
>>> change(55089)
(2203, 1, 0, 4)
>>> change(44)
(1, 1, 1, 4)
```

- Sorting algorithms: Bubble Sort, insertion sort, and many more. These algorithms are used to sort data in a particular order.

- Searching algorithms: linear search, binary search, etc. These algorithms are used to find a value or record that the user demands.

- Graph algorithms: these are used to find solutions to problems like finding the shortest path between cities, and real-life problems like the traveling salesman problem.

- Dynamic Programming Algorithms

- Greedy Algorithms: minimization and maximization

Greedy Algorithm is defined as a method for solving optimization problems by taking decisions that result in the most evident and immediate benefit irrespective of the final outcome. It works for cases where minimization or maximization leads to the required solution.

Characteristics of Greedy algorithm: For a problem to be solved using the Greedy approach, it must follow a few major characteristics:

- There is an ordered list of resources (profit, cost, value, etc.)

- The maximum of all the resources (max profit, max value, etc.) is taken first.

- For example, in the fractional knapsack problem, the maximum value/weight is taken first according to available capacity.

Storing files on tape (placing the shortest files first to minimize the mean retrieval time) is an example of a greedy algorithm.
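The fractional knapsack problem mentioned above can be sketched in a few lines of Python. This minimal illustration assumes that items are given as `(value, weight)` pairs with positive weights and that any fraction of an item may be taken. Under those assumptions, the greedy choice by value/weight ratio gives an optimal result.

```python
# A sketch of the greedy fractional knapsack; the function name is hypothetical.
def fractional_knapsack(items, capacity):
    """items: list of (value, weight) pairs with weight > 0."""
    # Greedy choice: consider items in decreasing value/weight ratio.
    ordered = sorted(items, key=lambda vw: vw[0] / vw[1], reverse=True)
    total_value = 0.0
    remaining = capacity
    for value, weight in ordered:
        if remaining <= 0:
            break
        take = min(weight, remaining)    # the whole item, or the fraction that fits
        total_value += value * take / weight
        remaining -= take
    return total_value
```

For example, with items `[(60, 10), (100, 20), (120, 30)]` and capacity 50, the sketch takes the first two items whole and two thirds of the third, for a total value of 240.0. The same greedy rule does not always give optimal answers for the 0/1 knapsack problem, where items cannot be split.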

In computer science, recursion is a method of solving a computational problem where the solution depends on solutions to smaller instances of the same problem. Recursion solves such recursive problems by using functions that call themselves from within their own code.

A [recursive algorithm](https://www.cs.odu.edu/~toida/nerzic/content/recursive_alg/rec_alg.html) is an algorithm which calls itself with "smaller (or simpler)" input values, and which obtains the result for the current input by applying simple operations to the returned value for the smaller (or simpler) input. More generally, if a problem can be solved utilizing solutions to smaller versions of the same problem, and the smaller versions reduce to easily solvable cases, then one can use a recursive algorithm to solve that problem. For example, the elements of a recursively defined set, or the value of a recursively defined function, can be obtained by a recursive algorithm.

The classic example of recursive programming involves computing factorials. The factorial of a number is computed as that number times all of the numbers below it, down to and including 1. For example, factorial(5) is the same as 5 * 4 * 3 * 2 * 1, and factorial(3) is 3 * 2 * 1.

An interesting property of a factorial is that the factorial of a number is equal to the starting number multiplied by the factorial of the number immediately below it. For example, factorial(5) is the same as 5 * factorial(4). You could almost write the factorial function simply as this:

factorial(n) = 1 for n = 0 (base definition)

factorial(n) = n * factorial(n - 1) for n > 0 (general definition)

Factorial function (recursive) can be expressed in pseudo-code (written here in C syntax):

```c
// assumption: n >= 0
int factorial(int n) {
    if (n == 0) {
        return 1;                     // base definition
    }
    else {
        return n * factorial(n - 1);  // general definition
    }
}
```

Factorial function (recursive) in Python:

```python
# assumption: n >= 0
def recursive_factorial(n):
    if n == 0:
        return 1
    else:
        return n * recursive_factorial(n-1)
    #end-if
#end-def
```

Example of Recursive Algorithms:

- Factorial of a Number

- Greatest Common Divisor

- Fibonacci Numbers

- Recursive Binary Search

- Linked List

- Reversing a String

- QuickSort Algorithm

- Binary Tree Algorithms

- Towers of Hanoi

- Inorder/Preorder/Postorder Tree Traversals

- DFS of Graph

- File system traversal
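To illustrate one item in this list, here is a minimal recursive binary search in Python. The function name and the convention of returning -1 when the target is missing are our own choices. Each call works on a range half the size of the previous one, and an empty range is the base case.

```python
# A sketch of recursive binary search; the function name is hypothetical.
def binary_search(items, target, lo=0, hi=None):
    """Return an index of target in the sorted list items, or -1 if absent."""
    if hi is None:
        hi = len(items) - 1
    # Base case: an empty range means target is not present.
    if lo > hi:
        return -1
    mid = (lo + hi) // 2
    if items[mid] == target:
        return mid
    # Recursive case: search only the half that can still contain target.
    if items[mid] < target:
        return binary_search(items, target, mid + 1, hi)
    return binary_search(items, target, lo, mid - 1)
```

For example, `binary_search([1, 3, 5, 7, 9, 11, 13, 15], 11)` returns 5, and searching the same list for 4 returns -1.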

Recursive definitions are often used to model the structure of expressions and statements in programming languages. Language designers often express grammars in a syntax such as Backus–Naur form. Here is such a grammar for a simple language of arithmetic expressions (denoted as an `<expr>`) with multiplication and addition:

```
<expr> ::= <number>
         | (<expr> * <expr>)
         | (<expr> + <expr>)
```
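Because the grammar is recursive, a program that reads it can be recursive too. The following is a minimal recursive-descent evaluator in Python for exactly this grammar. It assumes that `<number>` means a non-negative integer and that spaces may be ignored. The function names `evaluate` and `_expr` are hypothetical.

```python
# A sketch of a recursive-descent evaluator; evaluate() and _expr() are hypothetical names.
def evaluate(text):
    """Evaluate an <expr> such as '((2 + 3) * 4)'; spaces are ignored."""
    s = text.replace(' ', '')
    value, pos = _expr(s, 0)
    if pos != len(s):
        raise ValueError('unexpected trailing input at position %d' % pos)
    return value

def _expr(s, i):
    """Parse one <expr> starting at index i; return (value, next_index)."""
    # Recursive rules: (<expr> * <expr>) and (<expr> + <expr>)
    if i < len(s) and s[i] == '(':
        left, i = _expr(s, i + 1)
        if i >= len(s) or s[i] not in '*+':
            raise ValueError('expected * or + at position %d' % i)
        op = s[i]
        right, i = _expr(s, i + 1)
        if i >= len(s) or s[i] != ')':
            raise ValueError("expected ')' at position %d" % i)
        return (left * right if op == '*' else left + right), i + 1
    # Base rule: <number>
    j = i
    while j < len(s) and s[j].isdigit():
        j += 1
    if j == i:
        raise ValueError('expected a number at position %d' % i)
    return int(s[i:j]), j
```

For example, `evaluate('((2 + 3) * 4)')` returns 20. Each nested pair of parentheses triggers one more recursive call.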

An algorithm is analyzed using time complexity and space complexity. Writing an efficient algorithm helps minimize the time (and memory) needed to process the logic. An algorithm A is judged on two parameters for an input of size n:

- Time Complexity: the time taken by the algorithm to solve the problem. It is estimated by counting loop iterations, the number of comparisons, etc. In computer science, time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm.

- Space Complexity: the space taken by the algorithm to solve the problem. It includes the space used by necessary input variables and any extra space (excluding the space taken by inputs) that is used by the algorithm. For example, if we use a hash table (a kind of data structure), we need an array to store its values. This extra space counts towards the space complexity of the algorithm and is known as auxiliary space.
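The trade-off becomes concrete with two Python functions that answer the same question: "does this list contain a duplicate?" The function names are our own. The first uses no auxiliary space but compares every pair, so its time complexity is O(n^2). The second spends O(n) auxiliary space on a hash table (a Python `set`) to bring the expected time down to O(n).

```python
# A sketch of a time/space trade-off; both function names are hypothetical.
def has_duplicates_quadratic(items):
    """O(n^2) time, O(1) auxiliary space: compare every pair."""
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            if items[i] == items[j]:
                return True
    return False

def has_duplicates_hashing(items):
    """O(n) expected time, O(n) auxiliary space: remember what was seen."""
    seen = set()                 # this hash table is the auxiliary space
    for x in items:
        if x in seen:
            return True
        seen.add(x)
    return False
```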

A distributed algorithm is an algorithm designed to run on computer hardware constructed from interconnected processors. Distributed algorithms are used in different application areas of distributed computing, such as DNA analysis, telecommunications, scientific computing, distributed information processing, and real-time process control. Standard problems solved by distributed algorithms include leader election, consensus, distributed search, spanning tree generation, mutual exclusion, finding association of genes in DNA, and resource allocation. Distributed algorithms run in parallel/concurrent environments.

In implementing distributed algorithms, you have to make sure that your aggregations and reductions are semantically correct regardless of the number of partitions of your data, since these are executed partition by partition. For example, you need to remember that the average of an average is not an average (unless every partition happens to have the same number of elements).
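A small pure-Python example shows why, using made-up data and no Spark. With two partitions of unequal size, averaging the per-partition averages gives the wrong answer. Reducing each partition to a `(sum, count)` pair and then combining the pairs gives the correct one.

```python
# A sketch with hypothetical data: two partitions of unequal size.
def average(xs):
    return sum(xs) / len(xs)

partitions = [[1, 2, 3], [10]]

# Wrong: average of the per-partition averages, (2.0 + 10.0) / 2
avg_of_avgs = average([average(p) for p in partitions])

# Right: reduce each partition to a (sum, count) pair, then combine the pairs.
pairs = [(sum(p), len(p)) for p in partitions]    # [(6, 3), (10, 1)]
total = sum(s for s, _ in pairs)                  # 16
count = sum(c for _, c in pairs)                  # 4
true_avg = total / count
```

Here `avg_of_avgs` is 6.0 but `true_avg` is 4.0, the real average of `[1, 2, 3, 10]`. Because `(sum, count)` pairs can be added element-wise in any grouping and order, the same combination step works for any number of partitions.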

Examples of systems running distributed algorithms:

- Apache Spark can be used to implement and run distributed algorithms.

- MapReduce/Hadoop can be used to implement and run distributed algorithms.

- Amazon Athena

- Google BigQuery

- Snowflake

In a nutshell, in computer security, an access-control-list (ACL) is a list of permissions associated with a system resource (object).

In a file system, an ACL is a list of permissions associated with an object in a computer file system. An ACL specifies which users or processes are allowed to access an object, and what operations can be performed on it.
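To illustrate the data structure, here is an ACL modeled in Python as a mapping from each resource to the operations each user may perform on it. This is a rough sketch, not the format used by any real file system. The resource paths, user names, the `is_allowed` helper, and the default-deny rule are all assumptions made for this example.

```python
# A sketch of an ACL; all names and paths here are hypothetical.
# resource -> {principal: set of allowed operations}
acl = {
    '/data/sales.csv': {'alice': {'read', 'write'}, 'bob': {'read'}},
    '/data/hr.csv': {'carol': {'read'}},
}

def is_allowed(acl, principal, resource, operation):
    """Return True if the ACL grants `operation` on `resource` to `principal`."""
    # Assumed default-deny rule: anything not explicitly granted is refused.
    return operation in acl.get(resource, {}).get(principal, set())
```

For example, `is_allowed(acl, 'bob', '/data/sales.csv', 'read')` is True, while the same call with `'write'` is False.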

ASF is a non-profit corporation that supports various open-source software products, including Apache Hadoop, Apache Spark, and Apache Maven. Apache projects are developed by teams of collaborators and protected by an ASF license that provides legal protection to volunteers who work on Apache products and protects the Apache brand name.

Apache projects are characterized by a collaborative, consensus-based development process and an open and pragmatic software license. Each project is managed by a self-selected team of technical experts who are active contributors to the project.

A partitioner is a program that distributes the data across the cluster. Types of partitioners include:

- Hash Partitioner

- Murmur3 Partitioner

- Random Partitioner

- Order Preserving Partitioner
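The idea behind a hash partitioner can be sketched in Python. Compute a hash of each record's key and take it modulo the number of partitions, so that all records with the same key land in the same partition. This is not Spark's or Cassandra's implementation; real systems use their own hash functions. `zlib.crc32` is used here only as a stand-in that is stable across Python runs, because the built-in `hash()` of a string is randomized per process.

```python
import zlib

# A sketch of hash partitioning; function names are hypothetical.
def hash_partition(key, num_partitions):
    """Map a key to a partition index in [0, num_partitions)."""
    h = zlib.crc32(str(key).encode('utf-8'))   # stable stand-in hash
    return h % num_partitions

def partition_records(records, num_partitions):
    """Group (key, value) records so that equal keys land in the same partition."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[hash_partition(key, num_partitions)].append((key, value))
    return partitions
```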

For example, a Spark RDD of 480,000,000,000 elements might be partitioned into 60,000 chunks (partitions), where each chunk/partition will have about 8,000,000 elements:

480,000,000,000 = 60,000 x 8,000,000

One of the main reasons for data partitioning is to process many small partitions in parallel (at the same time) to reduce the overall data processing time.

In Apache Spark, your data can be represented as an RDD. To enable parallelism of data transformations, Spark splits your immutable RDD into chunks called partitions. Partitions are the parts of an RDD that allow Spark to execute in parallel on a cluster of nodes. Each partition is a logical division of the data, and the partitions are distributed across the nodes of the cluster. In Spark, all input, intermediate, and output data is represented as partitions, and one task processes one partition at a time. An RDD is a group of partitions.

In the following figure, the Spark RDD has 8 elements, {1, 3, 5, 7, 9, 11, 13, 15}, and 4 partitions:

```
RDD = { Partition-1, Partition-2, Partition-3, Partition-4 }
Partition-1: { 1, 3 }
Partition-2: { 5, 7 }
Partition-3: { 9, 11 }
Partition-4: { 13, 15 }
```

The main purpose of partitions is to enable independent and parallel execution of transformations (such as mappers, filters, and reducers). Partitions can be executed on different servers/nodes.
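The following pure-Python sketch only mimics the idea and is not how Spark is implemented. It splits the 8-element list above into 4 contiguous partitions, transforms each partition independently, and then combines the partial results. The helper name `split_into_partitions` is hypothetical. In PySpark itself, something like `sc.parallelize(rdd_data, 4).glom().collect()` can be used to inspect the contents of each partition.

```python
# A sketch that mimics partitioned processing; the helper name is hypothetical.
def split_into_partitions(data, num_partitions):
    """Split a list into contiguous, roughly equal chunks."""
    n = len(data)
    return [data[i * n // num_partitions:(i + 1) * n // num_partitions]
            for i in range(num_partitions)]

rdd_data = [1, 3, 5, 7, 9, 11, 13, 15]
partitions = split_into_partitions(rdd_data, 4)
# partitions == [[1, 3], [5, 7], [9, 11], [13, 15]]

# Each partition is processed on its own (in Spark, possibly on different nodes):
partial_sums = [sum(x * 10 for x in p) for p in partitions]   # map, then reduce locally
total = sum(partial_sums)                                      # combine partial results
```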

- A process of searching, gathering, and presenting data.

- Data aggregation refers to the process of collecting data and presenting it in a summarised format. The data can be gathered from multiple sources to be combined for a summary.

Data aggregation refers to the collection of data from multiple sources to bring all the data together into a common repository for the purpose of reporting and/or analysis.

- Data aggregation is the process of compiling typically large amounts of information from a given database and organizing it into a more consumable and comprehensive medium.

- For example, read customer data from a data source, read product data from another data source, then write algorithms to find the average age of customers by product.

- For example, read user data from a data source, read movies from another data source, read ratings from a third data source, and finally find the median rating of movies rated last year.
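The first example above, the average age of customers by product, can be sketched in plain Python. The in-memory dictionaries stand in for the two data sources and are hypothetical. In this sketch, each customer is counted once per product.

```python
from collections import defaultdict

# Hypothetical data from two different sources.
customers = {'c1': 25, 'c2': 40, 'c3': 35}            # customer_id -> age
purchases = [('c1', 'laptop'), ('c2', 'laptop'),      # (customer_id, product)
             ('c3', 'phone'), ('c1', 'phone')]

def average_age_by_product(customers, purchases):
    """Hypothetical helper: average customer age per product."""
    sums = defaultdict(lambda: [0, 0])       # product -> [sum_of_ages, count]
    for customer_id, product in set(purchases):   # set(): one count per customer/product
        age = customers.get(customer_id)
        if age is None:                      # skip purchases with no matching customer
            continue
        sums[product][0] += age
        sums[product][1] += 1
    return {product: s / c for product, (s, c) in sums.items()}
```

For this data, the result is `{'laptop': 32.5, 'phone': 30.0}`.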

What is Data Aggregation? Data aggregators summarize data from multiple data sources. They provide capabilities for multiple aggregate measurements, such as sum, median, average and counting.

In a nutshell, we can say that data aggregation is the process of bringing together data from multiple sources and consolidating it in a storage solution for data analysis and reporting.

What does data governance mean? Data governance means setting internal standards—data policies—that apply to how data is gathered, stored, processed, and disposed of. It governs who can access what kinds of data and what kinds of data are under governance.

According to Bill Inmon (father of Data Warehousing): "Data governance is about providing oversight to ensure the data brings value and supports the business strategy, which is the high-level business plan to achieve the business goals. Data governance is important to protect the needs of all stakeholders." Data privacy and security should be considered in every phase of the data lifecycle.

According to Snowflake, the following are the 5 critical components of successful Data Governance:

- Data Architecture

- Data Quality

- Data Management

- Data Security

- Data Compliance

Data visualization is the representation of data through use of common graphics, such as charts, plots, infographics, and even animations. These visual displays of information communicate complex data relationships and data-driven insights in a way that is easy to understand.

Data visualization can be utilized for a variety of purposes, and it is important to note that it is not reserved only for data teams. Management also leverages it to convey organizational structure and hierarchy, while data analysts and data scientists use it to discover and explain patterns and trends.

The Jupyter Notebook is a web application for creating and sharing computational documents. It offers a simple, streamlined, document-centric experience.

Jupyter Notebook is a popular application that enables you to edit, run, and share Python code in a web view. It allows you to modify and re-execute parts of your code in a very flexible way. That is why Jupyter is a great tool to test and prototype programs.

There are two ways to make PySpark available in a Jupyter Notebook:

- Configure the PySpark driver to use Jupyter Notebook: running pyspark will then automatically open a Jupyter Notebook

- Load a regular Jupyter Notebook and load PySpark using the findspark package

The following shell script enables you to run PySpark in Jupyter (you need to update your script accordingly):

```shell
# define your Python PATH
export PATH=/home/mparsian/Library/Python/3.10/bin:$PATH

# define your Spark installation directory
export SPARK_HOME=/home/mparsian/spark-3.3.1

# update your PATH for Spark
export PATH=$SPARK_HOME/bin:$PATH

# define Python for PySpark Driver
export PYSPARK_DRIVER_PYTHON=jupyter

# You want to run it as a notebook
export PYSPARK_DRIVER_PYTHON_OPTS='notebook'

# invoke PySpark in Jupyter
$SPARK_HOME/bin/pyspark
```

- The discovery of insights in data: finding interesting patterns in data

- For example, given a graph, find (identify) all of the triangles

- For example, given DNA data, find genes which are associated with each other

- For example, given DNA data, find rare variants
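The triangle example above can be sketched in Python for a small, in-memory undirected graph. This is a single-machine sketch; finding triangles in a big graph would use a distributed engine such as Spark. To report each triangle exactly once, the sketch emits it only as (a, b, c) with a < b < c.

```python
from collections import defaultdict

# A sketch of triangle finding; the function name is hypothetical.
def find_triangles(edges):
    """Return the sorted list of unique triangles (a, b, c), a < b < c."""
    neighbors = defaultdict(set)
    for u, v in edges:
        if u != v:                       # ignore self-loops
            neighbors[u].add(v)
            neighbors[v].add(u)
    triangles = set()
    for u in neighbors:
        for v in neighbors[u]:
            if u < v:
                # A common neighbor w > v closes the triangle; this reports it once.
                for w in neighbors[u] & neighbors[v]:
                    if w > v:
                        triangles.add((u, v, w))
    return sorted(triangles)
```

For example, the edges `[(1, 2), (2, 3), (1, 3), (3, 4), (2, 4)]` contain the two triangles `(1, 2, 3)` and `(2, 3, 4)`.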

What is Data Analytics? Data analytics helps individuals and organizations make sense of data. Data analysts typically analyze raw data for insights, patterns, and trends.

According to NIST: "analytics is the systematic processing and manipulation of data to uncover patterns, relationships between data, historical trends and attempts at predictions of future behaviors and events."


Data analytics converts raw data into actionable insights. It includes a range of tools, technologies, and processes used to find trends and solve problems by using data. Data analytics can shape business processes, improve decision making, and foster business growth.

Data Analytics is the process of examining large data sets to uncover hidden patterns, unknown correlations, trends, customer preferences and other useful business insights. The end result might be a report, an indication of status or an action taken automatically based on the information received. Businesses typically use the following types of analytics:

- Behavioral Analytics: using data about people’s behavior to understand intent and predict future actions.

- Descriptive Analytics: condensing big numbers into smaller pieces of information. This is similar to summarizing the data story: rather than listing every single number and detail, there is a general thrust and narrative.

- Diagnostic Analytics: reviewing past performance to determine what happened and why. Businesses use this type of analytics to complete root cause analysis.

- Predictive Analytics: using statistical functions on one or more data sets to predict trends or future events. In big data predictive analytics, data scientists may use advanced techniques like data mining, machine learning, and advanced statistical processes to study recent and historical data to make predictions about the future. It can be used to forecast weather, or to predict what people are likely to buy, visit, or do, or how they may behave in the near future.

- Prescriptive Analytics: prescriptive analytics builds on predictive analytics by including actions, making data-driven decisions by looking at the impacts of various actions.

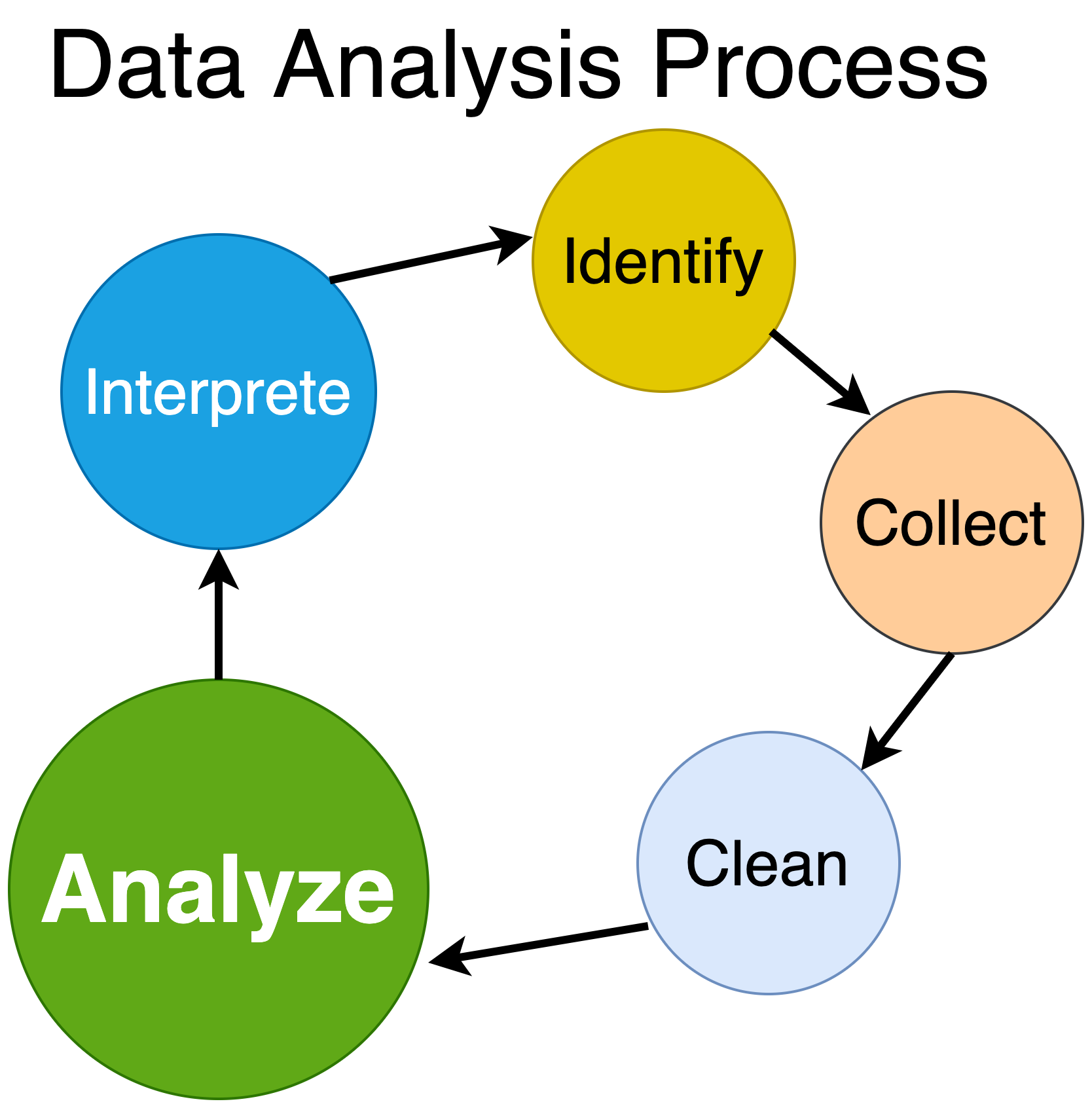

The data analysis process consists of 5 key stages.

- Identify: you first need to identify why you need this data and what its purpose is in the enterprise.

- Collect: this is the stage where you start collecting the needed data. Here, you define which sources of information you will use and how you will use them. The data can come from different kinds of sources, such as internal or external sources, and you need to identify where the data will reside.

- Clean: once you have the necessary data, it is time to clean it and leave it ready for analysis. Not all the data you collect will be useful. When collecting big amounts of information in different formats, it is very likely that you will find yourself with duplicate or badly formatted data.

- Analyze: with the help of various tools and techniques such as statistical analysis, regressions, neural networks, text analysis, DNA analysis, and more, you can start analyzing and manipulating your data to extract relevant conclusions. At this stage, you find trends, correlations, variations, and patterns that can help you answer the questions you first thought of in the identify stage.

- Interpret: last but not least, you have one of the most important steps: it is time to interpret your results. This stage is where the researcher comes up with courses of action based on the findings.

7 Fundamental Steps to Complete a Data Analytics Project:

A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale.

A storage repository that holds a vast amount of raw data in its native format until it's required. Every data element within a data lake is assigned a unique identifier and set of extended metadata tags. When a business question arises, users can access the data lake to retrieve any relevant, supporting data.

Data science is really the fusion of three disciplines: computer science, mathematics, and business.

Data Science is the field of applying advanced analytics techniques and scientific principles to extract valuable information from data. Data science typically involves the use of statistics, data visualization and mining, computer programming, machine learning and database engineering to solve complex problems.

Data science is the methodology for the synthesis of useful knowledge directly from data through a process of discovery or of hypothesis formulation and hypothesis testing. Data science is tightly linked to the analysis of Big Data, and refers to the management and execution of the end-to-end data processes, including the behaviors of the components of the data system. As such, data science includes all of analytics as a step in the process. Data science contains different approaches to leveraging data to solve mission needs. While the term data science can be understood as the activities in any analytics pipeline that produces knowledge from data, the term is typically used in the context of Big Data.

According to NIST, Data Science is focused on the end-to-end data processing life cycle of Big Data and related activities. The data science life cycle encompasses the data analytics life cycle (as described below) plus many more activities including policy and regulation, governance, operations, data security, master data management, meta-data management, and retention/destruction. The data analytics life cycle is focused on the processing of Big Data, from data capture to use of the analysis. The data analytics life cycle is the set of processes that is guided by the organizational need to transform raw data into actionable knowledge, which includes data collection, preparation, analytics, visualization, and access.

The end-to-end data science life cycle consists of five fundamental steps:

- Capture: gathering and storing data, typically in its original form (i.e., raw data);

- Preparation: processes that convert raw data into cleaned, organized information;

- Analysis: techniques that produce synthesized knowledge from organized information;

- Visualization: presentation of data or analytic results in a way that communicates to others;

- Action: processes that use the synthesized knowledge to generate value for the enterprise.

- Making data anonymous: removing all data points that could identify a person.

- For example, replacing social security numbers with fake 18-digit numbers.

- For example, replacing a patient's name with a fake ID.
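The two examples above can be combined in one function. The record fields (`name`, `ssn`, `diagnosis`) and the `P0001`-style ID format are hypothetical, and all patient data in the example is made up. The name-to-ID mapping exists only inside the function and is discarded when it returns. Removing direct identifiers alone may not be enough in practice, because other fields (quasi-identifiers such as age or zip code) can still allow re-identification.

```python
import secrets


def fake_digits(n: int = 18) -> str:
    """Return a random string of n digits to stand in for a real identifier."""
    return "".join(secrets.choice("0123456789") for _ in range(n))


def anonymize(records: list) -> list:
    """Replace each patient name with a fake ID and each SSN with fake digits."""
    name_to_id = {}  # local only: discarded when the function returns
    result = []
    for rec in records:
        name = rec["name"]
        if name not in name_to_id:
            name_to_id[name] = f"P{len(name_to_id) + 1:04d}"
        result.append({**rec, "name": name_to_id[name], "ssn": fake_digits(18)})
    return result


# Made-up sample records
patients = [
    {"name": "Alice Smith", "ssn": "123-45-6789", "diagnosis": "flu"},
    {"name": "Bob Jones", "ssn": "987-65-4321", "diagnosis": "cold"},
    {"name": "Alice Smith", "ssn": "123-45-6789", "diagnosis": "cough"},
]
print(anonymize(patients))
```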

- An Application Programming Interface (API) is a set of function definitions, protocols, and tools for building application software. What are APIs used for? APIs are used to abstract the complexity of back-end logic in a variety of software systems.

- For example, the MapReduce paradigm provides the following functions:
  - mapper: map()
  - reducer: reduce()
  - combiner: combine() [optional]

- For example, Apache Spark provides:
  - RDDs and DataFrames as data abstractions
  - mappers: map(), flatMap(), mapPartitions()
  - filters: filter()
  - reducers: groupByKey(), reduceByKey(), combineByKey()
  - SQL access to DataFrames

- For example, the Google Maps API gives developers programmatic access to geographic data: an application can search for nearby restaurants, niche shops, and anything else within a relative distance of a location.

- SQL API:
  - CREATE TABLE
  - DROP TABLE
  - INSERT row(s)
  - DELETE row(s)
  - UPDATE row(s)
  - SELECT
  - SELECT ... GROUP BY
  - SELECT ... ORDER BY
  - JOIN
  - ...
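Most of the SQL API operations listed above can be tried locally with Python's built-in `sqlite3` module. SQLite is used here only because it needs no server. The `sales` table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
cur = conn.cursor()

cur.execute("CREATE TABLE sales (region TEXT, amount REAL)")
cur.executemany("INSERT INTO sales VALUES (?, ?)",
                [("east", 10.0), ("west", 5.0), ("east", 2.5), ("north", 1.0)])
cur.execute("UPDATE sales SET amount = amount * 2 WHERE region = 'west'")
cur.execute("DELETE FROM sales WHERE region = 'north'")

# SELECT ... GROUP BY ... ORDER BY
rows = cur.execute(
    "SELECT region, SUM(amount) AS total FROM sales GROUP BY region ORDER BY total DESC"
).fetchall()
print(rows)  # [('east', 12.5), ('west', 10.0)]

cur.execute("DROP TABLE sales")
conn.close()
```

The same statements work, with minor dialect differences, against other relational databases through their own drivers.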

- An application is computer software that enables a computer to perform a certain task.

- For example, a payroll application, which issues monthly checks to employees.

- For example, a MapReduce application, which identifies and eliminates duplicate records.

- For example, a Spark application, which finds close and related communities in a given graph.

- For example, a Spark application, which finds rare variants in DNA samples.

| Short name | Full name | Description |
|---|---|---|
| Bit | Bit | 0 or 1 |
| B | Byte | 8 bits (00000000 .. 11111111): can represent 256 combinations (0 to 255) |
| KB | Kilobyte | 1,024 bytes = 2^10 bytes ~ 1000 bytes |
| MB | Megabyte | 1,024 x 1,024 bytes = 1,048,576 bytes ~ 1000 KB |
| GB | Gigabyte | 1,024 x 1,024 x 1,024 bytes = 1,073,741,824 bytes ~ 1000 MB |
| TB | Terabyte | 1,024 x 1,024 x 1,024 x 1,024 bytes = 1,099,511,627,776 bytes ~ 1000 GB |
| PB | Petabyte | 1,024 x 1,024 x 1,024 x 1,024 x 1,024 bytes = 1,125,899,906,842,624 bytes ~ 1000 TB |
| EB | Exabyte | 1,152,921,504,606,846,976 bytes (= 2^60) ~ 1000 PB |
| ZB | Zettabyte | 1,180,591,620,717,411,303,424 bytes (= 2^70) ~ 1000 EB |

~ denotes "about"
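The "~ 1000" approximations hold because 1024 is close to 1000. Strictly speaking, the IEC names these binary units KiB, MiB, GiB, and so on, while SI usage defines KB as 1000 bytes. The code below computes the exact base-1024 sizes and converts a byte count to a readable string. The unit list mirrors the table, and the function names are illustrative.

```python
# Binary (base-1024) units, in the same order as the table above
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]


def unit_size(unit: str) -> int:
    """Number of bytes in one unit, e.g. unit_size('KB') == 1024."""
    return 1024 ** UNITS.index(unit)


def human_readable(num_bytes: int) -> str:
    """Express a byte count in the first unit where the value drops below 1024."""
    value = float(num_bytes)
    for unit in UNITS:
        if value < 1024 or unit == UNITS[-1]:  # ZB caps the scale
            return f"{value:.1f} {unit}"
        value /= 1024
    raise AssertionError("unreachable")


print(unit_size("TB"))                 # 1099511627776
print(human_readable(1900 * 1024**2))  # 1.9 GB
```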

Behavioural Analytics is a kind of analytics that informs about the how, why, and what, instead of just the who and when. It looks at patterns of human behaviour in the data.

Open Database Connectivity (ODBC) is a standard application programming interface (API) for accessing database management systems (DBMS). The designers of ODBC aimed to make it independent of database systems and operating systems. An application written using ODBC can be ported to other platforms, both on the client and server side, with few changes to the data access code.

For example, you can use Open Database Connectivity (ODBC) to connect a Microsoft Access database to an external data source such as Microsoft SQL Server.

Java database connectivity (JDBC) is the specification of a standard application programming interface (API) that allows Java programs to access database management systems. The JDBC API consists of a set of interfaces and classes written in the Java programming language.

Using these standard interfaces and classes, programmers can write applications that connect to databases, send queries written in structured query language (SQL), and process the results.

Since JDBC is a standard specification, a Java program that uses the JDBC API can connect to any database management system (DBMS), as long as a JDBC driver exists for that particular DBMS.

According to Gartner: "Big Data is high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation."

Big Data consists of extensive datasets -- primarily in the characteristics of volume, velocity, variety, and/or variability -- that require a scalable architecture for efficient storage, manipulation, and analysis.

Big data is an umbrella term for any collection of data sets so large or complex that it becomes difficult to process them using traditional data-processing applications. In a nutshell, big data refers to data that is so large, fast or complex that it's difficult or impossible to process using traditional methods. Also, big data deals with accessing and storing large amounts of information for analytics.

So, what is Big Data? Big Data is a large data set with increasing volume, variety and velocity.

Big data solutions may have many components (to mention some):

- Distributed File System (such as HDFS, Amazon S3)

- Analytics Engine (such as Spark)

- Query Engine (such as Snowflake, Amazon Athena, Google BigQuery, ...)

- ETL Support

- Relational database systems

- Search engine (such as Apache Solr, Apache Lucene)

- ...

Big Data engineering is the discipline for engineering scalable systems for data-intensive processing.

Data collected from different sources is in a raw format, i.e., usually in a form that is not fit for data analysis. The idea behind Big Data Engineering is not only to collect Big Data but also to transform and store it in a dedicated database that can support insight generation or the creation of machine learning based solutions.

What is the Data Engineering Lifecycle? The data engineering lifecycle comprises stages that turn raw data ingredients into a useful end product, ready for consumption by analysts, data scientists, ML engineers, and others.

Data engineers are the force behind data engineering, which focuses on gathering information from disparate sources, transforming the data, devising schemas, storing data, and managing its flow.

So how is data actually processed when dealing with a big data system? While approaches to implementation differ, there are some commonalities in the strategies and software that we can talk about generally. While the steps presented below might not be true in all cases, they are widely used.

The general categories of activities (typically using cluster computing services) involved in big data processing are:

- Understanding data requirements

- Ingesting data into the system

- Persisting the data in storage

- Computing and Analyzing data

- Visualizing the results

What is Big Data Modeling? Data modeling is the method of constructing a specification for the storage of data in a database. It is a theoretical representation of data objects and relationships between them. The process of formulating data in a structured format in an information system is known as data modeling.

In a practical sense, Big Data Modeling involves:

- Queries: understand the queries and algorithms that need to be implemented using big data.

- Formalizing Queries: understand how the big data will be accessed and what the parameters of these queries are. This is a very important step: the queries must be understood before designing a proper data model.

- Data Model: once the queries are understood, design a data model that optimally satisfies them.

- Data Analytics Engine: the engine on which distributed algorithms and ETL will run; for example, Apache Spark.

- ETL Processes: design and implement ETL processes to build big data in a suitable format and environment.

- Scalability: scalability needs to be understood and addressed at every level.

- Apache Hadoop, which implements the MapReduce paradigm. Hadoop is slow and very complex (it does not take advantage of RAM/memory). Hadoop's analytics API is limited to map-then-reduce functions.

- Apache Spark, which implements a superset of the MapReduce paradigm: it is fast, has a very simple and powerful API, and can run up to 100 times faster than Hadoop MapReduce for some in-memory workloads. Spark takes advantage of memory and embraces in-memory computing. Spark can be used for ETL and for implementing many types of distributed algorithms.

- Apache Tez

- Amazon Athena (mainly used as a query engine)

- Snowflake (mainly used as a query engine)

- Google BigQuery: a serverless, multicloud data warehouse designed to help you turn big data into valuable business insights

According to a dictionary definition, biometrics is the automated recognition of individuals by means of unique physical characteristics, typically for the purposes of security. Biometrics refers to the use of data and technology to identify people by one or more of their physical traits (for example, face recognition).

While there are many types of biometrics for authentication, the five most common types of biometric identifiers are: fingerprints, facial, voice, iris, and palm or finger vein patterns.

Data modeling is the process of creating a data model for the data to be stored in a database. This data model is a conceptual representation of data objects, the associations between different data objects, and the rules. Data modeling techniques are also used to analyze data sets and create insights from the data, for example through:

- data summarization,

- data aggregation,

- joining data

There are 5 different types of data models:

- Hierarchical Data Model: a hierarchical data model is a structure for organizing data into a tree-like hierarchy, otherwise known as a parent-child relationship.

- Relational Data Model: the relational model represents the database as a collection of relations. A relation is nothing but a table of values (rows and columns).

- Entity-relationship (ER) Data Model: an entity relationship diagram (ERD), also known as an entity relationship model, is a graphical representation that depicts relationships among people, objects, places, concepts, or events within an information technology (IT) system.

- Object-oriented Data Model: the Object-Oriented Data Model (OODM) in a DBMS is the data model where data is stored in the form of objects. This model is used to represent real-world entities. The data and the data relationships are stored together in a single entity known as an object.

- Dimensional Data Model: Dimensional Modeling (DM) is a data structure technique optimized for data storage in a data warehouse. The purpose of dimensional modeling is to optimize the database for faster retrieval of data. The concept of Dimensional Modeling was developed by Ralph Kimball and consists of “fact” and “dimension” tables.

What is a design pattern? In software engineering, a design pattern is a general repeatable solution to a commonly occurring problem in software design. In general, design patterns are categorized mainly into three categories:

- Creational Design Pattern

- Structural Design Pattern

- Behavioral Design Pattern

Gang of Four (Erich Gamma, Richard Helm, Ralph Johnson, and John Vlissides) Design Patterns is the collection of 23 design patterns from the book Design Patterns: Elements of Reusable Object-Oriented Software.

What are data design patterns? A data design pattern is a general repeatable solution to a commonly occurring data problem in the big data area.

The following are common Data Design Patterns:

- Summarization patterns

- Filtering patterns

- In-Mapper patterns

- Data Organization patterns

- Join patterns

- Meta patterns

- Input/Output patterns

The data design patterns can be implemented in MapReduce, Spark, and other big data solutions.
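The in-mapper combining pattern is one of the patterns listed above. The mapper aggregates values in memory and emits one pair per distinct key instead of one pair per record. This reduces the data shuffled to the reducers. The code runs in a single process, without Hadoop or Spark, and the `url,bytes_sent` log records are hypothetical. The trade-off is memory: the partial totals must fit in the mapper's memory.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical web-log records: "url,bytes_sent"
LOG = ["/home,100", "/about,50", "/home,200", "/home,25", "/about,10"]


def plain_mapper(records: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Emit one (url, bytes) pair per input record."""
    for record in records:
        url, size = record.split(",")
        yield url, int(size)


def in_mapper_combining(records: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Aggregate per-url totals in memory and emit once per distinct url."""
    partial: Dict[str, int] = defaultdict(int)
    for record in records:
        url, size = record.split(",")
        partial[url] += int(size)
    # emitted only after the whole input split has been consumed
    yield from partial.items()


def reducer(pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Sum the values for each key (the same reducer works for both mappers)."""
    totals: Dict[str, int] = defaultdict(int)
    for url, size in pairs:
        totals[url] += size
    return dict(totals)


print(len(list(plain_mapper(LOG))), len(list(in_mapper_combining(LOG))))  # 5 2
print(reducer(in_mapper_combining(LOG)))
```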

A collection of (structured, semi-structured, and unstructured) data.

Examples of Data Sets:

- DNA data samples for 10,000 patients can be a data set.

- Daily log files for a search engine

- Weekly credit card transactions

- Monthly flight data for a country

- Twitter daily data

- Facebook daily messages

In computer science and computer programming, a data type (or simply type) is a set of possible values and a set of allowed operations on it. A data type tells the compiler or interpreter how the programmer intends to use the data.

In a nutshell, data types tell the compiler or interpreter what kind of values a variable will hold. A user may provide different kinds of data as input, such as a number, a character, or a sequence of alphanumeric characters. To handle these different kinds of data, programming languages provide data types, which fall into the following categories:

- Primitive Data Type: the ready-made/built-in data types that come as part of the language.

- Composite Data Type: data types built as per requirements from the primitive ones. Python and Java provide built-in composite data types (such as lists, sets, arrays, ...).

- User-Defined Data Type: custom data types designed and created by programmers with the class keyword. For example, a Python class is like an outline for creating new objects. An object is anything that you wish to manipulate or change while working through the code. Every time a class is instantiated, a new object is created from scratch.

For example:

- Java is a statically and strongly typed language (strong typing means that the type of a value doesn't change in unexpected ways): every variable must be declared with an explicit data type before it is used, and you cannot create a variable without specifying the range of values it can hold.

```java
// Java example
// bob's data type is int
int bob = 1;
// bob cannot change its type: the following line does not compile
// bob = "bob";
// but you can use another variable name
String bobName = "bob";
```

- Python is strongly and dynamically typed:

  - Strong typing means that the type of a value doesn't change in unexpected ways. A string containing only digits doesn't magically become a number, as may happen in Perl. Every change of type requires an explicit conversion.

  - Dynamic typing means that runtime objects (values) have a type, as opposed to static typing, where variables have a type.

Python example:

```python
# bob refers to an int object
bob = 1
# bob is rebound to a str object; the variable itself has no type
bob = "bob"
```

This works because the variable does not have a type; it can name any object. After bob = 1, you'll find that type(bob) returns int, but after bob = "bob", it returns str. (Note that type is a regular function, so it evaluates its argument, then returns the type of the value.)
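The following example shows the strong-typing rule that every change of type requires an explicit conversion. Adding a `str` and an `int` raises `TypeError` instead of converting silently. The names here are illustrative.

```python
def mixing_raises_type_error() -> bool:
    """Return True if adding a str and an int raises TypeError (no implicit conversion)."""
    try:
        "1" + 1  # type: ignore[operator]
    except TypeError:
        return True
    return False


# explicit conversions are required to combine the two types
as_int = int("1") + 1    # 2
as_str = "1" + str(1)    # "11"

print(mixing_raises_type_error(), as_int, as_str)  # True 2 11
```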


A data type that allows you to represent a single data value in a single column position. In a nutshell, a primitive data type is either a data type that is built into a programming language, or one that could be characterized as a basic structure for building more sophisticated data types.

Java examples:

```java
int a = 10;
boolean b = true;
double d = 2.4;
// note: String is a class (a reference type), not a Java primitive
String s = "fox";
String t = null;
```

Python examples:

```python
a = 10
b = True
d = 2.4
s = "fox"
t = None
```

In computer science, a composite data type or compound data type is any data type which can be constructed in a program using the programming language's primitive data types.

Java examples:

```java
import java.util.Arrays;
import java.util.List;
// ...
int[] a = {10, 11, 12};
List<String> names = Arrays.asList("n1", "n2", "n3");
```

Python examples:

```python
a = [10, 11, 12]
names = ("n1", "n2", "n3")  # tuple: immutable
names = ["n1", "n2", "n3"]  # list: mutable
```

In Java and Python, custom composite data types can be created with classes, and objects are created by instantiating a class.
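Here is a custom composite data type defined as a Python class. It uses `dataclasses` to avoid boilerplate. The `Patient` class and its fields are hypothetical and combine primitive types (`str`, `int`) with a built-in composite type (`list`). Each instantiation creates a new, independent object.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Patient:
    """A user-defined composite type built from primitive and built-in composite types."""
    patient_id: str
    age: int
    samples: List[str] = field(default_factory=list)  # a fresh list per object


p1 = Patient("P0001", 42)
p2 = Patient("P0002", 35)
p1.samples.append("sample-1")

print(p1)
print(p2.samples)  # [] (p2 has its own list)
```

`field(default_factory=list)` is used instead of a plain `[]` default, which would otherwise be shared between instances (dataclasses reject it).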

In computer science, an abstract data type (ADT) is a mathematical model for data types. An abstract data type is defined by its behavior (semantics) from the point of view of a user, of the data, specifically in terms of possible values, possible operations on data of this type, and the behavior of these operations. This mathematical model contrasts with data structures, which are concrete representations of data, and are the point of view of an implementer, not a user.

Abstract Data type (ADT) is a type (or class) for objects whose behavior is defined by a set of values and a set of operations. The definition of ADT only mentions what operations are to be performed but not how these operations will be implemented.

The stack data structure is a linear data structure that follows a principle known as LIFO (Last-In-First-Out) or FILO (First-In-Last-Out). In computer science, a stack is an abstract data type that serves as a collection of elements, with two main operations (adding or removing is only possible at the top):

- push, which adds an element to the collection, and

- pop, which removes the most recently added element that was not yet removed.

```
##### Stack as an Abstract Data Type #####

# create a new empty Stack
CREATE: -> Stack

# add an Item to a given Stack
PUSH: Stack × Item -> Stack

# return the top element and remove it from the Stack
POP: Stack -> Item

# get the element at the top of the Stack without removing it
PEEK: Stack -> Item

# remove the top element and return an updated Stack
REMOVE: Stack -> Stack

# return True if the Stack is empty, otherwise return False
IS_EMPTY: Stack -> Boolean

# return the size of a given Stack
SIZE: Stack -> Integer
```
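Here is one possible concrete implementation of the Stack ADT, backed by a Python list. The ADT signatures are functional (for example, PUSH returns a Stack). This class instead mutates itself in place and returns `self` from `push` and `remove` to mirror those signatures. The class and method names are illustrative, and other implementations (arrays, linked lists) satisfy the same ADT.

```python
from typing import Generic, List, TypeVar

T = TypeVar("T")


class Stack(Generic[T]):
    """List-backed stack; the end of the Python list is the top of the stack."""

    def __init__(self) -> None:  # CREATE: -> Stack
        self._items: List[T] = []

    def push(self, item: T) -> "Stack[T]":  # PUSH: Stack × Item -> Stack
        self._items.append(item)
        return self

    def pop(self) -> T:  # POP: Stack -> Item (returns and removes the top)
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def peek(self) -> T:  # PEEK: Stack -> Item (top element, not removed)
        if not self._items:
            raise IndexError("peek at empty stack")
        return self._items[-1]

    def remove(self) -> "Stack[T]":  # REMOVE: Stack -> Stack
        self.pop()
        return self

    def is_empty(self) -> bool:  # IS_EMPTY: Stack -> Boolean
        return not self._items

    def size(self) -> int:  # SIZE: Stack -> Integer
        return len(self._items)


s: Stack[int] = Stack()
s.push(1).push(2).push(3)
print(s.peek(), s.pop(), s.size())  # 3 3 2
```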

For example, the operations for a Queue (First-In-First-Out, FIFO) as an abstract data type can be specified as follows. Note that a Queue can be implemented in many ways (using lists, arrays, linked lists, ...). A queue is an abstract data structure, somewhat similar to a stack. Unlike a stack, a queue is open at both ends. One end is always used to insert data (enqueue) and the other is used to remove data (dequeue). A queue follows the First-In-First-Out methodology, i.e., the data item stored first will be accessed first.

```
##### Queue as an Abstract Data Type #####

# create a new empty Queue
CREATE: -> Queue

# add an Item to a given Queue
ADD: Queue × Item -> Queue

# return the front element and remove it from the Queue
FRONT: Queue -> Item

# get the element at the front of the Queue without removing it
PEEK: Queue -> Item

# remove the front element and return an updated Queue
REMOVE: Queue -> Queue

# return True if the Queue is empty, otherwise return False
IS_EMPTY: Queue -> Boolean

# return the size of a given Queue
SIZE: Queue -> Integer
```
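Here is one possible implementation of the Queue ADT. It uses `collections.deque` rather than a plain list, because `deque.popleft()` runs in O(1) time while `list.pop(0)` shifts every remaining element. As with a functional-style ADT, `add` and `remove` mutate the queue and return it. The class and method names are illustrative.

```python
from collections import deque
from typing import Deque, Generic, TypeVar

T = TypeVar("T")


class Queue(Generic[T]):
    """FIFO queue backed by collections.deque (O(1) operations at both ends)."""

    def __init__(self) -> None:  # CREATE: -> Queue
        self._items: Deque[T] = deque()

    def add(self, item: T) -> "Queue[T]":  # ADD: Queue × Item -> Queue (enqueue at the rear)
        self._items.append(item)
        return self

    def front(self) -> T:  # FRONT: Queue -> Item (returns and removes the front element)
        if not self._items:
            raise IndexError("front of empty queue")
        return self._items.popleft()

    def peek(self) -> T:  # PEEK: Queue -> Item (front element, not removed)
        if not self._items:
            raise IndexError("peek at empty queue")
        return self._items[0]

    def remove(self) -> "Queue[T]":  # REMOVE: Queue -> Queue (dequeue, return the queue)
        self.front()
        return self

    def is_empty(self) -> bool:  # IS_EMPTY: Queue -> Boolean
        return not self._items

    def size(self) -> int:  # SIZE: Queue -> Integer
        return len(self._items)


q: Queue[str] = Queue()
q.add("a").add("b").add("c")
print(q.front(), q.peek(), q.size())  # a b 2
```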

Lucene is an open-source search engine software library written in Java. It provides robust search and indexing features.

Apache Lucene is a high-performance, full-featured search engine library written entirely in Java. It is a technology suitable for nearly any application that requires structured search, full-text search, faceting, nearest-neighbor search across high dimensionality vectors, spell correction or query suggestions.
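Full-text search libraries such as Lucene are built around an inverted index, which maps each term to the documents that contain it. The index below is a toy, in-memory version for illustration only. It is not Lucene's API and ignores scoring, stemming, and on-disk index structures. The three documents are made up.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

DOCS = {
    1: "Apache Lucene is a search library",
    2: "Apache Solr is built on Lucene",
    3: "Spark is an analytics engine",
}


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: Dict[int, str]) -> Dict[str, Set[int]]:
    """Map each term to the set of ids of documents containing it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index: Dict[str, Set[int]], query: str) -> Set[int]:
    """AND query: ids of documents containing every query term."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


index = build_index(DOCS)
print(search(index, "apache lucene"))  # {1, 2}
```

A query only touches the entries for its own terms instead of scanning every document, which is why this structure scales to large collections.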

Solr is the popular, blazing-fast, open source enterprise search platform built on Apache Lucene. Solr is highly reliable, scalable and fault tolerant, providing distributed indexing, replication and load-balanced querying, automated failover and recovery, centralized configuration and more. Solr powers the search and navigation features of many of the world's largest internet sites.

Hadoop is an open source framework that is built to enable the processing and storage of big data across a distributed file system. Hadoop implements the MapReduce paradigm; it is slow and complex and uses disk for read/write operations. Hadoop does not take advantage of in-memory computing. Hadoop runs in a computing cluster.

Hadoop takes care of running your MapReduce code (by map() first, then reduce() logic) across a cluster of machines. Its responsibilities include chunking up the input data, sending it to each machine, running your code on each chunk, checking that the code ran, passing any results either on to further processing stages or to the final output location, performing the sort that occurs between the map and reduce stages and sending each chunk of that sorted data to the right machine, and writing debugging information on each job’s progress, among other things.
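The sequence described above (map each chunk, sort by key between the stages, then reduce) can be simulated in a single Python process with a word-count job. This is a teaching model, not Hadoop's API. There is no cluster, no data transfer, and no failure handling, and the names `map_fn`, `reduce_fn`, and `run_job` are hypothetical.

```python
from itertools import groupby
from operator import itemgetter
from typing import Callable, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def map_fn(line: str) -> Iterator[Pair]:
    """Word-count mapper: emit (word, 1) for each word in a line."""
    for word in line.lower().split():
        yield word, 1


def reduce_fn(key: str, values: Iterable[int]) -> Pair:
    """Word-count reducer: sum all counts for one word."""
    return key, sum(values)


def run_job(chunks: List[List[str]],
            mapper: Callable[[str], Iterable[Pair]],
            reducer: Callable[[str, Iterable[int]], Pair]) -> List[Pair]:
    # 1. Map phase: run the mapper on every record of every input chunk
    mapped = [pair for chunk in chunks for line in chunk for pair in mapper(line)]
    # 2. Sort/shuffle: sort by key so that all values of one key are adjacent
    mapped.sort(key=itemgetter(0))
    # 3. Reduce phase: call the reducer once per distinct key
    return [reducer(key, (v for _, v in group))
            for key, group in groupby(mapped, key=itemgetter(0))]


chunks = [["the fox", "the dog"], ["a fox"]]  # two hypothetical input chunks
print(run_job(chunks, map_fn, reduce_fn))  # [('a', 1), ('dog', 1), ('fox', 2), ('the', 2)]
```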

NameNode is the master node in the Apache Hadoop HDFS architecture that maintains and manages the blocks present on the DataNodes (worker nodes). NameNode is a highly available server that manages the file system namespace and controls access to files by clients.

Hadoop provides:

- MapReduce: you can run MapReduce jobs by implementing a series of map() first, then reduce() functions. With MapReduce, you can analyze data at scale.

- HDFS: Hadoop Distributed File System

- Fault Tolerance (by replicating data in two or more worker nodes)

Note that for some big data problems, a single MapReduce job will not be enough; in that case, you might need to run multiple MapReduce jobs, where the output of one job becomes the input of the next.

[Figure: Big data solution with Hadoop (comprised of 2 MapReduce jobs)]

- Hadoop is an implementation of the MapReduce paradigm

- RDBMS denotes a relational database system such as Oracle, MySQL, or MariaDB

| Criteria | Hadoop | RDBMS |
|---|---|---|
| Data Types | Processes semi-structured and unstructured data | Processes structured data |
| Schema | Schema on Read | Schema on Write |
| Best Fit for Applications | Data discovery and massive storage/processing of unstructured data | Best suited for OLTP and ACID transactions |
| Speed | Writes are fast | Reads are fast |
| Data Updates | Write once, read many times | Read/write many times |
| Data Access | Batch | Interactive and batch |
| Data Size | Terabytes to petabytes | Gigabytes to terabytes |
| Development | Time consuming and complex | Simple |
| API | Low level (map() and reduce() functions) | SQL and extensive |

In computer science and software engineering, replication refers to the use of redundant resources to improve reliability, fault-tolerance, or performance. One example of a replication is data replication. For example in Hadoop: HDFS (Hadoop Distributed File System) is designed to reliably store very large files across machines in a large cluster. It stores each file as a sequence of blocks; all blocks in a file except the last block are the same size. The blocks of a file are replicated for fault tolerance: it means that if a server holding specific data (say block X) fails, then that specific data (block X) can be retrieved and read from other replicated servers.

In HDFS, the block size and replication factor are configurable per file. An application can specify the number of replicas of a file. The replication factor can be specified at file creation time and can be changed later. Files in HDFS are write-once and have strictly one writer at any time.

Replication Example:

- file name: sample.txt

- file size: 1900 MB

- Data Block Size: 512 MB

- Replication Factor: 3

- Cluster of 6 nodes (one master + 5 worker nodes):
  - One master node (no actual data is stored in the master node; the master node stores metadata information)
  - 5 worker/data nodes (actual data is stored in worker/data nodes) denoted as { W1, W2, W3, W4, W5 }

Since the file size is 1900 MB, the file is partitioned into 4 blocks (3 * 512 < 1900 <= 4 * 512):

- 1900 = 512 + 512 + 512 + 364

- Block-1 (B1): 512 MB

- Block-2 (B2): 512 MB

- Block-3 (B3): 512 MB

- Block-4 (B4): 364 MB (the last block of a file may be smaller than the block size; it holds only the remaining 364 MB and does not occupy a full 512 MB)

With a replication factor of 3, the worker nodes might hold these blocks as follows (note that no data node holds two copies of the same block):

- W1: { B1, B3 }

- W2: { B2, B4 }

- W3: { B3, B4, B2 }

- W4: { B4, B3, B1 }

- W5: { B1, B2 }

Since the replication factor is 3, up to 2 (3 - 1) data nodes can fail without any block becoming unavailable.
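The replication example can be checked mechanically. The script recomputes the block count and the size of the last block from the file and block sizes. It then confirms, for the placement listed above, that every block has 3 replicas, that every combination of 2 failed worker nodes leaves all blocks readable, and that some combination of 3 failures does not. It checks this one placement only and does not model HDFS's actual (rack-aware) replica placement policy. The helper names are hypothetical.

```python
from itertools import combinations
from math import ceil

FILE_SIZE_MB = 1900
BLOCK_SIZE_MB = 512
REPLICATION_FACTOR = 3

# Block placement copied from the example above
PLACEMENT = {
    "W1": {"B1", "B3"},
    "W2": {"B2", "B4"},
    "W3": {"B3", "B4", "B2"},
    "W4": {"B4", "B3", "B1"},
    "W5": {"B1", "B2"},
}

num_blocks = ceil(FILE_SIZE_MB / BLOCK_SIZE_MB)
last_block_mb = FILE_SIZE_MB - BLOCK_SIZE_MB * (num_blocks - 1)
blocks = {f"B{i}" for i in range(1, num_blocks + 1)}


def replica_count(block: str) -> int:
    """Number of worker nodes holding a copy of `block`."""
    return sum(block in held for held in PLACEMENT.values())


def all_blocks_available(failed: set) -> bool:
    """True if every block still has a replica on some live node."""
    live = [held for node, held in PLACEMENT.items() if node not in failed]
    return all(any(block in held for held in live) for block in blocks)


def tolerates(k: int) -> bool:
    """True if every combination of k failed worker nodes leaves all blocks available."""
    return all(all_blocks_available(set(c)) for c in combinations(PLACEMENT, k))


print(num_blocks, last_block_mb)                      # 4 364
print({b: replica_count(b) for b in sorted(blocks)})
print(tolerates(REPLICATION_FACTOR - 1), tolerates(REPLICATION_FACTOR))  # True False
```

Three failures break this placement because B1 lives only on W1, W4, and W5.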

The total number of replicas across the cluster is referred to as the replication factor (RF). A replication factor of 1 means that there is only one copy of each row in the cluster. If the node containing the row goes down, the row cannot be retrieved. A replication factor of 2 means two copies of each row, where each copy is on a different node. All replicas are equally important; there is no primary or master replica.

Given a cluster of N+1 nodes (a master and N worker nodes), if the data replication factor is R, and N > R, then (R - 1) nodes can safely fail without impacting any running job in the cluster.

Fault tolerance is the property that enables a system to continue working in the event of the failure of one or more of its components. Hadoop is said to be highly fault tolerant. Hadoop achieves this through cluster technology and data replication. Data is replicated across multiple nodes in a Hadoop cluster. The data is associated with a replication factor (RF), which indicates the number of copies of the data that are present across the various nodes in a Hadoop cluster. For example, if the replication factor is 4, the data will be present on four different nodes of the Hadoop cluster, each holding one copy. In this manner, if any one of the nodes fails, the data will not be lost, but can be recovered from one of the other nodes that contain copies (replicas) of the data.

In a cluster of M nodes, if the replication factor is N (where M > N), then N-1 nodes can safely fail without impacting a running job in the cluster.

Data comes in many varied formats:

- Avro
  - Avro stores the data definition in JSON format, making it easy to read and interpret

- Parquet
  - Parquet is an open source, binary, column-oriented data file format designed for efficient data storage and retrieval

- ORC
  - The Optimized Row Columnar (ORC) file format provides a highly efficient way to store Hive data.

- Text files (log data, CSV, ...)

- XML

- JSON

- JDBC (read/write from/to relational tables)

- DNA Data Formats:
  - FASTQ
  - FASTA
  - VCF
  - ...

- and more...

Apache Parquet is a columnar file format that supports block-level compression and is optimized for query performance, because it allows selecting a few columns (say, 10 or fewer) from records with 50+ columns.

Apache Spark can read/write from/to Parquet data format.

Parquet is a columnar open source storage format that can efficiently store nested data which is widely used in Hadoop and Spark.

Characteristics of Parquet:

- Free and open source file format.

- Language agnostic.

- Column-based format - files are organized by column, rather than by row, which saves storage space and speeds up analytics queries.

- Used for analytics (OLAP) use cases, typically in conjunction with traditional OLTP databases.

- Highly efficient data compression and decompression.

- Supports complex data types and advanced nested data structures.

Benefits of Parquet:

- Good for storing big data of any kind (structured data tables, images, videos, documents).

- Saves on cloud storage space by using highly efficient column-wise compression, and flexible encoding schemes for columns with different data types.

- Increased data throughput and performance using techniques like data skipping, whereby queries that fetch specific column values need not read the entire row of data.

Columnar databases have become a popular choice for storing analytical data workloads. In a nutshell, column-oriented databases store all values from each column together, whereas row-oriented databases store all the values in a row together.

If you need to read MANY rows but only a FEW columns, then Column-Oriented databases are the way to go. If you need to read a FEW rows but MANY columns then row oriented databases are better suited.
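The two layouts can be mimicked with plain Python structures. Summing one column over many rows touches only that column's values in the columnar layout, while fetching one full record is a single lookup in the row layout. Consecutive values within one column often repeat, so simple encodings such as run-length encoding compress columns well. Real columnar formats like Parquet add pages, encodings, and metadata on top of this idea. The data and names below are hypothetical.

```python
from itertools import groupby
from typing import Dict, List, Tuple

# Row-oriented layout: all values of one record are stored together
ROWS = [
    {"id": 1, "city": "Paris", "amount": 10.0},
    {"id": 2, "city": "Paris", "amount": 5.5},
    {"id": 3, "city": "Paris", "amount": 2.0},
    {"id": 4, "city": "Rome", "amount": 7.5},
]

# Column-oriented layout: all values of one column are stored together
COLUMNS: Dict[str, list] = {name: [row[name] for row in ROWS] for name in ROWS[0]}


def run_length_encode(values: list) -> List[Tuple[object, int]]:
    """Compress runs of repeated values, which are common within a single column."""
    return [(value, len(list(run))) for value, run in groupby(values)]


total = sum(COLUMNS["amount"])  # many rows, few columns: scan only "amount"
record = ROWS[1]                # few rows, many columns: one row lookup
print(total, record)
print(run_length_encode(COLUMNS["city"]))  # [('Paris', 3), ('Rome', 1)]
```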

Apache Tez (which generalizes the MapReduce paradigm) is a framework for creating high-performance applications for batch and data processing. YARN of Apache Hadoop coordinates with it to provide the developer framework and API for writing applications of batch workloads.

Tez is aimed at building an application framework that allows for a complex directed-acyclic-graph (DAG) of tasks for processing data. It is currently built atop Apache Hadoop YARN.

- Apache HBase is an open source, non-relational, distributed database running in conjunction with Hadoop.

- HBase is a column-oriented non-relational database management system that runs on top of Hadoop Distributed File System (HDFS).

- HBase can support billions of data points.

Features of HBase:

- HBase is linearly scalable.

- It has automatic failure support.

- It provides consistent reads and writes.

- It integrates with Hadoop, both as a source and a destination.

- It has an easy-to-use Java client API.

- It provides data replication across clusters.

According to Google: Google Bigtable is an HBase-compatible, enterprise-grade NoSQL database service with single-digit millisecond latency, limitless scale, and 99.999% availability for large analytical and operational workloads.

Bigtable is a fully managed wide-column and key-value NoSQL database service for large analytical and operational workloads as part of the Google Cloud portfolio.

HDFS (Hadoop Distributed File System) is a distributed file system designed to run on commodity hardware. You can place a huge amount of data in HDFS. You can create new files or directories. You can delete files, but you cannot edit/update files in place.

Features of HDFS:

- Data replication: this is used to ensure that the data is always available and to prevent data loss

- Fault tolerance and reliability

- High availability

- Scalability

- High throughput

- Data locality

- HDFS general format:

  `hdfs://<host>:<port>/folder_1/.../folder_n/file`

- HDFS example:

  `hdfs://localhost:8020/data/2023-01-07/samples.txt`

Scalability is the ability of a system or process to maintain acceptable performance levels as workload or scope increases.

According to Gartner:

Scalability is the measure of a system’s ability to increase or decrease in performance and cost in response to changes in application and system processing demands. Examples would include how well a hardware system performs when the number of users is increased, how well a database withstands growing numbers of queries, or how well an operating system performs on different classes of hardware. Enterprises that are growing rapidly should pay special attention to scalability when evaluating hardware and software.

For example, an application program would be scalable if it could be moved from a smaller to a larger operating system and take full advantage of the larger operating system in terms of performance (user response time and so forth) and the larger number of users that could be handled.

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers of all sizes and industries can use Amazon S3 to store and protect any amount of data for a range of use cases, such as data lakes, websites, mobile applications, backup and restore, archive, enterprise applications, IoT devices, and big data analytics. Amazon S3 provides management features so that you can optimize, organize, and configure access to your data to meet your specific business, organizational, and compliance requirements.

Objects are the fundamental entities stored in Amazon S3. Objects are stored in buckets and are addressed as:

General format:

s3://<bucket-name>/folder_1/.../folder_n/file

Example:

s3://my-bucket-name/data/2023-01-07/samples.txt
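Python's `urllib.parse.urlsplit` can split an S3 URI into a bucket name and an object key. The sketch below uses a hypothetical helper, `split_s3_uri`. It shows that the "folders" are simply part of the key: S3 has a flat namespace, and the `/`-separated prefixes are a naming convention rather than real directories.

```python
from urllib.parse import urlsplit


def split_s3_uri(uri):
    """Return (bucket, key) for an s3://<bucket>/<key> URI.

    Hypothetical helper. The key keeps its "folder" prefixes, because
    S3 stores them as part of the object key itself.
    """
    parts = urlsplit(uri)
    if parts.scheme != "s3" or not parts.netloc:
        raise ValueError(f"not an S3 URI: {uri}")
    return parts.netloc, parts.path.lstrip("/")


print(split_s3_uri("s3://my-bucket-name/data/2023-01-07/samples.txt"))
# ('my-bucket-name', 'data/2023-01-07/samples.txt')
```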

What is Amazon Athena? Amazon Athena is a service that enables data analysts to perform interactive queries using SQL, JDBC, and a native API. Amazon Athena is widely used and is defined as an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL.

- Amazon Athena is serverless, so there is no infrastructure to manage, and users pay only for the queries that they run.

  - No cluster setup is required

  - No cluster management is required

  - No database server setup is required

- Amazon Athena is easy to use: simply point to your data in Amazon S3, define the schema, and start querying using standard SQL.

- Most results are delivered within seconds. With Athena, there is no need for complex ETL jobs to prepare users' data for analysis, which makes it easy for anyone with SQL skills to quickly analyze large-scale datasets.

- Amazon Athena is integrated out of the box with the AWS Glue Data Catalog. This allows users to create a unified metadata repository across various services, crawl data sources to discover schemas, populate their Catalog with new and modified table and partition definitions, and maintain schema versioning.

- Amazon Athena is a serverless query tool, which makes it both scalable and cost-effective. Customers are charged on a pay-per-query basis, according to the amount of data each query scans.

- The normal charge for scanning data from S3 is 5 USD per TB. This looks like a small amount at first glance. However, when users run many queries over hundreds or thousands of GB of data, the cost can get out of control.
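To see how quickly a per-TB charge adds up, here is a rough back-of-the-envelope estimator. It assumes the 5 USD per TB rate quoted above and 1 TB = 1,000 GB. It ignores AWS billing details such as per-query minimums and rounding, so treat its results as approximations rather than real prices. The function name is hypothetical.

```python
PRICE_PER_TB_USD = 5.0  # rate quoted in the text; an assumption, check current pricing
GB_PER_TB = 1000        # assumption: decimal units (1 TB = 1,000 GB)


def athena_cost_usd(gb_scanned_per_query, num_queries, price_per_tb=PRICE_PER_TB_USD):
    """Rough cost estimate: total data scanned (in TB) times the per-TB price."""
    total_tb = num_queries * gb_scanned_per_query / GB_PER_TB
    return total_tb * price_per_tb


# Hypothetical workload: 100 queries, each scanning 500 GB.
print(athena_cost_usd(500, 100))  # 250.0
# Same 100 queries if partitioning cut each scan to 20 GB.
print(athena_cost_usd(20, 100))   # 10.0
```

The numbers here are made up for illustration. The point is that cost scales with bytes scanned, which is why reducing the data each query reads pays off directly.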

Partitioning data in Athena: analyze a slice of the data rather than the whole dataset.

By physically partitioning your data (using directory-structure partitioning), you can restrict the amount of data scanned by each query, thus improving performance and reducing cost. You can partition your data by any key. A common practice is to partition the data based on time, often leading to a multi-level partitioning scheme. For example, a customer who has data coming in every hour might decide to partition by year, month, date, and hour. Another customer, who has data coming from many different sources but loaded only once per day, might partition by a data source identifier and date.
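Here is a minimal sketch of the two partitioning schemes just described, producing Hive-style `key=value` directory prefixes. The key names (`year`, `month`, `day`, `hour`, `source`, `date`), the zero-padding, and the bucket paths are illustrative assumptions, not requirements of Athena. The function names are hypothetical.

```python
from datetime import datetime


def hourly_partition_prefix(root, ts):
    """Multi-level time partitioning: year/month/day/hour (hypothetical key names)."""
    return (
        f"{root}/year={ts.year:04d}/month={ts.month:02d}"
        f"/day={ts.day:02d}/hour={ts.hour:02d}/"
    )


def daily_source_prefix(root, source_id, ts):
    """Partitioning by data source identifier and date, for once-a-day loads."""
    return f"{root}/source={source_id}/date={ts:%Y-%m-%d}/"


print(hourly_partition_prefix("s3://my-bucket-name/events", datetime(2023, 1, 7, 13)))
# s3://my-bucket-name/events/year=2023/month=01/day=07/hour=13/
print(daily_source_prefix("s3://my-bucket-name/loads", "crm", datetime(2023, 1, 7)))
# s3://my-bucket-name/loads/source=crm/date=2023-01-07/
```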

For genomic data, you might partition your data by chromosome (1, 2, ..., 22, X, Y, MT). Partitioning your genomic data by chromosome looks like:

<data-root-dir> --+
                  |/chromosome=1/<data-for-chromosome-1>
                  |/chromosome=2/<data-for-chromosome-2>
                  ...
                  |/chromosome=22/<data-for-chromosome-22>
                  |/chromosome=X/<data-for-chromosome-X>
                  |/chromosome=Y/<data-for-chromosome-Y>
                  |/chromosome=MT/<data-for-chromosome-MT>

Therefore, when you query chromosome 2, you query only the slice of data under <data-root-dir>/chromosome=2/ rather than the whole dataset:

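SELECT ...
FROM <table-pointing-to-your-data-root-dir>
WHERE chromosome = '2'

(Because the chromosome values include X, Y, and MT, the partition column is a string, so the value 2 is quoted.)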
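Partition pruning can be illustrated without Athena. Given file paths laid out as above, a filter on the partition key only needs the files under the matching `chromosome=<value>` directory. This is a toy sketch: the file names are hypothetical, and in practice the query engine does this using the table's partition metadata. The match is made on whole path components, so `chromosome=2` does not pick up `chromosome=22`.

```python
def prune(paths, key, value):
    """Keep only the files whose path contains the directory `key=value` exactly."""
    wanted = f"{key}={value}"
    return [p for p in paths if wanted in p.split("/")]


# Hypothetical files under <data-root-dir>.
files = [
    "data-root-dir/chromosome=1/part-0000.parquet",
    "data-root-dir/chromosome=2/part-0000.parquet",
    "data-root-dir/chromosome=2/part-0001.parquet",
    "data-root-dir/chromosome=22/part-0000.parquet",
    "data-root-dir/chromosome=X/part-0000.parquet",
    "data-root-dir/chromosome=MT/part-0000.parquet",
]

selected = prune(files, "chromosome", "2")
print(f"scan {len(selected)} of {len(files)} files")  # scan 2 of 6 files
```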

- BigQuery is a serverless and cost-effective enterprise data warehouse.

- BigQuery supports the Google Standard SQL dialect, but a legacy SQL dialect is also available.

- BigQuery has built-in machine learning and BI that work across clouds and scale with your data.

- BigQuery is a fully managed enterprise data warehouse that helps you manage and analyze your data with built-in features like machine learning, geospatial analysis, and business intelligence.

- BigQuery's query engine can run SQL queries on terabytes of data within seconds, and petabytes within minutes. BigQuery gives you this performance without the need to maintain the infrastructure or to create or rebuild indexes. BigQuery's speed and scalability make it suitable for processing huge datasets.

- BigQuery storage: BigQuery stores data using a columnar storage format that is optimized for analytical queries. BigQuery presents data in tables, rows, and columns and provides full support for database transaction semantics (ACID). BigQuery storage is automatically replicated across multiple locations to provide high availability.

- With Google Cloud’s pay-as-you-go pricing structure, you only pay for the services you use.

A server is a computer with CPUs (for example, 64 cores), RAM (for example, 128 GB), and disk space (for example, 4 TB). A commodity server (also called commodity hardware, or an off-the-shelf server) is a computer or IT component that is relatively inexpensive ($5K to $20K), widely available, and basically interchangeable with other hardware of its type. Because commodity hardware is inexpensive, it is used to build clusters for big data computing (scale-out architecture). Commodity hardware is often deployed for high availability and disaster recovery purposes.

Hadoop and Spark clusters use a set of commodity servers.

Fault tolerance is the ability of a system to continue running when a component of the system (such as a server node or a disk) fails.

HDFS is designed to reliably store very large files across machines in a large cluster. It stores each file as a sequence of blocks; all blocks in a file except the last block are the same size. The blocks of a file are replicated for fault tolerance.

The block size can be configured. For example, let the block size be 512 MB. Now, let's place a file (sample.txt) of 1800 MB in HDFS:

1800MB = 512MB (Block-1) + 512MB (Block-2) + 512MB (Block-3) + 264MB (Block-4)

Let's denote Block-1 by B1, Block-2 by B2, Block-3 by B3, and Block-4 by B4.

Note that the last block, Block-4, has only 264 MB of useful data.
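The block arithmetic above can be checked with a few lines of Python. This sketch covers only the sizing rule: every block is full-size except the last, which holds the remainder. It does not model how HDFS actually writes or stores blocks, and the function name is hypothetical.

```python
from typing import List


def split_into_blocks(file_size_mb: int, block_size_mb: int) -> List[int]:
    """Return the size (in MB) of each block a file of file_size_mb occupies."""
    if block_size_mb <= 0 or file_size_mb < 0:
        raise ValueError("block size must be positive and file size non-negative")
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    # All full blocks, plus one partial block if anything is left over.
    return [block_size_mb] * full_blocks + ([remainder] if remainder else [])


print(split_into_blocks(1800, 512))  # [512, 512, 512, 264]
```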

Suppose we have a cluster of 6 nodes: one master and 5 worker nodes {W1, W2, W3, W4, W5}, where the master does not store any actual data. Also assume that the replication factor is 2. Then the blocks might be placed as follows (each block is stored on 2 different workers):

W1: B1, B4

W2: B2, B3

W3: B3, B1

W4: B4

W5: B2

Fault tolerance: if the replication factor is N (with each block's replicas on different nodes), then any (N-1) nodes can fail without data loss and without the job failing.
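As a sketch, this claim can be checked by brute force on the placement above. The code tries every combination of k failed workers and tests whether each block still has a surviving replica. The `placement` dictionary copies the example; the helper names are hypothetical.

```python
from itertools import combinations

# Block placement from the example (replication factor N = 2).
placement = {
    "W1": {"B1", "B4"},
    "W2": {"B2", "B3"},
    "W3": {"B3", "B1"},
    "W4": {"B4"},
    "W5": {"B2"},
}


def surviving_blocks(placement, failed):
    """Blocks that still have at least one replica on a node that did not fail."""
    return set().union(*(blocks for node, blocks in placement.items() if node not in failed))


def tolerates(placement, k):
    """True if every combination of k failed nodes leaves every block readable."""
    all_blocks = set().union(*placement.values())
    return all(
        surviving_blocks(placement, set(failed)) == all_blocks
        for failed in combinations(placement, k)
    )


print(tolerates(placement, 1))  # True: any N - 1 = 1 failure is safe
print(tolerates(placement, 2))  # False: losing W1 and W3 loses both copies of B1
```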

High-performance computing (HPC) means using supercomputers to solve highly complex and advanced computing problems. This is a scale-up architecture, not a scale-out architecture. HPC uses supercomputers and computer clusters to solve advanced computation problems, and it is costly because supercomputers are expensive.

Scaling up means adding resources, such as hard drives and memory, to increase the computing capacity of a physical server. Scaling out means adding more servers to your architecture to spread the workload across more machines.

Hadoop and Spark use scale-out architectures.

MapReduce was developed at Google by Jeffrey Dean and Sanjay Ghemawat and described in their 2004 paper [MAPREDUCE: SIMPLIFIED DATA PROCESSING ON LARGE CLUSTERS](https://www.alexdelis.eu/M125/Papers/p107-dean.pdf) (Dean & Ghemawat, 2004). It was inspired by the map() and reduce() functions commonly used in functional programming. At that time, Google’s proprietary MapReduce system ran on the Google File System (GFS).
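For readers new to the functional-programming roots, here is what map() and reduce() look like in plain Python, using the built-in `map` and `functools.reduce`. map applies a function to every element independently, and reduce folds the results into a single value. This is only the functional idea, not MapReduce itself.

```python
from functools import reduce
from operator import add

numbers = [1, 2, 3, 4]
squares = map(lambda x: x * x, numbers)  # map: apply a function to each element
total = reduce(add, squares)             # reduce: combine the results pairwise
print(total)  # 30
```

The map step could run on each element in parallel, since no element depends on another. That independence is what MapReduce exploits at cluster scale.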

MapReduce implementations:

- Apache Hadoop is an open-source implementation of Google's MapReduce.

- Apache Spark is an open-source superset implementation of Google's MapReduce (Spark eliminates many problems of Hadoop and sets a new standard for data analytics).

- Data-Intensive Text Processing with MapReduce by Jimmy Lin and Chris Dyer, January 27, 2013

- MapReduce: Simplified Data Processing on Large Clusters by Jeffrey Dean and Sanjay Ghemawat

- Google’s MapReduce Programming Model — Revisited by Ralf Lämmel

- Hadoop: The Definitive Guide: Storage and Analysis at Internet Scale, 4th Edition, by Tom White

- Data Algorithms with Spark by Mahmoud Parsian, O'Reilly, 2022

- Learning Spark, 2nd Edition, by Jules S. Damji, Brooke Wenig, Tathagata Das, and Denny Lee, 2020

- Motivation: large-scale data processing (Google uses MapReduce to index billions of documents on a daily basis)

- Scale-out architecture: use many commodity servers in a cluster computing environment

- Parallelism: run many tasks at the same time, processing lots of data in parallel (using cluster computing) to produce other needed data

- Cluster computing: use tens, hundreds, or thousands of servers to minimize analytics time

- ... but this needs to be easy

MapReduce is a software framework for processing vast amounts of data. More precisely, MapReduce is a parallel programming model, with an associated implementation, for processing and generating big data sets with a parallel, distributed algorithm on a cluster.
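Here is a single-process sketch of the programming model, assuming small in-memory input. A mapper emits (key, value) pairs, the pairs are grouped by key (the shuffle), and a reducer combines each key's values. Real frameworks such as Hadoop and Spark run these phases in parallel across a cluster. The function names and the sales data here are hypothetical.

```python
from collections import defaultdict


def map_reduce(records, mapper, reducer):
    """Run map, shuffle (group by key), and reduce sequentially in one process."""
    grouped = defaultdict(list)
    for record in records:                 # map phase
        for key, value in mapper(record):
            grouped[key].append(value)     # shuffle: collect values per key
    # reduce phase: one reducer call per key, keys in sorted order
    return {key: reducer(key, values) for key, values in sorted(grouped.items())}


# Hypothetical input: "product,amount" sales lines.
sales = ["apple,3", "pear,5", "apple,4"]


def sales_mapper(line):
    product, amount = line.split(",")
    yield product, int(amount)


def sales_reducer(product, amounts):
    return sum(amounts)


print(map_reduce(sales, sales_mapper, sales_reducer))  # {'apple': 7, 'pear': 5}
```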

MapReduce provides: