[TOC]

Android & iOS: Demo Directory

Refer to the compilation documentation to build the MNN libraries.

The project ships without model files, but MNN provides a shell script that downloads TensorFlow and Caffe models and converts them to MNN format. The converted models are saved in the `resource` directory, which both the iOS and Android demo projects reference as their resource directory.

Steps:

- To install the model converter, refer to the Converter documentation.

- Run the `get_model.sh` script.

- After the script succeeds, the converted model files appear in the `resource` directory.

The following shows how to integrate MNN into an iOS or Android project.

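For iOS, add MNN to your `Podfile` with CocoaPods:

```ruby
pod 'MNN', :path => "path/to/MNN"
```

Then import the MNN headers. Because they are C++ headers, the source files that import them must be compiled as Objective-C++ (`.mm`):

```objectivec
#import <MNN/Interpreter.hpp>
#import <MNN/Tensor.hpp>
#import <MNN/ImageProcess.hpp>
```

The following describes how to integrate MNN on Android. It involves JNI, which is not explained here; if you are unfamiliar with it, refer to the official JNI documentation.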

With Android Studio 2.2 or later, CMake is recommended (you can also use the native ndk-build tool directly), together with the Gradle plugin, to build and use the .so libraries.

Note: installing ccache is highly recommended to speed up MNN compilation (`brew install ccache` on macOS, `apt-get install ccache` on Ubuntu).

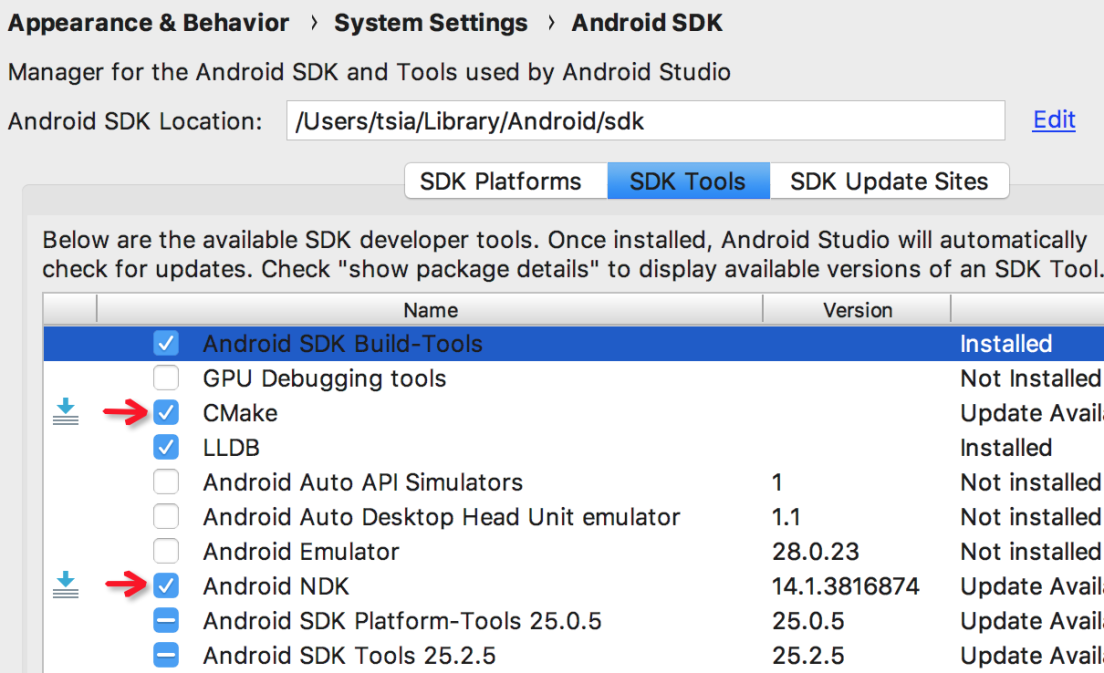

First, download the NDK and CMake: open Android Studio -> Preferences -> Appearance & Behavior -> System Settings -> Android SDK (or search for "Android SDK" in the left pane), select the SDK Tools tab, and download the NDK and CMake packages.

Add the compiled MNN .so libraries and header files to the project. The demo already includes the compiled CPU, GPU, OpenCL, and Vulkan .so libraries for armeabi-v7a and arm64-v8a, which we placed in the `libs` directory.

Then create a `CMakeLists.txt` that links the pre-built MNN .so libraries (see the compilation documentation above):

```cmake
cmake_minimum_required(VERSION 3.4.1)

set(lib_DIR ${CMAKE_SOURCE_DIR}/libs)
include_directories(${lib_DIR}/includes)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fopenmp")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fopenmp")
set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -std=gnu99 -fvisibility=hidden -fomit-frame-pointer -fstrict-aliasing -ffunction-sections -fdata-sections -ffast-math -flax-vector-conversions")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -fvisibility=hidden -fvisibility-inlines-hidden -fomit-frame-pointer -fstrict-aliasing -ffunction-sections -fdata-sections -ffast-math -fno-rtti -fno-exceptions -flax-vector-conversions")
# Drop unused sections when linking the shared library
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} -Wl,--gc-sections")

# Import the pre-built libMNN.so for the ABI being built
add_library(MNN SHARED IMPORTED)
set_target_properties(
    MNN
    PROPERTIES IMPORTED_LOCATION
    ${lib_DIR}/${ANDROID_ABI}/libMNN.so
)

...
```

Then configure Gradle, specifying the `CMakeLists.txt` path and the `jniLibs` path:

```groovy
android {
    ...
    externalNativeBuild {
        cmake {
            path "CMakeLists.txt"
        }
    }
    sourceSets {
        main {
            jniLibs.srcDirs = ['libs']
        }
    }
    ...
}
```

You don't need to load every .so library; load only the ones you need. The example loads the CPU (`MNN`), OpenCL (`MNN_CL`), OpenGL (`MNN_GL`), and Vulkan (`MNN_Vulkan`) libraries:

```java
static {
    // CPU backend (always loaded)
    System.loadLibrary("MNN");
    try {
        // Optional GPU backends; a load failure is logged, not fatal
        System.loadLibrary("MNN_CL");
        System.loadLibrary("MNN_GL");
        System.loadLibrary("MNN_Vulkan");
    } catch (Throwable ce) {
        Log.w(Common.TAG, "load MNN GPU so exception", ce);
    }
    ...
}
```

Next, wrap native methods that call the MNN C++ interface. Calling from Java involves passing and converting parameters across JNI, which is less convenient than using the C++ interface directly, and mirroring the fine-grained C++ API one-to-one in Java is both unnecessary and difficult. Instead, we usually build our own native library that, following MNN's call sequence, exposes a small set of interfaces that are convenient for the upper layer to call.
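A common way to design such a wrapper, assumed here rather than taken from the demo's source, is to keep the MNN objects on the native side and give Java only an opaque 64-bit handle that each later call passes back. The sketch below shows that pattern in plain C++ without JNI types; `NativeNet`, `createNet`, `runNet`, `releaseNet`, `toHandle`, and `netFromHandle` are hypothetical names, and in a real wrapper the handle would travel to Java as a `jlong`.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical native-side state. A real wrapper would hold the
// MNN::Interpreter* and MNN::Session* created for this model.
struct NativeNet {
    std::string modelPath;
    int runCount;
};

// Pointer <-> opaque handle conversion (a jlong on the JNI side).
inline std::int64_t toHandle(NativeNet* net) {
    return static_cast<std::int64_t>(reinterpret_cast<std::intptr_t>(net));
}

inline NativeNet* netFromHandle(std::int64_t handle) {
    return reinterpret_cast<NativeNet*>(static_cast<std::intptr_t>(handle));
}

// Coarse-grained entry points that Java native methods would map onto.
std::int64_t createNet(const std::string& modelPath) {
    NativeNet* net = new NativeNet();
    net->modelPath = modelPath;
    net->runCount = 0;
    return toHandle(net);
}

void runNet(std::int64_t handle) {
    NativeNet* net = netFromHandle(handle);
    assert(net != nullptr);
    ++net->runCount;  // placeholder for interpreter->runSession(session)
}

void releaseNet(std::int64_t handle) {
    delete netFromHandle(handle);  // the handle must not be used after this
}
```

Because Java only ever holds a number, the native memory is freed only when the wrapper's release function runs, which is why the Java side has to release instances explicitly.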

The demo shows a recommended way to do this. `mnnnetnative.cpp` wraps the MNN C++ interface and is built into `libMNNcore.so` with CMake. For convenient invocation from Java, three classes are provided:

- `MNNNetNative`: declares only the native methods, which correspond to the interfaces in `mnnnetnative.cpp`

- `MNNNetInstance`: provides interfaces for network creation, input, inference, output, and destruction

- `MNNImageProcess`: provides interfaces related to image processing

You can copy these classes directly into your project to avoid writing the wrapper yourself (recommended). If you are familiar with the MNN C++ interface and JNI, you can also write your own wrapper.

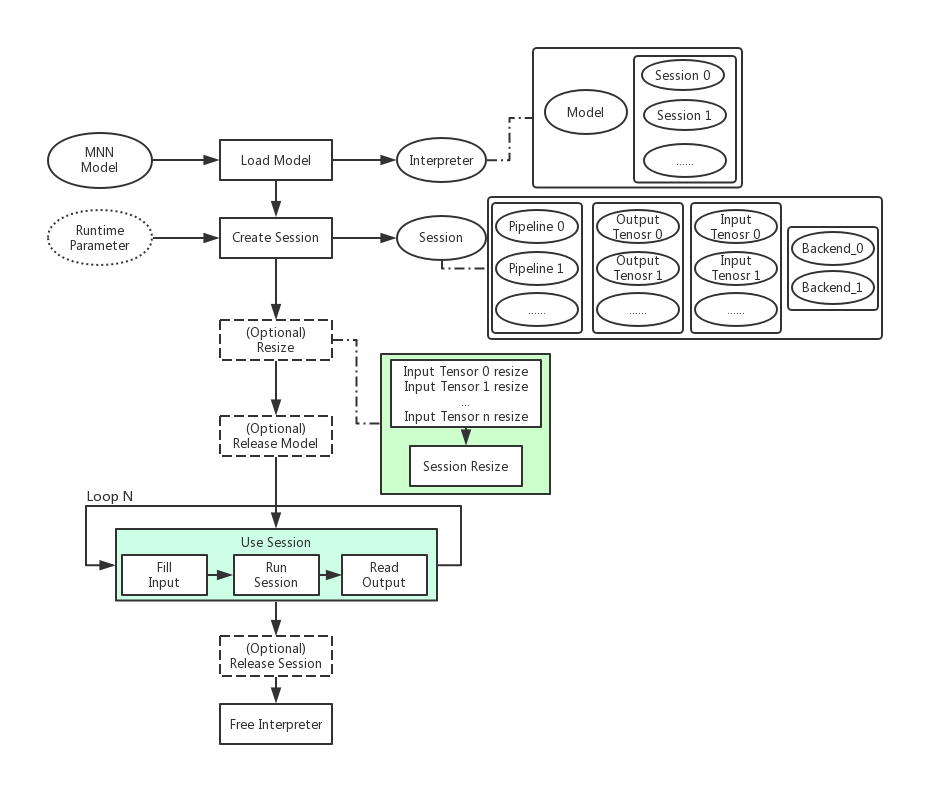

However the upper layer is wrapped, in any environment it ultimately calls the MNN C++ interface, so you only need to make sure the basic call steps are correct. The basic MNN call sequence is: create an interpreter, create a session, set the input, run inference, and obtain the output.

The MobileNet example on iOS (Objective-C++) follows this sequence:

```objectivec
// Create an interpreter
NSString *model = [[NSBundle mainBundle] pathForResource:@"mobilenet" ofType:@"mnn"];
auto interpreter = std::shared_ptr<MNN::Interpreter>(MNN::Interpreter::createFromFile(model.UTF8String));

// Create a session
MNN::ScheduleConfig config;
config.type = MNN_FORWARD_CPU;
config.numThread = 4;
MNN::Session *session = interpreter->createSession(config);

/*
 * Set input
 * 1. normalization; 2. format conversion; 3. image transformation (cropping, rotation, scaling); 4. write data into the input tensor
 */
int w = image.size.width;
int h = image.size.height;
unsigned char *rgba = (unsigned char *)calloc(w * h * 4, sizeof(unsigned char));
{
    // Draw the image into an RGBA bitmap
    CGColorSpaceRef colorSpace = CGImageGetColorSpace(image.CGImage);
    CGContextRef contextRef = CGBitmapContextCreate(rgba, w, h, 8, w * 4, colorSpace,
                                                    kCGImageAlphaNoneSkipLast | kCGBitmapByteOrderDefault);
    CGContextDrawImage(contextRef, CGRectMake(0, 0, w, h), image.CGImage);
    CGContextRelease(contextRef);
}
const float means[3] = {103.94f, 116.78f, 123.68f};
const float normals[3] = {0.017f, 0.017f, 0.017f};
MNN::CV::ImageProcess::Config process;
::memcpy(process.mean, means, sizeof(means));
::memcpy(process.normal, normals, sizeof(normals));
process.sourceFormat = MNN::CV::RGBA;
process.destFormat = MNN::CV::BGR;
std::shared_ptr<MNN::CV::ImageProcess> pretreat(MNN::CV::ImageProcess::create(process));
// The matrix maps the 224x224 destination back onto the w x h source image
MNN::CV::Matrix matrix;
matrix.postScale((w - 1) / 223.0, (h - 1) / 223.0);
pretreat->setMatrix(matrix);
auto input = interpreter->getSessionInput(session, nullptr);
pretreat->convert(rgba, w, h, 0, input);
free(rgba);

// Inference
interpreter->runSession(session);

// Obtain the output
MNN::Tensor *output = interpreter->getSessionOutput(session, nullptr);
auto copy = std::shared_ptr<MNN::Tensor>(MNN::Tensor::createHostTensorFromDevice(output));
float *data = copy->host<float>();
```

On Android, the same procedure using the interfaces wrapped by `MNNNetInstance` in the demo looks like this:

```java
// Create the net instance
MNNNetInstance instance = MNNNetInstance.createFromFile(MainActivity.this, modelFilePath);

// Create the session
MNNNetInstance.Config config = new MNNNetInstance.Config();
config.numThread = 4;
config.forwardType = MNNForwardType.FORWARD_CPU.type;
//config.saveTensors = new String[]{"layer name"};
MNNNetInstance.Session session = instance.createSession(config);

// Get the input tensor
MNNNetInstance.Session.Tensor inputTensor = session.getInput(null);

/*
 * Set input
 * 1. normalization; 2. format conversion; 3. image transformation (cropping, rotation, scaling); 4. write data into the input tensor
 */
MNNImageProcess.Config imgConfig = new MNNImageProcess.Config();
// Normalization parameters
imgConfig.mean = ...
imgConfig.normal = ...
// Input data format conversion
imgConfig.source = MNNImageProcess.Format.YUV_NV21;
imgConfig.dest = MNNImageProcess.Format.BGR;
// Transform
Matrix matrix = new Matrix();
matrix.postTranslate((bmpWidth - TestWidth) / 2, (bmpHeight - TestHeight) / 2); // translate
matrix.postScale(2, 2); // scale
matrix.postRotate(90); // rotate
matrix.invert(matrix); // MNN expects the destination-to-source matrix
// Bitmap input
MNNImageProcess.convertBitmap(orgBmp, inputTensor, imgConfig, matrix);
// Buffer input
//MNNImageProcess.convertBuffer(buffer, width, height, inputTensor, imgConfig, matrix);

// Inference
session.run();
//session.runWithCallback(new String[]{"layer name"});

// Get the output tensor
MNNNetInstance.Session.Tensor output = session.getOutput(null);

// Get the results
float[] result = output.getFloatData(); // float results
//int[] result = output.getIntData(); // int results
//byte[] result = output.getUINT8Data(); // uint8 results
...

// Release the instance
instance.release();
```

The MNN C++ interfaces, which iOS code calls directly, are described below in calling order.

Create an interpreter from a model file:

```cpp
static Interpreter* createFromFile(const char* file);
```

- `file`: local path to the model file

Return value: `Interpreter` object

Create a session:

```cpp
MNN::ScheduleConfig config;
config.type = MNN_FORWARD_CPU;
config.numThread = 4;
MNN::Session *session = interpreter->createSession(config);
```

- `config`: a `ScheduleConfig` object that specifies the forward type (`type`), the number of threads (`numThread`), and the intermediate tensors to keep (`saveTensors`). iOS supports the `MNN_FORWARD_CPU` and `MNN_FORWARD_METAL` forward types.

Return value: `Session` object

Get the input and output tensors:

```cpp
Tensor* getSessionInput(const Session* session, const char* name);
Tensor* getSessionOutput(const Session* session, const char* name);
```

- `session`: the session in which the network runs

- `name`: name of the input/output tensor to fetch; pass `nullptr` to get the default (first) one

Return value: `Tensor` object

Resizing is optional. If you need it, resize all input tensors first, then resize the session. Resize an input tensor:

```cpp
void resizeTensor(Tensor* tensor, const std::vector<int>& dims);
```

- `dims`: the new dimensions
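After a resize, the buffer you write into the input must match the new shape. As an illustration that does not call MNN, the sketch below computes the element count for a `dims` vector and the flat offset of an element in an NCHW layout; `elementCount` and `nchwOffset` are hypothetical helpers, and NCHW is an assumed layout (check the tensor's actual dimension type).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of elements described by a dims vector such as {1, 3, 224, 224}.
std::size_t elementCount(const std::vector<int>& dims) {
    std::size_t count = 1;
    for (int d : dims) {
        assert(d > 0);
        count *= static_cast<std::size_t>(d);
    }
    return count;
}

// Flat offset of element (n, c, h, w) in an NCHW buffer with dims {N, C, H, W}.
std::size_t nchwOffset(const std::vector<int>& dims, int n, int c, int h, int w) {
    assert(dims.size() == 4);
    const std::size_t C = static_cast<std::size_t>(dims[1]);
    const std::size_t H = static_cast<std::size_t>(dims[2]);
    const std::size_t W = static_cast<std::size_t>(dims[3]);
    return ((static_cast<std::size_t>(n) * C + c) * H + h) * W + w;
}
```

For example, resizing to `{1, 3, 224, 224}` means the input holds 150,528 floats (602,112 bytes).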

After all input tensors have been resized, resize the session:

```cpp
void resizeSession(Session* session);
```

Set the input: prepare the input data and, after any necessary preprocessing, write it into the input tensor.

With `ImageProcess`, the `convert` step writes the processed data directly into the input tensor:

```cpp
auto input = interpreter->getSessionInput(session, nullptr);
pretreat->convert(rgba, w, h, 0, input);
```

To feed data you have already preprocessed yourself, copy it into the input tensor through a host tensor with the same shape and layout:

```objectivec
auto inputTensor = net->getSessionInput(session, name.UTF8String);
// Host tensor with the same shape and dimension type as the session input
MNN::Tensor inputTensorUser(inputTensor, inputTensor->getDimensionType());
// data: float buffer prepared by the caller, in inputTensor's dimension order
::memcpy(inputTensorUser.host<float>(), data, inputTensorUser.size()); // size() is in bytes
// Copy from the host tensor into the (possibly device-side) session input
inputTensor->copyFromHostTensor(&inputTensorUser);
```

Run inference:

```cpp
interpreter->runSession(session);
```

- `session`: the network session

Return value: inference completion status (`MNN::ErrorCode`)

Obtain the output. Output data can be read as `float`, `int`, `uint8_t`, and so on; choose according to the model's actual output type. Taking `float` as an example:

```cpp
MNN::Tensor *output = interpreter->getSessionOutput(session, nullptr);
// Copy the output into a host tensor so it can be read on the CPU
auto copy = std::shared_ptr<MNN::Tensor>(MNN::Tensor::createHostTensorFromDevice(output));
float *data = copy->host<float>();
```

The Android interfaces are described below in calling order, using the demo's `MNNNetInstance` and `MNNImageProcess` wrappers as examples.

Create a net instance:

```java
public static MNNNetInstance createFromFile(Context context, String fileName);
```

- `context`: the Android `Context`

- `fileName`: local path of the model file

Return value: `MNNNetInstance` object

Create a session:

```java
public Session createSession(Config config);
```

- `config`: a `Config` object in which `forwardType`, `numThread`, and `saveTensors` can be specified

Return value: `Session` object

Note: `saveTensors` lists the names of layers whose intermediate outputs should be kept; multiple names can be given. After inference, these intermediate tensors can be fetched directly with `getOutput("layer name")`.

Get the input tensor:

```java
public Tensor getInput(String name);
```

- `name`: name of the input tensor to fetch; pass null for the default

Return value: input `Tensor` object

Resizing is optional. If you need it, resize all input tensors first, then resize the session. Resize an input tensor:

```java
public void reshape(int[] dims);
```

- `dims`: the new dimensions

After all input tensors have been resized, resize the session:

```java
public void reshape();
```

To set the input, first convert the raw input, for example the RGB channels of an image or the YUV channels of a video frame, into the format the model expects; then apply common operations such as scaling, rotation, and cropping; finally, write the result into the input tensor.

`MNNImageProcess.java`, converting a raw buffer:

```java
public static boolean convertBuffer(byte[] buffer, int width, int height, MNNNetInstance.Session.Tensor tensor, Config config, Matrix matrix);
```

- `buffer`: byte array of input data

- `width`: buffer width

- `height`: buffer height

- `tensor`: input tensor

- `config`: configuration that specifies the source data format, the target format (such as BGR), the normalization parameters, and so on; see `MNNImageProcess.Config`

- `matrix`: matrix for image translation, scaling, and rotation; see the usage of Android's `Matrix`

Return value: `boolean`, success or failure
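`convertBuffer` needs the buffer's width and height because the byte array itself carries no shape. For NV21 camera frames, the standard layout is a full-resolution Y plane followed by one interleaved V/U plane subsampled by 2 in each direction, so a frame holds `width * height * 3 / 2` bytes. The sketch below, with hypothetical helper names and assuming even dimensions, computes that size and the offsets of the Y, V, and U samples for a pixel, which can help validate a frame before passing it in.

```cpp
#include <cassert>
#include <cstddef>

// Byte offsets of the samples that belong to pixel (x, y) in an NV21 frame.
struct Nv21Offsets {
    std::size_t y;  // luma sample
    std::size_t v;  // V (Cr) sample, shared by a 2x2 pixel block
    std::size_t u;  // U (Cb) sample, shared by the same block
};

// Total bytes in an NV21 frame: Y plane (w*h) + interleaved VU plane (w*h/2).
std::size_t nv21Size(int width, int height) {
    assert(width % 2 == 0 && height % 2 == 0);
    return static_cast<std::size_t>(width) * height * 3 / 2;
}

Nv21Offsets nv21Offsets(int width, int height, int x, int y) {
    assert(x >= 0 && x < width && y >= 0 && y < height);
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    // Each VU row (width bytes) covers two image rows; V precedes U in NV21.
    const std::size_t vu = ySize + static_cast<std::size_t>(y / 2) * width + (x / 2) * 2;
    return {static_cast<std::size_t>(y) * width + x, vu, vu + 1};
}
```

A 640 x 480 frame, for instance, must contain 460,800 bytes.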

`MNNImageProcess.java`, converting a bitmap:

```java
public static boolean convertBitmap(Bitmap sourceBitmap, Tensor tensor, MNNImageProcess.Config config, Matrix matrix);
```

- `sourceBitmap`: the image `Bitmap`

- `tensor`: input tensor

- `config`: configuration that specifies the target format (such as BGR; the source format does not need to be specified), the normalization parameters, and so on; see `MNNImageProcess.Config`

- `matrix`: matrix for image translation, scaling, and rotation; see the usage of Android's `Matrix`

Return value: `boolean`, success or failure

Run inference:

```java
public void run();
public Tensor[] runWithCallback(String[] names);
```

- `names`: names of the intermediate tensors to return

Return value (`runWithCallback`): the corresponding array of intermediate tensors

Get the output tensor:

```java
public Tensor getOutput(String name);
```

- `name`: name of the output tensor to fetch; pass null for the default

Return value: output `Tensor` object

Read the output data:

```java
public float[] getFloatData();
public int[] getIntData();
public byte[] getUINT8Data();
```

Release the net instance:

```java
public void release();
```

Destroy the net instance promptly to free its native memory.

When video frames or photos are the input source, the input data usually has to be cropped, rotated, scaled, or converted to another format. MNN's image processing module handles these common operations conveniently and quickly. It provides:

- Data format conversion (RGBA/RGB/BGR/GRAY/BGRA/YUV420/NV21)

- Normalization

- Image cropping, rotation, and scaling
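MNN's image processing normalizes each destination channel as `(value - mean[c]) * normal[c]`. The CPU sketch below reproduces the format conversion and normalization steps for RGBA input and BGR output, writing planar (NCHW) floats; it is only meant to make the parameters concrete, not to mirror MNN's optimized implementation, and `rgbaToPlanarBgr` and the planar output layout are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert interleaved RGBA bytes to planar BGR floats, normalizing each
// destination channel c as (value - mean[c]) * normal[c].
std::vector<float> rgbaToPlanarBgr(const std::vector<std::uint8_t>& rgba,
                                   int width, int height,
                                   const float mean[3], const float normal[3]) {
    const std::size_t pixels = static_cast<std::size_t>(width) * height;
    assert(rgba.size() == pixels * 4);
    std::vector<float> out(pixels * 3);
    // Destination channel c (B, G, R) reads source channel srcIndex[c] of RGBA.
    const int srcIndex[3] = {2, 1, 0};
    for (std::size_t i = 0; i < pixels; ++i) {
        for (int c = 0; c < 3; ++c) {
            const float value = rgba[i * 4 + srcIndex[c]];
            out[c * pixels + i] = (value - mean[c]) * normal[c];
        }
    }
    return out;
}
```

With `mean = {0, 0, 0}` and `normal = {1/255, 1/255, 1/255}`, 8-bit values map to the range 0 to 1.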

When setting the input, call either `convertBuffer` or `convertBitmap`; both take an `MNNImageProcess.Config config` and a `Matrix matrix`. `MNNImageProcess.Config` configures the source data format (not needed when the input is a bitmap), the target format, and the normalization parameters. `Matrix` applies an affine transformation to the image. Note that this matrix is the transformation from the target image to the source image. If that is hard to construct directly, build the transformation from the source image to the target image and then take its inverse.

Note: the `matrix` parameter is the transformation matrix from the target image to the source image.
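To see why the inverse is needed, compose the source-to-destination transform and invert it. The sketch below uses a minimal 2D affine type (a stand-in written for illustration, not the `android.graphics.Matrix` or `MNN::CV::Matrix` API) to scale a `w x h` image so that source pixels `0..w-1` land on `0..223`, then inverts it. The inverse scales by `(w - 1) / 223`, the same factor passed to `postScale` in the iOS examples.

```cpp
#include <cassert>
#include <cmath>

// Minimal 2D affine transform: x' = a*x + b*y + tx, y' = c*x + d*y + ty.
struct Affine {
    double a, b, tx;
    double c, d, ty;

    // Apply this transform to the point (x, y).
    void map(double x, double y, double& outX, double& outY) const {
        outX = a * x + b * y + tx;
        outY = c * x + d * y + ty;
    }

    // Inverse transform; the linear part must be non-singular.
    Affine inverted() const {
        const double det = a * d - b * c;
        assert(std::fabs(det) > 1e-12);
        Affine inv;
        inv.a = d / det;
        inv.b = -b / det;
        inv.c = -c / det;
        inv.d = a / det;
        inv.tx = -(inv.a * tx + inv.b * ty);
        inv.ty = -(inv.c * tx + inv.d * ty);
        return inv;
    }
};

// Source-to-destination scale that maps pixels 0..w-1 (and 0..h-1) onto 0..223.
Affine srcToDst224(int w, int h) {
    return Affine{223.0 / (w - 1), 0.0, 0.0, 0.0, 223.0 / (h - 1), 0.0};
}
```

In practice the platform matrix class does this work: build the source-to-destination transform with `postScale`, `postRotate`, and `postTranslate`, then call `invert` before passing the matrix to MNN.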

For example, suppose the input is NV21 video data from the Android camera and the model expects BGR input. To correct the orientation of the camera image, it must be rotated 90 degrees clockwise. The model's input size is 224 x 224, with values between 0 and 1.

```java
MNNImageProcess.Config config = new MNNImageProcess.Config();
// Normalization: (value - mean) * normal maps 0..255 to 0..1
config.mean = new float[]{0.0f, 0.0f, 0.0f};
config.normal = new float[]{1.0f / 255.0f, 1.0f / 255.0f, 1.0f / 255.0f};
// NV21 to BGR
config.source = MNNImageProcess.Format.YUV_NV21; // input source format
config.dest = MNNImageProcess.Format.BGR; // model input format
// Source-to-destination matrix: rotate 90 degrees clockwise about the image center,
// move the rotated image (now imageHeight x imageWidth) to the origin, scale to 224 x 224
Matrix matrix = new Matrix();
matrix.postRotate(90);
matrix.preTranslate(-imageWidth / 2f, -imageHeight / 2f);
matrix.postTranslate(imageHeight / 2f, imageWidth / 2f);
matrix.postScale(224f / imageHeight, 224f / imageWidth);
matrix.invert(matrix); // MNN expects the destination-to-source matrix, so take the inverse
MNNImageProcess.convertBuffer(data, imageWidth, imageHeight, inputTensor, config, matrix);
```

On iOS, a camera frame (`CMSampleBufferRef`) in BGRA format can be fed to the model in the same way:

```objectivec
CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
int w = (int)CVPixelBufferGetWidth(pixelBuffer);
int h = (int)CVPixelBufferGetHeight(pixelBuffer);
// Rows may be padded, so pass the real row stride to convert()
int stride = (int)CVPixelBufferGetBytesPerRow(pixelBuffer);
CVPixelBufferLockBaseAddress(pixelBuffer, kCVPixelBufferLock_ReadOnly);
unsigned char *bgra = (unsigned char *)CVPixelBufferGetBaseAddress(pixelBuffer);
const float means[3] = {103.94f, 116.78f, 123.68f};
const float normals[3] = {0.017f, 0.017f, 0.017f};
MNN::CV::ImageProcess::Config process;
::memcpy(process.mean, means, sizeof(means));
::memcpy(process.normal, normals, sizeof(normals));
process.sourceFormat = MNN::CV::BGRA;
process.destFormat = MNN::CV::BGR;
std::shared_ptr<MNN::CV::ImageProcess> pretreat(MNN::CV::ImageProcess::create(process));
MNN::CV::Matrix matrix;
matrix.postScale((w - 1) / 223.0, (h - 1) / 223.0);
pretreat->setMatrix(matrix);
auto input = interpreter->getSessionInput(session, nullptr);
pretreat->convert(bgra, w, h, stride, input);
CVPixelBufferUnlockBaseAddress(pixelBuffer, kCVPixelBufferLock_ReadOnly);
```