The Impact Pack's Detector includes three main types: BBOX, SEGM, and SAM.

- The Detector detects specific regions based on the model and returns the processed data in the form of `SEGS`.
- `SEGS` is a comprehensive data format that includes the information required for Detailer operations, such as `masks`, `bbox`, `crop regions`, `confidence`, `label`, and `controlnet` information.
  - Through `SEGS`, conditioning can be applied for the Detailer [ControlNet], and `SEGS` can also be categorized using information such as labels or size within `SEGS` [SEGSFilter, Crowd Control].
- `bbox`: Detected regions are represented by rectangular bounding boxes consisting of left, top, right, and bottom coordinates.
- `mask`: Represents the silhouette of the object within the bbox as a mask, providing a more precise delineation of the object's area. In the case of a BBOX detector, the mask area covers the entire bbox region.
- `crop region`: Determines the size of the region to be cropped based on the bbox.
  - When the bbox is formed near the border, the area on the opposite side is expanded, resulting in the bbox being off-centered within the crop region.
  - Having a larger crop region provides more context for a more natural inpaint, but it also increases the time required for inpainting.

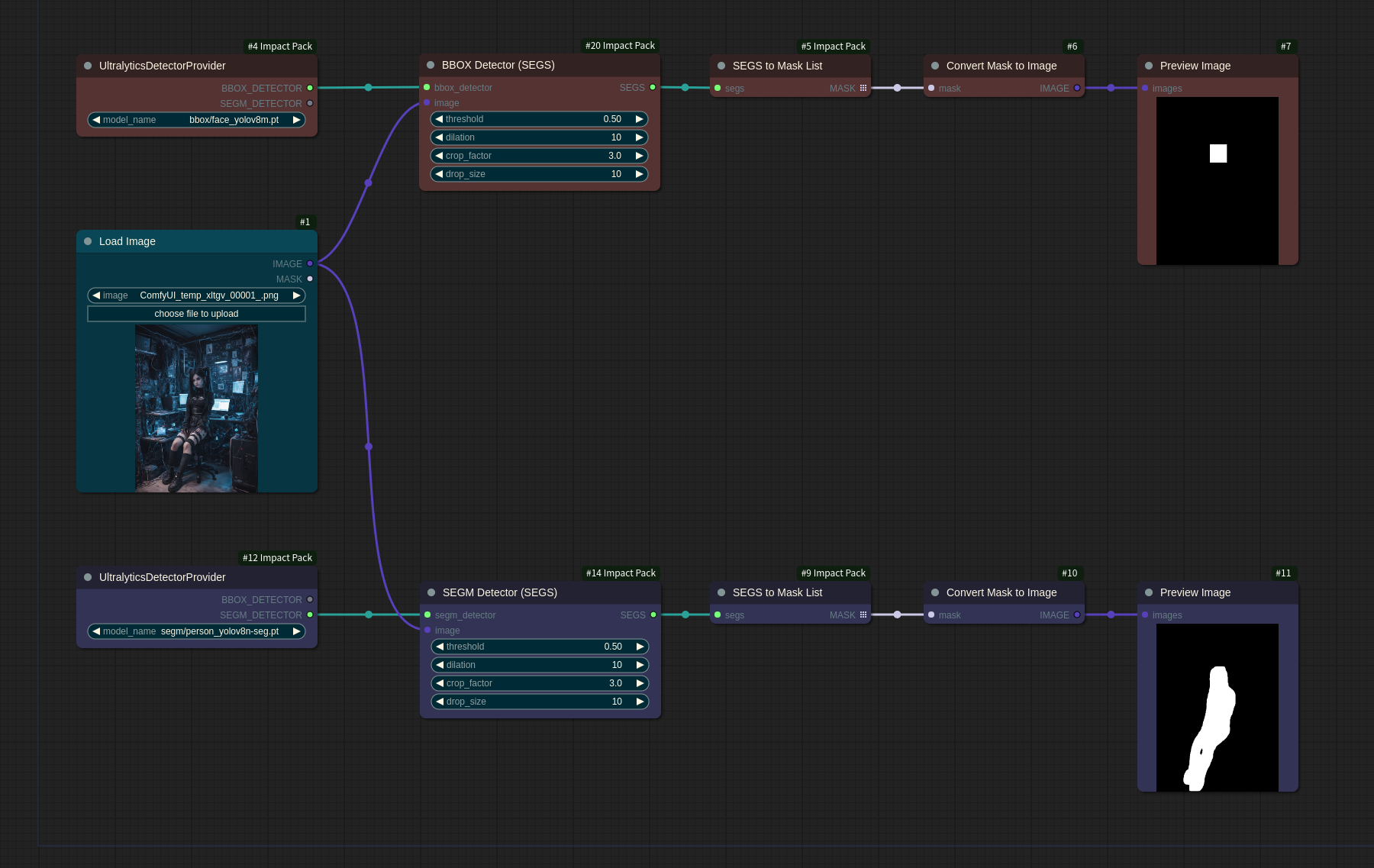
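The crop region behavior described above can be sketched roughly as follows. This is only an illustration, not the Impact Pack's actual code: the function name `make_crop_region` and the `crop_factor` parameter are assumptions introduced here. The bbox is enlarged around its center and then shifted back inside the image, which is why a bbox near the border ends up off-center within the crop region.

```python
def make_crop_region(img_w, img_h, bbox, crop_factor=3.0):
    """Return (left, top, right, bottom) of a crop region around bbox.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """
    left, top, right, bottom = bbox
    bbox_w, bbox_h = right - left, bottom - top

    # Target crop size: the bbox scaled by crop_factor, capped at the image size.
    crop_w = min(img_w, int(bbox_w * crop_factor))
    crop_h = min(img_h, int(bbox_h * crop_factor))

    # Start with the crop centered on the bbox.
    crop_left = left + bbox_w // 2 - crop_w // 2
    crop_top = top + bbox_h // 2 - crop_h // 2

    # Shift the crop back inside the image: space lost on one side
    # is gained on the opposite side, so the crop size is preserved.
    crop_left = max(0, min(crop_left, img_w - crop_w))
    crop_top = max(0, min(crop_top, img_h - crop_h))

    return crop_left, crop_top, crop_left + crop_w, crop_top + crop_h


# A bbox close to the top-left corner of a 512x512 image.
near_border = make_crop_region(512, 512, (10, 20, 110, 120), crop_factor=3.0)
# A bbox far from every border.
centered = make_crop_region(512, 512, (200, 200, 300, 300), crop_factor=3.0)
print(near_border)
print(centered)
```

In the first call the crop region is pinned to the image corner, so the bbox sits toward the top-left of the crop instead of in its center. A larger `crop_factor` gives the inpainting step more surrounding context at the cost of a larger area to process.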

BBOX stands for Bounding Box, which captures detection areas as rectangular regions.

- For example, using the `bbox/face_yolov8m.pt` model, you can obtain masks for the rectangular regions of faces.
- These masks are produced by the `BBOX_DETECTOR` provided by `UltralyticsDetectorProvider` or `ONNXDetectorProvider`.
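Because a BBOX detector has no silhouette information, its mask simply fills the whole bounding box. A minimal sketch of that idea, using plain nested lists of 0/1 values as a stand-in for real mask tensors (the helper name `bbox_to_mask` is hypothetical):

```python
def bbox_to_mask(img_w, img_h, bbox):
    """Build a 0/1 mask (list of rows) that is 1 everywhere inside bbox.

    bbox is (left, top, right, bottom); right and bottom are exclusive here,
    which is an assumption made for this illustration.
    """
    left, top, right, bottom = bbox
    return [
        [1 if left <= x < right and top <= y < bottom else 0 for x in range(img_w)]
        for y in range(img_h)
    ]


mask = bbox_to_mask(6, 4, (1, 1, 4, 3))
for row in mask:
    print(row)
```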

SEGM stands for Segmentation, which captures detection areas in the form of silhouettes.

- For instance, when using the `segm/person_yolov8n-seg.pt` model, you can obtain silhouette masks for human shapes.
- These masks are produced by the `SEGM_DETECTOR` provided by `UltralyticsDetectorProvider`.

SAM generates silhouette masks using the Segment Anything technique.

- It cannot be used independently, but when combined with a `BBOX` model that specifies the detection target, it can create finely detailed silhouette masks for the detected objects.

The UltralyticsDetectorProvider node loads Ultralytics' detection models and returns either a BBOX_DETECTOR or SEGM_DETECTOR.

- When using a model that starts with `bbox/`, only `BBOX_DETECTOR` is valid, and `SEGM_DETECTOR` cannot be used.
- If using a model that starts with `segm/`, both `BBOX_DETECTOR` and `SEGM_DETECTOR` can be used.
- `BBOX_DETECTOR` and `SEGM_DETECTOR` can be used with the `BBOX Detector` node and `SEGM Detector` node, respectively.

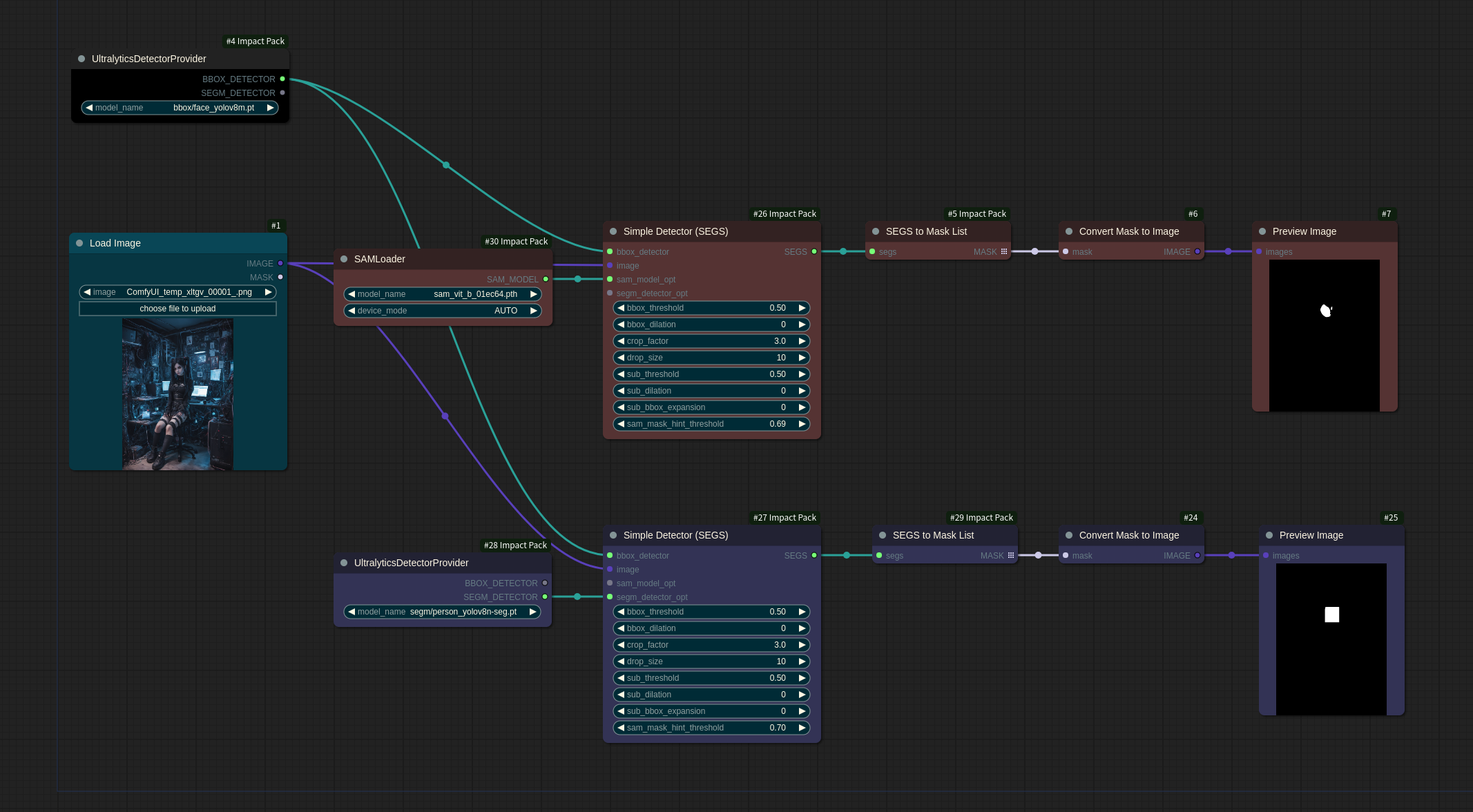
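The prefix rule for `UltralyticsDetectorProvider` outputs can be summarized in a few lines. The function below is a hypothetical helper written only to restate that rule; it is not part of the node's implementation.

```python
def valid_detector_outputs(model_name):
    """Return which provider outputs are usable for a given model name.

    Hypothetical helper that restates the bbox/ vs. segm/ prefix rule.
    """
    if model_name.startswith("bbox/"):
        # bbox models carry no silhouette data, so only BBOX_DETECTOR is valid.
        return ["BBOX_DETECTOR"]
    if model_name.startswith("segm/"):
        # segm models can serve both outputs.
        return ["BBOX_DETECTOR", "SEGM_DETECTOR"]
    raise ValueError(f"unrecognized model prefix: {model_name}")


print(valid_detector_outputs("bbox/face_yolov8m.pt"))
print(valid_detector_outputs("segm/person_yolov8n-seg.pt"))
```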

The workflow below is an example that utilizes BBOX_DETECTOR and SEGM_DETECTOR for detection.

The SAMDetector node loads the SAM model through the SAMLoader node for use.

- Please note that it is not compatible with `WAS-NS's SAMLoader`.
- The following workflow uses the `BBOX Detector` to generate `SEGS`, and then uses the `SAMDetector` to create more precise segments.

The Simple Detector first performs primary detection using BBOX_DETECTOR and then generates SEGS using the intersected mask from the detection results of SEGM_DETECTOR or SAMDetector.

- `sam_model_opt` and `segm_detector_opt` are both optional inputs. If neither of them is provided, the detection results from BBOX are returned as is. When both inputs are provided, `sam_model_opt` takes precedence, and the `segm_detector_opt` input is ignored.
- `SAM` has the disadvantage of requiring direct specification of the target for segmentation, but it generates more precise silhouettes compared to `SEGM`.
- The workflow below is an example of compensating `BBOX` with `SAM` and `SEGM`. In the case of `person SEGM`, it creates a silhouette that includes the entire `face BBOX`, resulting in a silhouette that is too rough and doesn't show significant improvement.
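The selection and intersection logic of the Simple Detector can be sketched as below. This is a simplified illustration under stated assumptions: the real node receives a SAM model and a SEGM detector, whereas here precomputed 0/1 masks (`sam_mask`, `segm_mask`, both hypothetical parameter names) stand in for their results.

```python
def simple_detect(bbox_mask, sam_mask=None, segm_mask=None):
    """Refine a BBOX mask with an optional SAM or SEGM mask.

    Masks are equally sized lists of rows containing 0/1 values.
    Illustrative only; not the node's actual implementation.
    """
    # SAM takes precedence; the SEGM input is ignored when both are given.
    refine = sam_mask if sam_mask is not None else segm_mask
    if refine is None:
        # Neither optional input was provided: return the BBOX result as is.
        return bbox_mask
    # Keep only the pixels present in both masks (intersection).
    return [
        [b & r for b, r in zip(bbox_row, refine_row)]
        for bbox_row, refine_row in zip(bbox_mask, refine)
    ]


bbox_mask = [[1, 1, 1], [1, 1, 1]]
sam_mask = [[0, 1, 0], [1, 1, 0]]
segm_mask = [[1, 1, 1], [0, 0, 0]]

print(simple_detect(bbox_mask))
print(simple_detect(bbox_mask, segm_mask=segm_mask))
print(simple_detect(bbox_mask, sam_mask=sam_mask, segm_mask=segm_mask))
```

The last call shows the precedence rule: although a SEGM mask is supplied, only the SAM mask shapes the result.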

Simple Detector For AnimateDiff is a detector designed for video processing, such as AnimateDiff, based on the Simple Detector.

- The basic configuration is similar to the Simple Detector, but additional features such as `masking_mode` and `segs_pivot` are provided.
- `masking_mode` configures how masks are composed:
  - `Pivot SEGS`: Utilizes only one mask from the segs specified by `segs_pivot`. When used in conjunction with the `Combined mask`, it employs a unified mask that encompasses all frames.
  - `Combine neighboring frames`: Constructs each frame's mask by combining three masks, including the current frame and its adjacent frames. This method occasionally compensates for detection failures in specific frames.
  - `Don't combine`: Uses the original mask for each frame without combining them.
- `segs_pivot` sets the mask image that serves as the basis for identifying SEGS.
  - `Combined mask`: Combines the masks of all frames into one frame before identifying separate masks for each SEGS.
  - `1st frame mask`: Identifies separate masks for each SEGS based on the mask of the first frame.
  - Each option has its advantages and disadvantages. For instance, when `1st frame mask` is selected, if the target object moves rapidly, the SEGS created based on the mask of the first frame might extend beyond its initial boundaries in later frames. Conversely, when using the `Combined mask`, if moving objects overlap, they may be recognized as a single object.
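To make the mask composition options more concrete, here is a small sketch of two of them: merging each frame with its neighbors, and merging all frames into one combined mask. The function names are hypothetical, masks are plain lists of 0/1 rows, and the handling of the first and last frame (which have only one neighbor) is an assumption rather than confirmed node behavior.

```python
def union(masks):
    """Pixel-wise OR of equally sized 0/1 masks (each a list of rows)."""
    return [[int(any(px)) for px in zip(*rows)] for rows in zip(*masks)]


def combine_neighboring_frames(frame_masks):
    """For each frame, OR its mask with the previous and next frame's masks."""
    last = len(frame_masks) - 1
    return [
        union(frame_masks[max(0, i - 1): min(last, i + 1) + 1])
        for i in range(len(frame_masks))
    ]


def combined_mask(frame_masks):
    """OR the masks of all frames into a single mask."""
    return union(frame_masks)


# Three 1x4 frames; detection fails entirely in the middle frame.
frames = [[[1, 1, 0, 0]], [[0, 0, 0, 0]], [[0, 1, 1, 0]]]
print(combine_neighboring_frames(frames))
print(combined_mask(frames))
```

In this example the empty middle frame receives a usable mask from its neighbors, which is the kind of detection failure that `Combine neighboring frames` can compensate for. The combined mask covers the object's positions across all frames, which also shows why overlapping moving objects could merge into a single region.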