This is a quick implementation of SphereFace: Deep Hypersphere Embedding for Face Recognition (CVPR 2017). The paper proposes the angular softmax (A-Softmax) loss, which enables convolutional neural networks (CNNs) to learn angularly discriminative features. The main parts I replicated are:

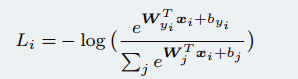

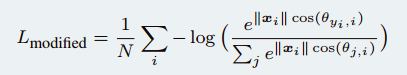

- 1. mathematical comparison among original softmax, modified softmax and angular softmax;

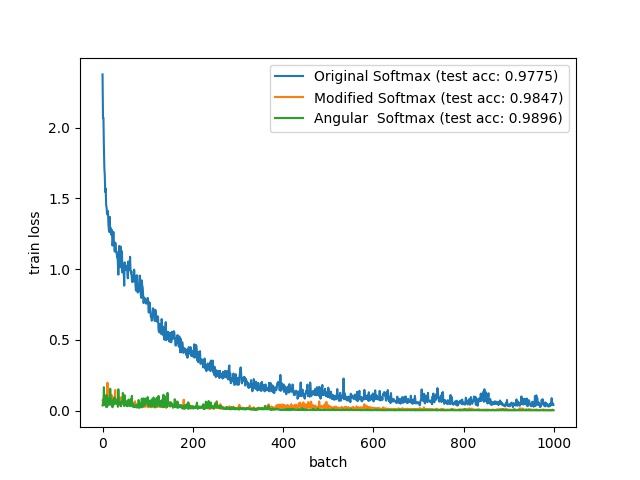

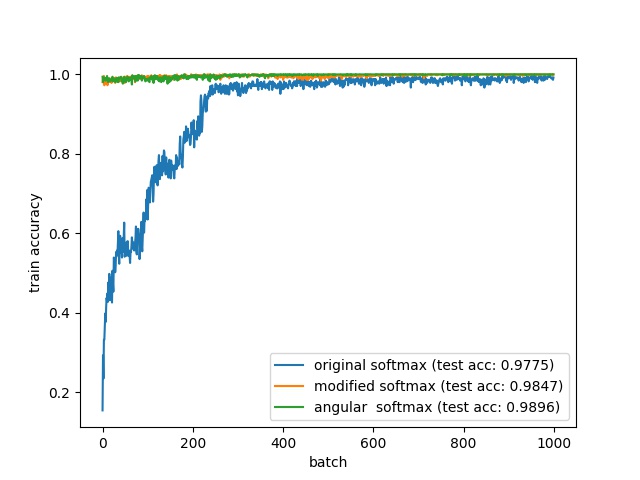

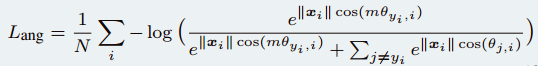

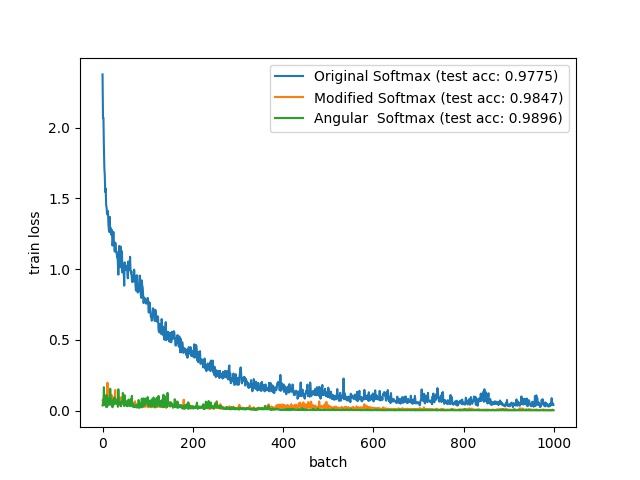

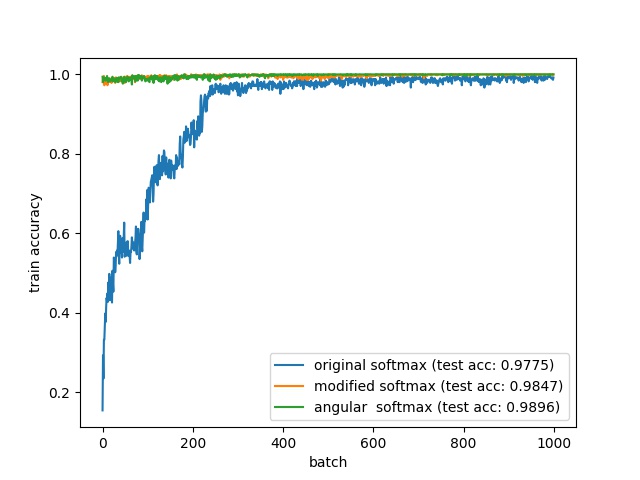

- 2. a comparison of the accuracy and loss of the different softmax variants in the experiment;

- 3. 2D and 3D visualizations of the embeddings learned with the different softmax losses on the MNIST dataset;

- 4. a replication of the SphereFace-20 model in TensorFlow, with its code and graph.

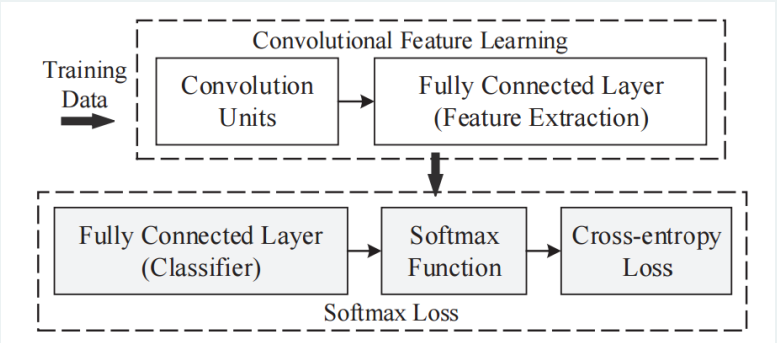

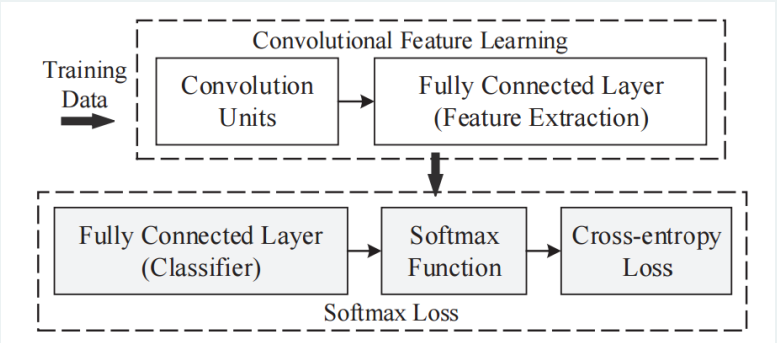
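To make the "20" in SphereFace-20 concrete, here is a minimal sketch that enumerates the convolution layers. It assumes the layout reported for SphereFace-20 in the paper (four stages with 64/128/256/512 channels and 1/2/4/1 residual units, each stage opened by a stride-2 conv), a 112x96 input crop, and a 512-d embedding layer. `STAGES`, `conv_layers`, and `output_hw` are hypothetical names for illustration, not the TensorFlow code in this repo.

```python
# Assumed SphereFace-20 layout: (channels, residual units) for each of 4 stages.
STAGES = [(64, 1), (128, 2), (256, 4), (512, 1)]
INPUT_HW = (112, 96)  # assumed aligned face crop size (height, width)
EMBED_DIM = 512       # assumed size of the final fully connected embedding layer


def conv_layers(stages):
    """Enumerate 3x3 conv layers as (stage, channels, stride)."""
    layers = []
    for stage, (channels, units) in enumerate(stages, start=1):
        # A stride-2 conv opens each stage and halves the spatial size.
        layers.append((stage, channels, 2))
        # Each residual unit holds two stride-1 convs plus an identity shortcut.
        for _ in range(units):
            layers += [(stage, channels, 1), (stage, channels, 1)]
    return layers


def output_hw(hw, layers):
    """Spatial size after all convs, assuming SAME padding so only strides matter."""
    h, w = hw
    for _, _, stride in layers:
        h, w = -(-h // stride), -(-w // stride)  # ceil division, as with SAME padding
    return h, w


layers = conv_layers(STAGES)
h, w = output_hw(INPUT_HW, layers)
print(len(layers))                  # 20
print(h, w, STAGES[-1][0] * h * w)  # flattened size fed into the 512-d FC layer
```

The count works out as 4 downsampling convs plus 2 x (1 + 2 + 4 + 1) = 16 convs inside residual units.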

Many current CNNs can be viewed as convolutional feature learners guided by a softmax loss on top. However, while softmax is easy to optimize, it does not explicitly encourage a large margin between different classes.
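A minimal sketch of the original softmax loss for a single feature, using plain Python and a hypothetical two-class, 2-D example. The decision rule only asks that the target logit be the largest, so the boundary between classes i and j is (W_i - W_j)^T x + b_i - b_j = 0 with no margin term: a feature sitting just past that boundary is already classified correctly.

```python
import math


def logits_of(x, W, b):
    """Logits W_j^T x + b_j for every class j."""
    return [sum(w_k * x_k for w_k, x_k in zip(w_j, x)) + b_j for w_j, b_j in zip(W, b)]


def softmax_loss(x, W, b, label):
    """Original softmax loss: cross-entropy over the logits W_j^T x + b_j."""
    logits = logits_of(x, W, b)
    # Log-sum-exp shifted by the max logit for numerical stability.
    top = max(logits)
    log_z = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_z - logits[label]


def predict(x, W, b):
    """Decision rule: the class with the largest logit (no margin involved)."""
    logits = logits_of(x, W, b)
    return max(range(len(logits)), key=logits.__getitem__)


# Hypothetical 2-class, 2-D example: the boundary is the line x_1 = x_2.
W = [(1.0, 0.0), (0.0, 1.0)]
b = [0.0, 0.0]
x = (1.01, 1.0)  # barely on class 0's side of the boundary
print(predict(x, W, b))  # 0
print(softmax_loss(x, W, b, label=0))
```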

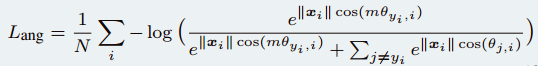

To address this, the authors proposed a new loss function that explicitly encourages an angular decision margin between different classes.
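The angular margin comes from replacing cos(θ) for the target class with the paper's function ψ(θ) = (-1)^k cos(mθ) - 2k on θ ∈ [kπ/m, (k+1)π/m]. A minimal sketch (the name `psi` and the default m = 4 are choices for illustration): ψ is monotonically decreasing on [0, π], never exceeds cos(θ), and reduces to cos(θ) when m = 1, so a larger m demands a smaller angle to the target weight for the same logit.

```python
import math


def psi(theta, m=4):
    """A-Softmax target function for an integer margin m >= 1:
    psi(theta) = (-1)^k * cos(m * theta) - 2k, theta in [k*pi/m, (k+1)*pi/m].
    """
    # Index of the interval containing theta; clamp so theta == pi uses k = m - 1.
    k = min(int(m * theta / math.pi), m - 1)
    return (-1) ** k * math.cos(m * theta) - 2 * k


for deg in (0, 30, 45, 90, 180):
    t = math.radians(deg)
    print(deg, round(math.cos(t), 3), round(psi(t), 3))
```

Unlike a bare cos(mθ), which is not monotonic once mθ passes π, the piecewise form keeps ψ decreasing over the whole range, ending at 1 - 2m at θ = π.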

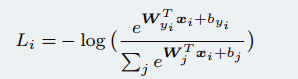

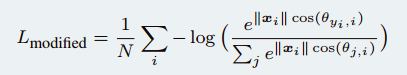

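| softmax | formula | test acc (MNIST) |
|---|---|---|
| original softmax | $L_i = -\log\frac{e^{W_{y_i}^T x_i + b_{y_i}}}{\sum_j e^{W_j^T x_i + b_j}}$ | 0.9775 |
| modified softmax | $L_i = -\log\frac{e^{\lVert x_i\rVert\cos\theta_{y_i,i}}}{\sum_j e^{\lVert x_i\rVert\cos\theta_{j,i}}}$ | 0.9847 |
| angular softmax | $L_i = -\log\frac{e^{\lVert x_i\rVert\psi(\theta_{y_i,i})}}{e^{\lVert x_i\rVert\psi(\theta_{y_i,i})} + \sum_{j\neq y_i} e^{\lVert x_i\rVert\cos\theta_{j,i}}}$ | 0.9896 |

Here $\theta_{j,i}$ is the angle between $W_j$ and $x_i$. The modified softmax fixes $\lVert W_j\rVert = 1$ and $b_j = 0$; the angular softmax additionally uses $\psi(\theta) = (-1)^k\cos(m\theta) - 2k$ for $\theta \in [k\pi/m, (k+1)\pi/m]$, $k \in \{0, \dots, m-1\}$.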
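A minimal sketch comparing the modified and angular softmax losses on one feature, assuming unit-norm weights and zero biases so that only the angles θ_j matter. The weights `W` and feature `x` are hypothetical. Because ψ(θ) ≤ cos(θ), the A-Softmax target logit is never larger than the modified one, so its loss is never smaller; with m = 1 the two coincide.

```python
import math


def psi(theta, m=4):
    """A-Softmax target function (-1)^k cos(m*theta) - 2k on [k*pi/m, (k+1)*pi/m]."""
    k = min(int(m * theta / math.pi), m - 1)
    return (-1) ** k * math.cos(m * theta) - 2 * k


def angle(u, v):
    """Angle between two vectors, clamped so rounding never breaks acos."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return math.acos(max(-1.0, min(1.0, dot / norms)))


def cross_entropy(logits, label):
    """-log softmax(logits)[label] via a max-shifted log-sum-exp."""
    top = max(logits)
    return top + math.log(sum(math.exp(z - top) for z in logits)) - logits[label]


def modified_and_angular_loss(x, W, label, m=4):
    """Both losses for one feature x, with ||W_j|| = 1 and b_j = 0."""
    norm_x = math.sqrt(sum(a * a for a in x))
    thetas = [angle(x, w) for w in W]
    logits = [norm_x * math.cos(t) for t in thetas]  # modified softmax logits
    modified = cross_entropy(logits, label)
    a_logits = list(logits)
    a_logits[label] = norm_x * psi(thetas[label], m)  # margin applies to the target only
    angular = cross_entropy(a_logits, label)
    return modified, angular


W = [(1.0, 0.0), (0.0, 1.0)]  # hypothetical class weights
x = (2.0, 0.5)                # hypothetical feature of class 0
mod, ang = modified_and_angular_loss(x, W, label=0)
print(mod, ang)  # the angular softmax loss is the larger of the two
```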

As a toy example on the MNIST dataset, the CNN features can be visualized by setting the output dimension of the embedding layer to 2 or 3, as shown in the following figures.

Figure panels (2D and 3D features): original softmax, modified softmax, angular softmax.
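With unit-norm weights and zero biases (modified and angular softmax), the prediction argmax_j ‖x‖cos(θ_j) depends only on the direction of the feature, not its length, which is why these 2D embeddings are naturally read by angle. A minimal sketch, assuming 10 hypothetical class weights spaced evenly around the circle; `predict_by_angle` and `polar_angle_deg` are illustrative helpers (the latter could color a scatter plot by direction).

```python
import math

# Hypothetical unit-norm weights for 10 classes, spaced 36 degrees apart.
W = [(math.cos(2 * math.pi * j / 10), math.sin(2 * math.pi * j / 10)) for j in range(10)]


def predict_by_angle(x, W):
    """Class whose weight has the largest cos(theta_j) with feature x.
    With ||W_j|| = 1 and b_j = 0 this equals argmax_j ||x|| cos(theta_j)."""
    norm_x = math.hypot(x[0], x[1])
    scores = [(x[0] * w[0] + x[1] * w[1]) / (norm_x * math.hypot(w[0], w[1])) for w in W]
    return max(range(len(W)), key=scores.__getitem__)


def polar_angle_deg(x):
    """Direction of a 2D feature in degrees, in [0, 360)."""
    return math.degrees(math.atan2(x[1], x[0])) % 360


# Scaling a feature changes its radius but not its predicted class.
print(predict_by_angle((0.3, 0.1), W), predict_by_angle((30.0, 10.0), W))
```

The original softmax keeps the biases and weight norms, so its decision regions are not purely angular.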

Figure panels: training loss, training accuracy.