Code for our ICML 2019 paper:

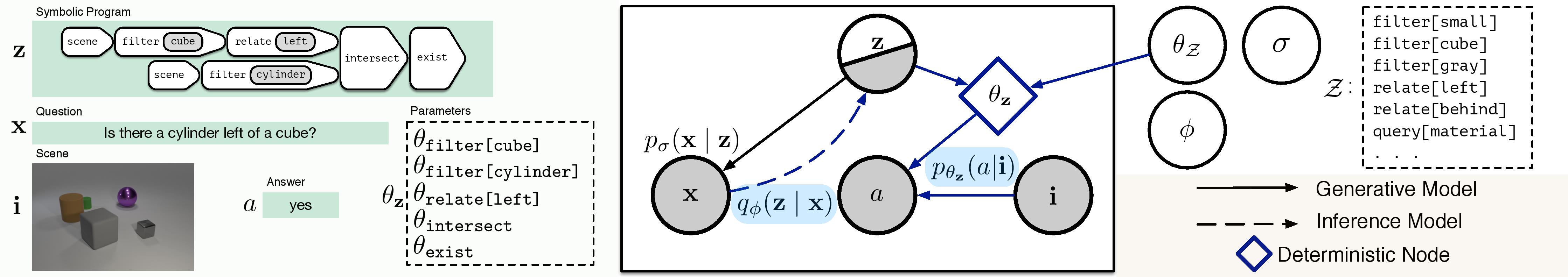

Probabilistic Neural-Symbolic Models for Interpretable Visual Question Answering

Ramakrishna Vedantam, Karan Desai, Stefan Lee, Marcus Rohrbach, Dhruv Batra, Devi Parikh

If you find this code useful, please consider citing:

@inproceedings{vedantam2019probabilistic,
  title={Probabilistic Neural-symbolic Models for Interpretable Visual Question Answering},
  author={Ramakrishna Vedantam and Karan Desai and Stefan Lee and Marcus Rohrbach and Dhruv Batra and Devi Parikh},
  booktitle={ICML},
  year={2019}
}

This codebase uses PyTorch v1.0 and supports CUDA 9 and CuDNN 7 out of the box. The recommended way to set it up is through Anaconda / Miniconda, as a development package:

- Install the Anaconda or Miniconda distribution based on Python 3+ from their downloads site.

- Clone this repository and create an environment:

git clone https://www.github.com/kdexd/probnmn-clevr

conda create -n probnmn python=3.6

- Activate the environment and install all dependencies.

conda activate probnmn

cd probnmn-clevr/

pip install -r requirements.txt

- Install this codebase as a package in development mode.

python setup.py develop

- This codebase assumes all the data to be in the $PROJECT_ROOT/data directory by default, although custom paths can be provided through config. Download the CLEVR v1.0 dataset from here and symlink it as follows:

$PROJECT_ROOT/data
    |—— CLEVR_test_questions.json
    |—— CLEVR_train_questions.json
    |—— CLEVR_val_questions.json
    `—— images
        |—— train
        |   |—— CLEVR_train_000000.png
        |   `—— CLEVR_train_000001.png ...
        |—— val
        |   |—— CLEVR_val_000000.png
        |   `—— CLEVR_val_000001.png ...
        `—— test
            |—— CLEVR_test_000000.png
            `—— CLEVR_test_000001.png ...
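Before moving on to preprocessing, it can help to confirm that the symlinked data matches the layout above. The following is a minimal sketch, not part of this codebase: the function name `missing_clevr_paths` is hypothetical, and the list of expected entries is taken only from the directory tree shown here.

```python
from pathlib import Path

# Entries copied from the directory tree above. Names in this sketch are
# hypothetical helpers, not part of the probnmn package.
EXPECTED_FILES = [
    "CLEVR_train_questions.json",
    "CLEVR_val_questions.json",
    "CLEVR_test_questions.json",
]
EXPECTED_IMAGE_DIRS = ["images/train", "images/val", "images/test"]


def missing_clevr_paths(data_root):
    """Return the expected entries that are absent under data_root.

    Symlinks are followed, so a symlinked file or directory counts as present.
    """
    root = Path(data_root)
    missing = [name for name in EXPECTED_FILES if not (root / name).is_file()]
    missing += [name for name in EXPECTED_IMAGE_DIRS if not (root / name).is_dir()]
    return missing


if __name__ == "__main__":
    # Assumes the script is run from $PROJECT_ROOT, so "data" resolves correctly.
    absent = missing_clevr_paths("data")
    print("Layout looks complete." if not absent else f"Missing: {absent}")
```

This only checks that the top-level files and image directories exist; it does not verify the contents of the JSON files or the number of images.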

- Build a vocabulary out of CLEVR programs, questions and answers, which can be read by AllenNLP, and will be used throughout the training and evaluation procedures. This will create a directory with separate text files containing unique tokens of questions, programs and answers.

python scripts/preprocess/build_vocabulary.py \
    --clevr-jsonpath data/CLEVR_train_questions.json \
    --output-dirpath data/clevr_vocabulary

- Tokenize programs, questions and answers of CLEVR training, validation (and test) splits using this vocabulary mapping. This will create H5 files to be read by probnmn.data.readers.

python scripts/preprocess/preprocess_questions.py \
    --clevr-jsonpath data/CLEVR_train_questions.json \
    --vocab-dirpath data/clevr_vocabulary \
    --output-h5path data/clevr_train_tokens.h5 \
    --split train

- Extract image features using pre-trained ResNet-101 from torchvision model zoo.

python scripts/preprocess/extract_features.py \
    --image-dir data/images/train \
    --output-h5path data/clevr_train_features.h5 \
    --split train
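The tokenization and feature extraction commands above are shown for the training split only. A rough sketch of repeating them for every split is given below. It uses only the flags that appear above; the output file names for the `val` and `test` splits are assumed by analogy with the `train` examples, and it is an assumption that both scripts accept `val` and `test` as values for `--split`. The helper names `preprocessing_commands` and `run_all` are hypothetical.

```python
import subprocess
import sys

SPLITS = ["train", "val", "test"]


def preprocessing_commands(split):
    """Build the two per-split commands using only the flags shown above.

    Output paths for splits other than "train" are assumed by analogy.
    """
    tokens = [
        sys.executable, "scripts/preprocess/preprocess_questions.py",
        "--clevr-jsonpath", f"data/CLEVR_{split}_questions.json",
        "--vocab-dirpath", "data/clevr_vocabulary",
        "--output-h5path", f"data/clevr_{split}_tokens.h5",
        "--split", split,
    ]
    features = [
        sys.executable, "scripts/preprocess/extract_features.py",
        "--image-dir", f"data/images/{split}",
        "--output-h5path", f"data/clevr_{split}_features.h5",
        "--split", split,
    ]
    return [tokens, features]


def run_all(dry_run=True):
    """Print every command; execute it too when dry_run is False."""
    for split in SPLITS:
        for command in preprocessing_commands(split):
            print(" ".join(command))
            if not dry_run:
                # check=True stops at the first failing script.
                subprocess.run(command, check=True)


if __name__ == "__main__":
    # Dry run by default: commands are printed, nothing is executed.
    run_all(dry_run=True)
```

Running it with `dry_run=True` from the repository root, inside the activated `probnmn` environment, prints the commands so they can be checked before anything is executed.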