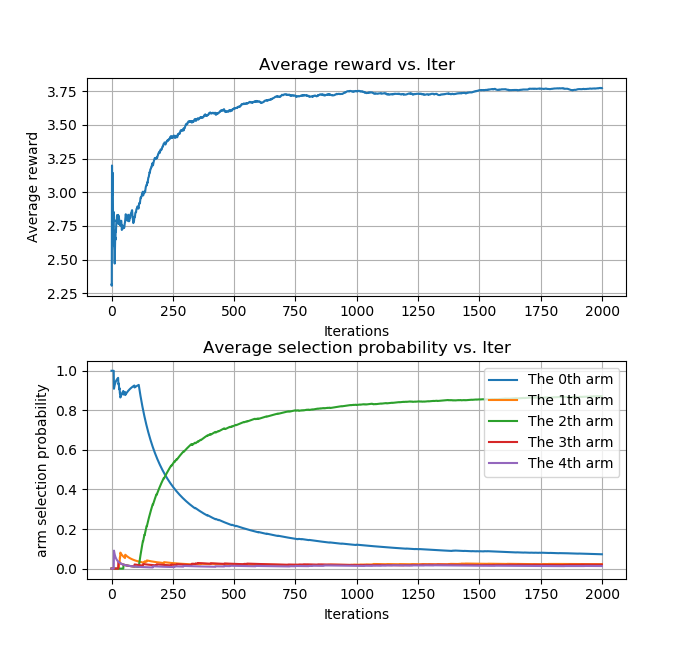

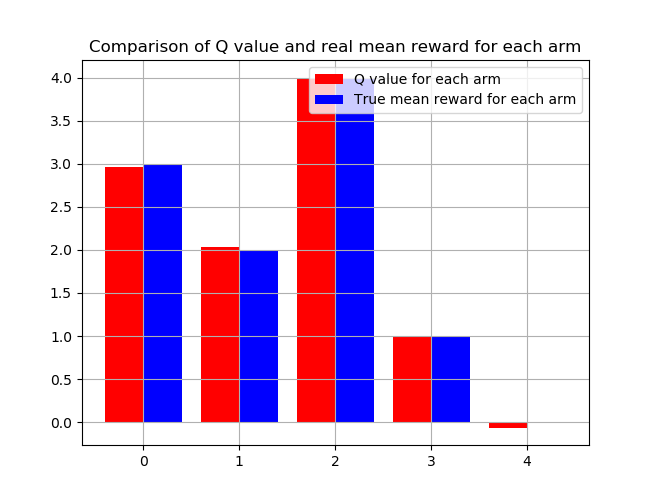

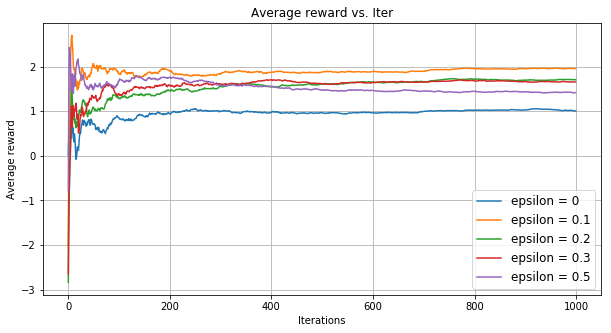

The multi-armed bandit (MAB) is a simple, easy-to-implement toy problem for understanding the basic ideas of reinforcement learning. The code here solves the MAB with two action-selection strategies, epsilon-greedy and UCB (Upper Confidence Bound), using the sample-average estimate of each arm's expected reward as its Q value.
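As a rough illustration of the two strategies, here is a minimal sketch using only the Python standard library. It is not the repository's actual code: the function names, the arm means, the exploration constant `c`, and the unit reward variance are all assumptions chosen for the example.

```python
import math
import random


def epsilon_greedy(q, epsilon, rng):
    """With probability epsilon pick a random arm, otherwise the arm with the highest Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q))
    return max(range(len(q)), key=lambda a: q[a])


def ucb(q, counts, t, c=2.0):
    """Pick the arm maximising Q plus an exploration bonus; untried arms are pulled first."""
    for a, n in enumerate(counts):
        if n == 0:
            return a
    return max(range(len(q)), key=lambda a: q[a] + c * math.sqrt(math.log(t) / counts[a]))


def run(select, true_means, steps=2000, seed=0):
    """Play a stationary Gaussian bandit; return the Q estimates and pull counts."""
    rng = random.Random(seed)
    k = len(true_means)
    q = [0.0] * k
    counts = [0] * k
    for t in range(1, steps + 1):
        a = select(q, counts, t, rng)
        reward = rng.gauss(true_means[a], 1.0)  # assumed unit reward noise
        counts[a] += 1
        q[a] += (reward - q[a]) / counts[a]  # incremental sample average
    return q, counts


means = [0.1, 0.5, 1.5]  # hypothetical arm means
q_eps, n_eps = run(lambda q, n, t, rng: epsilon_greedy(q, 0.1, rng), means)
q_ucb, n_ucb = run(lambda q, n, t, rng: ucb(q, n, t), means)
print("epsilon-greedy pulls per arm:", n_eps)
print("UCB pulls per arm:", n_ucb)
```

Both selectors share the same `run` loop, so the only difference between the two experiments is how the next arm is chosen.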

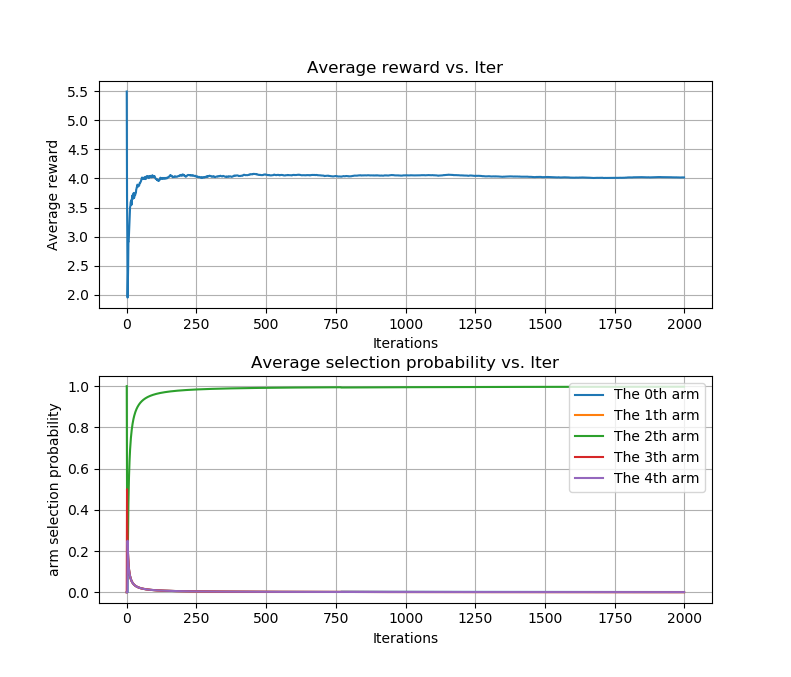

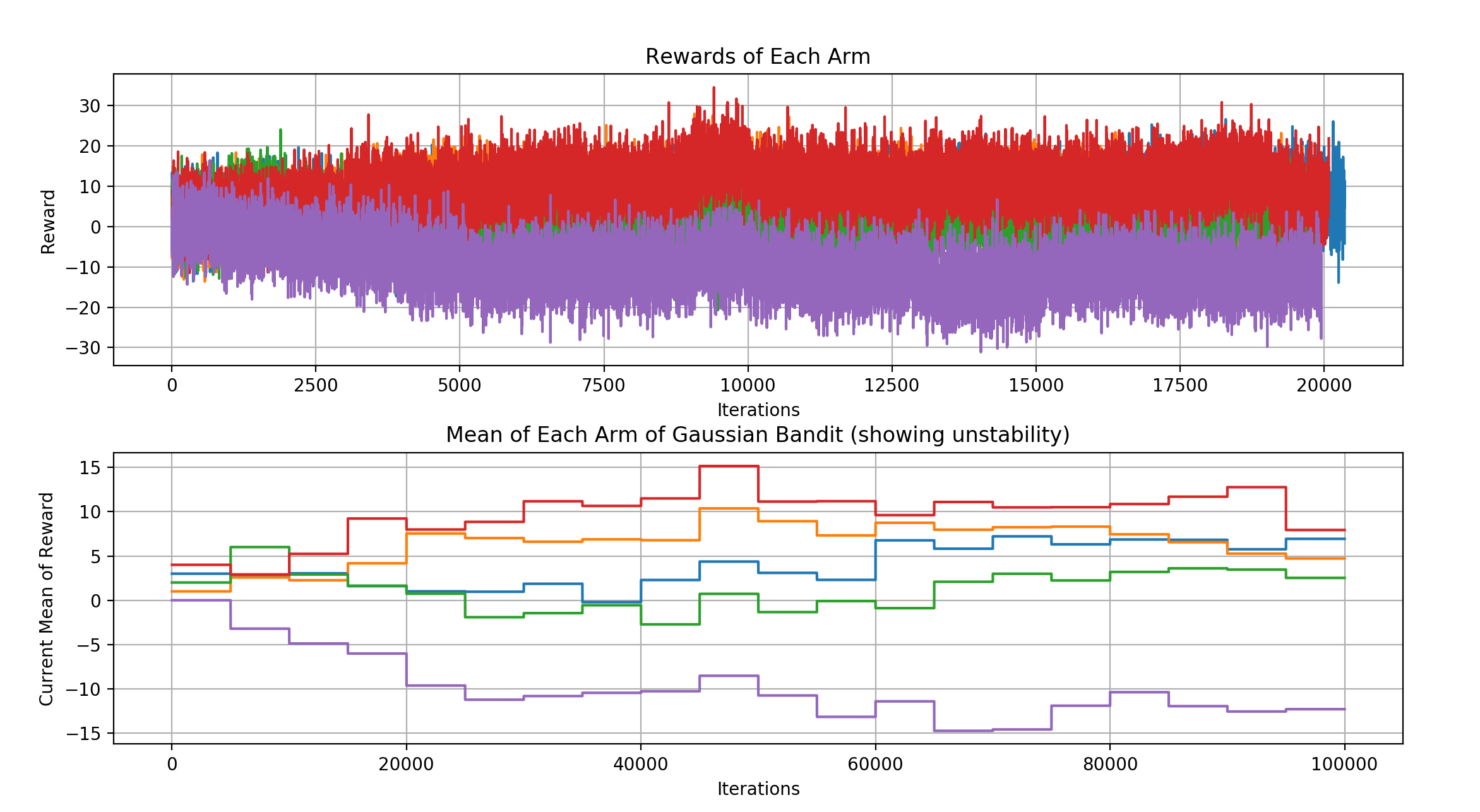

The non-stationary ("unstable") Gaussian bandit is shown below: the mean reward of every arm changes over time.

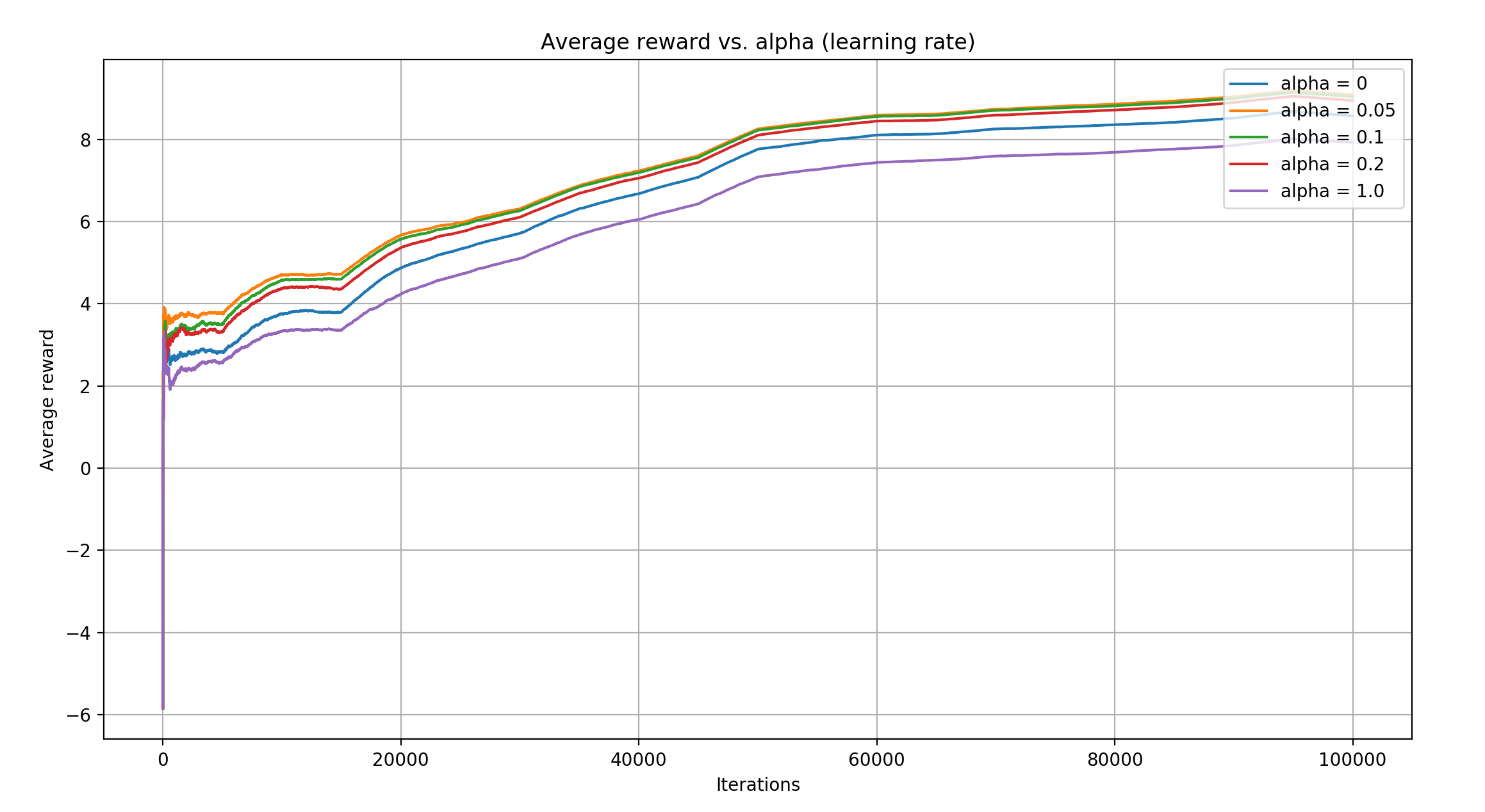
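One simple way to get arm means that change over time is to let each mean take a small random-walk step after every pull. The sketch below assumes that drift model; the actual drift used for the plot above is not stated here, and the class name and parameters (`drift_std`, `reward_std`) are hypothetical.

```python
import random


class NonStationaryGaussianBandit:
    """k-armed Gaussian bandit whose arm means random-walk after every pull (assumed model)."""

    def __init__(self, k=5, drift_std=0.01, reward_std=1.0, seed=0):
        self.rng = random.Random(seed)
        self.means = [0.0] * k
        self.drift_std = drift_std
        self.reward_std = reward_std

    def pull(self, arm):
        reward = self.rng.gauss(self.means[arm], self.reward_std)
        # Drift every arm's mean, not only the one that was just pulled.
        self.means = [m + self.rng.gauss(0.0, self.drift_std) for m in self.means]
        return reward


bandit = NonStationaryGaussianBandit()
start = list(bandit.means)
for _ in range(1000):
    bandit.pull(0)
print("means at start:", start)
print("means after 1000 pulls:", [round(m, 3) for m in bandit.means])
```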

Comparison of a constant step size (alpha) and the usual sample-average step size (1/n) on the non-stationary MAB problem.
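The difference between the two step sizes can be seen in a small noise-free sketch. The reward sequence and `alpha=0.1` are made up for illustration: an arm pays 0.0 for 500 pulls and then switches to 1.0 for another 500.

```python
def sample_average_update(q, reward, n):
    """1/n step size: q becomes the plain average of all n rewards seen so far."""
    return q + (reward - q) / n


def constant_alpha_update(q, reward, alpha=0.1):
    """Constant step size: recent rewards get exponentially more weight."""
    return q + alpha * (reward - q)


# Hypothetical reward stream: the arm's payoff jumps from 0.0 to 1.0 halfway through.
rewards = [0.0] * 500 + [1.0] * 500
q_avg = q_alpha = 0.0
for n, r in enumerate(rewards, start=1):
    q_avg = sample_average_update(q_avg, r, n)
    q_alpha = constant_alpha_update(q_alpha, r)

print(f"1/n estimate:   {q_avg:.3f}")
print(f"alpha estimate: {q_alpha:.3f}")
```

After the switch, the 1/n estimate is still averaging in the 500 old rewards and ends near 0.5, while the constant-alpha estimate forgets them and ends close to the current value of 1.0. This is why a constant step size tends to track drifting arm means better.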