School of Computer Science and Technology, Harbin Institute of Technology, Shenzhen

*Equal contribution

†Corresponding author

🔥 Details will be released. Stay tuned 🍻 👍

- [07/2024] arXiv paper released.

This is the GitHub repository of MoME: Mixture of Multimodal Experts for Generalist Multimodal Large Language Models. In this work, we propose a mixture of multimodal experts (MoME) to mitigate task interference and obtain a generalist MLLM.

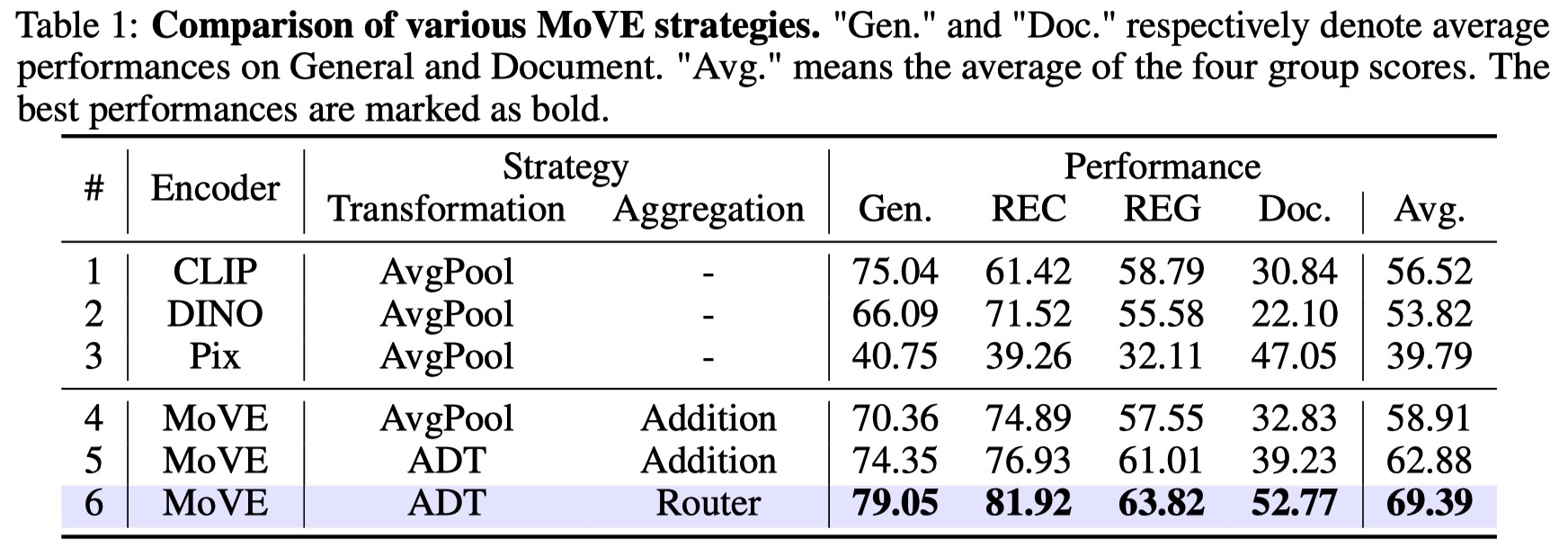

MoME is composed of two key components: a mixture of vision experts (MoVE) and a mixture of language experts (MoLE). MoVE adaptively modulates the features transformed from various vision encoders and is strongly compatible with different transformation architectures. MoLE incorporates sparsely gated experts into the LLM, improving performance at roughly unchanged inference cost.
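To make the two ideas concrete, here is a minimal pure-Python sketch of a soft mixture over vision-encoder features and a sparsely gated expert layer. It is an illustration only, not the implementation in this repository: the function names (`mix_vision_features`, `sparse_expert_layer`), the softmax router, the `top_k` parameter, and the toy experts are all assumptions made for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mix_vision_features(features, router_scores):
    """Soft mixture (hypothetical MoVE-style step): weight each encoder's
    feature vector by a softmax gate and sum the weighted vectors."""
    gates = softmax(router_scores)
    dim = len(features[0])
    return [sum(g * f[i] for g, f in zip(gates, features)) for i in range(dim)]

def sparse_expert_layer(hidden, experts, router_scores, top_k=1):
    """Sparse mixture (hypothetical MoLE-style step): evaluate only the
    top_k experts and combine their outputs with renormalized gates."""
    gates = softmax(router_scores)
    chosen = sorted(range(len(experts)), key=lambda i: gates[i], reverse=True)[:top_k]
    norm = sum(gates[i] for i in chosen)
    out = [0.0] * len(hidden)
    for i in chosen:
        expert_out = experts[i](hidden)  # unselected experts are never called
        for j, value in enumerate(expert_out):
            out[j] += gates[i] / norm * value
    return out, chosen

# Toy usage: two "encoders" with 2-d features, and two toy experts.
features = [[1.0, 0.0], [0.0, 1.0]]
mixed = mix_vision_features(features, [0.0, 0.0])  # equal router scores

experts = [lambda h: [2 * x for x in h], lambda h: [x + 1 for x in h]]
out, chosen = sparse_expert_layer([1.0, 2.0], experts, [0.1, 2.0], top_k=1)
```

Because only the selected experts run, the per-token compute in this sketch stays close to that of a single expert regardless of how many experts exist, which is the intuition behind the "roughly unchanged inference cost" claim above.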

The architecture of the proposed MoME model:

We collected 24 datasets and categorized them into four groups for instruction-tuning and evaluation:

Here we list the multitasking performance comparison of MoME and baselines. Please refer to our paper for more details.

If you find this work useful for your research, please kindly cite our paper:

@article{shen2024mome,
  title={MoME: Mixture of Multimodal Experts for Generalist Multimodal Large Language Models},
  author={Shen, Leyang and Chen, Gongwei and Shao, Rui and Guan, Weili and Nie, Liqiang},
  journal={arXiv preprint arXiv:2407.12709},
  year={2024}
}