Detect visual saliency from video or images.

NOTES:

- Inhibition of return still needs to be implemented for the output saliency map.

- The USB camera option is available but currently runs at a low frame rate (FPS).

Running ./saliency --help will show the following output:

Usage: saliency [params]

-?, -h, --help

print this help message

--alt_exit

sets program to allow right-clicking on the "Saliency" window to exit

--cam

usb camera index as input, use 0 for default device

--debug

toggle visualization of feature parameters. --dir output will be disabled

--dir

full path to where the saliency output directory will be created

--img

full path to image file as input

--no_gui

turn off displaying any output windows and using OpenCV GUI functionality. Will ignore --debug

--par

full path to the YAML parameters file

--split

output will be saved as a series of images instead of video

--start_frame

start detection at this value instead of starting at the first frame, default=1

--stop_frame

stop detection at this value instead of ending at the last frame, default=-1 (end)

--vid

full path to video file as input

--win_align

align debug windows, alignment depends on image and screen size
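The --start_frame and --stop_frame options select which part of a video is processed. As a rough illustration only, the hypothetical helper below counts the frames that a pair of values would cover. `frames_in_range` is not part of the saliency source. The sketch assumes 1-based frame numbers, as implied by default=1, and treats -1 as "until the last frame". It also assumes the stop frame itself is included. That last point is not documented here and may differ from the program.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper, not taken from the saliency source code.
// Counts how many frames of a video with `total_frames` frames fall between
// --start_frame and --stop_frame, assuming:
//   - frame numbers are 1-based (the help text gives default=1),
//   - stop_frame == -1 means "run to the last frame",
//   - the stop frame is inclusive (an assumption, not documented behavior).
int frames_in_range(int start_frame, int stop_frame, int total_frames) {
    assert(total_frames >= 0);
    // Assumption: values below 1 behave like the first frame.
    const int first = std::max(start_frame, 1);
    // -1 (the default) runs to the end; larger values are clipped to the video length.
    const int last = (stop_frame == -1) ? total_frames
                                        : std::min(stop_frame, total_frames);
    // An empty range (start after stop) processes no frames.
    return (last >= first) ? last - first + 1 : 0;
}
```

Under these assumptions, --start_frame=10 --stop_frame=20 on a 100-frame video covers `frames_in_range(10, 20, 100) == 11` frames.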

The examples below assume you are in the directory containing the saliency executable, e.g., VideoSalientCpp/saliency/bin.

Point to the sample video named vtest.avi, use the parameter settings from parameters.yml, and export the output to the exported folder:

saliency --vid=../share/samples/vtest.avi --par=../share/parameters.yml --dir=../share/exported

If --dir is specified, the export will be a video even when the input is an image. The video will contain as many frames as are processed before the window is closed, unless --stop_frame is specified.

saliency --img=../share/samples/racoon.jpg --dir=../share/exported

To save the frames as separate images instead of a video, add --split.

saliency --img=../share/samples/racoon.jpg --dir=../share/exported --split

To use a series of images as input, the images must be in the same folder and numbered (see samples/tennis for an example). You must also pass them as a video using --vid and give the numbering format in the file name, as shown below.

saliency --vid=../share/samples/tennis/%05d.jpg

To use the default camera device:

saliency --cam=0

Specify different saliency parameters with the --par option. If it is not specified, the internal default parameters are used. The parameters that can be edited are:

# --------------------------------------------------------------------------------------

# General saliency model parameters

# --------------------------------------------------------------------------------------

model:

# Proportion of the image size used as the max LoG kernel size. Each kernel will be half the size of the previous.

max_LoG_prop: 5.0200000000000000e-01

# Number of LoG kernels. Set to -1 to get as many kernels as possible, i.e., until the smallest size is reached. Set to 0 to turn off all LoG convolutions.

n_LoG_kern: 3

# Window size for amount of blur applied to saliency map. Set to -1 to calculate window size from image size.

gauss_blur_win: -1

# Increase global contrast between high/low saliency.

contrast_factor: 2.

# Focal area proportion. Proportion of image size used to attenuate outer edges of the image area.

central_focus_prop: 6.3000000000000000e-01

# Threshold value to generate salient contours. Should be between 0 and 255. Set to -1 to use Otsu automatic thresholding.

saliency_thresh: -1.

# Threshold multiplier. Only applied to automatic threshold (i.e., saliency_thresh=-1).

saliency_thresh_mult: 1.5000000000000000e+00

# --------------------------------------------------------------------------------------

# List of parameters for each feature map channel

# --------------------------------------------------------------------------------------

feature_channels:

# Luminance/Color parameters --------------------------------------------------------

color:

# Color space to use as starting point for extracting luminance and color. Should be either "DKL", "LAB", or "RGB".

colorspace: DKL

# Scale parameter (k) for logistic function. Sharpens boundary between high/low intensity as value increases.

scale: 1.

# Shift parameter (mu) for logistic function. This threshold cuts lower level intensity as this value increases.

shift: 0.

# Weight applied to all pixels in each map/image. Set to 0 to toggle channel off.

weight: 1.

# Line orientation parameters -------------------------------------------------------

lines:

# Kernel size for square Gabor patches. Set to -1 to calculate window size from image size.

kern_size: -1

# Number of rotations used to create differently angled Gabor patches. N rotations are split evenly between 0 and 2pi.

n_rotations: 8

# Sigma parameter for Gabor filter. Standard deviation of the Gaussian envelope.

sigma: 1.6250000000000000e+00

# Lambda parameter for Gabor filter. Wavelength of the sinusoidal factor.

lambda: 6.

# Psi parameter for Gabor filter. Phase offset of the sinusoidal factor.

psi: 1.9634950000000000e+00

# Gamma parameter for Gabor filter. Spatial aspect ratio of the kernel.

gamma: 3.7500000000000000e-01

# Weight applied to all pixels in each map/image. Set to 0 to toggle channel off.

weight: 1.

# Motion flicker parameters ---------------------------------------------------------

flicker:

# Cutoff value for minimum change in image contrast. Value should be between 0 and 1.

lower_limit: 2.0000000298023224e-01

# Cutoff value for maximum change in image contrast. Value should be between 0 and 1.

upper_limit: 1.

# Weight applied to all pixels in each map/image. Set to 0 to toggle channel off.

weight: 1.

# Optical flow parameters -----------------------------------------------------------

flow:

# Size of square window for sparse flow estimation. Set to -1 to calculate window size from image size. Setting this to a smaller value generates higher flow intensity but at the cost of accuracy.

flow_window_size: -1

# Maximum number of allotted points used to estimate flow between frames.

max_num_points: 200

# Minimum distance between new points used to estimate flow.

min_point_dist: 15.

# Half size of the dilation/erosion kernel used to expand flow points.

morph_half_win: 6

# Number of iterations for the morphology operations. This will perform N dilations and N/2 erosion steps.

morph_iters: 8

# Weight applied to all pixels in each map/image. Set to 0 to toggle channel off.

weight: 1.

Download the repository contents to your user folder (you can download them anywhere, but the example below uses the user folder). If you already have git installed, you can run the following in a terminal:

cd ~

git clone https://github.com/iamamutt/VideoSalientCpp.git

Whenever the source code is updated, you can navigate to the VideoSalientCpp folder and pull the new changes with:

cd ~/VideoSalientCpp

git pull

On macOS, you will need some developer tools to build the program. Open the Terminal app and run the following:

xcode-select --install

If you run clang --version in the terminal, you should see output like the following. The version should be at least 11.

Apple clang version 11.0.0 (clang-1100.0.33.16)

Target: x86_64-apple-darwin19.6.0

Thread model: posix

You'll also need Homebrew to install the remaining libraries and dependencies: https://brew.sh/

After Homebrew is installed, run:

brew update

brew install cmake opencv ffmpeg

After the above dependencies are installed, navigate to the repository folder. For example, if you saved the contents to ~/VideoSalientCpp, run cd ~/VideoSalientCpp. From the folder root, run the following to build the saliency binary:

mkdir build && cd build

cmake -DCMAKE_BUILD_TYPE=Release ..

cmake --build . --config Release --target install

cd ..

The compiled binaries will be in ./saliency/bin. Test them using the sample data:

cd saliency

./bin/saliency --vid=share/samples/vtest.avi

Hold down the "ESC" key to quit (make sure the saliency output window is selected).

On Windows, install the following dependencies:

- OpenCV (>=4.5.0) https://github.com/opencv/opencv/archive/4.5.0.zip

- CMake Binary distribution https://cmake.org/download/

- GCC (>= 10) https://winlibs.com/

TODO

Once OpenCV is built from source, build the saliency program by navigating to the repo. You'll need to know the path where OpenCVConfig.cmake is located. Substitute <OpenCVDir> with that path below.

cd path/to/VideoSalientCpp

mkdir build && cd build

cmake --no-warn-unused-cli -DOPENCV_INSTALL_DIR=<OpenCVDir> -DCMAKE_BUILD_TYPE=Release -G "MinGW Makefiles" ..

cmake --build . --config Release --target install -- -j

The saliency program will be in VideoSalientCpp/saliency/bin.

If you don't want to build from source, you can use the Docker image to run the program. The image can be found under Releases on GitHub.

- Install Docker Desktop: https://www.docker.com/get-started

- Open the application after installing it. Allow privileged access if prompted.

- Check that Docker works from the command line. In a terminal, type:

docker --version

- Obtain the Docker image saliency-image.tar.gz from "Releases" on GitHub.

- In a terminal, navigate to the directory containing the Docker image and load it with:

docker load -i saliency-image.tar.gz

- Run the image by entering the command:

docker run -it --rm saliency-app:latest

You should see the saliency program help documentation.

Open up the file docker-compose.yml in any text editor. The fields that need to be changed are environment, command, and source.

- source: The volumes: source: key maps a directory on your host machine to a directory inside the Docker container, e.g., source: /path/to/my/data. If your data is located on your computer at ~/videos, use the full absolute path, such as source: $USERPROFILE/videos on Windows or source: $HOME/videos on Unix-based systems. To use the samples from this repo, set the source mount as source: <saliency>/saliency/share, where <saliency> is the full path to the VideoSalientCpp repo folder.

- command: These are the command-line arguments passed to the saliency program. If you want to specify a video with the --vid= option, use the path relative to the mapped volume, e.g., --vid=my_video.avi, which may be located in ~/videos. Add the option --no_gui to run the container without installing XQuartz or XLaunch on your host machine.

- environment: Change DISPLAY=#.#.#.#:0.0 to use your IP address. If your IP address is 192.168.0.101, the field would be - DISPLAY=192.168.0.101:0.0. This setting is required for displaying output windows. If you would like to run the program without displaying any output, add the option --no_gui to the command: list. See the Displaying windows section below for GUI setup.
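The DISPLAY value uses the standard X11 display-name form host:display.screen. The trailing :0.0 therefore selects display 0, screen 0 on the machine at that IP address. The sketch below is a hypothetical helper that builds the value from a dotted-quad IPv4 address and rejects malformed input. `display_from_ipv4` is not part of this repository.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical helper, not part of this repository.
// Builds an X11 DISPLAY value of the form host:display.screen from a
// dotted-quad IPv4 address, e.g. "192.168.0.101" -> "192.168.0.101:0.0".
// Returns an empty string if `ip` is not four numbers in 0..255 joined by dots.
std::string display_from_ipv4(const std::string& ip, int display = 0, int screen = 0) {
    assert(display >= 0 && screen >= 0);
    std::istringstream in(ip);
    std::string part;
    int count = 0;
    while (std::getline(in, part, '.')) {
        // Each octet must be 1-3 decimal digits no larger than 255.
        if (part.empty() || part.size() > 3) return "";
        for (char c : part) {
            if (c < '0' || c > '9') return "";
        }
        if (std::stoi(part) > 255) return "";
        ++count;
    }
    // Require exactly four octets and no trailing dot (getline does not report it).
    if (count != 4 || ip.back() == '.') return "";
    return ip + ":" + std::to_string(display) + "." + std::to_string(screen);
}
```

For example, `display_from_ipv4("192.168.0.101")` returns `"192.168.0.101:0.0"`, which matches the DISPLAY value in the environment field described above.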

After configuring docker-compose.yml, run the saliency service by entering this in the terminal:

docker-compose run --rm saliency

When running from the Docker container, the saliency program tries to show windows of the saliency output. These windows are generated by OpenCV and require access to the display. This access is operating-system dependent. Without some way to map the display from the container to your host machine, you will get an error such as Can't initialize GTK backend in function 'cvInitSystem'.

After performing the steps below, you should be able to run docker-compose without the --no_gui option and see the output windows. You'll need to have XLaunch or XQuartz running each time you run the Docker container.

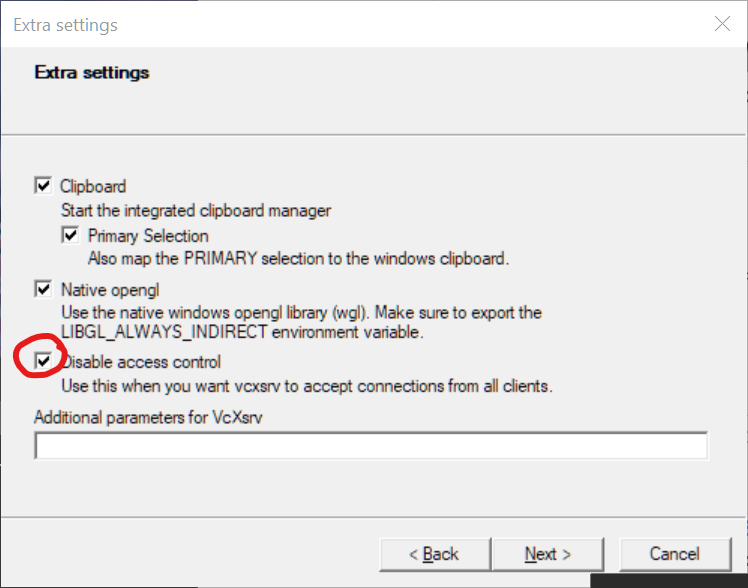

- Download and install VcXsrv from here: https://sourceforge.net/projects/vcxsrv/

- Run XLaunch and use all the default settings except on the last page, where it says "Disable access control." Make sure that option is selected.

To get your IP address on Windows:

ipconfig

Look for the line IPv4 Address and edit the docker-compose.yml file with your address.

Use Homebrew to install XQuartz and open the application.

brew install xquartz

open -a Xquartz

Once XQuartz is open, allow connections by going to:

XQuartz > preferences > Security > allow connections from network clients

Next you will need your IP address. Get it with the command below.

ifconfig en0

Look at the inet line of the ifconfig output. If your IP address is 192.168.0.101, set the DISPLAY environment variable to 192.168.0.101:0.0 using the docker -e option. To test that everything works, run the command below.

This command opens up XQuartz and allows a connection between docker and the X server. It then runs the docker image using a video sample stored in the image.

xhost +

docker run -e DISPLAY=192.168.0.101:0.0 saliency-app:latest -c --vid=../internal/samples/vtest.avi

Edit the docker-compose.yml file with your IP address. You will have to run docker-compose together with the xhost command each time:

xhost + && docker-compose run --rm saliency

Quit XQuartz when you're done.