Temporally Efficient Vision Transformer for Video Instance Segmentation (CVPR 2022, Oral)

by Shusheng Yang1,3, Xinggang Wang1 📧, Yu Li4, Yuxin Fang1, Jiemin Fang1,2, Wenyu Liu1, Xun Zhao3, Ying Shan3.

1 School of EIC, HUST, 2 AIA, HUST, 3 ARC Lab, Tencent PCG, 4 IDEA.

(📧) corresponding author.

- This repo provides code, models and training/inference recipes for TeViT (Temporally Efficient Vision Transformer for Video Instance Segmentation).

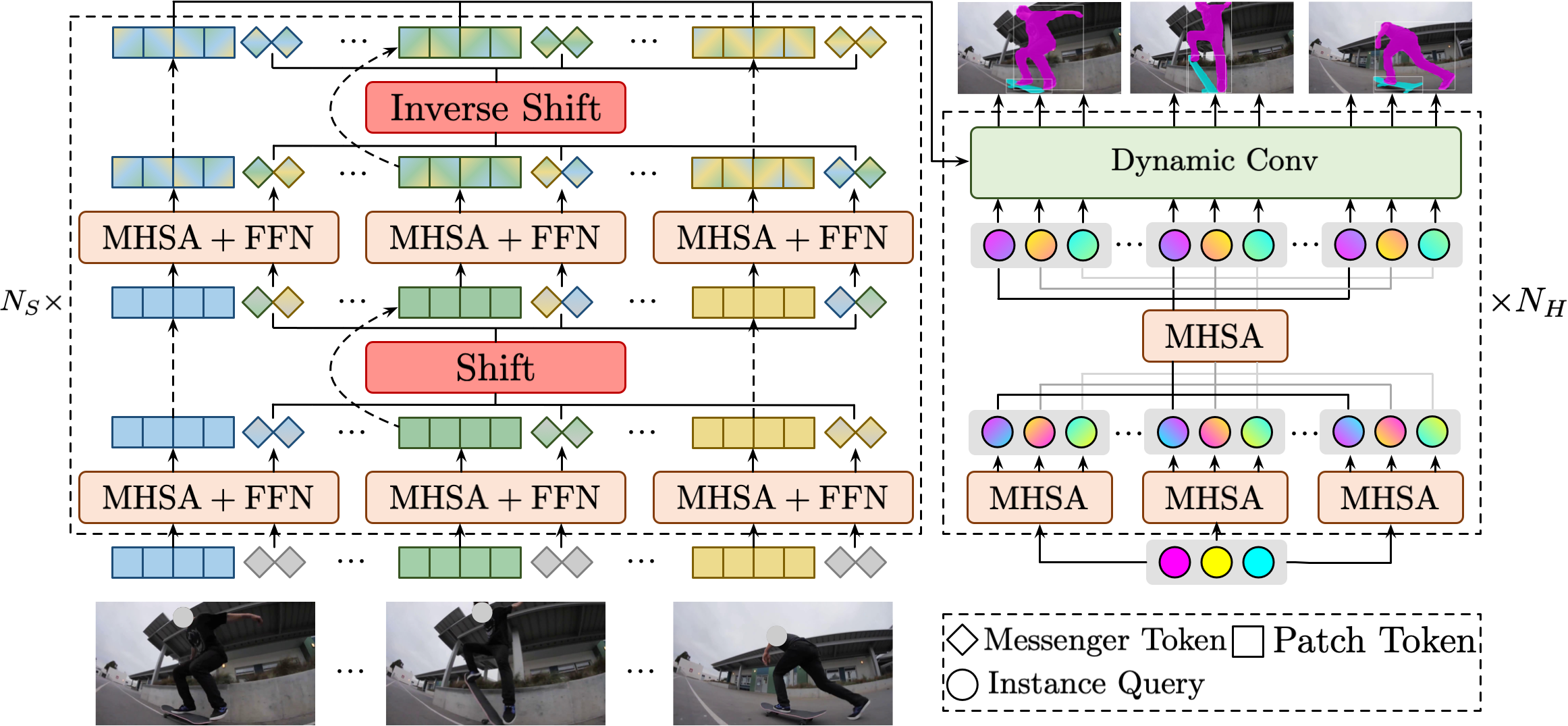

- TeViT is a transformer-based end-to-end video instance segmentation framework. We build our framework upon the query-based instance segmentation methods, i.e., QueryInst.

- We propose a messenger shift mechanism in the transformer backbone, as well as a spatiotemporal query interaction head in the instance heads. These two designs fully utilize both frame-level and instance-level temporal context information and obtain strong temporal modeling capacity with negligible extra computational cost.

- We provide both checkpoints and CodaLab server submissions on the YouTube-VIS-2019 dataset.

| Name | AP | AP@50 | AP@75 | AR@1 | AR@10 | Params | model | submission |
|---|---|---|---|---|---|---|---|---|
| TeViT_MsgShifT | 46.3 | 70.6 | 50.9 | 45.2 | 54.3 | 161.83 M | link | link |
| TeViT_MsgShifT_MST | 46.9 | 70.1 | 52.9 | 45.0 | 53.4 | 161.83 M | link | link |

- Because training is unstable, we conducted multiple runs; each checkpoint above is the best one among those runs. The average performance is reported in our paper.

- Besides the basic models, we also provide TeViT with ResNet-50 and Swin-L backbones. These models are also trained on the YouTube-VIS-2019 dataset.

- MST denotes multi-scale training.

| Name | AP | AP@50 | AP@75 | AR@1 | AR@10 | Params | model | submission |
|---|---|---|---|---|---|---|---|---|
| TeViT_R50 | 42.1 | 67.8 | 44.8 | 41.3 | 49.9 | 172.3 M | link | link |
| TeViT_Swin-L_MST | 56.8 | 80.6 | 63.1 | 52.0 | 63.3 | 343.86 M | link | link |

- Due to backbone limitations, TeViT models with ResNet-50 and Swin-L backbones use the STQI Head only (i.e., without our proposed messenger shift mechanism).

- With Swin-L as the backbone network, we apply more instance queries (i.e., from 100 to 300) and stronger data augmentation strategies. Both further boost the final performance.
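As a rough intuition for the messenger shift mechanism mentioned above, the toy sketch below shifts per-frame messenger tokens along the temporal axis so that each frame receives tokens from its neighbors. It is not the repository's implementation: the function name `shift_messengers`, the half-from-previous/half-from-next split, and the circular wrap-around at clip boundaries are all assumptions made for illustration.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shift_messengers(frames: Sequence[Sequence[T]]) -> List[List[T]]:
    """Toy temporal shift of messenger tokens (hypothetical helper).

    `frames[t]` holds the messenger tokens of frame t. The first half of the
    token slots takes its value from the previous frame and the second half
    from the next frame (wrapping around at the clip boundaries), so frames
    exchange context through a pure re-indexing step.
    """
    num_frames = len(frames)
    if num_frames == 0:
        return []
    half = len(frames[0]) // 2
    shifted = []
    for t in range(num_frames):
        prev_frame = frames[(t - 1) % num_frames]
        next_frame = frames[(t + 1) % num_frames]
        shifted.append(list(prev_frame[:half]) + list(next_frame[half:]))
    return shifted


if __name__ == "__main__":
    # Three frames with two messenger tokens each.
    clip = [["a0", "a1"], ["b0", "b1"], ["c0", "c1"]]
    for frame in shift_messengers(clip):
        print(frame)
```

The shift only moves existing tokens between frames, which is in line with the "negligible extra computational cost" noted above; the actual split and boundary handling in TeViT may differ.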

- Linux

- Python 3.7+

- CUDA 10.2+

- GCC 5+

- Clone the repository locally:

      git clone https://github.com/hustvl/TeViT.git

- Create a conda virtual environment and activate it:

      conda create --name tevit python=3.7.7
      conda activate tevit

- Install YTVOS Version API from youtubevos/cocoapi:

      pip install "git+https://github.com/youtubevos/cocoapi.git#egg=pycocotools&subdirectory=PythonAPI"

- Install Python requirements:

      torch==1.9.0
      torchvision==0.10.0
      mmcv==1.4.8

      pip install -r requirements.txt

- Please follow the Docs to install MMDetection:

      python setup.py develop

- Download the YouTube-VIS 2019 dataset from here, and organize the dataset as follows:

      TeViT
      ├── data
      │   ├── youtubevis
      │   │   ├── train
      │   │   │   ├── 003234408d
      │   │   │   ├── ...
      │   │   ├── val
      │   │   │   ├── ...
      │   │   ├── annotations
      │   │   │   ├── train.json
      │   │   │   ├── valid.json
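Before training, it can help to check that the layout above is in place. The following is a minimal sketch using only the standard library; the helper name `missing_dataset_paths` and the default root `data/youtubevis` are assumptions based on the tree above, not part of the TeViT codebase.

```python
from pathlib import Path
from typing import List

# Entries taken from the directory tree above, relative to the dataset root.
EXPECTED = [
    "train",
    "val",
    "annotations/train.json",
    "annotations/valid.json",
]


def missing_dataset_paths(root: str = "data/youtubevis") -> List[str]:
    """Return the expected entries that do not exist under `root` (hypothetical helper)."""
    base = Path(root)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


if __name__ == "__main__":
    missing = missing_dataset_paths()
    if missing:
        print("Missing entries:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```

Run it from the repository root so that the relative default path resolves to the `data` directory shown in the tree.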

Run inference with a trained checkpoint:

    python tools/test_vis.py configs/tevit/tevit_msgshift.py $PATH_TO_CHECKPOINT

After inference, the predicted results are stored in results.json. Submit this file to the evaluation server to get the final performance.
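Evaluation servers of this kind commonly expect the results file inside a zip archive. That is an assumption here, so check the server's submission instructions first. Under that assumption, a minimal sketch for packaging `results.json` could look like this (the helper name `pack_results` and the archive name `submission.zip` are hypothetical):

```python
import zipfile
from pathlib import Path


def pack_results(results_path: str = "results.json",
                 archive_path: str = "submission.zip") -> str:
    """Write `results_path` into a zip archive and return the archive path."""
    src = Path(results_path)
    if not src.is_file():
        raise FileNotFoundError(f"{src} not found; run inference first")
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        # Store the file at the archive root under its own file name.
        zf.write(src, arcname=src.name)
    return archive_path


if __name__ == "__main__":
    print("Wrote", pack_results())
```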

- Download the COCO pretrained QueryInst with PVT-B1 backbone from here.

- Train TeViT with 8 GPUs:

      ./tools/dist_train.sh configs/tevit/tevit_msgshift.py 8 --no-validate --cfg-options load_from=$PATH_TO_PRETRAINED_WEIGHT

- Train TeViT with multi-scale data augmentation:

      ./tools/dist_train.sh configs/tevit/tevit_msgshift_mstrain.py 8 --no-validate --cfg-options load_from=$PATH_TO_PRETRAINED_WEIGHT

- The whole training process takes about three hours with 8 Tesla V100 GPUs.

- To train TeViT with a ResNet-50 or Swin-L backbone, please download the COCO pretrained weights from QueryInst.

This code is mainly based on mmdetection and QueryInst. Thanks for their awesome work and great contributions to the computer vision community!

If you find our paper and code useful in your research, please consider giving a star ⭐ and citation 📝 :

    @inproceedings{yang2022tevit,
      title={Temporally Efficient Vision Transformer for Video Instance Segmentation},
      author={Yang, Shusheng and Wang, Xinggang and Li, Yu and Fang, Yuxin and Fang, Jiemin and Liu, Wenyu and Zhao, Xun and Shan, Ying},
      booktitle = {Proc. IEEE Conf. Computer Vision and Pattern Recognition (CVPR)},
      year = {2022}
    }