Jingfeng Yao1, Xinggang Wang1 📧, Yuehao Song1, Huangxuan Zhao2,

Jun Ma3,4,5, Yajie Chen1, Wenyu Liu1, Bo Wang3,4,5,6,7 📧

1School of Electronic Information and Communications,

Huazhong University of Science and Technology, Wuhan, Hubei, China

2Department of Radiology, Union Hospital, Tongji Medical College,

Huazhong University of Science and Technology, Wuhan, Hubei, China

3Peter Munk Cardiac Centre, University Health Network, Toronto, Ontario, Canada

4Department of Laboratory Medicine and Pathobiology, University of Toronto, Toronto, Ontario, Canada

5Vector Institute for Artificial Intelligence, Toronto, Ontario, Canada

6AI Hub, University Health Network, Toronto, Ontario, Canada

7Department of Computer Science, University of Toronto, Toronto, Ontario, Canada

(📧) corresponding author.

May 8, 2024: EVA-X is released! We have released our code and arXiv paper.

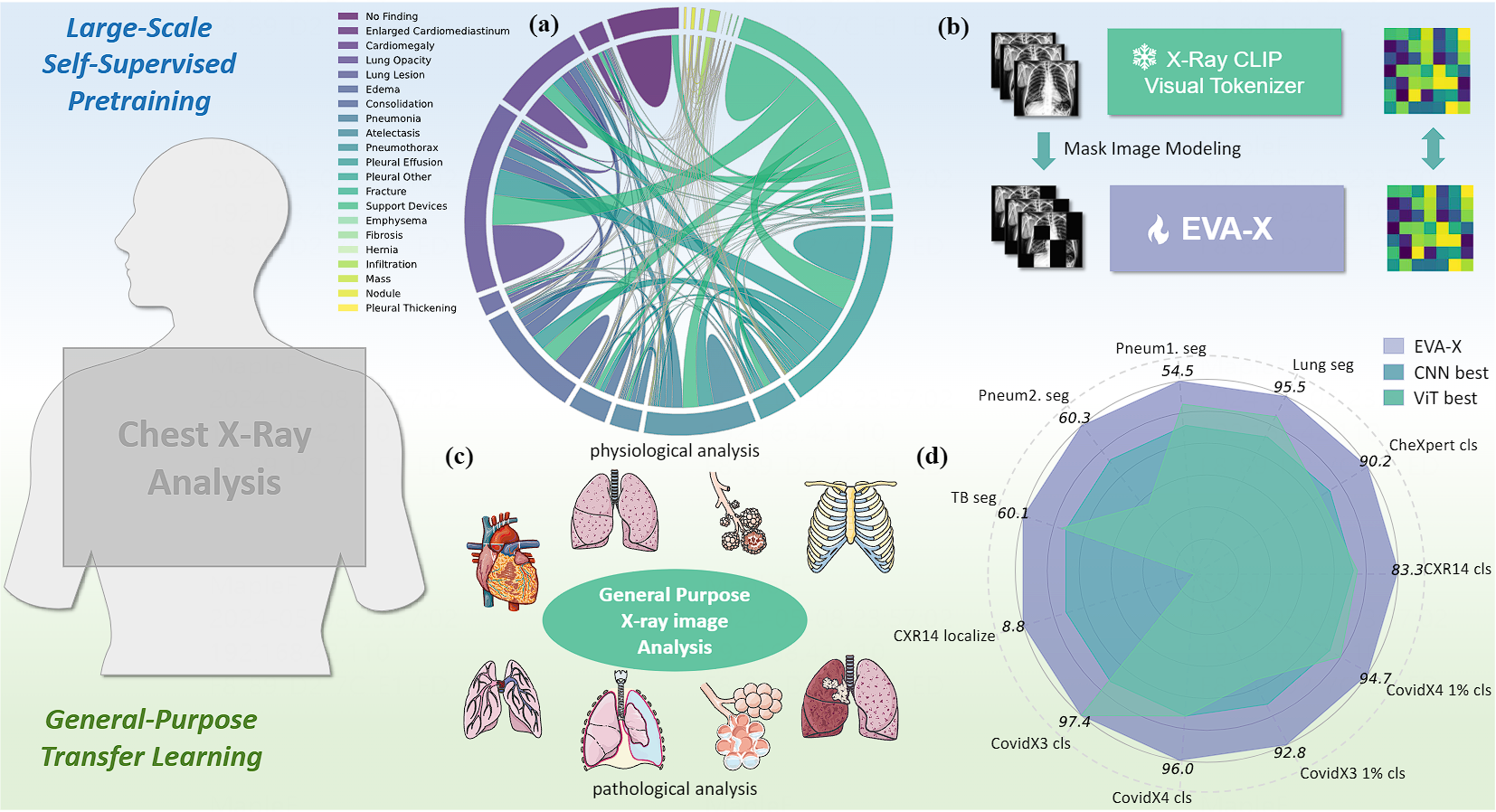

EVA-X models are Vision Transformers (ViTs) pre-trained specifically on medical X-ray images.

Take them away for your own X-ray tasks!

The diagnosis and treatment of chest diseases play a crucial role in maintaining human health. X-ray examination has become the most common clinical examination because of its efficiency and low cost. Artificial intelligence methods for chest X-ray analysis are limited by insufficient annotated data and uneven annotation quality, which leads to weak generalization and makes clinical deployment difficult. Here we present EVA-X, a foundation model for X-ray images with broad applicability to various chest disease detection tasks. EVA-X is the first X-ray-based self-supervised learning method capable of capturing both semantic and geometric information from unlabeled images for universal X-ray image representation. In extensive experiments, EVA-X demonstrated strong performance in chest disease analysis and localization, becoming the first model to span over 20 different chest diseases and achieving leading results on over 11 different detection tasks in the medical field. Additionally, EVA-X significantly reduces the burden of data annotation in medical AI and shows strong potential for few-shot learning. We expect EVA-X to greatly advance the development and application of medical foundation models and to bring substantial changes to future medical research and clinical practice.
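Multi-disease chest X-ray classification is usually framed as a multi-label problem (one sigmoid output per disease), and results of the kind summarized above are commonly reported as AUROC averaged over diseases. The NumPy sketch below computes per-disease AUROC with the rank-based Mann-Whitney formulation and averages it. It is a generic illustration, not the evaluation code shipped with EVA-X, and the helper names `auroc` and `mean_auroc` are hypothetical.

```python
import numpy as np

def auroc(scores: np.ndarray, labels: np.ndarray) -> float:
    """Binary AUROC via the Mann-Whitney U statistic (tied scores get average ranks)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    n_pos, n_neg = labels.sum(), (~labels).sum()
    if n_pos == 0 or n_neg == 0:
        return float("nan")  # undefined unless both classes are present
    order = np.argsort(scores, kind="mergesort")
    sorted_scores = scores[order]
    ranks = np.empty(len(scores), dtype=float)
    i = 0
    while i < len(scores):
        # Extend j over a run of tied scores starting at position i.
        j = i
        while j + 1 < len(scores) and sorted_scores[j + 1] == sorted_scores[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # average 1-based rank of the tie group
        i = j + 1
    # U counts (positive, negative) pairs ranked correctly, ties counting one half.
    u = ranks[labels].sum() - n_pos * (n_pos + 1) / 2
    return float(u / (n_pos * n_neg))

def mean_auroc(probs: np.ndarray, targets: np.ndarray) -> float:
    """Average AUROC over disease columns; probs and targets have shape (N, num_diseases)."""
    per_class = [auroc(probs[:, k], targets[:, k]) for k in range(probs.shape[1])]
    return float(np.nanmean(per_class))  # skip diseases with only one class present

probs = np.array([[0.1, 0.9], [0.4, 0.2], [0.35, 0.3], [0.8, 0.6]])  # 4 images, 2 diseases
targets = np.array([[0, 1], [0, 0], [1, 0], [1, 1]])
print(mean_auroc(probs, targets))  # 0.875
```

Because AUROC depends only on how scores are ordered, raw logits and sigmoid probabilities yield the same value.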

| EVA-X Series | Architecture | #Params | Checkpoint | Tokenizer | MIM epochs |
|---|---|---|---|---|---|
| EVA-X-Ti | ViT-Ti/16 | 6M | 🤗download | MGCA-ViT-B/16 | 900 |
| EVA-X-S | ViT-S/16 | 22M | 🤗download | MGCA-ViT-B/16 | 600 |
| EVA-X-B | ViT-B/16 | 86M | 🤗download | MGCA-ViT-B/16 | 600 |
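The Tokenizer and MIM epochs columns refer to masked image modeling (MIM) pre-training: a ViT student sees an image with some patches masked and learns to predict the features that a frozen tokenizer (here MGCA-ViT-B/16) produces for those patches. The NumPy sketch below shows one EVA-style form of this objective, negative cosine similarity averaged over the masked patches only. It is an illustration under assumptions, not the EVA-X training code: the uniform random masking, the 0.4 mask ratio, the 768-dimensional features, and the helper names `random_patch_mask` and `mim_feature_loss` are all hypothetical, and random arrays stand in for real tokenizer and student outputs.

```python
import numpy as np

def random_patch_mask(num_patches: int, mask_ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask over patches; True marks a masked (hidden) patch."""
    num_masked = int(round(num_patches * mask_ratio))
    mask = np.zeros(num_patches, dtype=bool)
    mask[rng.choice(num_patches, size=num_masked, replace=False)] = True
    return mask

def mim_feature_loss(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """Negative cosine similarity between student predictions and tokenizer
    features, averaged over masked patches only.

    pred, target: (num_patches, dim); mask: (num_patches,) bool.
    """
    p = pred[mask]
    t = target[mask]
    p = p / np.linalg.norm(p, axis=-1, keepdims=True)
    t = t / np.linalg.norm(t, axis=-1, keepdims=True)
    return float(-(p * t).sum(axis=-1).mean())

rng = np.random.default_rng(0)
num_patches, dim = 196, 768  # 14x14 patches of a 224x224 image; ViT-B width (assumed)
mask = random_patch_mask(num_patches, mask_ratio=0.4, rng=rng)
target = rng.standard_normal((num_patches, dim))  # stand-in for tokenizer features
pred = rng.standard_normal((num_patches, dim))    # stand-in for student predictions
print(mim_feature_loss(pred, target, mask))       # close to 0 for unrelated predictions
print(mim_feature_loss(target, target, mask))     # close to -1 when predictions match
```

Averaging only over masked positions means the loss is driven entirely by what the student must infer from the visible context.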

Countdown 3, 2, 1. Launch EVA-X! 🚀

- Download pre-trained weights.
- Install pytorch_image_models (`timm`):
  ```bash
  pip install timm==0.9.0
  ```
- Initialize EVA-X with two lines of Python. You can also modify `eva_x.py` for your own X-ray tasks.
  ```python
  from eva_x import eva_x_tiny_patch16, eva_x_small_patch16, eva_x_base_patch16

  # Build the ViT-S/16 variant and load weights from a local checkpoint file
  model = eva_x_small_patch16(pretrained="/path/to/pre-trained")
  ```
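Chest X-rays are often stored as single-channel images with 12- or 16-bit intensities, while ViT backbones typically take fixed-size 3-channel inputs. The NumPy sketch below shows one way to prepare such an image: min-max scaling, a nearest-neighbour resize, channel replication, and per-channel normalization. The 224×224 input size, the ImageNet mean/std constants, and the helper name `xray_to_input` are assumptions for illustration; check the preprocessing in the repository's fine-tuning configs before relying on them.

```python
import numpy as np

# Assumed normalization constants (standard ImageNet statistics).
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def xray_to_input(img: np.ndarray, size: int = 224) -> np.ndarray:
    """Grayscale X-ray (H, W) -> float32 array (1, 3, size, size) in NCHW layout."""
    img = img.astype(np.float32)
    lo, hi = img.min(), img.max()
    # Min-max scale to [0, 1]; a constant image maps to all zeros.
    img = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)
    # Nearest-neighbour resize via index sampling (keeps the sketch NumPy-only).
    rows = (np.arange(size) * img.shape[0] / size).astype(int)
    cols = (np.arange(size) * img.shape[1] / size).astype(int)
    img = img[rows][:, cols]
    # Replicate the single channel to 3 channels, then normalize each channel.
    img = np.stack([img] * 3, axis=0)  # (3, size, size)
    img = (img - IMAGENET_MEAN[:, None, None]) / IMAGENET_STD[:, None, None]
    return img[None].astype(np.float32)  # add the batch dimension

raw = np.random.default_rng(0).integers(0, 4096, size=(512, 400), dtype=np.uint16)  # stand-in 12-bit image
x = xray_to_input(raw)
print(x.shape)  # (1, 3, 224, 224)
```

The array can be converted with `torch.from_numpy(x)` before being passed to the model. In practice a smoother resize such as bilinear interpolation is usually preferable to nearest-neighbour sampling.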

Try EVA-X representations for your own X-rays!

We have released all experimental code from the paper. The contents are listed below; refer to the corresponding subsections as needed.

Use EVA-X as your backbone:

Finetuning:

Interpretability analysis:

Our code is built upon EVA, EVA-02, MGCA, Medical MAE, mmsegmentation, timm, segmentation_models_pytorch, and pytorch_grad_cam. Thanks for these great repos!

If you find our work useful, please consider citing:

```bibtex
@article{eva_x,
  title={EVA-X: A Foundation Model for General Chest X-ray Analysis with Self-supervised Learning},
  author={Yao, Jingfeng and Wang, Xinggang and Song, Yuehao and Zhao, Huangxuan and Ma, Jun and Chen, Yajie and Liu, Wenyu and Wang, Bo},
  journal={arXiv preprint arXiv:2405.05237},
  year={2024}
}
```