Note: For documentation on Pyroscope's golang integration, visit our website for golang push mode or golang pull mode.

In this example we show a simplified, basic use case of Pyroscope. We simulate a "ride share" company which has three endpoints found in main.go:

- /bike: calls the OrderBike(search_radius) function to order a bike

- /car: calls the OrderCar(search_radius) function to order a car

- /scooter: calls the OrderScooter(search_radius) function to order a scooter
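As a rough illustration of how three such endpoints might be wired up, here is a minimal sketch using only the standard library. The names orderHandler, newMux, and call are hypothetical and are not taken from main.go. The handlers only echo the vehicle name instead of calling the real OrderBike, OrderCar, and OrderScooter functions.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// orderHandler returns a handler for one vehicle type. The real example would
// call OrderBike, OrderCar, or OrderScooter here; this stand-in only echoes
// the vehicle name so the routing can be seen without doing any work.
func orderHandler(vehicle string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "%s ordered", vehicle)
	}
}

// newMux registers one route per vehicle, mirroring the three endpoints.
func newMux() *http.ServeMux {
	mux := http.NewServeMux()
	for _, vehicle := range []string{"bike", "car", "scooter"} {
		mux.Handle("/"+vehicle, orderHandler(vehicle))
	}
	return mux
}

// call issues an in-memory GET request and returns the status code and body.
func call(mux *http.ServeMux, path string) (int, string) {
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code, rec.Body.String()
}

func main() {
	mux := newMux()
	for _, path := range []string{"/bike", "/car", "/scooter"} {
		code, body := call(mux, path)
		fmt.Println(path, code, body)
	}
}
```

The in-memory recorder keeps the sketch from opening a network port. A real server would pass the mux to http.ListenAndServe instead.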

We also simulate running 3 distinct servers in 3 different regions (via docker-compose.yml):

- us-east

- eu-north

- ap-south

One of the most useful capabilities of Pyroscope is the ability to tag your data in a way that is meaningful to you. In this case, we have two natural divisions, and so we "tag" our data to represent those:

- region: statically tags the region of the server running the code

- vehicle: dynamically tags the endpoint (similar to how one might tag a controller)

Tagging something static, like the region, can be done in the initialization code in the main() function:

    pyroscope.Start(pyroscope.Config{
        ApplicationName: "ride-sharing-app",
        ServerAddress:   serverAddress,
        Logger:          pyroscope.StandardLogger,
        Tags:            map[string]string{"region": os.Getenv("REGION")},
    })
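One detail worth considering with a static tag read from the environment is what happens when the variable is unset. The sketch below assumes a hypothetical helper, tagsFor, and a hypothetical default value of "unknown". Neither is part of the example project or the Pyroscope API.

```go
package main

import (
	"fmt"
	"os"
)

// tagsFor builds the static tag set for a server. An empty region is replaced
// with "unknown" (a hypothetical default) so that a missing environment
// variable is easy to spot among the tag values.
func tagsFor(region string) map[string]string {
	if region == "" {
		region = "unknown"
	}
	return map[string]string{"region": region}
}

func main() {
	// REGION is assumed to be set per server, as in the snippet above.
	tags := tagsFor(os.Getenv("REGION"))
	fmt.Println("region tag:", tags["region"])
}
```

The resulting map could be passed as the Tags field in the configuration shown above.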

Tagging something more dynamic, like the vehicle tag, can be done inside our utility FindNearestVehicle() function using pyroscope.TagWrapper:

    func FindNearestVehicle(search_radius int64, vehicle string) {
        pyroscope.TagWrapper(context.Background(), pyroscope.Labels("vehicle", vehicle), func(ctx context.Context) {
            // Mock "doing work" to find a vehicle
            var i int64 = 0
            start_time := time.Now().Unix()
            for (time.Now().Unix() - start_time) < search_radius {
                i++
            }
        })
    }

What this block does is:

- Add the label pyroscope.Labels("vehicle", vehicle)

- Execute the body of the FindNearestVehicle() function

- Before the block ends, remove (behind the scenes) the label pyroscope.Labels("vehicle", vehicle) from the application, since that block is complete
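The add, execute, remove sequence can be observed with the standard library's runtime/pprof labels, which follow the same scoped pattern. This is a sketch under the assumption that pyroscope.TagWrapper behaves analogously to pprof.Do. The helper name observe is hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"runtime/pprof"
)

// observe runs a callback under a "vehicle" label and reports what the label
// looks like inside the callback and on the parent context afterwards.
func observe(vehicle string) (inside string, insideOK, afterOK bool) {
	ctx := context.Background()
	pprof.Do(ctx, pprof.Labels("vehicle", vehicle), func(labeled context.Context) {
		// While the callback runs, the label is attached to its context.
		inside, insideOK = pprof.Label(labeled, "vehicle")
	})
	// The parent context is unchanged, and pprof.Do restores the goroutine's
	// previous labels once the callback has returned.
	_, afterOK = pprof.Label(ctx, "vehicle")
	return inside, insideOK, afterOK
}

func main() {
	inside, insideOK, afterOK := observe("car")
	fmt.Printf("inside: %q (set=%v), after: set=%v\n", inside, insideOK, afterOK)
}
```

Because the label is scoped to the callback, samples taken outside it are not attributed to the vehicle.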

To run the example, run the following commands:

    # Pull latest pyroscope image:
    docker pull grafana/pyroscope:latest

    # Run the example project:
    docker-compose up --build

    # Reset the database (if needed):
    # docker-compose down

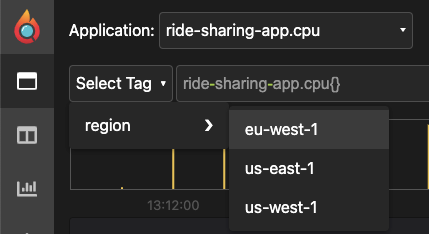

This example runs all the code described above and also sends mock load to the 3 servers and their respective 3 endpoints. If you select our application, ride-sharing-app.cpu, from the dropdown, you should see a flame graph like the one shown here. After giving the flame graph 20-30 seconds to update and clicking the refresh button, we see our 3 functions at the bottom of the flame graph taking CPU resources proportional to the size of their respective search_radius parameters.
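To make the shape of the mock load concrete, the following sketch enumerates every server and endpoint combination a load generator could request. The host names reuse the region names, and the port 5000 and the helper name targets are assumptions for illustration only.

```go
package main

import "fmt"

// targets returns one URL per host and vehicle endpoint combination.
// The port is a placeholder, not a value taken from the example project.
func targets(hosts, vehicles []string) []string {
	urls := make([]string, 0, len(hosts)*len(vehicles))
	for _, h := range hosts {
		for _, v := range vehicles {
			urls = append(urls, fmt.Sprintf("http://%s:5000/%s", h, v))
		}
	}
	return urls
}

func main() {
	hosts := []string{"us-east", "eu-north", "ap-south"}
	vehicles := []string{"bike", "car", "scooter"}
	for _, u := range targets(hosts, vehicles) {
		fmt.Println(u)
	}
}
```

A load generator would then loop over these URLs (or pick from them at random) and issue requests continuously.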

The first step when analyzing a profile output from your application is to take note of the largest node, which is where your application is spending the most resources. In this case, it happens to be the OrderCar function.
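If CPU time is proportional to search_radius, the expected largest node can be estimated up front. The sketch below uses hypothetical search_radius values (1, 2, and 3). The actual values are defined in the example's source and may differ. The helper name largest is also an assumption.

```go
package main

import "fmt"

// largest returns the name with the biggest weight and its share of the
// total, in whole percent (rounded down).
func largest(weights map[string]int) (string, int) {
	total, best := 0, ""
	for name, w := range weights {
		total += w
		if best == "" || w > weights[best] {
			best = name
		}
	}
	if total == 0 {
		return "", 0
	}
	return best, weights[best] * 100 / total
}

func main() {
	// Hypothetical search_radius values, chosen only for illustration.
	radii := map[string]int{"OrderBike": 1, "OrderScooter": 2, "OrderCar": 3}
	name, pct := largest(radii)
	fmt.Printf("largest node: %s, about %d%% of the three functions\n", name, pct)
}
```

With these assumed values, OrderCar accounts for 3 of 6 units, or half of the time spent in the three functions.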

The benefit of using the Pyroscope package is that we can now investigate further as to why the OrderCar() function is problematic. Tagging both region and vehicle allows us to test two good hypotheses:

- Something is wrong with the /car endpoint code

- Something is wrong with one of our regions

To analyze this we can select one or more tags from the "Select Tag" dropdown:

Knowing there is an issue with the OrderCar() function, we first select the tag for that vehicle. Then, after inspecting the region tags one by one, the timeline makes it clear that the issue is in the eu-north region, which alternates between periods of high and low CPU usage.

We can also see that the mutexLock() function is consuming almost 70% of CPU resources during this time period.

Using Pyroscope's "comparison view", we can select two different time ranges from the timeline and compare the resulting flame graphs. The pink section on the left timeline produces the left flame graph, and the blue section on the right timeline produces the right flame graph.

When we select a period of low CPU utilization and a period of high CPU utilization, we can see clearly different behavior in the mutexLock() function: it takes 33% of CPU during low-CPU times and 71% of CPU during high-CPU times.

While the difference in this case is stark enough to see in the comparison view, sometimes the diff between the two flame graphs is better visualized with them overlaid on each other. Without changing any parameters, we can simply select the diff view tab and see the difference represented in a color-coded diff flame graph.
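Conceptually, a diff view boils down to subtracting each function's share in one profile from its share in the other. The following sketch illustrates that idea with the mutexLock() figures quoted above. It is not how Pyroscope computes its diff, and the "everything else" bucket is simply the remainder to 100%.

```go
package main

import "fmt"

// diff returns, per function, the change in CPU share (in percentage points)
// from the baseline profile to the comparison profile.
func diff(baseline, comparison map[string]int) map[string]int {
	out := make(map[string]int)
	for name, share := range baseline {
		out[name] = -share // start from minus the baseline share
	}
	for name, share := range comparison {
		out[name] += share // add the comparison share
	}
	return out
}

func main() {
	// mutexLock shares come from the text; the rest is the remainder.
	low := map[string]int{"mutexLock": 33, "everything else": 67}
	high := map[string]int{"mutexLock": 71, "everything else": 29}
	for name, delta := range diff(low, high) {
		fmt.Printf("%-16s %+d points\n", name, delta)
	}
}
```

A positive value means the function takes a larger share in the comparison range, which a color-coded diff would highlight as a regression.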

We have been beta testing this feature with several different companies. Here are some of the ways we've seen them tag their performance data:

- Tagging Kubernetes attributes

- Tagging controllers

- Tagging regions

- Tagging jobs from a queue

- Tagging commits

- Tagging staging / production environments

- Tagging different parts of their testing suites

- Etc...
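Several of these dimensions can be attached at once as key/value pairs. The sketch below uses the standard library's runtime/pprof labels to show the pattern. The keys env and commit, their values, and the helper names labelsIn and tagged are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"runtime/pprof"
)

// labelsIn collects every profiler label attached to ctx.
func labelsIn(ctx context.Context) map[string]string {
	out := make(map[string]string)
	pprof.ForLabels(ctx, func(key, value string) bool {
		out[key] = value
		return true // keep iterating over the remaining labels
	})
	return out
}

// tagged runs a callback under several labels at once and returns the labels
// the callback saw. pairs must contain an even number of strings.
func tagged(pairs ...string) map[string]string {
	var seen map[string]string
	pprof.Do(context.Background(), pprof.Labels(pairs...), func(ctx context.Context) {
		seen = labelsIn(ctx)
	})
	return seen
}

func main() {
	// Hypothetical tag keys and values, for illustration only.
	fmt.Println(tagged("env", "staging", "commit", "abc123"))
}
```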

We would love for you to try out this example and see how you can adapt it to your golang application. While this example focused on CPU debugging, Golang also provides memory profiling. Continuous profiling has become an increasingly popular tool for monitoring and debugging performance issues (arguably the fourth pillar of observability).
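For a sense of what Go's built-in memory profiling looks like, here is a minimal sketch that captures a heap profile in memory with the standard library. It does not use the Pyroscope integration, and the helper name heapProfileSize is hypothetical.

```go
package main

import (
	"bytes"
	"fmt"
	"runtime"
	"runtime/pprof"
)

// heapProfileSize captures a heap profile into an in-memory buffer and
// returns the size of the encoded profile in bytes.
func heapProfileSize() (int, error) {
	runtime.GC() // refresh allocation statistics before the snapshot
	var buf bytes.Buffer
	if err := pprof.WriteHeapProfile(&buf); err != nil {
		return 0, err
	}
	return buf.Len(), nil
}

func main() {
	n, err := heapProfileSize()
	if err != nil {
		fmt.Println("heap profile failed:", err)
		return
	}
	fmt.Println("heap profile bytes:", n)
}
```

Writing the buffer to a file instead would produce a profile that go tool pprof can open.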

We'd love to continue improving our golang integrations, so please let us know what features you would like to see.